diff --git a/applications/ChatGPT/.gitignore b/applications/ChatGPT/.gitignore

new file mode 100644

index 000000000..40f3f6deb

--- /dev/null

+++ b/applications/ChatGPT/.gitignore

@@ -0,0 +1,146 @@

+# Byte-compiled / optimized / DLL files

+__pycache__/

+*.py[cod]

+*$py.class

+

+# C extensions

+*.so

+

+# Distribution / packaging

+.Python

+build/

+develop-eggs/

+dist/

+downloads/

+eggs/

+.eggs/

+lib/

+lib64/

+parts/

+sdist/

+var/

+wheels/

+pip-wheel-metadata/

+share/python-wheels/

+*.egg-info/

+.installed.cfg

+*.egg

+MANIFEST

+

+# PyInstaller

+# Usually these files are written by a python script from a template

+# before PyInstaller builds the exe, so as to inject date/other infos into it.

+*.manifest

+*.spec

+

+# Installer logs

+pip-log.txt

+pip-delete-this-directory.txt

+

+# Unit test / coverage reports

+htmlcov/

+.tox/

+.nox/

+.coverage

+.coverage.*

+.cache

+nosetests.xml

+coverage.xml

+*.cover

+*.py,cover

+.hypothesis/

+.pytest_cache/

+

+# Translations

+*.mo

+*.pot

+

+# Django stuff:

+*.log

+local_settings.py

+db.sqlite3

+db.sqlite3-journal

+

+# Flask stuff:

+instance/

+.webassets-cache

+

+# Scrapy stuff:

+.scrapy

+

+# Sphinx documentation

+docs/_build/

+docs/.build/

+

+# PyBuilder

+target/

+

+# Jupyter Notebook

+.ipynb_checkpoints

+

+# IPython

+profile_default/

+ipython_config.py

+

+# pyenv

+.python-version

+

+# pipenv

+# According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.

+# However, in case of collaboration, if having platform-specific dependencies or dependencies

+# having no cross-platform support, pipenv may install dependencies that don't work, or not

+# install all needed dependencies.

+#Pipfile.lock

+

+# PEP 582; used by e.g. github.com/David-OConnor/pyflow

+__pypackages__/

+

+# Celery stuff

+celerybeat-schedule

+celerybeat.pid

+

+# SageMath parsed files

+*.sage.py

+

+# Environments

+.env

+.venv

+env/

+venv/

+ENV/

+env.bak/

+venv.bak/

+

+# Spyder project settings

+.spyderproject

+.spyproject

+

+# Rope project settings

+.ropeproject

+

+# mkdocs documentation

+/site

+

+# mypy

+.mypy_cache/

+.dmypy.json

+dmypy.json

+

+# Pyre type checker

+.pyre/

+

+# IDE

+.idea/

+.vscode/

+

+# macos

+*.DS_Store

+#data/

+

+docs/.build

+

+# pytorch checkpoint

+*.pt

+

+# ignore version.py generated by setup.py

+colossalai/version.py

diff --git a/applications/ChatGPT/LICENSE b/applications/ChatGPT/LICENSE

new file mode 100644

index 000000000..0528c89ea

--- /dev/null

+++ b/applications/ChatGPT/LICENSE

@@ -0,0 +1,202 @@

+Copyright 2021- HPC-AI Technology Inc. All rights reserved.

+ Apache License

+ Version 2.0, January 2004

+ http://www.apache.org/licenses/

+

+ TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

+

+ 1. Definitions.

+

+ "License" shall mean the terms and conditions for use, reproduction,

+ and distribution as defined by Sections 1 through 9 of this document.

+

+ "Licensor" shall mean the copyright owner or entity authorized by

+ the copyright owner that is granting the License.

+

+ "Legal Entity" shall mean the union of the acting entity and all

+ other entities that control, are controlled by, or are under common

+ control with that entity. For the purposes of this definition,

+ "control" means (i) the power, direct or indirect, to cause the

+ direction or management of such entity, whether by contract or

+ otherwise, or (ii) ownership of fifty percent (50%) or more of the

+ outstanding shares, or (iii) beneficial ownership of such entity.

+

+ "You" (or "Your") shall mean an individual or Legal Entity

+ exercising permissions granted by this License.

+

+ "Source" form shall mean the preferred form for making modifications,

+ including but not limited to software source code, documentation

+ source, and configuration files.

+

+ "Object" form shall mean any form resulting from mechanical

+ transformation or translation of a Source form, including but

+ not limited to compiled object code, generated documentation,

+ and conversions to other media types.

+

+ "Work" shall mean the work of authorship, whether in Source or

+ Object form, made available under the License, as indicated by a

+ copyright notice that is included in or attached to the work

+ (an example is provided in the Appendix below).

+

+ "Derivative Works" shall mean any work, whether in Source or Object

+ form, that is based on (or derived from) the Work and for which the

+ editorial revisions, annotations, elaborations, or other modifications

+ represent, as a whole, an original work of authorship. For the purposes

+ of this License, Derivative Works shall not include works that remain

+ separable from, or merely link (or bind by name) to the interfaces of,

+ the Work and Derivative Works thereof.

+

+ "Contribution" shall mean any work of authorship, including

+ the original version of the Work and any modifications or additions

+ to that Work or Derivative Works thereof, that is intentionally

+ submitted to Licensor for inclusion in the Work by the copyright owner

+ or by an individual or Legal Entity authorized to submit on behalf of

+ the copyright owner. For the purposes of this definition, "submitted"

+ means any form of electronic, verbal, or written communication sent

+ to the Licensor or its representatives, including but not limited to

+ communication on electronic mailing lists, source code control systems,

+ and issue tracking systems that are managed by, or on behalf of, the

+ Licensor for the purpose of discussing and improving the Work, but

+ excluding communication that is conspicuously marked or otherwise

+ designated in writing by the copyright owner as "Not a Contribution."

+

+ "Contributor" shall mean Licensor and any individual or Legal Entity

+ on behalf of whom a Contribution has been received by Licensor and

+ subsequently incorporated within the Work.

+

+ 2. Grant of Copyright License. Subject to the terms and conditions of

+ this License, each Contributor hereby grants to You a perpetual,

+ worldwide, non-exclusive, no-charge, royalty-free, irrevocable

+ copyright license to reproduce, prepare Derivative Works of,

+ publicly display, publicly perform, sublicense, and distribute the

+ Work and such Derivative Works in Source or Object form.

+

+ 3. Grant of Patent License. Subject to the terms and conditions of

+ this License, each Contributor hereby grants to You a perpetual,

+ worldwide, non-exclusive, no-charge, royalty-free, irrevocable

+ (except as stated in this section) patent license to make, have made,

+ use, offer to sell, sell, import, and otherwise transfer the Work,

+ where such license applies only to those patent claims licensable

+ by such Contributor that are necessarily infringed by their

+ Contribution(s) alone or by combination of their Contribution(s)

+ with the Work to which such Contribution(s) was submitted. If You

+ institute patent litigation against any entity (including a

+ cross-claim or counterclaim in a lawsuit) alleging that the Work

+ or a Contribution incorporated within the Work constitutes direct

+ or contributory patent infringement, then any patent licenses

+ granted to You under this License for that Work shall terminate

+ as of the date such litigation is filed.

+

+ 4. Redistribution. You may reproduce and distribute copies of the

+ Work or Derivative Works thereof in any medium, with or without

+ modifications, and in Source or Object form, provided that You

+ meet the following conditions:

+

+ (a) You must give any other recipients of the Work or

+ Derivative Works a copy of this License; and

+

+ (b) You must cause any modified files to carry prominent notices

+ stating that You changed the files; and

+

+ (c) You must retain, in the Source form of any Derivative Works

+ that You distribute, all copyright, patent, trademark, and

+ attribution notices from the Source form of the Work,

+ excluding those notices that do not pertain to any part of

+ the Derivative Works; and

+

+ (d) If the Work includes a "NOTICE" text file as part of its

+ distribution, then any Derivative Works that You distribute must

+ include a readable copy of the attribution notices contained

+ within such NOTICE file, excluding those notices that do not

+ pertain to any part of the Derivative Works, in at least one

+ of the following places: within a NOTICE text file distributed

+ as part of the Derivative Works; within the Source form or

+ documentation, if provided along with the Derivative Works; or,

+ within a display generated by the Derivative Works, if and

+ wherever such third-party notices normally appear. The contents

+ of the NOTICE file are for informational purposes only and

+ do not modify the License. You may add Your own attribution

+ notices within Derivative Works that You distribute, alongside

+ or as an addendum to the NOTICE text from the Work, provided

+ that such additional attribution notices cannot be construed

+ as modifying the License.

+

+ You may add Your own copyright statement to Your modifications and

+ may provide additional or different license terms and conditions

+ for use, reproduction, or distribution of Your modifications, or

+ for any such Derivative Works as a whole, provided Your use,

+ reproduction, and distribution of the Work otherwise complies with

+ the conditions stated in this License.

+

+ 5. Submission of Contributions. Unless You explicitly state otherwise,

+ any Contribution intentionally submitted for inclusion in the Work

+ by You to the Licensor shall be under the terms and conditions of

+ this License, without any additional terms or conditions.

+ Notwithstanding the above, nothing herein shall supersede or modify

+ the terms of any separate license agreement you may have executed

+ with Licensor regarding such Contributions.

+

+ 6. Trademarks. This License does not grant permission to use the trade

+ names, trademarks, service marks, or product names of the Licensor,

+ except as required for reasonable and customary use in describing the

+ origin of the Work and reproducing the content of the NOTICE file.

+

+ 7. Disclaimer of Warranty. Unless required by applicable law or

+ agreed to in writing, Licensor provides the Work (and each

+ Contributor provides its Contributions) on an "AS IS" BASIS,

+ WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

+ implied, including, without limitation, any warranties or conditions

+ of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

+ PARTICULAR PURPOSE. You are solely responsible for determining the

+ appropriateness of using or redistributing the Work and assume any

+ risks associated with Your exercise of permissions under this License.

+

+ 8. Limitation of Liability. In no event and under no legal theory,

+ whether in tort (including negligence), contract, or otherwise,

+ unless required by applicable law (such as deliberate and grossly

+ negligent acts) or agreed to in writing, shall any Contributor be

+ liable to You for damages, including any direct, indirect, special,

+ incidental, or consequential damages of any character arising as a

+ result of this License or out of the use or inability to use the

+ Work (including but not limited to damages for loss of goodwill,

+ work stoppage, computer failure or malfunction, or any and all

+ other commercial damages or losses), even if such Contributor

+ has been advised of the possibility of such damages.

+

+ 9. Accepting Warranty or Additional Liability. While redistributing

+ the Work or Derivative Works thereof, You may choose to offer,

+ and charge a fee for, acceptance of support, warranty, indemnity,

+ or other liability obligations and/or rights consistent with this

+ License. However, in accepting such obligations, You may act only

+ on Your own behalf and on Your sole responsibility, not on behalf

+ of any other Contributor, and only if You agree to indemnify,

+ defend, and hold each Contributor harmless for any liability

+ incurred by, or claims asserted against, such Contributor by reason

+ of your accepting any such warranty or additional liability.

+

+ END OF TERMS AND CONDITIONS

+

+ APPENDIX: How to apply the Apache License to your work.

+

+ To apply the Apache License to your work, attach the following

+ boilerplate notice, with the fields enclosed by brackets "[]"

+ replaced with your own identifying information. (Don't include

+ the brackets!) The text should be enclosed in the appropriate

+ comment syntax for the file format. We also recommend that a

+ file or class name and description of purpose be included on the

+ same "printed page" as the copyright notice for easier

+ identification within third-party archives.

+

+ Copyright 2021- HPC-AI Technology Inc.

+

+ Licensed under the Apache License, Version 2.0 (the "License");

+ you may not use this file except in compliance with the License.

+ You may obtain a copy of the License at

+

+ http://www.apache.org/licenses/LICENSE-2.0

+

+ Unless required by applicable law or agreed to in writing, software

+ distributed under the License is distributed on an "AS IS" BASIS,

+ WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ See the License for the specific language governing permissions and

+ limitations under the License.

diff --git a/applications/ChatGPT/README.md b/applications/ChatGPT/README.md

new file mode 100644

index 000000000..dce59ad4b

--- /dev/null

+++ b/applications/ChatGPT/README.md

@@ -0,0 +1,80 @@

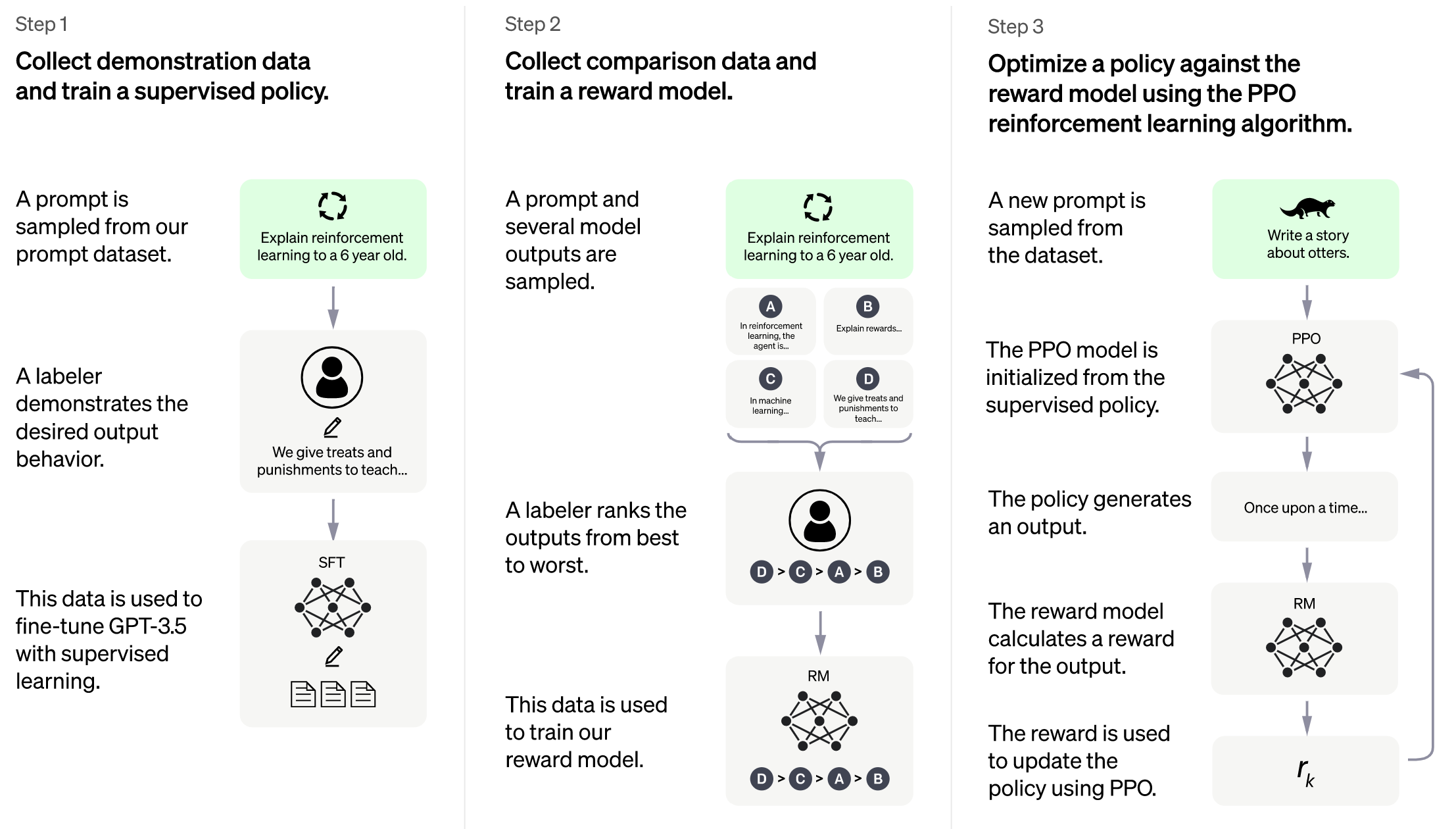

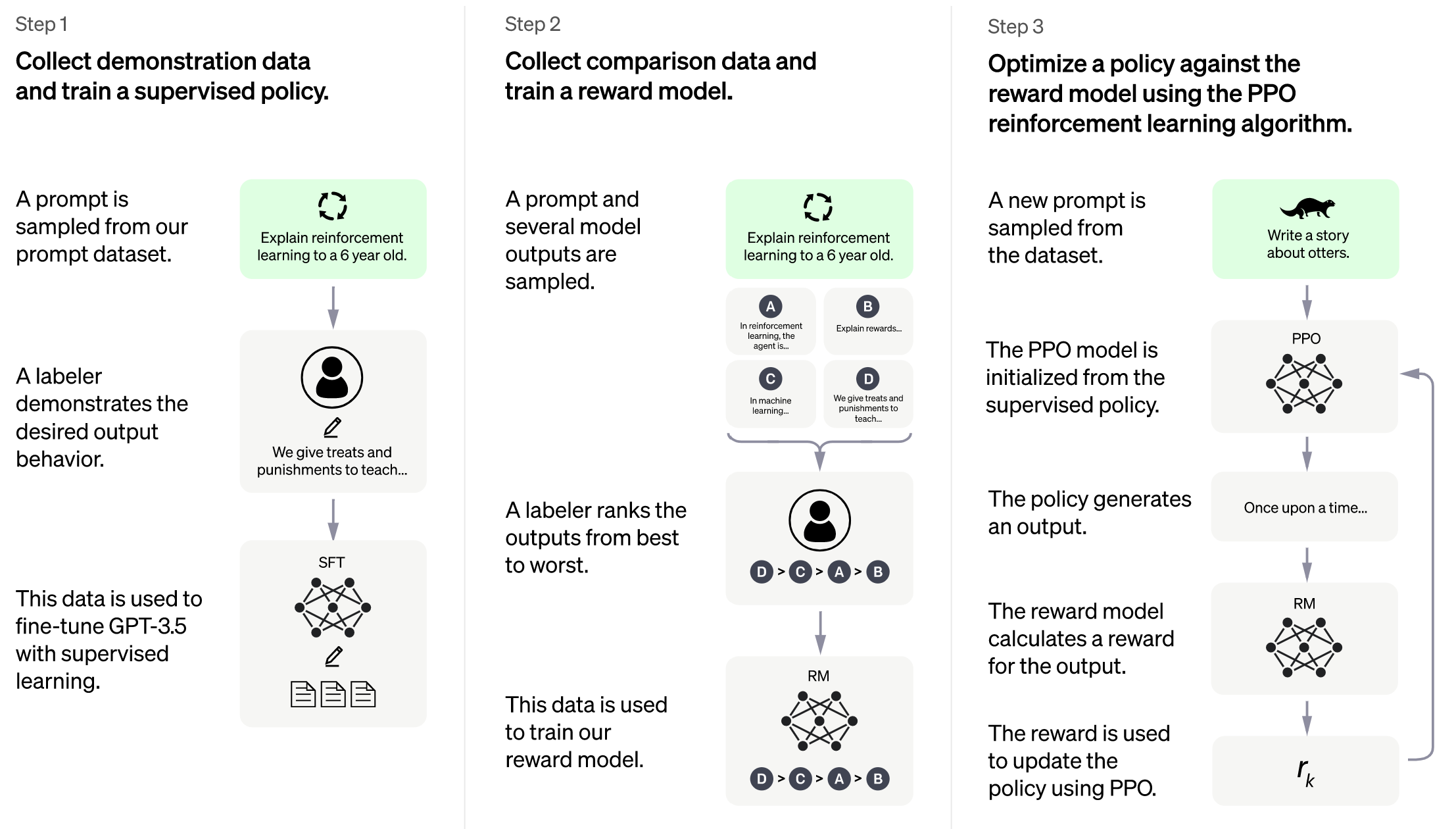

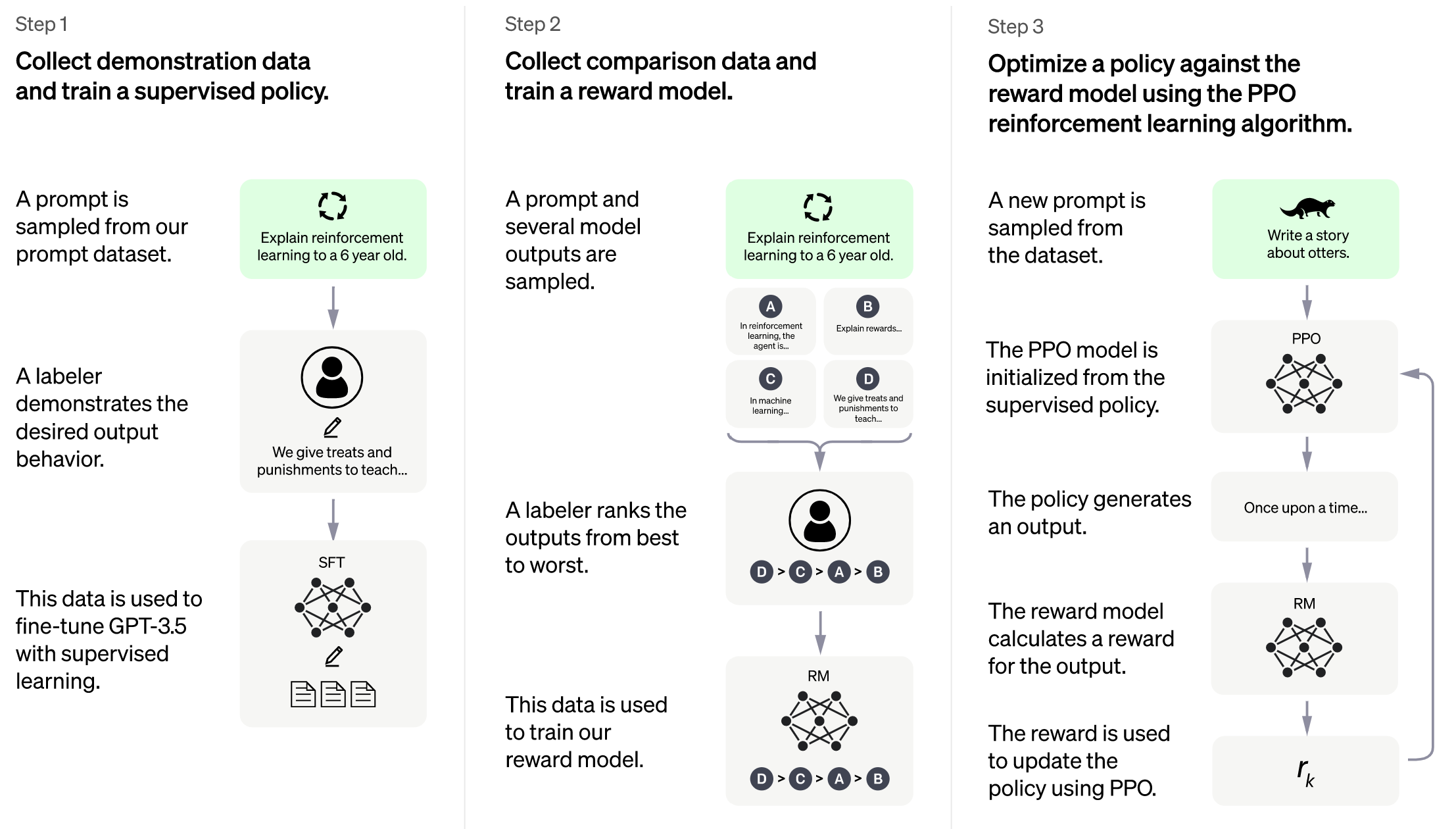

+# RLHF - ColossalAI

+

+Implementation of RLHF (Reinforcement Learning with Human Feedback) powered by ColossalAI. It supports distributed training and offloading, which can fit extremly large models.

+

+

+ +

+

+

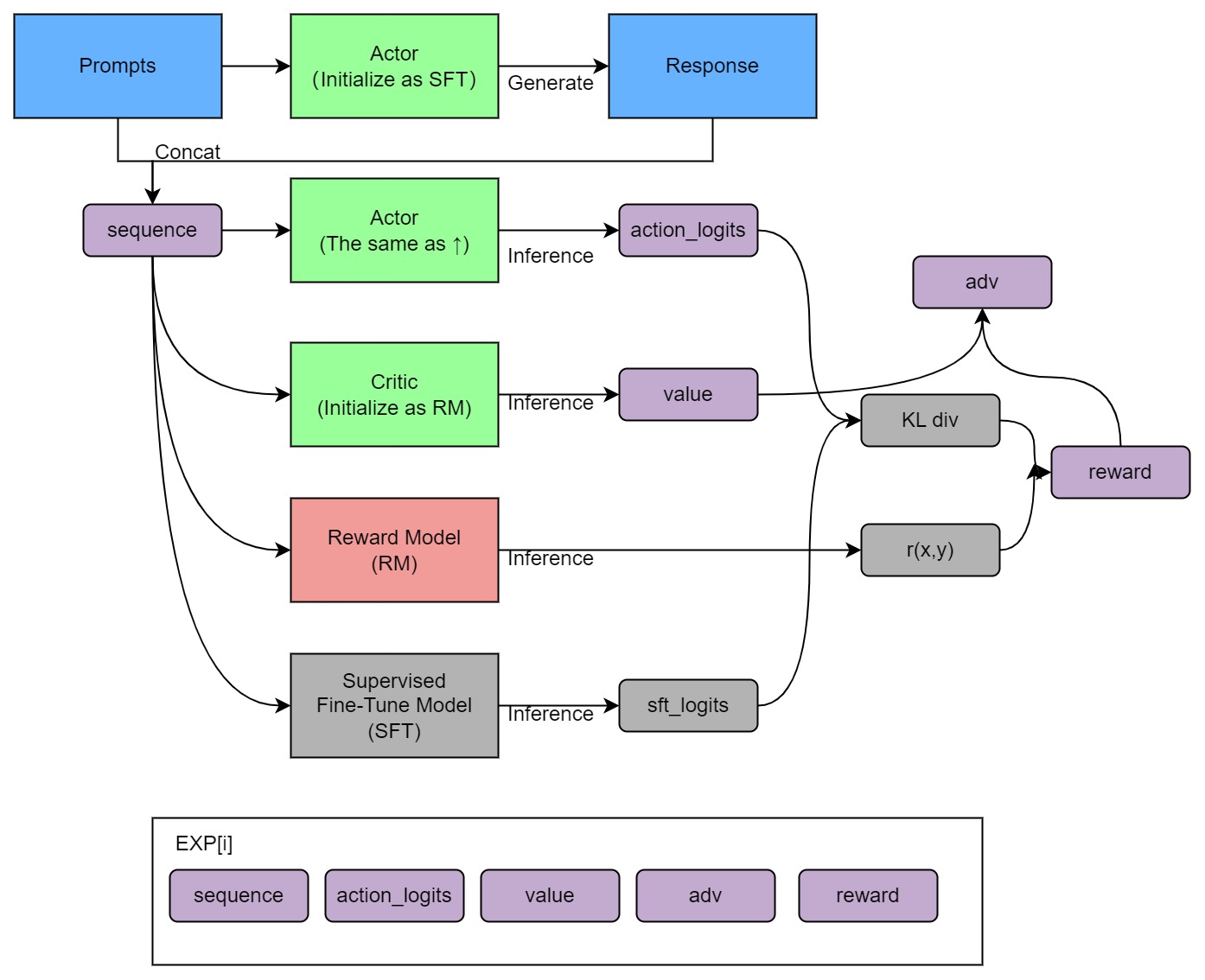

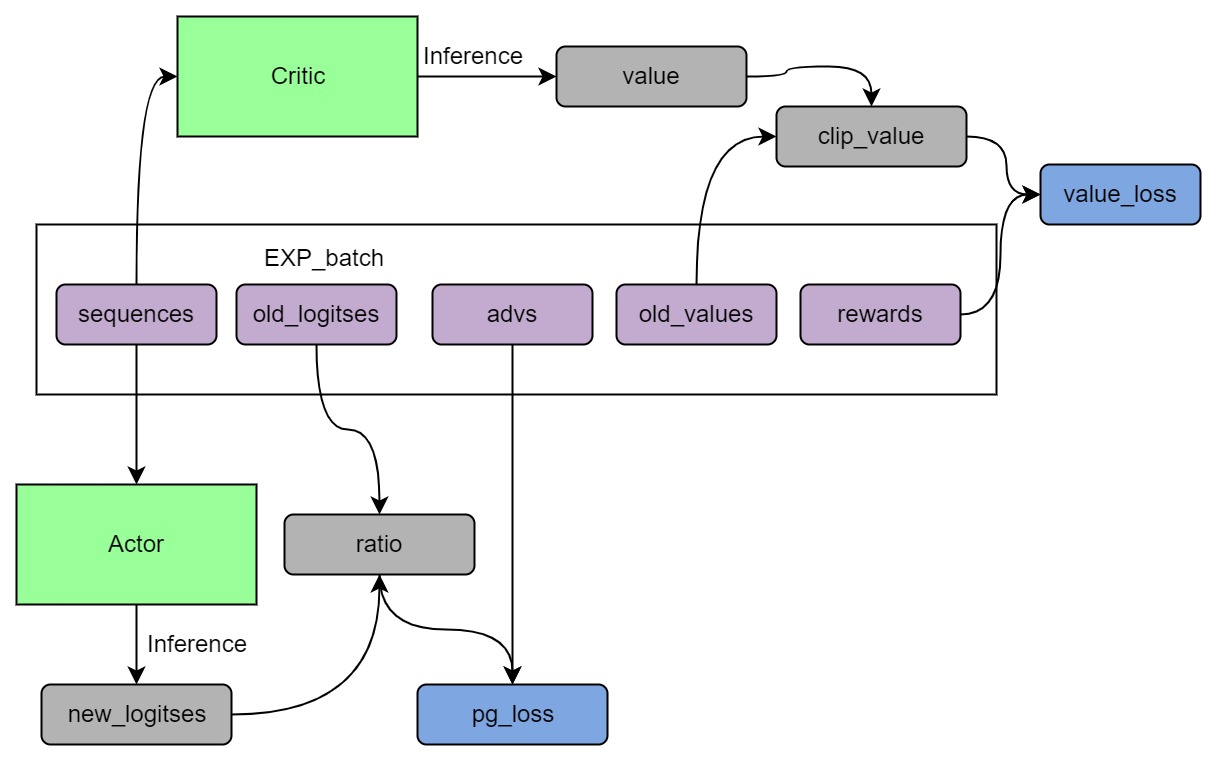

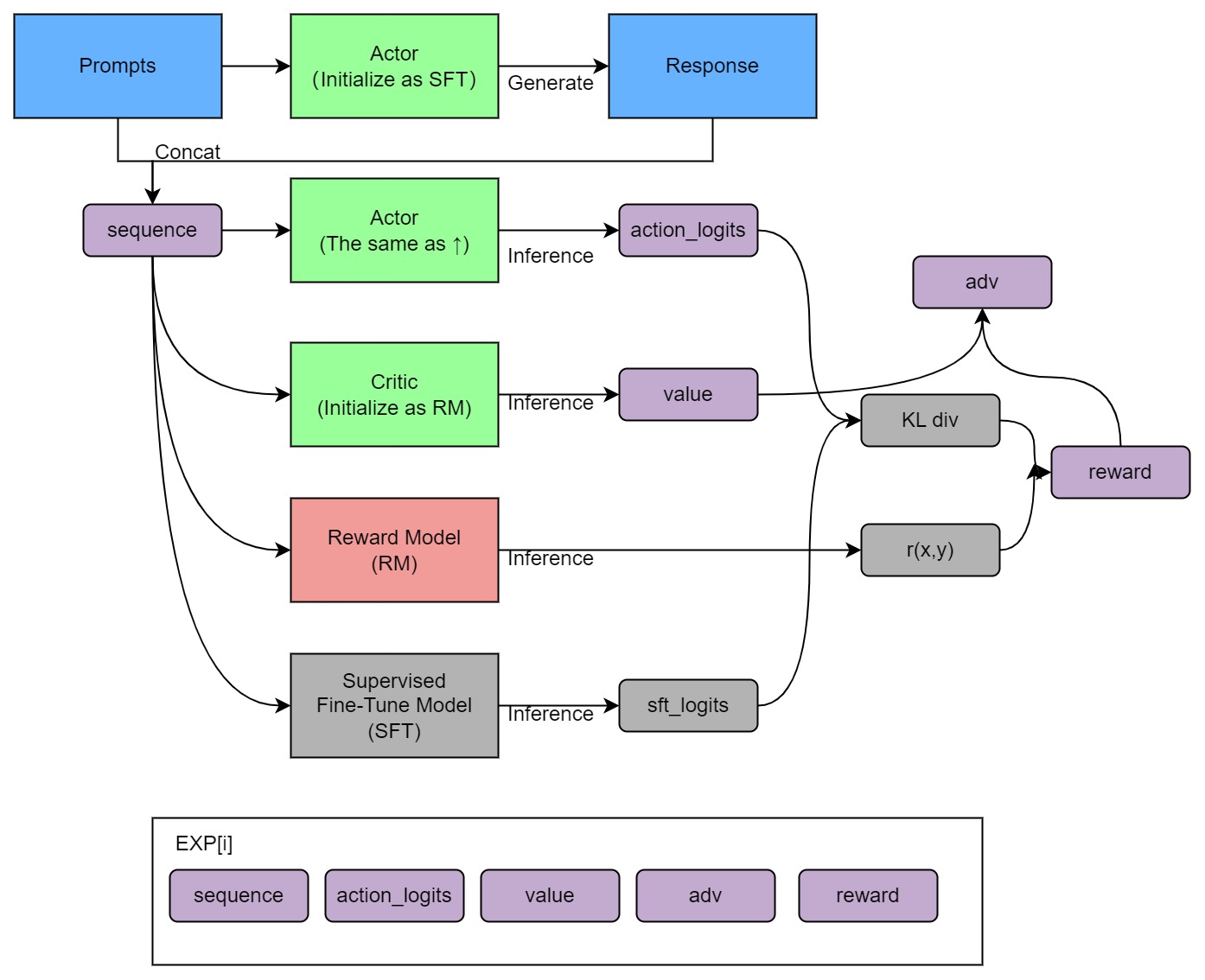

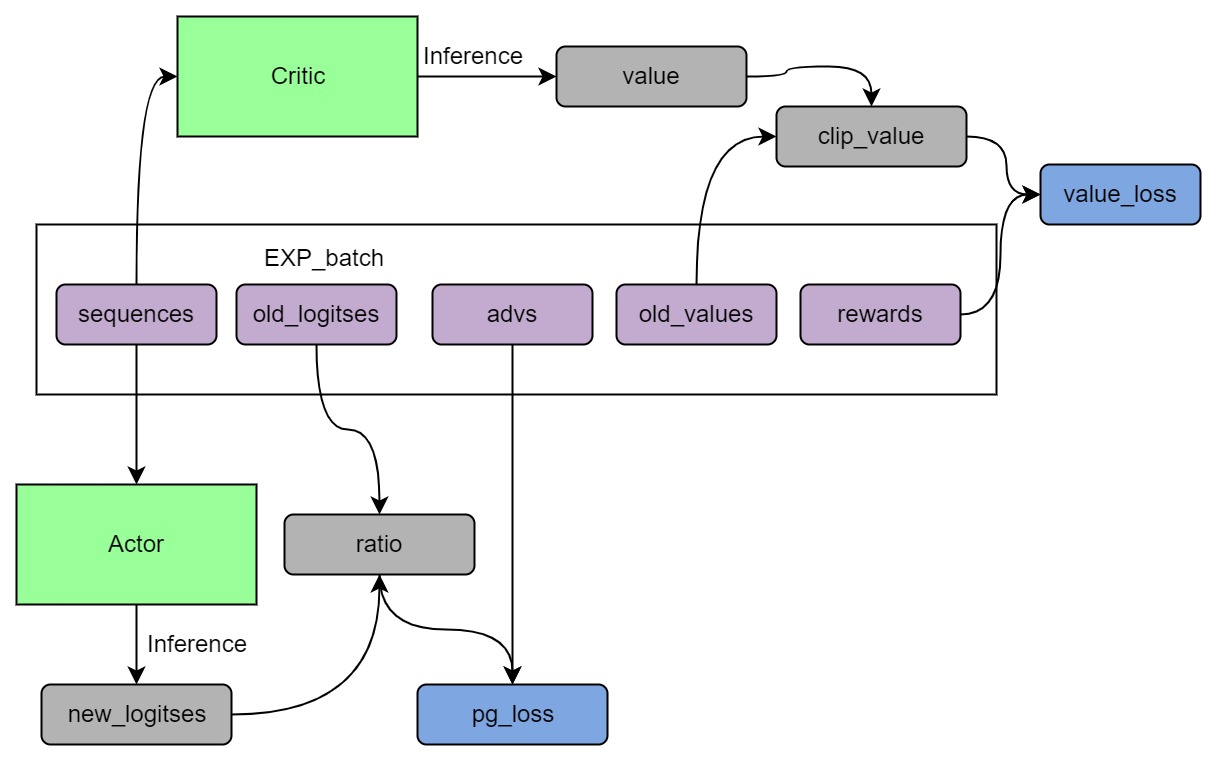

+## Training process (step 3)

+

+ +

+

+

+ +

+

+

+

+## Install

+```shell

+pip install .

+```

+

+

+## Usage

+

+The main entrypoint is `Trainer`. We only support PPO trainer now. We support many training strategies:

+

+- NaiveStrategy: simplest strategy. Train on single GPU.

+- DDPStrategy: use `torch.nn.parallel.DistributedDataParallel`. Train on multi GPUs.

+- ColossalAIStrategy: use Gemini and Zero of ColossalAI. It eliminates model duplication on each GPU and supports offload. It's very useful when training large models on multi GPUs.

+

+Simplest usage:

+

+```python

+from chatgpt.trainer import PPOTrainer

+from chatgpt.trainer.strategies import ColossalAIStrategy

+

+strategy = ColossalAIStrategy()

+

+with strategy.model_init_context():

+ # init your model here

+ actor = Actor()

+ critic = Critic()

+

+trainer = PPOTrainer(actor = actor, critic= critic, strategy, ...)

+

+trainer.fit(dataset, ...)

+```

+

+For more details, see `examples/`.

+

+We also support training reward model with true-world data. See `examples/train_reward_model.py`.

+

+## Todo

+

+- [x] implement PPO training

+- [x] implement training reward model

+- [x] support LoRA

+- [ ] implement PPO-ptx fine-tuning

+- [ ] integrate with Ray

+- [ ] support more RL paradigms, like Implicit Language Q-Learning (ILQL)

+

+## Citations

+

+```bibtex

+@article{Hu2021LoRALA,

+ title = {LoRA: Low-Rank Adaptation of Large Language Models},

+ author = {Edward J. Hu and Yelong Shen and Phillip Wallis and Zeyuan Allen-Zhu and Yuanzhi Li and Shean Wang and Weizhu Chen},

+ journal = {ArXiv},

+ year = {2021},

+ volume = {abs/2106.09685}

+}

+

+@article{ouyang2022training,

+ title={Training language models to follow instructions with human feedback},

+ author={Ouyang, Long and Wu, Jeff and Jiang, Xu and Almeida, Diogo and Wainwright, Carroll L and Mishkin, Pamela and Zhang, Chong and Agarwal, Sandhini and Slama, Katarina and Ray, Alex and others},

+ journal={arXiv preprint arXiv:2203.02155},

+ year={2022}

+}

+```

diff --git a/applications/ChatGPT/benchmarks/README.md b/applications/ChatGPT/benchmarks/README.md

new file mode 100644

index 000000000..f7212fc89

--- /dev/null

+++ b/applications/ChatGPT/benchmarks/README.md

@@ -0,0 +1,94 @@

+# Benchmarks

+

+## Benchmark GPT on dummy prompt data

+

+We provide various GPT models (string in parentheses is the corresponding model name used in this script):

+

+- GPT2-S (s)

+- GPT2-M (m)

+- GPT2-L (l)

+- GPT2-XL (xl)

+- GPT2-4B (4b)

+- GPT2-6B (6b)

+- GPT2-8B (8b)

+- GPT2-10B (10b)

+- GPT2-12B (12b)

+- GPT2-15B (15b)

+- GPT2-18B (18b)

+- GPT2-20B (20b)

+- GPT2-24B (24b)

+- GPT2-28B (28b)

+- GPT2-32B (32b)

+- GPT2-36B (36b)

+- GPT2-40B (40b)

+- GPT3 (175b)

+

+We also provide various training strategies:

+

+- ddp: torch DDP

+- colossalai_gemini: ColossalAI GeminiDDP with `placement_policy="cuda"`, like zero3

+- colossalai_gemini_cpu: ColossalAI GeminiDDP with `placement_policy="cpu"`, like zero3-offload

+- colossalai_zero2: ColossalAI zero2

+- colossalai_zero2_cpu: ColossalAI zero2-offload

+- colossalai_zero1: ColossalAI zero1

+- colossalai_zero1_cpu: ColossalAI zero1-offload

+

+We only support `torchrun` to launch now. E.g.

+

+```shell

+# run GPT2-S on single-node single-GPU with min batch size

+torchrun --standalone --nproc_pero_node 1 benchmark_gpt_dummy.py --model s --strategy ddp --experience_batch_size 1 --train_batch_size 1

+# run GPT2-XL on single-node 4-GPU

+torchrun --standalone --nproc_per_node 4 benchmark_gpt_dummy.py --model xl --strategy colossalai_zero2

+# run GPT3 on 8-node 8-GPU

+torchrun --nnodes 8 --nproc_per_node 8 \

+ --rdzv_id=$JOB_ID --rdzv_backend=c10d --rdzv_endpoint=$HOST_NODE_ADDR \

+ benchmark_gpt_dummy.py --model 175b --strategy colossalai_gemini

+```

+

+> ⚠ Batch sizes in CLI args and outputed throughput/TFLOPS are all values of per GPU.

+

+In this benchmark, we assume the model architectures/sizes of actor and critic are the same for simplicity. But in practice, to reduce training cost, we may use a smaller critic.

+

+We also provide a simple shell script to run a set of benchmarks. But it only supports benchmark on single node. However, it's easy to run on multi-nodes by modifying launch command in this script.

+

+Usage:

+

+```shell

+# run for GPUS=(1 2 4 8) x strategy=("ddp" "colossalai_zero2" "colossalai_gemini" "colossalai_zero2_cpu" "colossalai_gemini_cpu") x model=("s" "m" "l" "xl" "2b" "4b" "6b" "8b" "10b") x batch_size=(1 2 4 8 16 32 64 128 256)

+./benchmark_gpt_dummy.sh

+# run for GPUS=2 x strategy=("ddp" "colossalai_zero2" "colossalai_gemini" "colossalai_zero2_cpu" "colossalai_gemini_cpu") x model=("s" "m" "l" "xl" "2b" "4b" "6b" "8b" "10b") x batch_size=(1 2 4 8 16 32 64 128 256)

+./benchmark_gpt_dummy.sh 2

+# run for GPUS=2 x strategy=ddp x model=("s" "m" "l" "xl" "2b" "4b" "6b" "8b" "10b") x batch_size=(1 2 4 8 16 32 64 128 256)

+./benchmark_gpt_dummy.sh 2 ddp

+# run for GPUS=2 x strategy=ddp x model=l x batch_size=(1 2 4 8 16 32 64 128 256)

+./benchmark_gpt_dummy.sh 2 ddp l

+```

+

+## Benchmark OPT with LoRA on dummy prompt data

+

+We provide various OPT models (string in parentheses is the corresponding model name used in this script):

+

+- OPT-125M (125m)

+- OPT-350M (350m)

+- OPT-700M (700m)

+- OPT-1.3B (1.3b)

+- OPT-2.7B (2.7b)

+- OPT-3.5B (3.5b)

+- OPT-5.5B (5.5b)

+- OPT-6.7B (6.7b)

+- OPT-10B (10b)

+- OPT-13B (13b)

+

+We only support `torchrun` to launch now. E.g.

+

+```shell

+# run OPT-125M with no lora (lora_rank=0) on single-node single-GPU with min batch size

+torchrun --standalone --nproc_pero_node 1 benchmark_opt_lora_dummy.py --model 125m --strategy ddp --experience_batch_size 1 --train_batch_size 1 --lora_rank 0

+# run OPT-350M with lora_rank=4 on single-node 4-GPU

+torchrun --standalone --nproc_per_node 4 benchmark_opt_lora_dummy.py --model 350m --strategy colossalai_zero2 --lora_rank 4

+```

+

+> ⚠ Batch sizes in CLI args and outputed throughput/TFLOPS are all values of per GPU.

+

+In this benchmark, we assume the model architectures/sizes of actor and critic are the same for simplicity. But in practice, to reduce training cost, we may use a smaller critic.

diff --git a/applications/ChatGPT/benchmarks/benchmark_gpt_dummy.py b/applications/ChatGPT/benchmarks/benchmark_gpt_dummy.py

new file mode 100644

index 000000000..8474f3ba7

--- /dev/null

+++ b/applications/ChatGPT/benchmarks/benchmark_gpt_dummy.py

@@ -0,0 +1,183 @@

+import argparse

+from copy import deepcopy

+

+import torch

+import torch.distributed as dist

+import torch.nn as nn

+from chatgpt.nn import GPTActor, GPTCritic, RewardModel

+from chatgpt.nn.generation_utils import gpt_prepare_inputs_fn, update_model_kwargs_fn

+from chatgpt.trainer import PPOTrainer

+from chatgpt.trainer.callbacks import PerformanceEvaluator

+from chatgpt.trainer.strategies import ColossalAIStrategy, DDPStrategy, Strategy

+from torch.optim import Adam

+from transformers.models.gpt2.configuration_gpt2 import GPT2Config

+from transformers.models.gpt2.tokenization_gpt2 import GPT2Tokenizer

+

+from colossalai.nn.optimizer import HybridAdam

+

+

+def get_model_numel(model: nn.Module, strategy: Strategy) -> int:

+ numel = sum(p.numel() for p in model.parameters())

+ if isinstance(strategy, ColossalAIStrategy) and strategy.stage == 3 and strategy.shard_init:

+ numel *= dist.get_world_size()

+ return numel

+

+

+def preprocess_batch(samples) -> dict:

+ input_ids = torch.stack(samples)

+ attention_mask = torch.ones_like(input_ids, dtype=torch.long)

+ return {'input_ids': input_ids, 'attention_mask': attention_mask}

+

+

+def print_rank_0(*args, **kwargs) -> None:

+ if dist.get_rank() == 0:

+ print(*args, **kwargs)

+

+

+def print_model_numel(model_dict: dict) -> None:

+ B = 1024**3

+ M = 1024**2

+ K = 1024

+ outputs = ''

+ for name, numel in model_dict.items():

+ outputs += f'{name}: '

+ if numel >= B:

+ outputs += f'{numel / B:.2f} B\n'

+ elif numel >= M:

+ outputs += f'{numel / M:.2f} M\n'

+ elif numel >= K:

+ outputs += f'{numel / K:.2f} K\n'

+ else:

+ outputs += f'{numel}\n'

+ print_rank_0(outputs)

+

+

+def get_gpt_config(model_name: str) -> GPT2Config:

+ model_map = {

+ 's': GPT2Config(),

+ 'm': GPT2Config(n_embd=1024, n_layer=24, n_head=16),

+ 'l': GPT2Config(n_embd=1280, n_layer=36, n_head=20),

+ 'xl': GPT2Config(n_embd=1600, n_layer=48, n_head=25),

+ '2b': GPT2Config(n_embd=2048, n_layer=40, n_head=16),

+ '4b': GPT2Config(n_embd=2304, n_layer=64, n_head=16),

+ '6b': GPT2Config(n_embd=4096, n_layer=30, n_head=16),

+ '8b': GPT2Config(n_embd=4096, n_layer=40, n_head=16),

+ '10b': GPT2Config(n_embd=4096, n_layer=50, n_head=16),

+ '12b': GPT2Config(n_embd=4096, n_layer=60, n_head=16),

+ '15b': GPT2Config(n_embd=4096, n_layer=78, n_head=16),

+ '18b': GPT2Config(n_embd=4096, n_layer=90, n_head=16),

+ '20b': GPT2Config(n_embd=8192, n_layer=25, n_head=16),

+ '24b': GPT2Config(n_embd=8192, n_layer=30, n_head=16),

+ '28b': GPT2Config(n_embd=8192, n_layer=35, n_head=16),

+ '32b': GPT2Config(n_embd=8192, n_layer=40, n_head=16),

+ '36b': GPT2Config(n_embd=8192, n_layer=45, n_head=16),

+ '40b': GPT2Config(n_embd=8192, n_layer=50, n_head=16),

+ '175b': GPT2Config(n_positions=2048, n_embd=12288, n_layer=96, n_head=96),

+ }

+ try:

+ return model_map[model_name]

+ except KeyError:

+ raise ValueError(f'Unknown model "{model_name}"')

+

+

+def main(args):

+ if args.strategy == 'ddp':

+ strategy = DDPStrategy()

+ elif args.strategy == 'colossalai_gemini':

+ strategy = ColossalAIStrategy(stage=3, placement_policy='cuda', initial_scale=2**5)

+ elif args.strategy == 'colossalai_gemini_cpu':

+ strategy = ColossalAIStrategy(stage=3, placement_policy='cpu', initial_scale=2**5)

+ elif args.strategy == 'colossalai_zero2':

+ strategy = ColossalAIStrategy(stage=2, placement_policy='cuda')

+ elif args.strategy == 'colossalai_zero2_cpu':

+ strategy = ColossalAIStrategy(stage=2, placement_policy='cpu')

+ elif args.strategy == 'colossalai_zero1':

+ strategy = ColossalAIStrategy(stage=1, placement_policy='cuda')

+ elif args.strategy == 'colossalai_zero1_cpu':

+ strategy = ColossalAIStrategy(stage=1, placement_policy='cpu')

+ else:

+ raise ValueError(f'Unsupported strategy "{args.strategy}"')

+

+ model_config = get_gpt_config(args.model)

+

+ with strategy.model_init_context():

+ actor = GPTActor(config=model_config).cuda()

+ critic = GPTCritic(config=model_config).cuda()

+

+ initial_model = deepcopy(actor).cuda()

+ reward_model = RewardModel(deepcopy(critic.model), deepcopy(critic.value_head)).cuda()

+

+ actor_numel = get_model_numel(actor, strategy)

+ critic_numel = get_model_numel(critic, strategy)

+ initial_model_numel = get_model_numel(initial_model, strategy)

+ reward_model_numel = get_model_numel(reward_model, strategy)

+ print_model_numel({

+ 'Actor': actor_numel,

+ 'Critic': critic_numel,

+ 'Initial model': initial_model_numel,

+ 'Reward model': reward_model_numel

+ })

+ performance_evaluator = PerformanceEvaluator(actor_numel,

+ critic_numel,

+ initial_model_numel,

+ reward_model_numel,

+ enable_grad_checkpoint=False,

+ ignore_episodes=1)

+

+ if args.strategy.startswith('colossalai'):

+ actor_optim = HybridAdam(actor.parameters(), lr=5e-6)

+ critic_optim = HybridAdam(critic.parameters(), lr=5e-6)

+ else:

+ actor_optim = Adam(actor.parameters(), lr=5e-6)

+ critic_optim = Adam(critic.parameters(), lr=5e-6)

+

+ tokenizer = GPT2Tokenizer.from_pretrained('gpt2')

+ tokenizer.pad_token = tokenizer.eos_token

+

+ trainer = PPOTrainer(strategy,

+ actor,

+ critic,

+ reward_model,

+ initial_model,

+ actor_optim,

+ critic_optim,

+ max_epochs=args.max_epochs,

+ train_batch_size=args.train_batch_size,

+ experience_batch_size=args.experience_batch_size,

+ tokenizer=preprocess_batch,

+ max_length=512,

+ do_sample=True,

+ temperature=1.0,

+ top_k=50,

+ pad_token_id=tokenizer.pad_token_id,

+ eos_token_id=tokenizer.eos_token_id,

+ prepare_inputs_fn=gpt_prepare_inputs_fn,

+ update_model_kwargs_fn=update_model_kwargs_fn,

+ callbacks=[performance_evaluator])

+

+ random_prompts = torch.randint(tokenizer.vocab_size, (1000, 400), device=torch.cuda.current_device())

+ trainer.fit(random_prompts,

+ num_episodes=args.num_episodes,

+ max_timesteps=args.max_timesteps,

+ update_timesteps=args.update_timesteps)

+

+ print_rank_0(f'Peak CUDA mem: {torch.cuda.max_memory_allocated()/1024**3:.2f} GB')

+

+

+if __name__ == '__main__':

+ parser = argparse.ArgumentParser()

+ parser.add_argument('--model', default='s')

+ parser.add_argument('--strategy',

+ choices=[

+ 'ddp', 'colossalai_gemini', 'colossalai_gemini_cpu', 'colossalai_zero2',

+ 'colossalai_zero2_cpu', 'colossalai_zero1', 'colossalai_zero1_cpu'

+ ],

+ default='ddp')

+ parser.add_argument('--num_episodes', type=int, default=3)

+ parser.add_argument('--max_timesteps', type=int, default=8)

+ parser.add_argument('--update_timesteps', type=int, default=8)

+ parser.add_argument('--max_epochs', type=int, default=3)

+ parser.add_argument('--train_batch_size', type=int, default=8)

+ parser.add_argument('--experience_batch_size', type=int, default=8)

+ args = parser.parse_args()

+ main(args)

diff --git a/applications/ChatGPT/benchmarks/benchmark_gpt_dummy.sh b/applications/ChatGPT/benchmarks/benchmark_gpt_dummy.sh

new file mode 100755

index 000000000..d70f88725

--- /dev/null

+++ b/applications/ChatGPT/benchmarks/benchmark_gpt_dummy.sh

@@ -0,0 +1,45 @@

+#!/usr/bin/env bash

+# Usage: $0

+set -xu

+

+BASE=$(realpath $(dirname $0))

+

+

+PY_SCRIPT=${BASE}/benchmark_gpt_dummy.py

+export OMP_NUM_THREADS=8

+

+function tune_batch_size() {

+ # we found when experience batch size is equal to train batch size

+ # peak CUDA memory usage of making experience phase is less than or equal to that of training phase

+ # thus, experience batch size can be larger than or equal to train batch size

+ for bs in 1 2 4 8 16 32 64 128 256; do

+ torchrun --standalone --nproc_per_node $1 $PY_SCRIPT --model $2 --strategy $3 --experience_batch_size $bs --train_batch_size $bs || return 1

+ done

+}

+

+if [ $# -eq 0 ]; then

+ num_gpus=(1 2 4 8)

+else

+ num_gpus=($1)

+fi

+

+if [ $# -le 1 ]; then

+ strategies=("ddp" "colossalai_zero2" "colossalai_gemini" "colossalai_zero2_cpu" "colossalai_gemini_cpu")

+else

+ strategies=($2)

+fi

+

+if [ $# -le 2 ]; then

+ models=("s" "m" "l" "xl" "2b" "4b" "6b" "8b" "10b")

+else

+ models=($3)

+fi

+

+

+for num_gpu in ${num_gpus[@]}; do

+ for strategy in ${strategies[@]}; do

+ for model in ${models[@]}; do

+ tune_batch_size $num_gpu $model $strategy || break

+ done

+ done

+done

diff --git a/applications/ChatGPT/benchmarks/benchmark_opt_lora_dummy.py b/applications/ChatGPT/benchmarks/benchmark_opt_lora_dummy.py

new file mode 100644

index 000000000..accbc4155

--- /dev/null

+++ b/applications/ChatGPT/benchmarks/benchmark_opt_lora_dummy.py

@@ -0,0 +1,178 @@

+import argparse

+from copy import deepcopy

+

+import torch

+import torch.distributed as dist

+import torch.nn as nn

+from chatgpt.nn import OPTActor, OPTCritic, RewardModel

+from chatgpt.nn.generation_utils import opt_prepare_inputs_fn, update_model_kwargs_fn

+from chatgpt.trainer import PPOTrainer

+from chatgpt.trainer.callbacks import PerformanceEvaluator

+from chatgpt.trainer.strategies import ColossalAIStrategy, DDPStrategy, Strategy

+from torch.optim import Adam

+from transformers import AutoTokenizer

+from transformers.models.opt.configuration_opt import OPTConfig

+

+from colossalai.nn.optimizer import HybridAdam

+

+

+def get_model_numel(model: nn.Module, strategy: Strategy) -> int:

+ numel = sum(p.numel() for p in model.parameters())

+ if isinstance(strategy, ColossalAIStrategy) and strategy.stage == 3 and strategy.shard_init:

+ numel *= dist.get_world_size()

+ return numel

+

+

+def preprocess_batch(samples) -> dict:

+ input_ids = torch.stack(samples)

+ attention_mask = torch.ones_like(input_ids, dtype=torch.long)

+ return {'input_ids': input_ids, 'attention_mask': attention_mask}

+

+

+def print_rank_0(*args, **kwargs) -> None:

+ if dist.get_rank() == 0:

+ print(*args, **kwargs)

+

+

+def print_model_numel(model_dict: dict) -> None:

+ B = 1024**3

+ M = 1024**2

+ K = 1024

+ outputs = ''

+ for name, numel in model_dict.items():

+ outputs += f'{name}: '

+ if numel >= B:

+ outputs += f'{numel / B:.2f} B\n'

+ elif numel >= M:

+ outputs += f'{numel / M:.2f} M\n'

+ elif numel >= K:

+ outputs += f'{numel / K:.2f} K\n'

+ else:

+ outputs += f'{numel}\n'

+ print_rank_0(outputs)

+

+

+def get_gpt_config(model_name: str) -> OPTConfig:

+ model_map = {

+ '125m': OPTConfig.from_pretrained('facebook/opt-125m'),

+ '350m': OPTConfig(hidden_size=1024, ffn_dim=4096, num_hidden_layers=24, num_attention_heads=16),

+ '700m': OPTConfig(hidden_size=1280, ffn_dim=5120, num_hidden_layers=36, num_attention_heads=20),

+ '1.3b': OPTConfig.from_pretrained('facebook/opt-1.3b'),

+ '2.7b': OPTConfig.from_pretrained('facebook/opt-2.7b'),

+ '3.5b': OPTConfig(hidden_size=3072, ffn_dim=12288, num_hidden_layers=32, num_attention_heads=32),

+ '5.5b': OPTConfig(hidden_size=3840, ffn_dim=15360, num_hidden_layers=32, num_attention_heads=32),

+ '6.7b': OPTConfig.from_pretrained('facebook/opt-6.7b'),

+ '10b': OPTConfig(hidden_size=5120, ffn_dim=20480, num_hidden_layers=32, num_attention_heads=32),

+ '13b': OPTConfig.from_pretrained('facebook/opt-13b'),

+ }

+ try:

+ return model_map[model_name]

+ except KeyError:

+ raise ValueError(f'Unknown model "{model_name}"')

+

+

+def main(args):

+ if args.strategy == 'ddp':

+ strategy = DDPStrategy()

+ elif args.strategy == 'colossalai_gemini':

+ strategy = ColossalAIStrategy(stage=3, placement_policy='cuda', initial_scale=2**5)

+ elif args.strategy == 'colossalai_gemini_cpu':

+ strategy = ColossalAIStrategy(stage=3, placement_policy='cpu', initial_scale=2**5)

+ elif args.strategy == 'colossalai_zero2':

+ strategy = ColossalAIStrategy(stage=2, placement_policy='cuda')

+ elif args.strategy == 'colossalai_zero2_cpu':

+ strategy = ColossalAIStrategy(stage=2, placement_policy='cpu')

+ elif args.strategy == 'colossalai_zero1':

+ strategy = ColossalAIStrategy(stage=1, placement_policy='cuda')

+ elif args.strategy == 'colossalai_zero1_cpu':

+ strategy = ColossalAIStrategy(stage=1, placement_policy='cpu')

+ else:

+ raise ValueError(f'Unsupported strategy "{args.strategy}"')

+

+ torch.cuda.set_per_process_memory_fraction(args.cuda_mem_frac)

+

+ model_config = get_gpt_config(args.model)

+

+ with strategy.model_init_context():

+ actor = OPTActor(config=model_config, lora_rank=args.lora_rank).cuda()

+ critic = OPTCritic(config=model_config, lora_rank=args.lora_rank).cuda()

+

+ initial_model = deepcopy(actor).cuda()

+ reward_model = RewardModel(deepcopy(critic.model), deepcopy(critic.value_head)).cuda()

+

+ actor_numel = get_model_numel(actor, strategy)

+ critic_numel = get_model_numel(critic, strategy)

+ initial_model_numel = get_model_numel(initial_model, strategy)

+ reward_model_numel = get_model_numel(reward_model, strategy)

+ print_model_numel({

+ 'Actor': actor_numel,

+ 'Critic': critic_numel,

+ 'Initial model': initial_model_numel,

+ 'Reward model': reward_model_numel

+ })

+ performance_evaluator = PerformanceEvaluator(actor_numel,

+ critic_numel,

+ initial_model_numel,

+ reward_model_numel,

+ enable_grad_checkpoint=False,

+ ignore_episodes=1)

+

+ if args.strategy.startswith('colossalai'):

+ actor_optim = HybridAdam(actor.parameters(), lr=5e-6)

+ critic_optim = HybridAdam(critic.parameters(), lr=5e-6)

+ else:

+ actor_optim = Adam(actor.parameters(), lr=5e-6)

+ critic_optim = Adam(critic.parameters(), lr=5e-6)

+

+ tokenizer = AutoTokenizer.from_pretrained('facebook/opt-350m')

+ tokenizer.pad_token = tokenizer.eos_token

+

+ trainer = PPOTrainer(strategy,

+ actor,

+ critic,

+ reward_model,

+ initial_model,

+ actor_optim,

+ critic_optim,

+ max_epochs=args.max_epochs,

+ train_batch_size=args.train_batch_size,

+ experience_batch_size=args.experience_batch_size,

+ tokenizer=preprocess_batch,

+ max_length=512,

+ do_sample=True,

+ temperature=1.0,

+ top_k=50,

+ pad_token_id=tokenizer.pad_token_id,

+ eos_token_id=tokenizer.eos_token_id,

+ prepare_inputs_fn=opt_prepare_inputs_fn,

+ update_model_kwargs_fn=update_model_kwargs_fn,

+ callbacks=[performance_evaluator])

+

+ random_prompts = torch.randint(tokenizer.vocab_size, (1000, 400), device=torch.cuda.current_device())

+ trainer.fit(random_prompts,

+ num_episodes=args.num_episodes,

+ max_timesteps=args.max_timesteps,

+ update_timesteps=args.update_timesteps)

+

+ print_rank_0(f'Peak CUDA mem: {torch.cuda.max_memory_allocated()/1024**3:.2f} GB')

+

+

+if __name__ == '__main__':

+ parser = argparse.ArgumentParser()

+ parser.add_argument('--model', default='125m')

+ parser.add_argument('--strategy',

+ choices=[

+ 'ddp', 'colossalai_gemini', 'colossalai_gemini_cpu', 'colossalai_zero2',

+ 'colossalai_zero2_cpu', 'colossalai_zero1', 'colossalai_zero1_cpu'

+ ],

+ default='ddp')

+ parser.add_argument('--num_episodes', type=int, default=3)

+ parser.add_argument('--max_timesteps', type=int, default=8)

+ parser.add_argument('--update_timesteps', type=int, default=8)

+ parser.add_argument('--max_epochs', type=int, default=3)

+ parser.add_argument('--train_batch_size', type=int, default=8)

+ parser.add_argument('--experience_batch_size', type=int, default=8)

+ parser.add_argument('--lora_rank', type=int, default=4)

+ parser.add_argument('--cuda_mem_frac', type=float, default=1.0)

+ args = parser.parse_args()

+ main(args)

diff --git a/applications/ChatGPT/chatgpt/__init__.py b/applications/ChatGPT/chatgpt/__init__.py

new file mode 100644

index 000000000..e69de29bb

diff --git a/applications/ChatGPT/chatgpt/dataset/__init__.py b/applications/ChatGPT/chatgpt/dataset/__init__.py

new file mode 100644

index 000000000..2f330ee67

--- /dev/null

+++ b/applications/ChatGPT/chatgpt/dataset/__init__.py

@@ -0,0 +1,3 @@

+from .reward_dataset import RewardDataset

+

+__all__ = ['RewardDataset']

diff --git a/applications/ChatGPT/chatgpt/dataset/reward_dataset.py b/applications/ChatGPT/chatgpt/dataset/reward_dataset.py

new file mode 100644

index 000000000..14edcce30

--- /dev/null

+++ b/applications/ChatGPT/chatgpt/dataset/reward_dataset.py

@@ -0,0 +1,52 @@

+from typing import Callable

+

+from torch.utils.data import Dataset

+from tqdm import tqdm

+

+

+class RewardDataset(Dataset):

+ """

+ Dataset for reward model

+

+ Args:

+ dataset: dataset for reward model

+ tokenizer: tokenizer for reward model

+ max_length: max length of input

+ """

+

+ def __init__(self, dataset, tokenizer: Callable, max_length: int) -> None:

+ super().__init__()

+ self.chosen = []

+ self.reject = []

+ for data in tqdm(dataset):

+ prompt = data['prompt']

+

+ chosen = prompt + data['chosen'] + "<|endoftext|>"

+ chosen_token = tokenizer(chosen,

+ max_length=max_length,

+ padding="max_length",

+ truncation=True,

+ return_tensors="pt")

+ self.chosen.append({

+ "input_ids": chosen_token['input_ids'],

+ "attention_mask": chosen_token['attention_mask']

+ })

+

+ reject = prompt + data['rejected'] + "<|endoftext|>"

+ reject_token = tokenizer(reject,

+ max_length=max_length,

+ padding="max_length",

+ truncation=True,

+ return_tensors="pt")

+ self.reject.append({

+ "input_ids": reject_token['input_ids'],

+ "attention_mask": reject_token['attention_mask']

+ })

+

+ def __len__(self):

+ length = len(self.chosen)

+ return length

+

+ def __getitem__(self, idx):

+ return self.chosen[idx]["input_ids"], self.chosen[idx]["attention_mask"], self.reject[idx][

+ "input_ids"], self.reject[idx]["attention_mask"]

diff --git a/applications/ChatGPT/chatgpt/experience_maker/__init__.py b/applications/ChatGPT/chatgpt/experience_maker/__init__.py

new file mode 100644

index 000000000..39ca7576b

--- /dev/null

+++ b/applications/ChatGPT/chatgpt/experience_maker/__init__.py

@@ -0,0 +1,4 @@

+from .base import Experience, ExperienceMaker

+from .naive import NaiveExperienceMaker

+

+__all__ = ['Experience', 'ExperienceMaker', 'NaiveExperienceMaker']

diff --git a/applications/ChatGPT/chatgpt/experience_maker/base.py b/applications/ChatGPT/chatgpt/experience_maker/base.py

new file mode 100644

index 000000000..61895322c

--- /dev/null

+++ b/applications/ChatGPT/chatgpt/experience_maker/base.py

@@ -0,0 +1,77 @@

+from abc import ABC, abstractmethod

+from dataclasses import dataclass

+from typing import Optional

+

+import torch

+import torch.nn as nn

+from chatgpt.nn.actor import Actor

+

+

+@dataclass

+class Experience:

+ """Experience is a batch of data.

+ These data should have the the sequence length and number of actions.

+ Left padding for sequences is applied.

+

+ Shapes of each tensor:

+ sequences: (B, S)

+ action_log_probs: (B, A)

+ values: (B)

+ reward: (B)

+ advatanges: (B)

+ attention_mask: (B, S)

+ action_mask: (B, A)

+

+ "A" is the number of actions.

+ """

+ sequences: torch.Tensor

+ action_log_probs: torch.Tensor

+ values: torch.Tensor

+ reward: torch.Tensor

+ advantages: torch.Tensor

+ attention_mask: Optional[torch.LongTensor]

+ action_mask: Optional[torch.BoolTensor]

+

+ @torch.no_grad()

+ def to_device(self, device: torch.device) -> None:

+ self.sequences = self.sequences.to(device)

+ self.action_log_probs = self.action_log_probs.to(device)

+ self.values = self.values.to(device)

+ self.reward = self.reward.to(device)

+ self.advantages = self.advantages.to(device)

+ if self.attention_mask is not None:

+ self.attention_mask = self.attention_mask.to(device)

+ if self.action_mask is not None:

+ self.action_mask = self.action_mask.to(device)

+

+ def pin_memory(self):

+ self.sequences = self.sequences.pin_memory()

+ self.action_log_probs = self.action_log_probs.pin_memory()

+ self.values = self.values.pin_memory()

+ self.reward = self.reward.pin_memory()

+ self.advantages = self.advantages.pin_memory()

+ if self.attention_mask is not None:

+ self.attention_mask = self.attention_mask.pin_memory()

+ if self.action_mask is not None:

+ self.action_mask = self.action_mask.pin_memory()

+ return self

+

+

+class ExperienceMaker(ABC):

+

+ def __init__(self,

+ actor: Actor,

+ critic: nn.Module,

+ reward_model: nn.Module,

+ initial_model: Actor,

+ kl_coef: float = 0.1) -> None:

+ super().__init__()

+ self.actor = actor

+ self.critic = critic

+ self.reward_model = reward_model

+ self.initial_model = initial_model

+ self.kl_coef = kl_coef

+

+ @abstractmethod

+ def make_experience(self, input_ids: torch.Tensor, **generate_kwargs) -> Experience:

+ pass

diff --git a/applications/ChatGPT/chatgpt/experience_maker/naive.py b/applications/ChatGPT/chatgpt/experience_maker/naive.py

new file mode 100644

index 000000000..f4fd2078c

--- /dev/null

+++ b/applications/ChatGPT/chatgpt/experience_maker/naive.py

@@ -0,0 +1,36 @@

+import torch

+from chatgpt.nn.utils import compute_reward, normalize

+

+from .base import Experience, ExperienceMaker

+

+

+class NaiveExperienceMaker(ExperienceMaker):

+ """

+ Naive experience maker.

+ """

+

+ @torch.no_grad()

+ def make_experience(self, input_ids: torch.Tensor, **generate_kwargs) -> Experience:

+ self.actor.eval()

+ self.critic.eval()

+ self.initial_model.eval()

+ self.reward_model.eval()

+

+ sequences, attention_mask, action_mask = self.actor.generate(input_ids,

+ return_action_mask=True,

+ **generate_kwargs)

+ num_actions = action_mask.size(1)

+

+ action_log_probs = self.actor(sequences, num_actions, attention_mask)

+ base_action_log_probs = self.initial_model(sequences, num_actions, attention_mask)

+ value = self.critic(sequences, action_mask, attention_mask)

+ r = self.reward_model(sequences, attention_mask)

+

+ reward = compute_reward(r, self.kl_coef, action_log_probs, base_action_log_probs, action_mask=action_mask)

+

+ advantage = reward - value

+ # TODO(ver217): maybe normalize adv

+ if advantage.ndim == 1:

+ advantage = advantage.unsqueeze(-1)

+

+ return Experience(sequences, action_log_probs, value, reward, advantage, attention_mask, action_mask)

diff --git a/applications/ChatGPT/chatgpt/nn/__init__.py b/applications/ChatGPT/chatgpt/nn/__init__.py

new file mode 100644

index 000000000..c728d7df3

--- /dev/null

+++ b/applications/ChatGPT/chatgpt/nn/__init__.py

@@ -0,0 +1,18 @@

+from .actor import Actor

+from .bloom_actor import BLOOMActor

+from .bloom_critic import BLOOMCritic

+from .bloom_rm import BLOOMRM

+from .critic import Critic

+from .gpt_actor import GPTActor

+from .gpt_critic import GPTCritic

+from .gpt_rm import GPTRM

+from .loss import PairWiseLoss, PolicyLoss, PPOPtxActorLoss, ValueLoss

+from .opt_actor import OPTActor

+from .opt_critic import OPTCritic

+from .opt_rm import OPTRM

+from .reward_model import RewardModel

+

+__all__ = [

+ 'Actor', 'Critic', 'RewardModel', 'PolicyLoss', 'ValueLoss', 'PPOPtxActorLoss', 'PairWiseLoss', 'GPTActor',

+ 'GPTCritic', 'GPTRM', 'BLOOMActor', 'BLOOMCritic', 'BLOOMRM', 'OPTActor', 'OPTCritic', 'OPTRM'

+]

diff --git a/applications/ChatGPT/chatgpt/nn/actor.py b/applications/ChatGPT/chatgpt/nn/actor.py

new file mode 100644

index 000000000..c4c0d579d

--- /dev/null

+++ b/applications/ChatGPT/chatgpt/nn/actor.py

@@ -0,0 +1,62 @@

+from typing import Optional, Tuple, Union

+

+import torch

+import torch.nn as nn

+import torch.nn.functional as F

+

+from .generation import generate

+from .lora import LoRAModule

+from .utils import log_probs_from_logits

+

+

+class Actor(LoRAModule):

+ """

+ Actor model base class.

+

+ Args:

+ model (nn.Module): Actor Model.

+ lora_rank (int): LoRA rank.

+ lora_train_bias (str): LoRA bias training mode.

+ """

+

+ def __init__(self, model: nn.Module, lora_rank: int = 0, lora_train_bias: str = 'none') -> None:

+ super().__init__(lora_rank=lora_rank, lora_train_bias=lora_train_bias)

+ self.model = model

+ self.convert_to_lora()

+

+ @torch.no_grad()

+ def generate(

+ self,

+ input_ids: torch.Tensor,

+ return_action_mask: bool = True,

+ **kwargs

+ ) -> Union[Tuple[torch.LongTensor, torch.LongTensor], Tuple[torch.LongTensor, torch.LongTensor, torch.BoolTensor]]:

+ sequences = generate(self.model, input_ids, **kwargs)

+ attention_mask = None

+ pad_token_id = kwargs.get('pad_token_id', None)

+ if pad_token_id is not None:

+ attention_mask = sequences.not_equal(pad_token_id).to(dtype=torch.long, device=sequences.device)

+ if not return_action_mask:

+ return sequences, attention_mask

+ input_len = input_ids.size(1)

+ eos_token_id = kwargs.get('eos_token_id', None)

+ if eos_token_id is None:

+ action_mask = torch.ones_like(sequences, dtype=torch.bool)

+ else:

+ # left padding may be applied, only mask action

+ action_mask = (sequences[:, input_len:] == eos_token_id).cumsum(dim=-1) == 0

+ action_mask = F.pad(action_mask, (1 + input_len, -1), value=True) # include eos token and input

+ action_mask[:, :input_len] = False

+ action_mask = action_mask[:, 1:]

+ return sequences, attention_mask, action_mask[:, -(sequences.size(1) - input_len):]

+

+ def forward(self,

+ sequences: torch.LongTensor,

+ num_actions: int,

+ attention_mask: Optional[torch.Tensor] = None) -> torch.Tensor:

+ """Returns action log probs

+ """

+ output = self.model(sequences, attention_mask=attention_mask)

+ logits = output['logits']

+ log_probs = log_probs_from_logits(logits[:, :-1, :], sequences[:, 1:])

+ return log_probs[:, -num_actions:]

diff --git a/applications/ChatGPT/chatgpt/nn/bloom_actor.py b/applications/ChatGPT/chatgpt/nn/bloom_actor.py

new file mode 100644

index 000000000..103536bc3

--- /dev/null

+++ b/applications/ChatGPT/chatgpt/nn/bloom_actor.py

@@ -0,0 +1,35 @@

+from typing import Optional

+

+import torch

+from transformers import BloomConfig, BloomForCausalLM, BloomModel

+

+from .actor import Actor

+

+

+class BLOOMActor(Actor):

+ """

+ BLOOM Actor model.

+

+ Args:

+ pretrained (str): Pretrained model name or path.

+ config (BloomConfig): Model config.

+ checkpoint (bool): Enable gradient checkpointing.

+ lora_rank (int): LoRA rank.

+ lora_train_bias (str): LoRA bias training mode.

+ """

+

+ def __init__(self,

+ pretrained: str = None,

+ config: Optional[BloomConfig] = None,

+ checkpoint: bool = False,

+ lora_rank: int = 0,

+ lora_train_bias: str = 'none') -> None:

+ if pretrained is not None:

+ model = BloomForCausalLM.from_pretrained(pretrained)

+ elif config is not None:

+ model = BloomForCausalLM(config)

+ else:

+ model = BloomForCausalLM(BloomConfig())

+ if checkpoint:

+ model.gradient_checkpointing_enable()

+ super().__init__(model, lora_rank, lora_train_bias)

diff --git a/applications/ChatGPT/chatgpt/nn/bloom_critic.py b/applications/ChatGPT/chatgpt/nn/bloom_critic.py

new file mode 100644

index 000000000..3b03471a3

--- /dev/null

+++ b/applications/ChatGPT/chatgpt/nn/bloom_critic.py

@@ -0,0 +1,37 @@

+from typing import Optional

+

+import torch

+import torch.nn as nn

+from transformers import BloomConfig, BloomForCausalLM, BloomModel

+

+from .critic import Critic

+

+

+class BLOOMCritic(Critic):

+ """

+ BLOOM Critic model.

+

+ Args:

+ pretrained (str): Pretrained model name or path.

+ config (BloomConfig): Model config.

+ checkpoint (bool): Enable gradient checkpointing.

+ lora_rank (int): LoRA rank.

+ lora_train_bias (str): LoRA bias training mode.

+ """

+

+ def __init__(self,

+ pretrained: str = None,

+ config: Optional[BloomConfig] = None,

+ checkpoint: bool = False,

+ lora_rank: int = 0,

+ lora_train_bias: str = 'none') -> None:

+ if pretrained is not None:

+ model = BloomModel.from_pretrained(pretrained)

+ elif config is not None:

+ model = BloomModel(config)

+ else:

+ model = BloomModel(BloomConfig())

+ if checkpoint:

+ model.gradient_checkpointing_enable()

+ value_head = nn.Linear(model.config.hidden_size, 1)

+ super().__init__(model, value_head, lora_rank, lora_train_bias)

diff --git a/applications/ChatGPT/chatgpt/nn/bloom_rm.py b/applications/ChatGPT/chatgpt/nn/bloom_rm.py

new file mode 100644

index 000000000..0d4dd43fa

--- /dev/null

+++ b/applications/ChatGPT/chatgpt/nn/bloom_rm.py

@@ -0,0 +1,37 @@

+from typing import Optional

+

+import torch

+import torch.nn as nn

+from transformers import BloomConfig, BloomForCausalLM, BloomModel

+

+from .reward_model import RewardModel

+

+

+class BLOOMRM(RewardModel):

+ """

+ BLOOM Reward model.

+

+ Args:

+ pretrained (str): Pretrained model name or path.

+ config (BloomConfig): Model config.

+ checkpoint (bool): Enable gradient checkpointing.

+ lora_rank (int): LoRA rank.

+ lora_train_bias (str): LoRA bias training mode.

+ """

+

+ def __init__(self,

+ pretrained: str = None,

+ config: Optional[BloomConfig] = None,

+ checkpoint: bool = False,

+ lora_rank: int = 0,

+ lora_train_bias: str = 'none') -> None:

+ if pretrained is not None:

+ model = BloomModel.from_pretrained(pretrained)

+ elif config is not None:

+ model = BloomModel(config)

+ else:

+ model = BloomModel(BloomConfig())

+ if checkpoint:

+ model.gradient_checkpointing_enable()

+ value_head = nn.Linear(model.config.hidden_size, 1)

+ super().__init__(model, value_head, lora_rank, lora_train_bias)

diff --git a/applications/ChatGPT/chatgpt/nn/critic.py b/applications/ChatGPT/chatgpt/nn/critic.py

new file mode 100644

index 000000000..f3a123854

--- /dev/null

+++ b/applications/ChatGPT/chatgpt/nn/critic.py

@@ -0,0 +1,47 @@

+from typing import Optional

+

+import torch

+import torch.nn as nn

+

+from .lora import LoRAModule

+from .utils import masked_mean

+

+

+class Critic(LoRAModule):

+ """

+ Critic model base class.

+

+ Args:

+ model (nn.Module): Critic model.

+ value_head (nn.Module): Value head to get value.

+ lora_rank (int): LoRA rank.

+ lora_train_bias (str): LoRA bias training mode.

+ """

+

+ def __init__(self,

+ model: nn.Module,

+ value_head: nn.Module,

+ lora_rank: int = 0,

+ lora_train_bias: str = 'none') -> None:

+

+ super().__init__(lora_rank=lora_rank, lora_train_bias=lora_train_bias)

+ self.model = model

+ self.value_head = value_head

+ self.convert_to_lora()

+

+ def forward(self,

+ sequences: torch.LongTensor,

+ action_mask: Optional[torch.Tensor] = None,

+ attention_mask: Optional[torch.Tensor] = None) -> torch.Tensor:

+ outputs = self.model(sequences, attention_mask=attention_mask)

+ last_hidden_states = outputs['last_hidden_state']

+

+ values = self.value_head(last_hidden_states).squeeze(-1)[:, :-1]

+

+ if action_mask is not None:

+ num_actions = action_mask.size(1)

+ values = values[:, -num_actions:]

+ value = masked_mean(values, action_mask, dim=1)

+ return value

+ value = values.mean(dim=1).squeeze(1)

+ return value

diff --git a/applications/ChatGPT/chatgpt/nn/generation.py b/applications/ChatGPT/chatgpt/nn/generation.py

new file mode 100644

index 000000000..4ee797561

--- /dev/null

+++ b/applications/ChatGPT/chatgpt/nn/generation.py

@@ -0,0 +1,137 @@

+from typing import Any, Callable, Optional

+

+import torch

+import torch.nn as nn

+

+try:

+ from transformers.generation_logits_process import (

+ LogitsProcessorList,

+ TemperatureLogitsWarper,

+ TopKLogitsWarper,

+ TopPLogitsWarper,

+ )

+except ImportError:

+ from transformers.generation import LogitsProcessorList, TemperatureLogitsWarper, TopKLogitsWarper, TopPLogitsWarper

+

+

+def prepare_logits_processor(top_k: Optional[int] = None,

+ top_p: Optional[float] = None,

+ temperature: Optional[float] = None) -> LogitsProcessorList:

+ processor_list = LogitsProcessorList()

+ if temperature is not None and temperature != 1.0:

+ processor_list.append(TemperatureLogitsWarper(temperature))

+ if top_k is not None and top_k != 0:

+ processor_list.append(TopKLogitsWarper(top_k))

+ if top_p is not None and top_p < 1.0:

+ processor_list.append(TopPLogitsWarper(top_p))

+ return processor_list

+

+

+def sample(model: nn.Module,

+ input_ids: torch.Tensor,

+ max_length: int,

+ early_stopping: bool = False,

+ eos_token_id: Optional[int] = None,

+ pad_token_id: Optional[int] = None,

+ top_k: Optional[int] = None,

+ top_p: Optional[float] = None,

+ temperature: Optional[float] = None,

+ prepare_inputs_fn: Optional[Callable[[torch.Tensor, Any], dict]] = None,

+ update_model_kwargs_fn: Optional[Callable[[dict, Any], dict]] = None,

+ **model_kwargs) -> torch.Tensor:

+ if input_ids.size(1) >= max_length:

+ return input_ids

+

+ logits_processor = prepare_logits_processor(top_k, top_p, temperature)

+ unfinished_sequences = input_ids.new(input_ids.shape[0]).fill_(1)

+

+ for _ in range(input_ids.size(1), max_length):

+ model_inputs = prepare_inputs_fn(input_ids, **model_kwargs) if prepare_inputs_fn is not None else {

+ 'input_ids': input_ids

+ }

+ outputs = model(**model_inputs)

+

+ next_token_logits = outputs['logits'][:, -1, :]

+ # pre-process distribution

+ next_token_logits = logits_processor(input_ids, next_token_logits)

+ # sample

+ probs = torch.softmax(next_token_logits, dim=-1, dtype=torch.float)

+ next_tokens = torch.multinomial(probs, num_samples=1).squeeze(1)

+

+ # finished sentences should have their next token be a padding token

+ if eos_token_id is not None:

+ if pad_token_id is None:

+ raise ValueError("If `eos_token_id` is defined, make sure that `pad_token_id` is defined.")

+ next_tokens = next_tokens * unfinished_sequences + pad_token_id * (1 - unfinished_sequences)

+

+ # update generated ids, model inputs for next step

+ input_ids = torch.cat([input_ids, next_tokens[:, None]], dim=-1)

+ if update_model_kwargs_fn is not None:

+ model_kwargs = update_model_kwargs_fn(outputs, **model_kwargs)

+

+ # if eos_token was found in one sentence, set sentence to finished

+ if eos_token_id is not None:

+ unfinished_sequences = unfinished_sequences.mul((next_tokens != eos_token_id).long())

+

+ # stop when each sentence is finished if early_stopping=True

+ if early_stopping and unfinished_sequences.max() == 0:

+ break

+

+ return input_ids

+

+

+def generate(model: nn.Module,

+ input_ids: torch.Tensor,

+ max_length: int,

+ num_beams: int = 1,

+ do_sample: bool = True,

+ early_stopping: bool = False,

+ eos_token_id: Optional[int] = None,

+ pad_token_id: Optional[int] = None,

+ top_k: Optional[int] = None,

+ top_p: Optional[float] = None,

+ temperature: Optional[float] = None,

+ prepare_inputs_fn: Optional[Callable[[torch.Tensor, Any], dict]] = None,

+ update_model_kwargs_fn: Optional[Callable[[dict, Any], dict]] = None,

+ **model_kwargs) -> torch.Tensor:

+ """Generate token sequence. The returned sequence is input_ids + generated_tokens.

+

+ Args:

+ model (nn.Module): model

+ input_ids (torch.Tensor): input sequence

+ max_length (int): max length of the returned sequence

+ num_beams (int, optional): number of beams. Defaults to 1.

+ do_sample (bool, optional): whether to do sample. Defaults to True.

+ early_stopping (bool, optional): if True, the sequence length may be smaller than max_length due to finding eos. Defaults to False.

+ eos_token_id (Optional[int], optional): end of sequence token id. Defaults to None.

+ pad_token_id (Optional[int], optional): pad token id. Defaults to None.

+ top_k (Optional[int], optional): the number of highest probability vocabulary tokens to keep for top-k-filtering. Defaults to None.

+ top_p (Optional[float], optional): If set to float < 1, only the smallest set of most probable tokens with probabilities that add up to top_p or higher are kept for generation. Defaults to None.

+ temperature (Optional[float], optional): The value used to module the next token probabilities. Defaults to None.

+ prepare_inputs_fn (Optional[Callable[[torch.Tensor, Any], dict]], optional): Function to preprocess model inputs. Arguments of this function should be input_ids and model_kwargs. Defaults to None.

+ update_model_kwargs_fn (Optional[Callable[[dict, Any], dict]], optional): Function to update model_kwargs based on outputs. Arguments of this function should be outputs and model_kwargs. Defaults to None.

+ """

+ is_greedy_gen_mode = ((num_beams == 1) and do_sample is False)

+ is_sample_gen_mode = ((num_beams == 1) and do_sample is True)

+ is_beam_gen_mode = ((num_beams > 1) and do_sample is False)

+ if is_greedy_gen_mode:

+ # run greedy search

+ raise NotImplementedError

+ elif is_sample_gen_mode:

+ # run sample

+ return sample(model,

+ input_ids,

+ max_length,

+ early_stopping=early_stopping,

+ eos_token_id=eos_token_id,

+ pad_token_id=pad_token_id,

+ top_k=top_k,

+ top_p=top_p,

+ temperature=temperature,

+ prepare_inputs_fn=prepare_inputs_fn,

+ update_model_kwargs_fn=update_model_kwargs_fn,

+ **model_kwargs)

+ elif is_beam_gen_mode:

+ raise NotImplementedError

+ else:

+ raise ValueError("Unsupported generation mode")

diff --git a/applications/ChatGPT/chatgpt/nn/generation_utils.py b/applications/ChatGPT/chatgpt/nn/generation_utils.py

new file mode 100644

index 000000000..c7bc1b383

--- /dev/null

+++ b/applications/ChatGPT/chatgpt/nn/generation_utils.py

@@ -0,0 +1,92 @@

+from typing import Optional

+

+import torch

+

+

+def gpt_prepare_inputs_fn(input_ids: torch.Tensor, past: Optional[torch.Tensor] = None, **kwargs) -> dict:

+ token_type_ids = kwargs.get("token_type_ids", None)

+ # only last token for inputs_ids if past is defined in kwargs

+ if past:

+ input_ids = input_ids[:, -1].unsqueeze(-1)

+ if token_type_ids is not None:

+ token_type_ids = token_type_ids[:, -1].unsqueeze(-1)

+

+ attention_mask = kwargs.get("attention_mask", None)

+ position_ids = kwargs.get("position_ids", None)

+

+ if attention_mask is not None and position_ids is None:

+ # create position_ids on the fly for batch generation

+ position_ids = attention_mask.long().cumsum(-1) - 1

+ position_ids.masked_fill_(attention_mask == 0, 1)

+ if past:

+ position_ids = position_ids[:, -1].unsqueeze(-1)

+ else:

+ position_ids = None

+ return {

+ "input_ids": input_ids,

+ "past_key_values": past,

+ "use_cache": kwargs.get("use_cache"),

+ "position_ids": position_ids,

+ "attention_mask": attention_mask,

+ "token_type_ids": token_type_ids,

+ }

+

+

+def update_model_kwargs_fn(outputs: dict, **model_kwargs) -> dict:

+ if "past_key_values" in outputs:

+ model_kwargs["past"] = outputs["past_key_values"]

+ else:

+ model_kwargs["past"] = None

+

+ # update token_type_ids with last value

+ if "token_type_ids" in model_kwargs:

+ token_type_ids = model_kwargs["token_type_ids"]

+ model_kwargs["token_type_ids"] = torch.cat([token_type_ids, token_type_ids[:, -1].unsqueeze(-1)], dim=-1)

+

+ # update attention mask

+ if "attention_mask" in model_kwargs:

+ attention_mask = model_kwargs["attention_mask"]

+ model_kwargs["attention_mask"] = torch.cat(

+ [attention_mask, attention_mask.new_ones((attention_mask.shape[0], 1))], dim=-1)

+

+ return model_kwargs

+

+

+def opt_prepare_inputs_fn(input_ids: torch.Tensor,

+ past: Optional[torch.Tensor] = None,

+ attention_mask: Optional[torch.Tensor] = None,

+ use_cache: Optional[bool] = None,

+ **kwargs) -> dict:

+ # if model is used as a decoder in encoder-decoder model, the decoder attention mask is created on the fly

+ if attention_mask is None:

+ attention_mask = input_ids.new_ones(input_ids.shape)

+

+ if past:

+ input_ids = input_ids[:, -1:]

+ # first step, decoder_cached_states are empty

+ return {

+ "input_ids": input_ids, # encoder_outputs is defined. input_ids not needed

+ "attention_mask": attention_mask,

+ "past_key_values": past,

+ "use_cache": use_cache,

+ }

+

+

+def bloom_prepare_inputs_fn(input_ids: torch.Tensor,

+ past: Optional[torch.Tensor] = None,

+ attention_mask: Optional[torch.Tensor] = None,

+ use_cache: Optional[bool] = None,

+ **kwargs) -> dict:

+ # if model is used as a decoder in encoder-decoder model, the decoder attention mask is created on the fly

+ if attention_mask is None:

+ attention_mask = input_ids.new_ones(input_ids.shape)

+

+ if past:

+ input_ids = input_ids[:, -1:]

+ # first step, decoder_cached_states are empty

+ return {

+ "input_ids": input_ids, # encoder_outputs is defined. input_ids not needed

+ "attention_mask": attention_mask,

+ "past_key_values": past,

+ "use_cache": use_cache,

+ }

diff --git a/applications/ChatGPT/chatgpt/nn/gpt_actor.py b/applications/ChatGPT/chatgpt/nn/gpt_actor.py

new file mode 100644

index 000000000..491182ffa

--- /dev/null

+++ b/applications/ChatGPT/chatgpt/nn/gpt_actor.py

@@ -0,0 +1,31 @@

+from typing import Optional

+

+from transformers.models.gpt2.configuration_gpt2 import GPT2Config

+from transformers.models.gpt2.modeling_gpt2 import GPT2LMHeadModel

+

+from .actor import Actor

+

+

+class GPTActor(Actor):

+ """

+ GPT Actor model.

+

+ Args:

+ pretrained (str): Pretrained model name or path.

+ config (GPT2Config): Model config.

+ checkpoint (bool): Enable gradient checkpointing.

+ """

+

+ def __init__(self,

+ pretrained: Optional[str] = None,

+ config: Optional[GPT2Config] = None,

+ checkpoint: bool = False) -> None:

+ if pretrained is not None:

+ model = GPT2LMHeadModel.from_pretrained(pretrained)

+ elif config is not None:

+ model = GPT2LMHeadModel(config)

+ else:

+ model = GPT2LMHeadModel(GPT2Config())

+ if checkpoint:

+ model.gradient_checkpointing_enable()

+ super().__init__(model)

diff --git a/applications/ChatGPT/chatgpt/nn/gpt_critic.py b/applications/ChatGPT/chatgpt/nn/gpt_critic.py

new file mode 100644

index 000000000..b0a001f4a

--- /dev/null

+++ b/applications/ChatGPT/chatgpt/nn/gpt_critic.py

@@ -0,0 +1,33 @@

+from typing import Optional

+

+import torch.nn as nn

+from transformers.models.gpt2.configuration_gpt2 import GPT2Config

+from transformers.models.gpt2.modeling_gpt2 import GPT2Model

+

+from .critic import Critic

+

+

+class GPTCritic(Critic):

+ """

+ GPT Critic model.

+

+ Args:

+ pretrained (str): Pretrained model name or path.

+ config (GPT2Config): Model config.

+ checkpoint (bool): Enable gradient checkpointing.

+ """

+

+ def __init__(self,

+ pretrained: Optional[str] = None,

+ config: Optional[GPT2Config] = None,

+ checkpoint: bool = False) -> None:

+ if pretrained is not None:

+ model = GPT2Model.from_pretrained(pretrained)

+ elif config is not None:

+ model = GPT2Model(config)

+ else:

+ model = GPT2Model(GPT2Config())

+ if checkpoint:

+ model.gradient_checkpointing_enable()

+ value_head = nn.Linear(model.config.n_embd, 1)

+ super().__init__(model, value_head)

diff --git a/applications/ChatGPT/chatgpt/nn/gpt_rm.py b/applications/ChatGPT/chatgpt/nn/gpt_rm.py

new file mode 100644

index 000000000..c6c41a45a

--- /dev/null

+++ b/applications/ChatGPT/chatgpt/nn/gpt_rm.py

@@ -0,0 +1,33 @@

+from typing import Optional

+

+import torch.nn as nn

+from transformers.models.gpt2.configuration_gpt2 import GPT2Config

+from transformers.models.gpt2.modeling_gpt2 import GPT2Model

+

+from .reward_model import RewardModel

+

+

+class GPTRM(RewardModel):

+ """

+ GPT Reward model.

+

+ Args:

+ pretrained (str): Pretrained model name or path.

+ config (GPT2Config): Model config.

+ checkpoint (bool): Enable gradient checkpointing.

+ """

+

+ def __init__(self,

+ pretrained: Optional[str] = None,

+ config: Optional[GPT2Config] = None,

+ checkpoint: bool = False) -> None:

+ if pretrained is not None:

+ model = GPT2Model.from_pretrained(pretrained)

+ elif config is not None:

+ model = GPT2Model(config)

+ else:

+ model = GPT2Model(GPT2Config())

+ if checkpoint:

+ model.gradient_checkpointing_enable()

+ value_head = nn.Linear(model.config.n_embd, 1)

+ super().__init__(model, value_head)

diff --git a/applications/ChatGPT/chatgpt/nn/lora.py b/applications/ChatGPT/chatgpt/nn/lora.py

new file mode 100644

index 000000000..46a43ec91

--- /dev/null

+++ b/applications/ChatGPT/chatgpt/nn/lora.py

@@ -0,0 +1,127 @@

+import math

+from typing import Optional

+

+import loralib as lora

+import torch

+import torch.nn as nn

+import torch.nn.functional as F

+

+

+class LoraLinear(lora.LoRALayer, nn.Module):

+ """Replace in-place ops to out-of-place ops to fit gemini. Convert a torch.nn.Linear to LoraLinear.

+ """

+

+ def __init__(

+ self,

+ weight: nn.Parameter,

+ bias: Optional[nn.Parameter],

+ r: int = 0,

+ lora_alpha: int = 1,

+ lora_dropout: float = 0.,

+ fan_in_fan_out: bool = False, # Set this to True if the layer to replace stores weight like (fan_in, fan_out)

+ merge_weights: bool = True,

+ ):

+ nn.Module.__init__(self)

+ lora.LoRALayer.__init__(self,

+ r=r,

+ lora_alpha=lora_alpha,

+ lora_dropout=lora_dropout,

+ merge_weights=merge_weights)

+ self.weight = weight

+ self.bias = bias

+

+ out_features, in_features = weight.shape

+ self.in_features = in_features

+ self.out_features = out_features

+

+ self.fan_in_fan_out = fan_in_fan_out

+ # Actual trainable parameters

+ if r > 0:

+ self.lora_A = nn.Parameter(self.weight.new_zeros((r, in_features)))

+ self.lora_B = nn.Parameter(self.weight.new_zeros((out_features, r)))

+ self.scaling = self.lora_alpha / self.r

+ # Freezing the pre-trained weight matrix

+ self.weight.requires_grad = False

+ self.reset_parameters()

+ if fan_in_fan_out:

+ self.weight.data = self.weight.data.T

+

+ def reset_parameters(self):

+ if hasattr(self, 'lora_A'):

+ # initialize A the same way as the default for nn.Linear and B to zero

+ nn.init.kaiming_uniform_(self.lora_A, a=math.sqrt(5))

+ nn.init.zeros_(self.lora_B)

+

+ def train(self, mode: bool = True):

+

+ def T(w):

+ return w.T if self.fan_in_fan_out else w

+

+ nn.Module.train(self, mode)

+ if self.merge_weights and self.merged:

+ # Make sure that the weights are not merged

+ if self.r > 0:

+ self.weight.data -= T(self.lora_B @ self.lora_A) * self.scaling

+ self.merged = False

+

+ def eval(self):

+

+ def T(w):

+ return w.T if self.fan_in_fan_out else w

+

+ nn.Module.eval(self)

+ if self.merge_weights and not self.merged:

+ # Merge the weights and mark it

+ if self.r > 0:

+ self.weight.data += T(self.lora_B @ self.lora_A) * self.scaling

+ self.merged = True

+

+ def forward(self, x: torch.Tensor):

+

+ def T(w):

+ return w.T if self.fan_in_fan_out else w

+

+ if self.r > 0 and not self.merged:

+ result = F.linear(x, T(self.weight), bias=self.bias)

+ if self.r > 0:

+ result = result + (self.lora_dropout(x) @ self.lora_A.t() @ self.lora_B.t()) * self.scaling

+ return result

+ else:

+ return F.linear(x, T(self.weight), bias=self.bias)

+

+

+def lora_linear_wrapper(linear: nn.Linear, lora_rank: int) -> LoraLinear:

+ assert lora_rank <= linear.in_features, f'LoRA rank ({lora_rank}) must be less than or equal to in features ({linear.in_features})'

+ lora_linear = LoraLinear(linear.weight, linear.bias, r=lora_rank, merge_weights=False)

+ return lora_linear

+

+

+def convert_to_lora_recursively(module: nn.Module, lora_rank: int) -> None:

+ for name, child in module.named_children():

+ if isinstance(child, nn.Linear):

+ setattr(module, name, lora_linear_wrapper(child, lora_rank))

+ else:

+ convert_to_lora_recursively(child, lora_rank)

+

+

+class LoRAModule(nn.Module):

+ """A LoRA module base class. All derived classes should call `convert_to_lora()` at the bottom of `__init__()`.

+ This calss will convert all torch.nn.Linear layer to LoraLinear layer.

+

+ Args:

+ lora_rank (int, optional): LoRA rank. 0 means LoRA is not applied. Defaults to 0.

+ lora_train_bias (str, optional): Whether LoRA train biases.

+ 'none' means it doesn't train biases. 'all' means it trains all biases. 'lora_only' means it only trains biases of LoRA layers.

+ Defaults to 'none'.

+ """

+

+ def __init__(self, lora_rank: int = 0, lora_train_bias: str = 'none') -> None:

+ super().__init__()

+ self.lora_rank = lora_rank

+ self.lora_train_bias = lora_train_bias

+

+ def convert_to_lora(self) -> None:

+ if self.lora_rank <= 0:

+ return

+ convert_to_lora_recursively(self, self.lora_rank)

+ lora.mark_only_lora_as_trainable(self, self.lora_train_bias)

diff --git a/applications/ChatGPT/chatgpt/nn/loss.py b/applications/ChatGPT/chatgpt/nn/loss.py

new file mode 100644

index 000000000..0ebcfea06

--- /dev/null

+++ b/applications/ChatGPT/chatgpt/nn/loss.py

@@ -0,0 +1,105 @@

+from typing import Optional

+

+import torch

+import torch.nn as nn

+

+from .utils import masked_mean

+

+

+class GPTLMLoss(nn.Module):

+ """

+ GPT Language Model Loss

+ """

+

+ def __init__(self):

+ super().__init__()

+ self.loss = nn.CrossEntropyLoss()

+

+ def forward(self, logits: torch.Tensor, labels: torch.Tensor) -> torch.Tensor:

+ shift_logits = logits[..., :-1, :].contiguous()

+ shift_labels = labels[..., 1:].contiguous()

+ # Flatten the tokens

+ return self.loss(shift_logits.view(-1, shift_logits.size(-1)), shift_labels.view(-1))

+

+

+class PolicyLoss(nn.Module):

+ """

+ Policy Loss for PPO

+ """

+