diff --git a/README.md b/README.md

index 9dc9da535..c790f1f36 100644

--- a/README.md

+++ b/README.md

@@ -73,25 +73,33 @@ Colossal-AI provides a collection of parallel training components for you. We ai

distributed deep learning models just like how you write your model on your laptop. We provide user-friendly tools to kickstart

distributed training in a few lines.

-- Data Parallelism

-- Pipeline Parallelism

-- 1D, 2D, 2.5D, 3D tensor parallelism

-- Sequence parallelism

-- Friendly trainer and engine

-- Extensible for new parallelism

-- Mixed Precision Training

-- Zero Redundancy Optimizer (ZeRO)

+- Parallelism strategies

+ - Data Parallelism

+ - Pipeline Parallelism

+ - 1D, [2D](https://arxiv.org/abs/2104.05343), [2.5D](https://arxiv.org/abs/2105.14500), 3D Tensor Parallelism

+ - [Sequence parallelism](https://arxiv.org/abs/2105.13120)

+ - [Zero Redundancy Optimizer (ZeRO)](https://arxiv.org/abs/1910.02054)

+

+- Heterogeneous Memory Management

+ - [PatrickStar](https://arxiv.org/abs/2108.05818)

+

+- Friendly Usage

+ - Configuration file based

(back to top)

## Demo

### ViT

+

+

+

- 14x larger batch size, and 5x faster training for Tensor Parallelism = 64

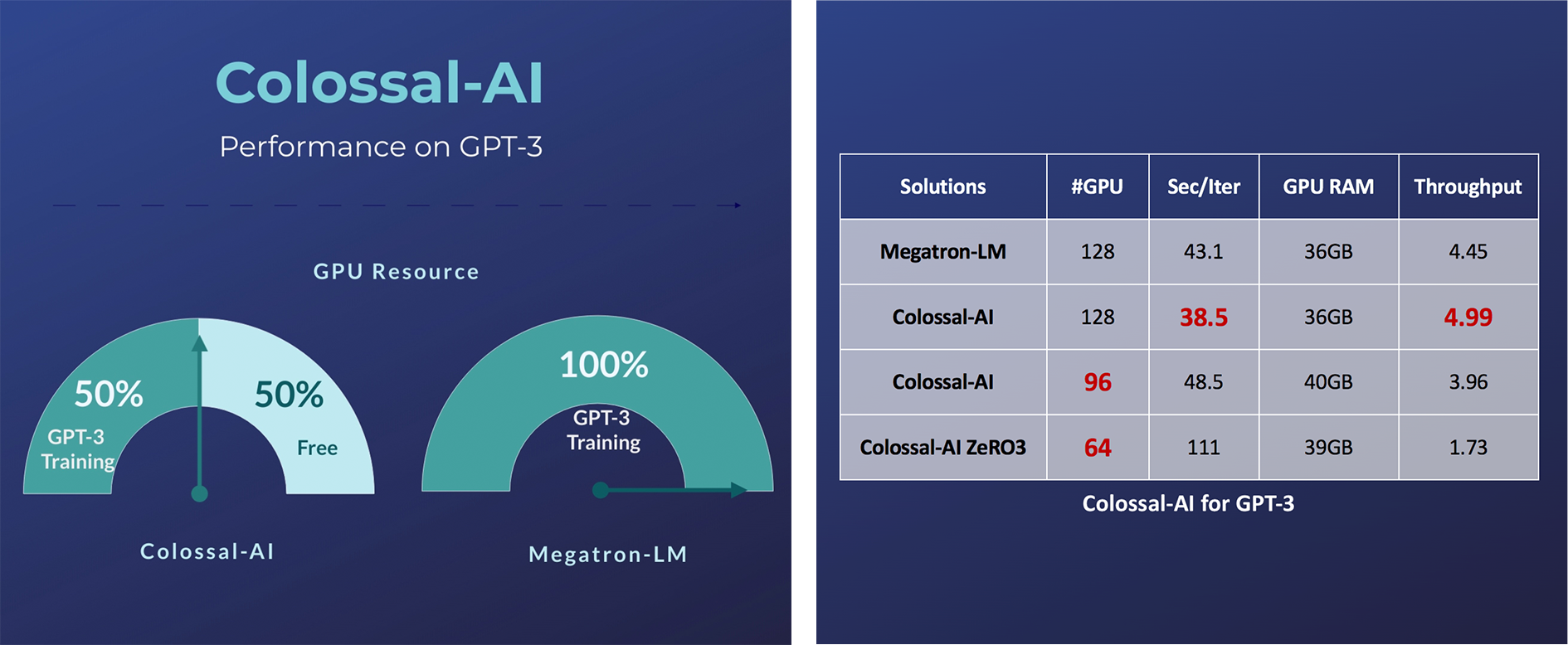

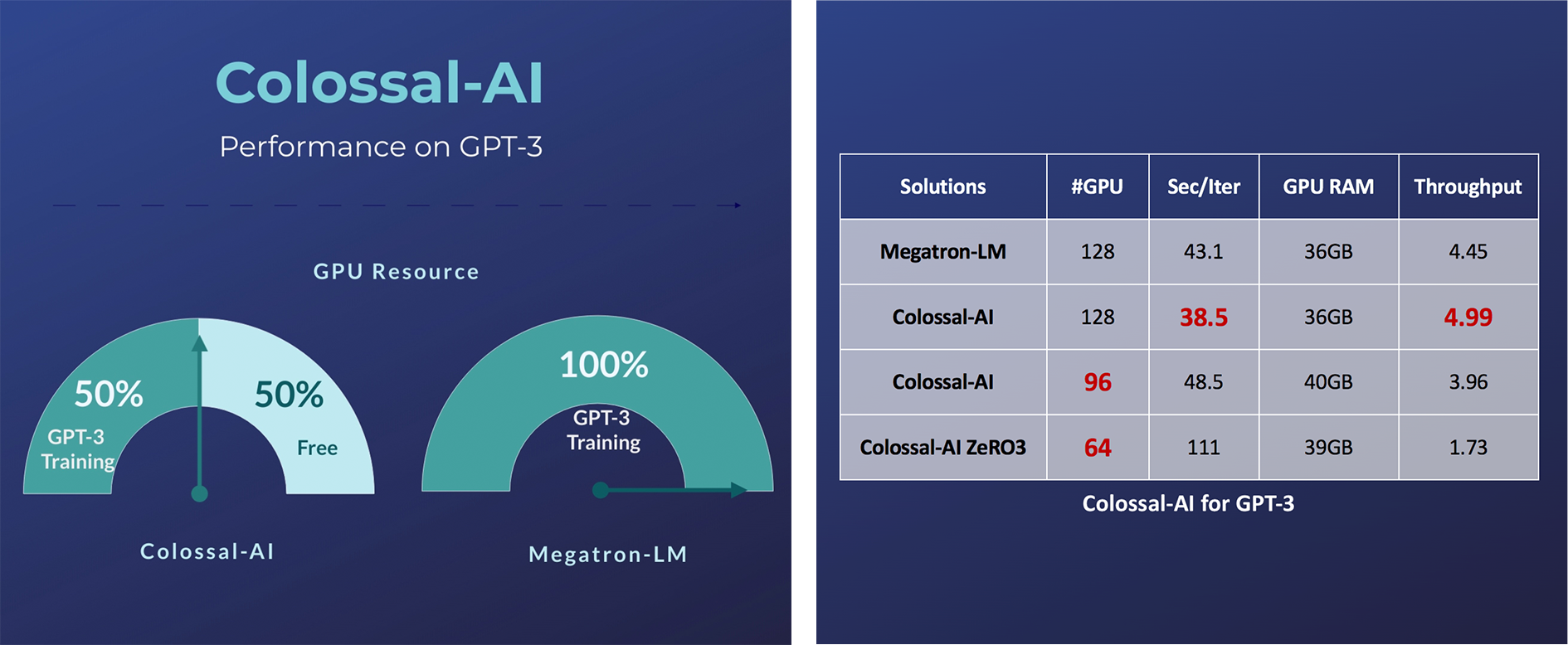

### GPT-3

+

+

+

- Save 50% GPU resources, and 10.7% acceleration

+

+ +

+ +

+