diff --git a/README-zh-Hans.md b/README-zh-Hans.md
index 4b0ba9c42..e16db47f9 100644
--- a/README-zh-Hans.md
+++ b/README-zh-Hans.md
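@@ -3,7 +3,7 @@
[](https://www.colossalai.org/)
- Colossal-AI: A general-purpose deep learning system for the large-model era
+ Colossal-AI: Making large AI models cheaper, easier to use, and efficiently scalable
Paper |
Documentation |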
@@ -23,10 +23,10 @@
## News
+* [2023/02] [Open source solution replicates ChatGPT training process! Ready to go with only 1.6GB GPU memory](https://www.hpc-ai.tech/blog/colossal-ai-chatgpt)
* [2023/01] [Hardware Savings Up to 46 Times for AIGC and Automatic Parallelism](https://www.hpc-ai.tech/blog/colossal-ai-0-2-0)
* [2022/11] [Diffusion Pretraining and Hardware Fine-Tuning Can Be Almost 7X Cheaper](https://www.hpc-ai.tech/blog/diffusion-pretraining-and-hardware-fine-tuning-can-be-almost-7x-cheaper)
* [2022/10] [Use a Laptop to Analyze 90% of Proteins, With a Single-GPU Inference Sequence Exceeding 10,000](https://www.hpc-ai.tech/blog/use-a-laptop-to-analyze-90-of-proteins-with-a-single-gpu-inference-sequence-exceeding)
-* [2022/10] [Embedding Training With 1% GPU Memory and 100 Times Less Budget for Super-Large Recommendation Model](https://www.hpc-ai.tech/blog/embedding-training-with-1-gpu-memory-and-10-times-less-budget-an-open-source-solution-for)
* [2022/09] [HPC-AI Tech Completes $6 Million Seed and Angel Round Fundraising](https://www.hpc-ai.tech/blog/hpc-ai-tech-completes-6-million-seed-and-angel-round-fundraising-led-by-bluerun-ventures-in-the)
@@ -64,6 +64,7 @@
Colossal-AI Success Stories
@@ -209,6 +210,29 @@ Colossal-AI provides you with a series of parallel components. Our goal is to make your
(back to top)
## Colossal-AI Success Stories
+### ChatGPT
+Low-cost replication of the complete [ChatGPT](https://openai.com/blog/chatgpt/) pipeline [[code]](https://github.com/hpcaitech/ColossalAI/tree/main/applications/ChatGPT) [[blog]](https://www.hpc-ai.tech/blog/colossal-ai-chatgpt)
+

+ +

+

+

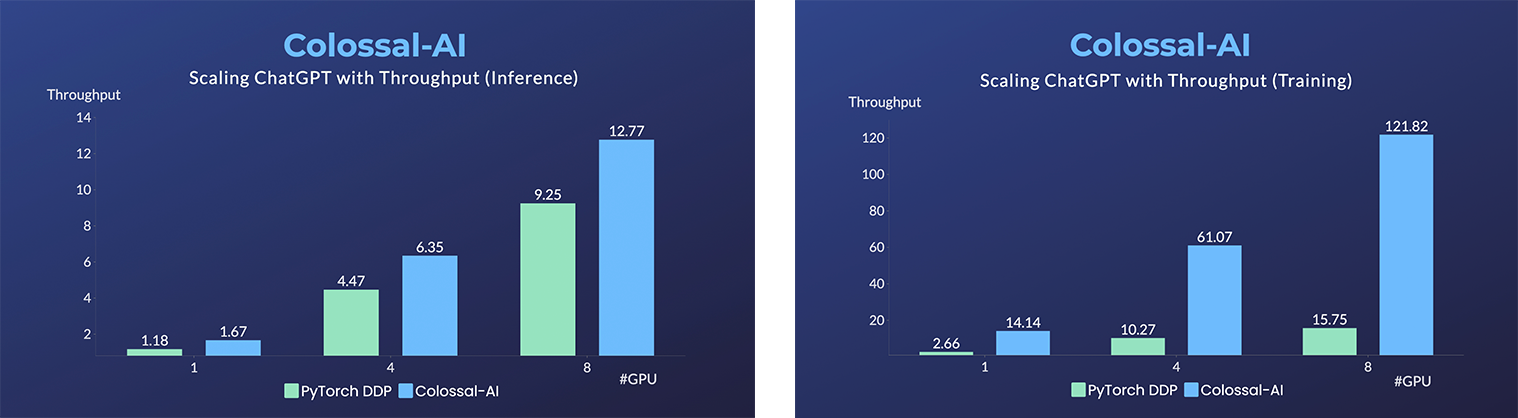

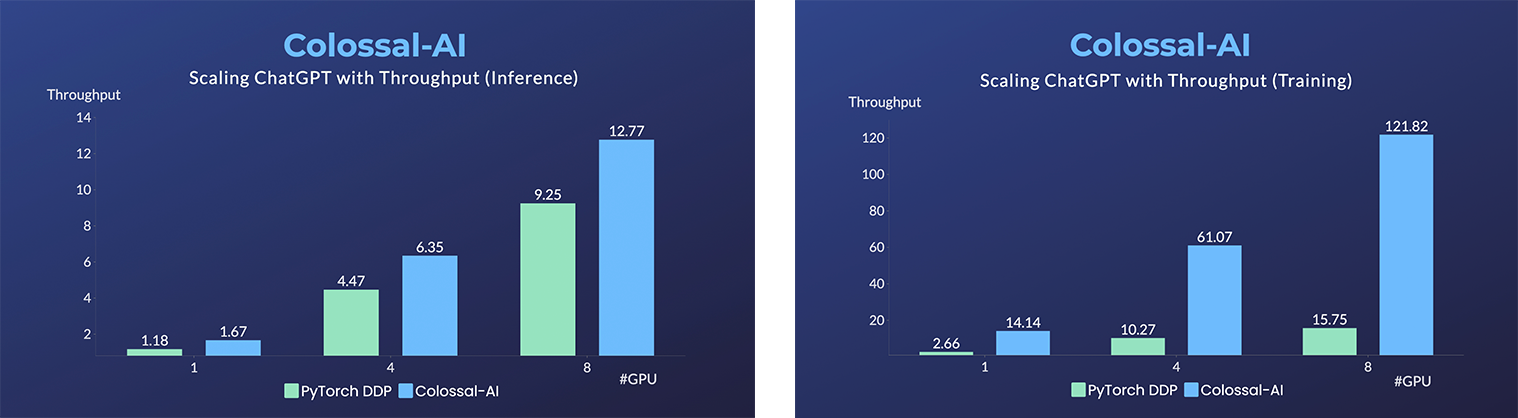

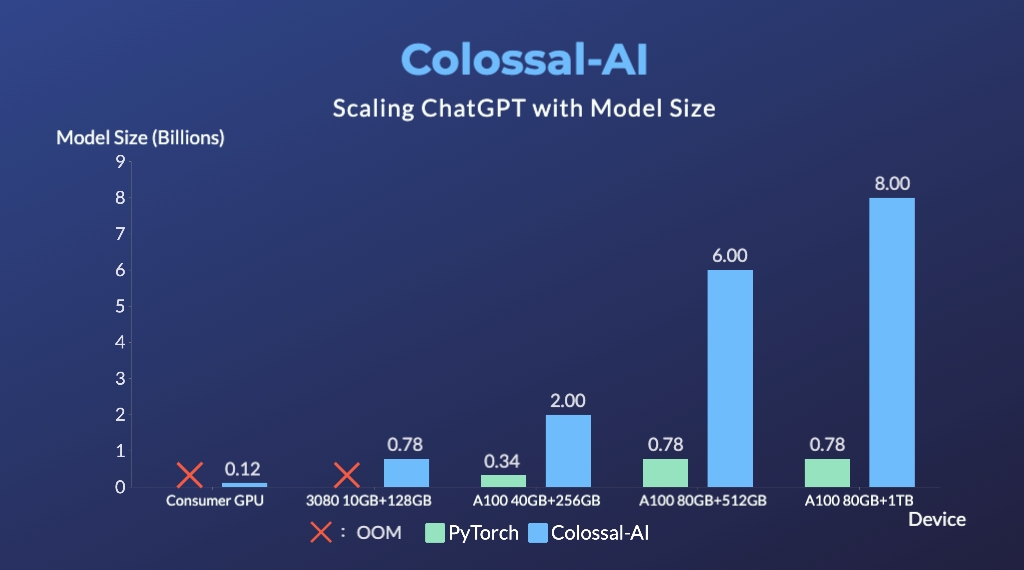

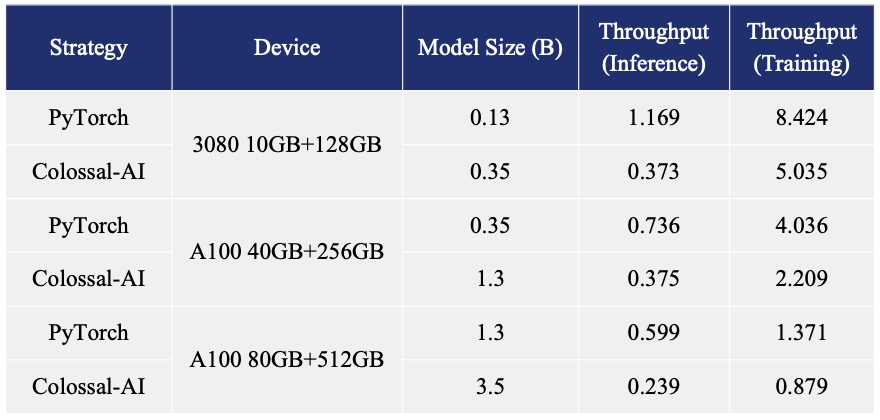

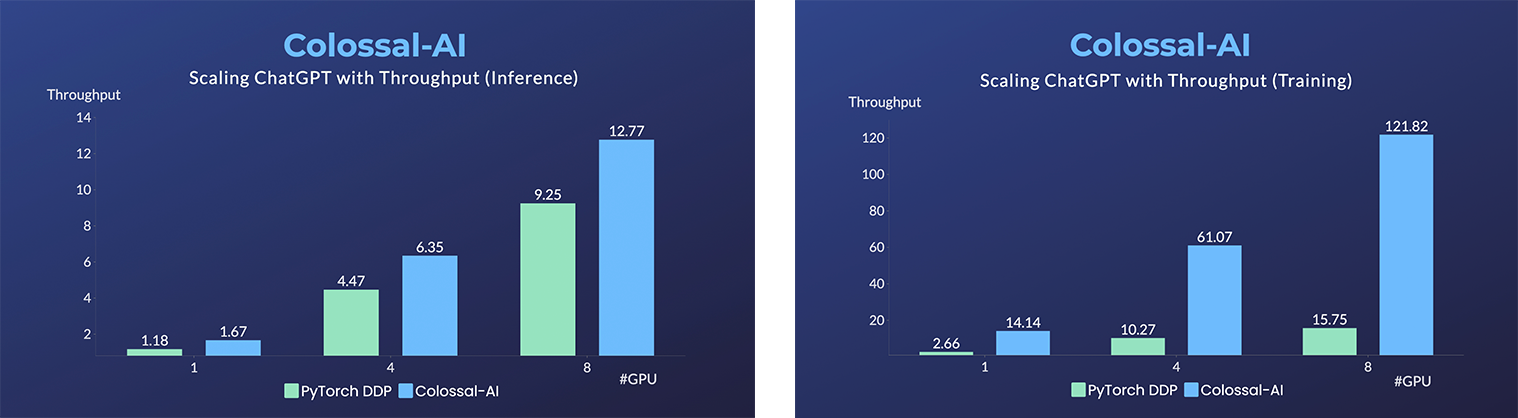

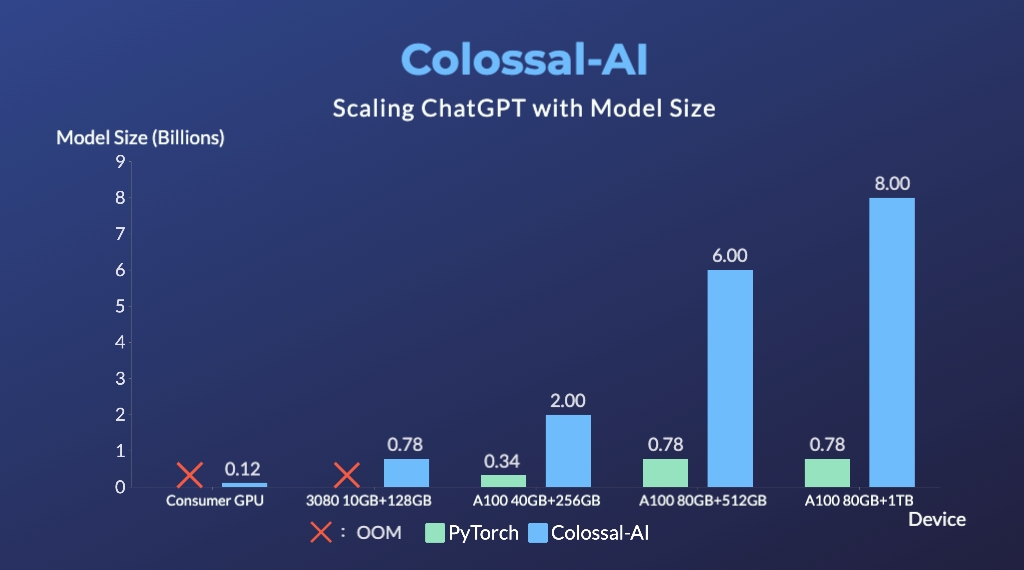

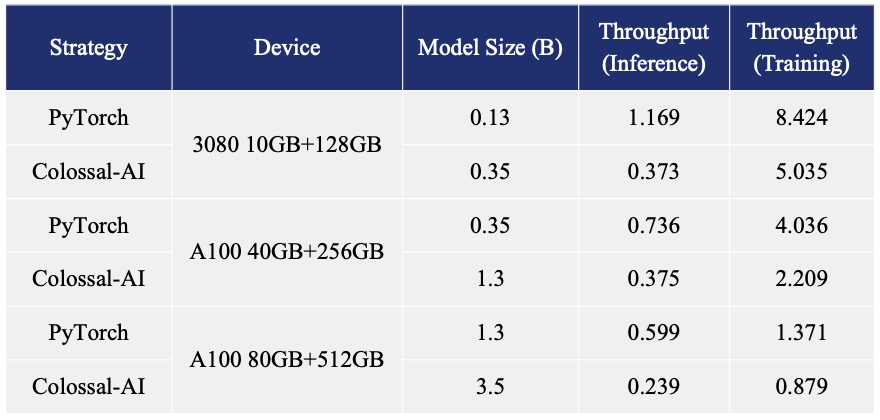

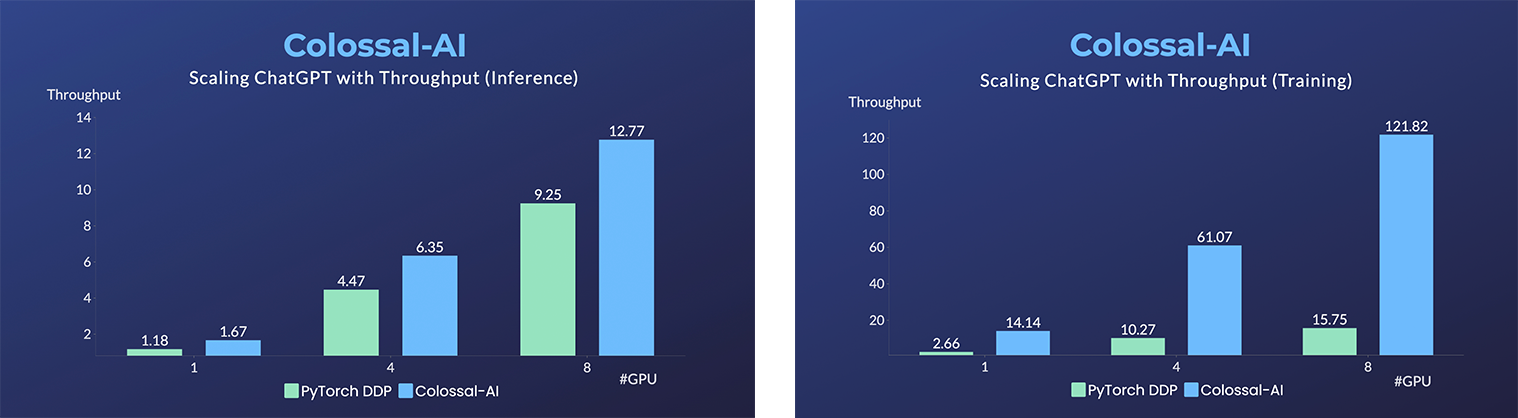

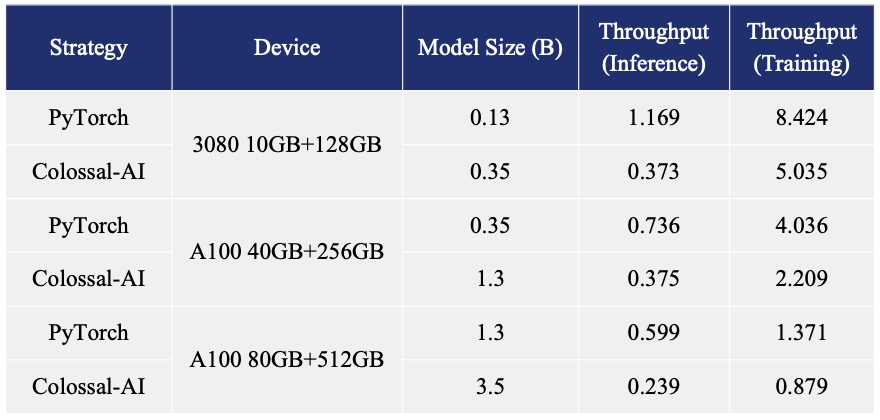

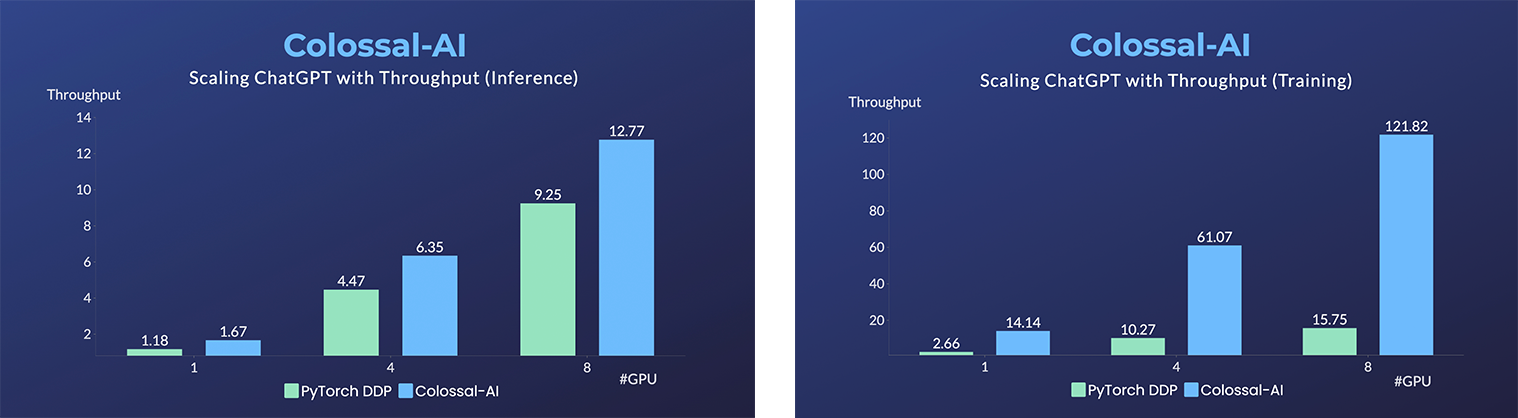

+- Up to 7.73x faster single-server training and 1.42x faster single-GPU inference
+

+

+ +

+

+

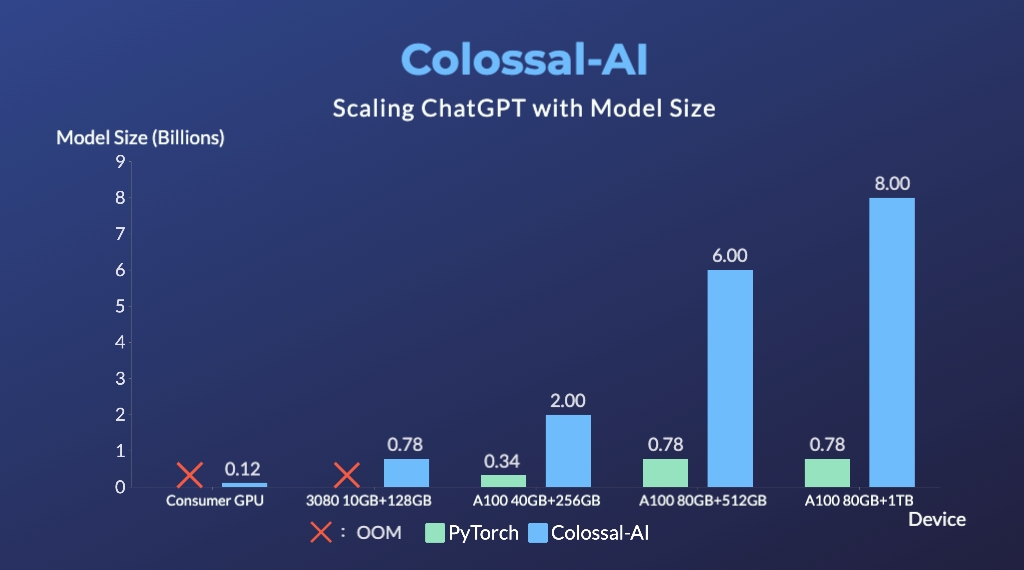

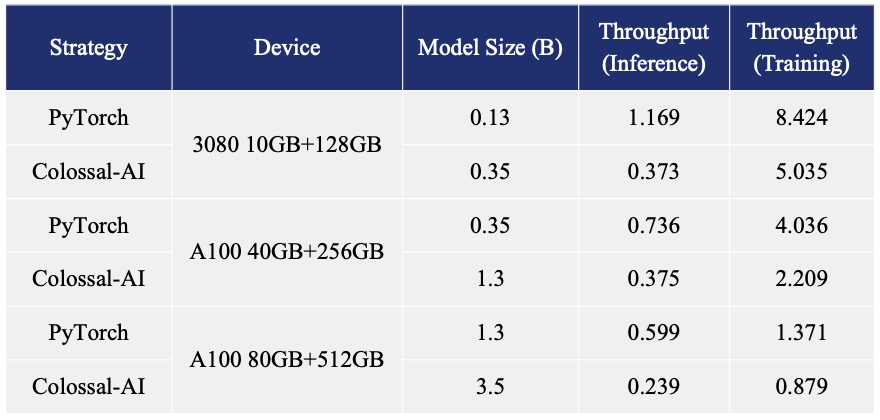

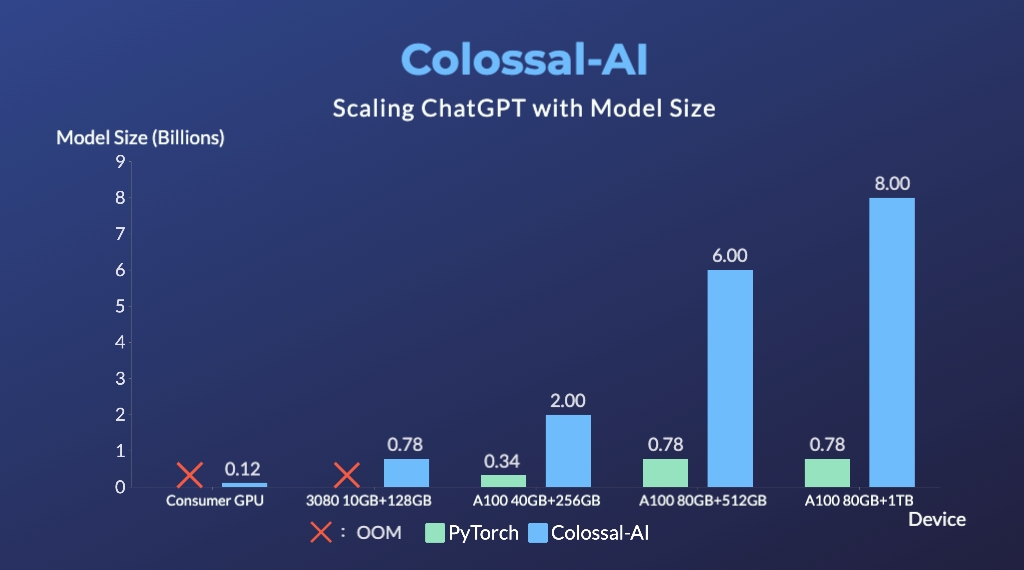

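+- Up to 10.3x greater model capacity on a single GPU
+- The minimal demo training process needs as little as 1.62GB of GPU memory (any consumer-grade GPU)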
+
+- Up to 3.7x greater fine-tuning model capacity on a single GPU
+- Maintains high running speed at the same time
+
+(back to top)
### AIGC
Acceleration of AIGC (AI-Generated Content) models such as [Stable Diffusion v1](https://github.com/CompVis/stable-diffusion) and [Stable Diffusion v2](https://github.com/Stability-AI/stablediffusion)
diff --git a/README.md b/README.md
index 703e3f3bf..e4ffca890 100644
--- a/README.md
+++ b/README.md
@@ -3,7 +3,7 @@
[](https://www.colossalai.org/)
- Colossal-AI: A Unified Deep Learning System for Big Model Era
+ Colossal-AI: Make big AI models cheaper, easier, and more scalable
Paper |
Documentation |
@@ -24,10 +24,10 @@
## Latest News
+* [2023/02] [Open source solution replicates ChatGPT training process! Ready to go with only 1.6GB GPU memory](https://www.hpc-ai.tech/blog/colossal-ai-chatgpt)
* [2023/01] [Hardware Savings Up to 46 Times for AIGC and Automatic Parallelism](https://www.hpc-ai.tech/blog/colossal-ai-0-2-0)
* [2022/11] [Diffusion Pretraining and Hardware Fine-Tuning Can Be Almost 7X Cheaper](https://www.hpc-ai.tech/blog/diffusion-pretraining-and-hardware-fine-tuning-can-be-almost-7x-cheaper)
* [2022/10] [Use a Laptop to Analyze 90% of Proteins, With a Single-GPU Inference Sequence Exceeding 10,000](https://www.hpc-ai.tech/blog/use-a-laptop-to-analyze-90-of-proteins-with-a-single-gpu-inference-sequence-exceeding)
-* [2022/10] [Embedding Training With 1% GPU Memory and 100 Times Less Budget for Super-Large Recommendation Model](https://www.hpc-ai.tech/blog/embedding-training-with-1-gpu-memory-and-10-times-less-budget-an-open-source-solution-for)
* [2022/09] [HPC-AI Tech Completes $6 Million Seed and Angel Round Fundraising](https://www.hpc-ai.tech/blog/hpc-ai-tech-completes-6-million-seed-and-angel-round-fundraising-led-by-bluerun-ventures-in-the)
## Table of Contents
@@ -64,6 +64,7 @@
Colossal-AI for Real World Applications
@@ -211,6 +212,30 @@ Please visit our [documentation](https://www.colossalai.org/) and [examples](htt
(back to top)
## Colossal-AI in the Real World
+### ChatGPT
+A low-cost replication of the complete [ChatGPT](https://openai.com/blog/chatgpt/) training process. [[code]](https://github.com/hpcaitech/ColossalAI/tree/main/applications/ChatGPT) [[blog]](https://www.hpc-ai.tech/blog/colossal-ai-chatgpt)
+
+- Up to 7.73 times faster for single-server training and 1.42 times faster for single-GPU inference
+

+

+ +

+

+

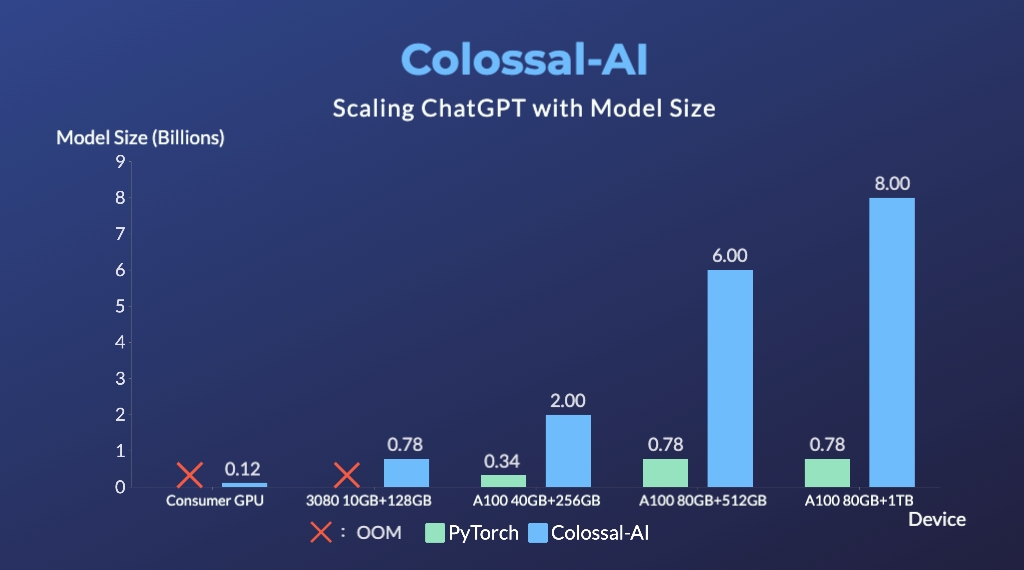

+- Up to 10.3x growth in model capacity on one GPU
+- A mini demo training process requires only 1.62GB of GPU memory (any consumer-grade GPU)
+

+

+ +

+

+

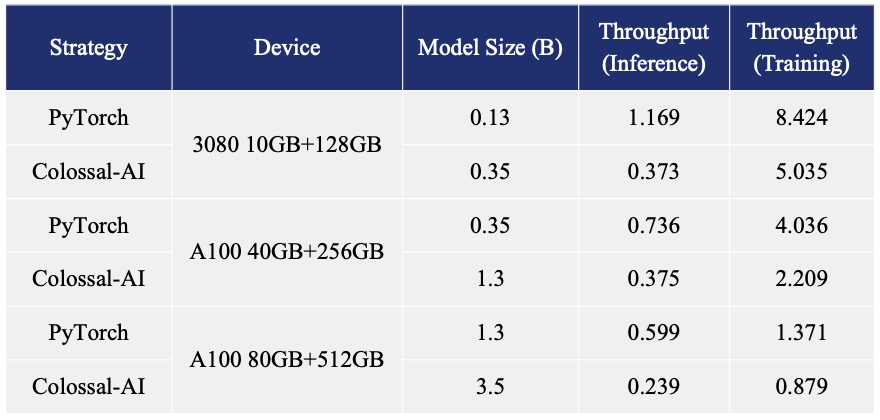

+- Increase the capacity of the fine-tuning model by up to 3.7 times on a single GPU
+- Maintain a sufficiently high running speed
+
+(back to top)
+
### AIGC
Acceleration of AIGC (AI-Generated Content) models such as [Stable Diffusion v1](https://github.com/CompVis/stable-diffusion) and [Stable Diffusion v2](https://github.com/Stability-AI/stablediffusion).