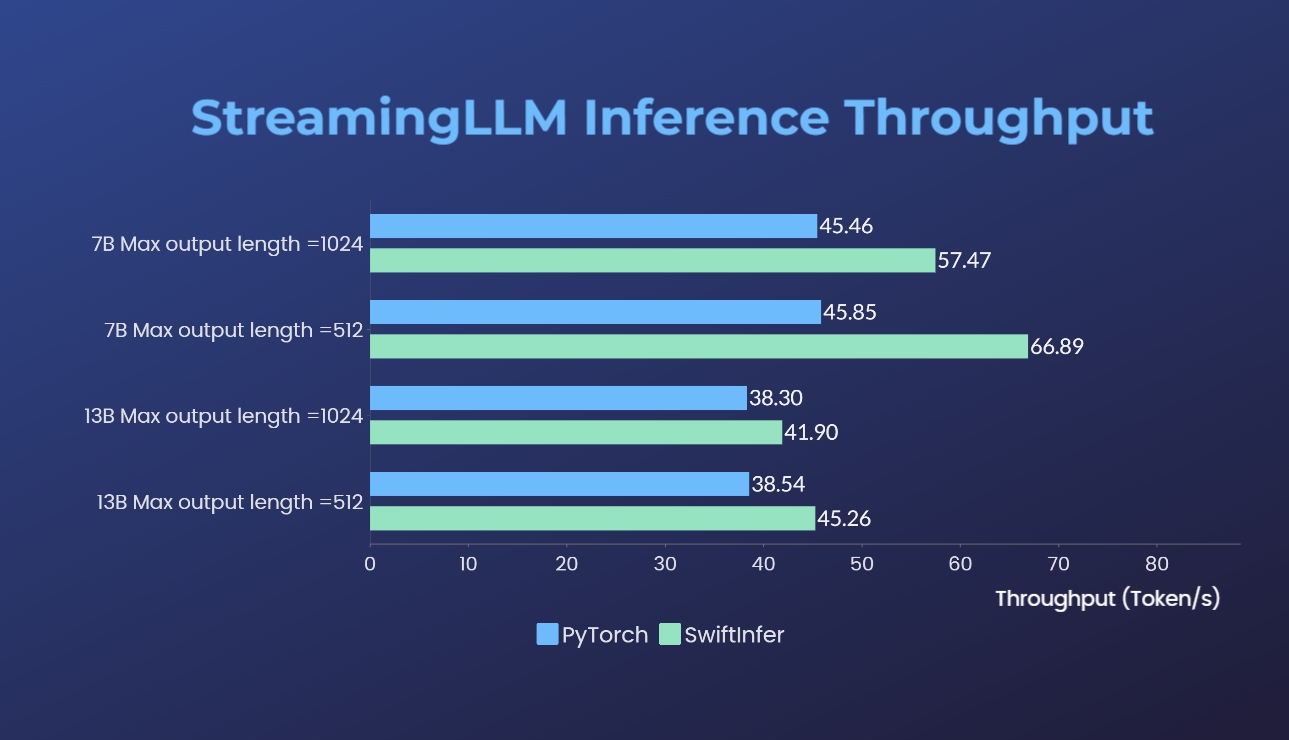

- SwiftInfer: Breaks the Length Limit of LLM for Multi-Round Conversations with 46% Acceleration

- GPT-3

- OPT-175B Online Serving for Text Generation

- 176B BLOOM

@@ -121,9 +123,6 @@ distributed training and inference in a few lines.

- Friendly Usage

  - Parallelism based on the configuration file

-- Inference

-  - [Energon-AI](https://github.com/hpcaitech/EnergonAI)

-

## Colossal-AI in the Real World

@@ -220,7 +219,7 @@ Acceleration of AIGC (AI-Generated Content) models such as [Stable Diffusion v1]

- [DreamBooth Fine-tuning](https://github.com/hpcaitech/ColossalAI/tree/main/examples/images/dreambooth): Personalize your model using just 3-5 images of the desired subject.

-


diff --git a/applications/README.md b/applications/README.md

index 92096e559..49a2900f1 100644

--- a/applications/README.md

+++ b/applications/README.md

@@ -9,6 +9,7 @@ The list of applications include:

- [X] [ColossalChat](./Chat/README.md): Replication of ChatGPT with RLHF.

- [X] [FastFold](https://github.com/hpcaitech/FastFold): Optimizing AlphaFold (Biomedicine) Training and Inference on GPU Clusters.

- [X] [ColossalQA](./ColossalQA/README.md): Document Retrieval Conversation System

+- [X] [SwiftInfer](https://github.com/hpcaitech/SwiftInfer): Breaks the Length Limit of LLM Inference for Multi-Round Conversations

> Please note that the `Chatbot` application is migrated from the original `ChatGPT` folder.

diff --git a/docs/README-zh-Hans.md b/docs/README-zh-Hans.md

index a0330a62d..0c438c726 100644

--- a/docs/README-zh-Hans.md

+++ b/docs/README-zh-Hans.md

@@ -24,6 +24,7 @@

## News

+* [2024/01] [Inference Performance Improved by 46%, Open Source Solution Breaks the Length Limit of LLM for Multi-Round Conversations](https://hpc-ai.com/blog/Colossal-AI-SwiftInfer)

* [2024/01] [Construct Refined 13B Private Model With Just $5000 USD, Upgraded Colossal-AI Llama-2 Open Source](https://hpc-ai.com/blog/colossal-llama-2-13b)

* [2023/11] [Enhanced MoE Parallelism, Open-source MoE Model Training Can Be 9 Times More Efficient](https://www.hpc-ai.tech/blog/enhanced-moe-parallelism-open-source-moe-model-training-can-be-9-times-more-efficient)

* [2023/09] [One Half-Day of Training Using a Few Hundred Dollars Yields Similar Results to Mainstream Large Models, Open-Source and Commercial-Free Domain-Specific LLM Solution](https://www.hpc-ai.tech/blog/one-half-day-of-training-using-a-few-hundred-dollars-yields-similar-results-to-mainstream-large-models-open-source-and-commercial-free-domain-specific-llm-solution)

@@ -69,8 +70,9 @@
