diff --git a/README.md b/README.md

index 6ffbc85ba..1b0ca7e97 100644

--- a/README.md

+++ b/README.md

@@ -5,10 +5,10 @@

Colossal-AI: A Unified Deep Learning System for Big Model Era

- Paper |

- Documentation |

- Examples |

- Forum |

+

[](https://github.com/hpcaitech/ColossalAI/actions/workflows/build.yml)

@@ -17,7 +17,7 @@

[](https://huggingface.co/hpcai-tech)

[](https://join.slack.com/t/colossalaiworkspace/shared_invite/zt-z7b26eeb-CBp7jouvu~r0~lcFzX832w)

[](https://raw.githubusercontent.com/hpcaitech/public_assets/main/colossalai/img/WeChat.png)

-

+

| [English](README.md) | [中文](README-zh-Hans.md) |

@@ -35,7 +35,7 @@

Why Colossal-AI

Features

- Parallel Training Demo

+ Parallel Training Demo

- Single GPU Training Demo

+ Single GPU Training Demo

- Inference (Energon-AI) Demo

+ Inference (Energon-AI) Demo

- Colossal-AI for Real World Applications

+ Colossal-AI for Real World Applications

- AIGC: Acceleration of Stable Diffusion

- Biomedicine: Acceleration of AlphaFold Protein Structure

@@ -106,7 +106,7 @@ distributed training and inference in a few lines.

- [Zero Redundancy Optimizer (ZeRO)](https://arxiv.org/abs/1910.02054)

- [Auto-Parallelism](https://github.com/hpcaitech/ColossalAI/tree/main/examples/language/gpt/auto_parallel_with_gpt)

-- Heterogeneous Memory Management

+- Heterogeneous Memory Management

- [PatrickStar](https://arxiv.org/abs/2108.05818)

- Friendly Usage

@@ -115,7 +115,7 @@ distributed training and inference in a few lines.

- Inference

- [Energon-AI](https://github.com/hpcaitech/EnergonAI)

-- Colossal-AI in the Real World

+- Colossal-AI in the Real World

- Biomedicine: [FastFold](https://github.com/hpcaitech/FastFold) accelerates training and inference of AlphaFold protein structure prediction

(back to top)

@@ -149,7 +149,7 @@ distributed training and inference in a few lines.

- [Open Pretrained Transformer (OPT)](https://github.com/facebookresearch/metaseq), a 175-billion-parameter AI language model released by Meta, whose publicly available pretrained weights encourage AI programmers to build various downstream tasks and application deployments.

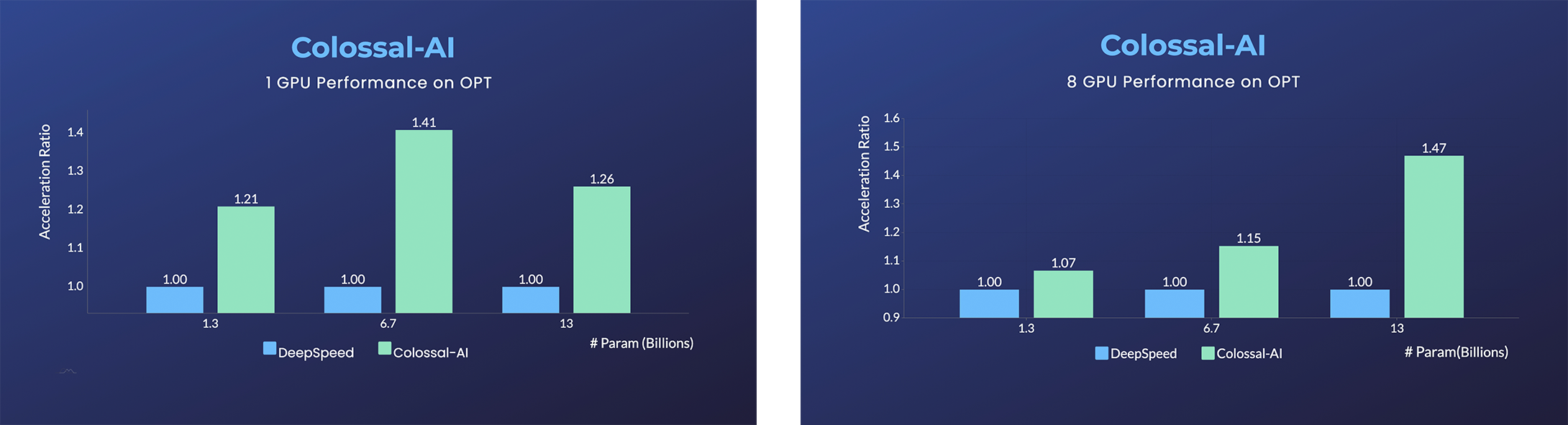

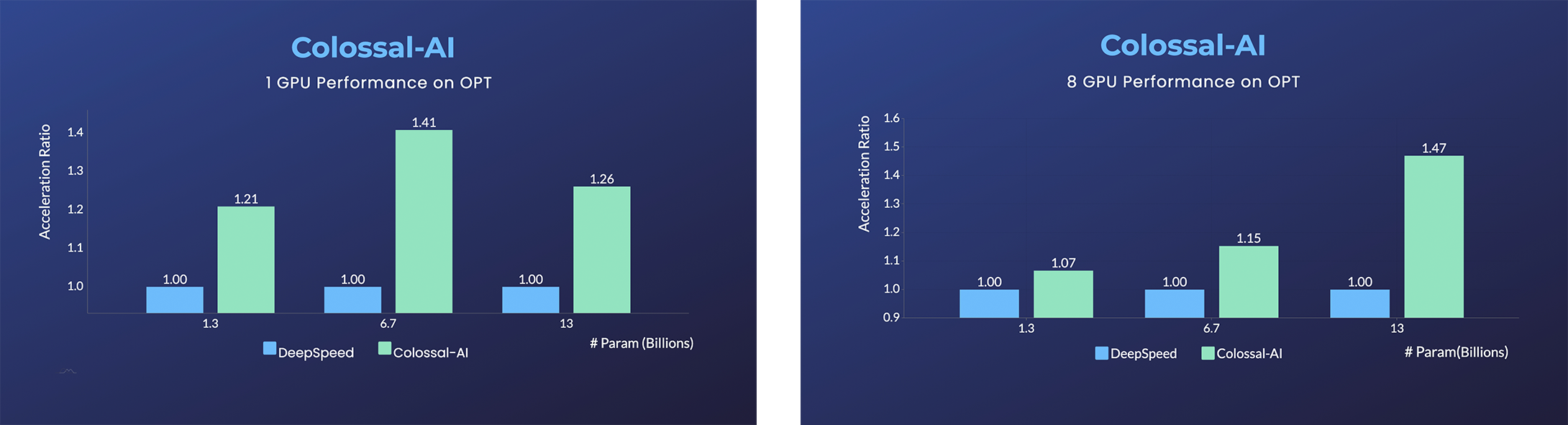

-- 45% speedup fine-tuning OPT at low cost in lines. [[Example]](https://github.com/hpcaitech/ColossalAI-Examples/tree/main/language/opt) [[Online Serving]](https://service.colossalai.org/opt)

+- 45% speedup when fine-tuning OPT at low cost, in just a few lines of code. [[Example]](https://github.com/hpcaitech/ColossalAI-Examples/tree/main/language/opt) [[Online Serving]](https://service.colossalai.org/opt)

Please visit our [documentation](https://www.colossalai.org/) and [examples](https://github.com/hpcaitech/ColossalAI-Examples) for more details.

@@ -277,10 +277,11 @@ pip install -r requirements/requirements.txt

pip install .

```

-If you don't want to install and enable CUDA kernel fusion (compulsory installation when using fused optimizer):

+By default, the CUDA/C++ kernels are not compiled during installation; ColossalAI builds them at runtime.

+If you want to build the kernels at install time and enable CUDA kernel fusion (required when using a fused optimizer):

```shell

-NO_CUDA_EXT=1 pip install .

+CUDA_EXT=1 pip install .

```

(back to top)
