Prof. James Demmel (UC Berkeley): Colossal-AI makes distributed training efficient, easy and scalable.

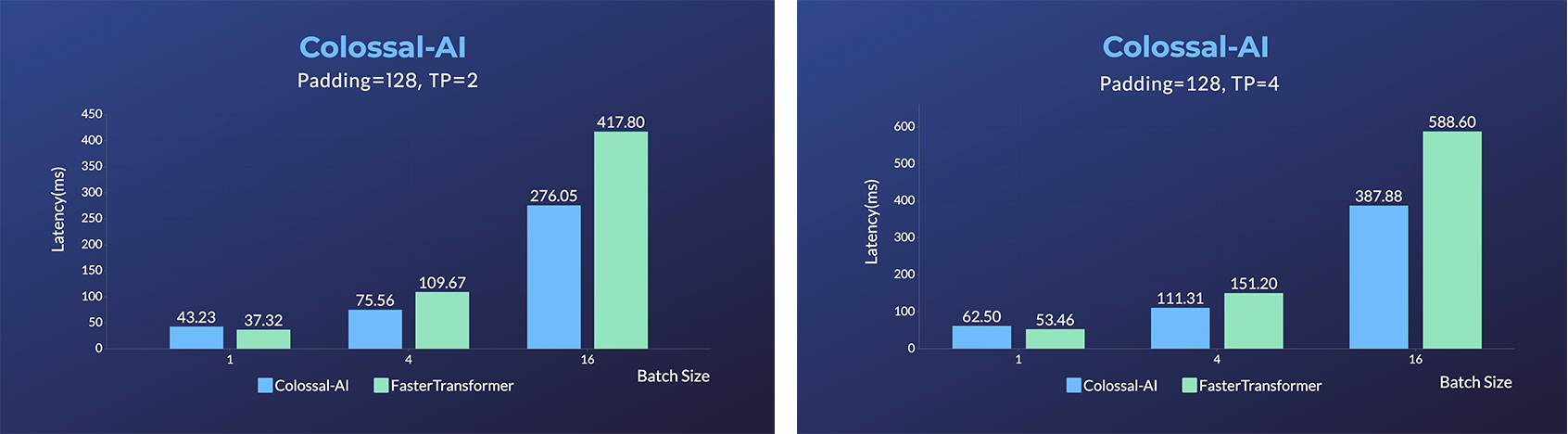

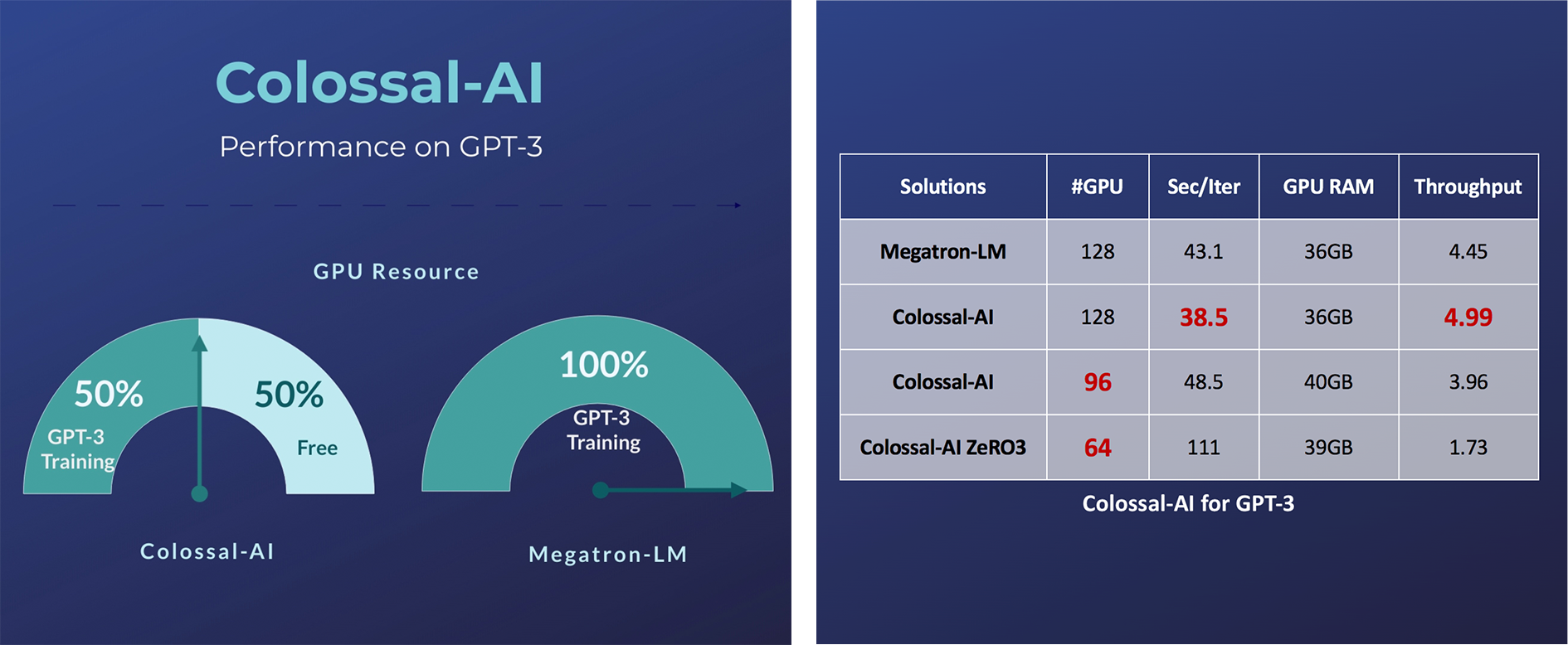

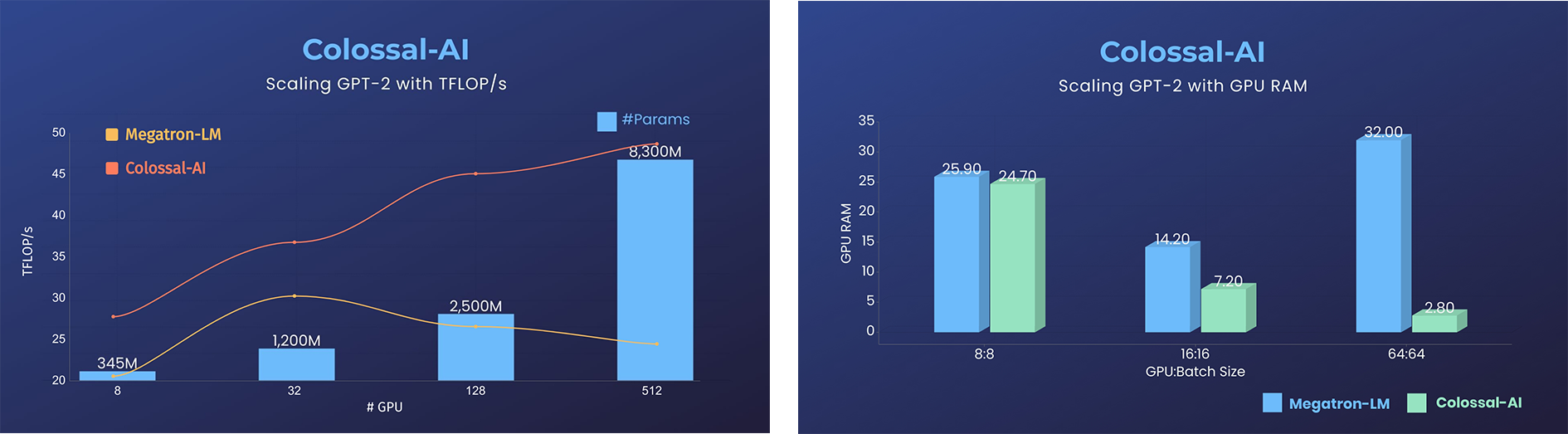

- 11x lower GPU memory consumption, and superlinear scaling efficiency with Tensor Parallelism

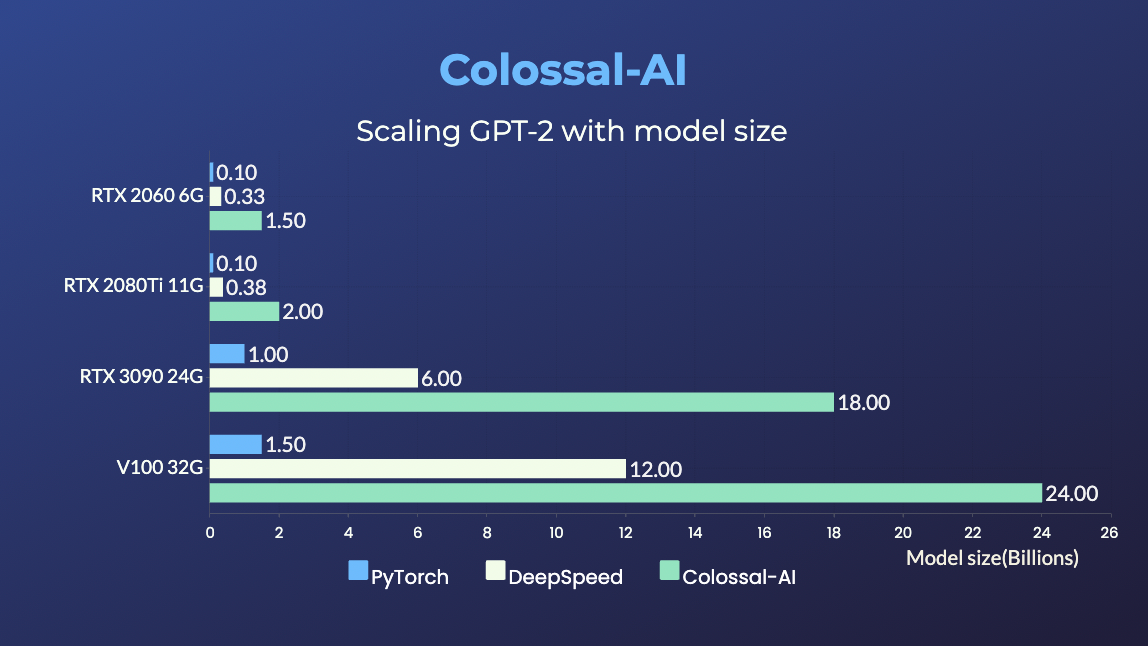

- 24x larger model size on the same hardware

- over 3x acceleration

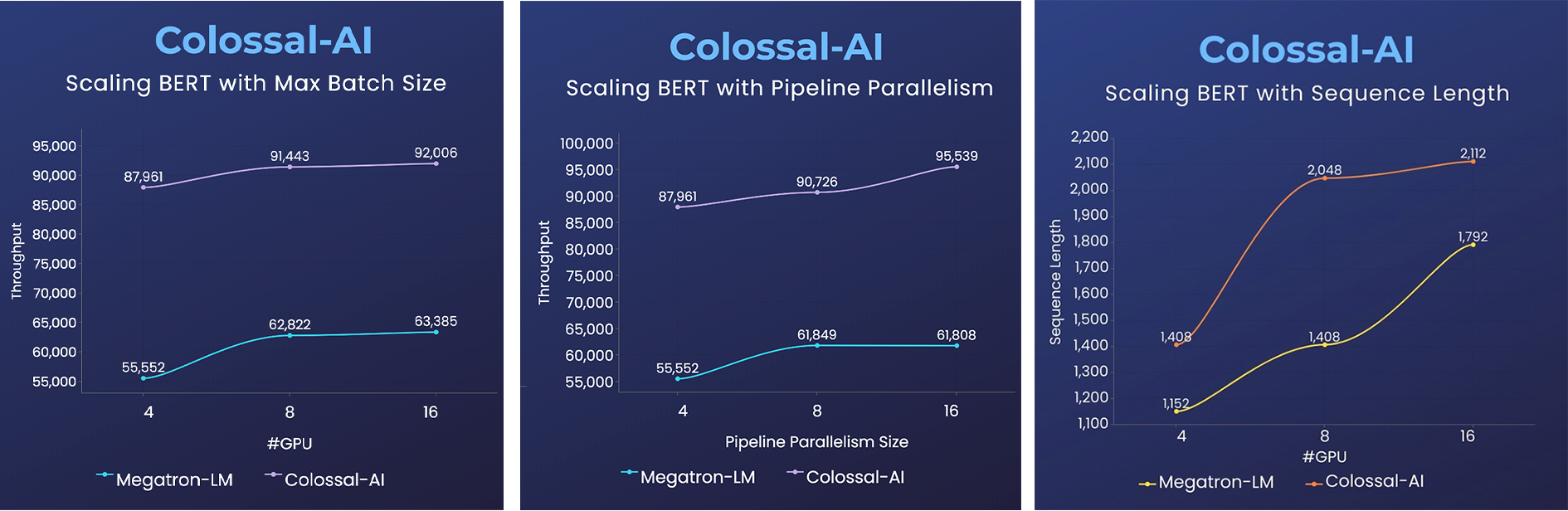

### BERT

- 2x faster training, or 50% longer sequence length

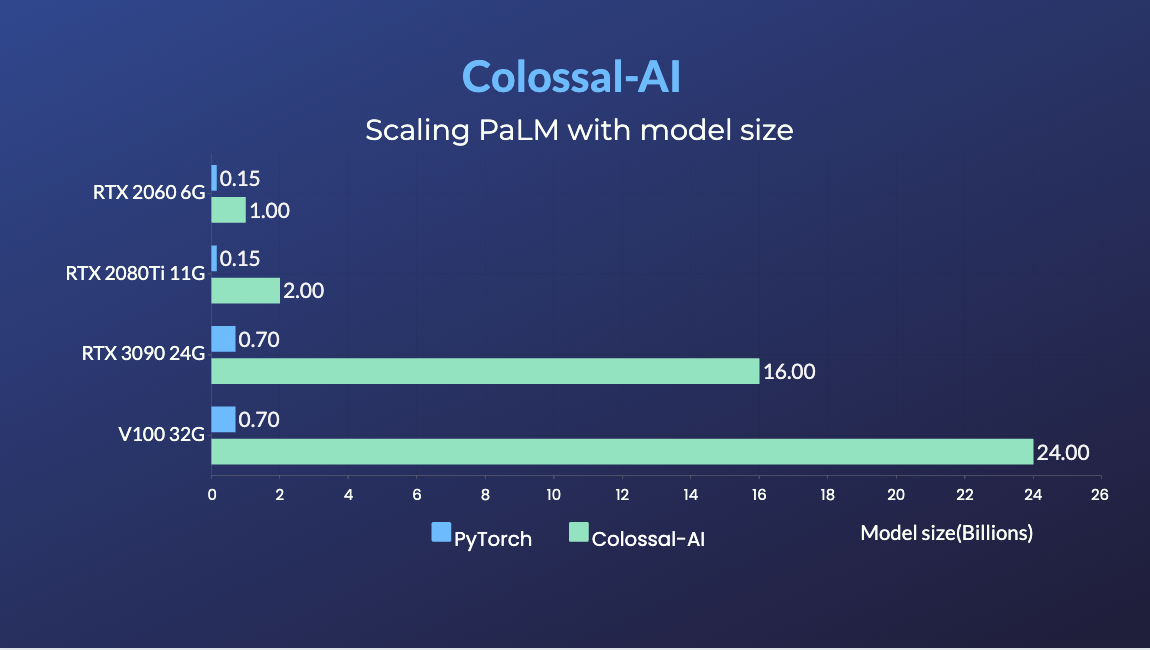

### PaLM

- [PaLM-colossalai](https://github.com/hpcaitech/PaLM-colossalai): Scalable implementation of Google's Pathways Language Model ([PaLM](https://ai.googleblog.com/2022/04/pathways-language-model-palm-scaling-to.html)).

Please visit our [documentation and tutorials](https://www.colossalai.org/) for more details.

## Single GPU Training Demo

### GPT-2
