Mirror of https://github.com/hpcaitech/ColossalAI.git (synced 2026-01-15 23:23:11 +00:00)

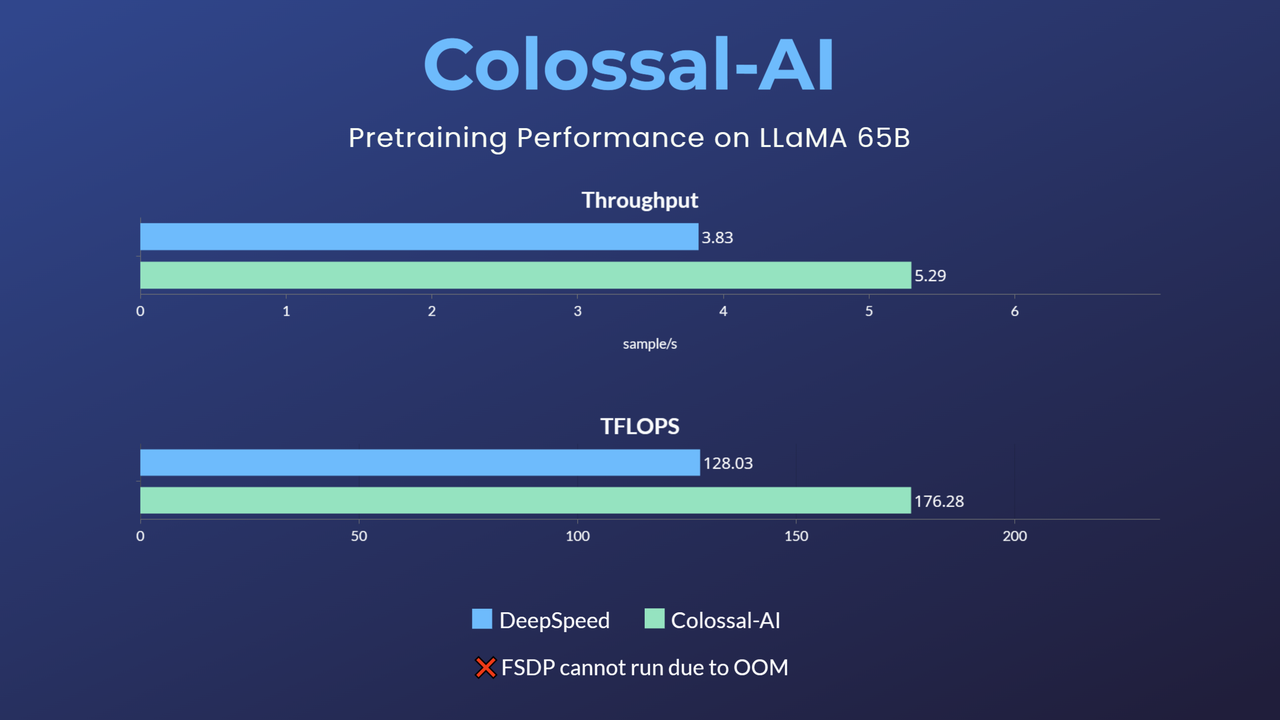

Pretraining LLaMA: best practices for building LLaMA-like base models

Because the main branch is under active development, this example is temporarily maintained on a separate branch to keep its code stable.