mirror of

https://github.com/csunny/DB-GPT.git

synced 2025-07-27 13:57:46 +00:00

feat(ChatKnowledge):add similarity score and query rewrite (#880)

This commit is contained in:

parent

13fb9d03a7

commit

54d5b0b804

@ -78,6 +78,8 @@ KNOWLEDGE_SEARCH_TOP_SIZE=5

|

|||||||

#KNOWLEDGE_CHUNK_OVERLAP=50

|

#KNOWLEDGE_CHUNK_OVERLAP=50

|

||||||

# Control whether to display the source document of knowledge on the front end.

|

# Control whether to display the source document of knowledge on the front end.

|

||||||

KNOWLEDGE_CHAT_SHOW_RELATIONS=False

|

KNOWLEDGE_CHAT_SHOW_RELATIONS=False

|

||||||

|

# Whether to enable Chat Knowledge Search Rewrite Mode

|

||||||

|

KNOWLEDGE_SEARCH_REWRITE=False

|

||||||

## EMBEDDING_TOKENIZER - Tokenizer to use for chunking large inputs

|

## EMBEDDING_TOKENIZER - Tokenizer to use for chunking large inputs

|

||||||

## EMBEDDING_TOKEN_LIMIT - Chunk size limit for large inputs

|

## EMBEDDING_TOKEN_LIMIT - Chunk size limit for large inputs

|

||||||

# EMBEDDING_MODEL=all-MiniLM-L6-v2

|

# EMBEDDING_MODEL=all-MiniLM-L6-v2

|

||||||

@ -92,16 +94,15 @@ KNOWLEDGE_CHAT_SHOW_RELATIONS=False

|

|||||||

|

|

||||||

|

|

||||||

#*******************************************************************#

|

#*******************************************************************#

|

||||||

#** DATABASE SETTINGS **#

|

#** DB-GPT METADATA DATABASE SETTINGS **#

|

||||||

#*******************************************************************#

|

#*******************************************************************#

|

||||||

### SQLite database (Current default database)

|

### SQLite database (Current default database)

|

||||||

LOCAL_DB_PATH=data/default_sqlite.db

|

|

||||||

LOCAL_DB_TYPE=sqlite

|

LOCAL_DB_TYPE=sqlite

|

||||||

|

|

||||||

### MYSQL database

|

### MYSQL database

|

||||||

# LOCAL_DB_TYPE=mysql

|

# LOCAL_DB_TYPE=mysql

|

||||||

# LOCAL_DB_USER=root

|

# LOCAL_DB_USER=root

|

||||||

# LOCAL_DB_PASSWORD=aa12345678

|

# LOCAL_DB_PASSWORD={your_password}

|

||||||

# LOCAL_DB_HOST=127.0.0.1

|

# LOCAL_DB_HOST=127.0.0.1

|

||||||

# LOCAL_DB_PORT=3306

|

# LOCAL_DB_PORT=3306

|

||||||

# LOCAL_DB_NAME=dbgpt

|

# LOCAL_DB_NAME=dbgpt

|

||||||

|

|||||||

54

docker/examples/metadata/duckdb2mysql.py

Normal file

54

docker/examples/metadata/duckdb2mysql.py

Normal file

@ -0,0 +1,54 @@

|

|||||||

|

import duckdb

|

||||||

|

import pymysql

|

||||||

|

|

||||||

|

""" migrate duckdb to mysql"""

|

||||||

|

|

||||||

|

mysql_config = {

|

||||||

|

"host": "127.0.0.1",

|

||||||

|

"user": "root",

|

||||||

|

"password": "your_password",

|

||||||

|

"db": "dbgpt",

|

||||||

|

"charset": "utf8mb4",

|

||||||

|

"cursorclass": pymysql.cursors.DictCursor,

|

||||||

|

}

|

||||||

|

|

||||||

|

duckdb_files_to_tables = {

|

||||||

|

"pilot/message/chat_history.db": "chat_history",

|

||||||

|

"pilot/message/connect_config.db": "connect_config",

|

||||||

|

}

|

||||||

|

|

||||||

|

conn_mysql = pymysql.connect(**mysql_config)

|

||||||

|

|

||||||

|

|

||||||

|

def migrate_table(duckdb_file_path, source_table, destination_table, conn_mysql):

|

||||||

|

conn_duckdb = duckdb.connect(duckdb_file_path)

|

||||||

|

try:

|

||||||

|

cursor = conn_duckdb.cursor()

|

||||||

|

cursor.execute(f"SELECT * FROM {source_table}")

|

||||||

|

column_names = [

|

||||||

|

desc[0] for desc in cursor.description if desc[0].lower() != "id"

|

||||||

|

]

|

||||||

|

select_columns = ", ".join(column_names)

|

||||||

|

|

||||||

|

cursor.execute(f"SELECT {select_columns} FROM {source_table}")

|

||||||

|

results = cursor.fetchall()

|

||||||

|

|

||||||

|

with conn_mysql.cursor() as cursor_mysql:

|

||||||

|

for row in results:

|

||||||

|

placeholders = ", ".join(["%s"] * len(row))

|

||||||

|

insert_query = f"INSERT INTO {destination_table} ({', '.join(column_names)}) VALUES ({placeholders})"

|

||||||

|

cursor_mysql.execute(insert_query, row)

|

||||||

|

conn_mysql.commit()

|

||||||

|

finally:

|

||||||

|

conn_duckdb.close()

|

||||||

|

|

||||||

|

|

||||||

|

try:

|

||||||

|

for duckdb_file, table in duckdb_files_to_tables.items():

|

||||||

|

print(f"Migrating table {table} from {duckdb_file}...")

|

||||||

|

migrate_table(duckdb_file, table, table, conn_mysql)

|

||||||

|

print(f"Table {table} migrated successfully.")

|

||||||

|

finally:

|

||||||

|

conn_mysql.close()

|

||||||

|

|

||||||

|

print("Migration completed.")

|

||||||

48

docker/examples/metadata/duckdb2sqlite.py

Normal file

48

docker/examples/metadata/duckdb2sqlite.py

Normal file

@ -0,0 +1,48 @@

|

|||||||

|

import duckdb

|

||||||

|

import sqlite3

|

||||||

|

|

||||||

|

""" migrate duckdb to sqlite"""

|

||||||

|

|

||||||

|

duckdb_files_to_tables = {

|

||||||

|

"pilot/message/chat_history.db": "chat_history",

|

||||||

|

"pilot/message/connect_config.db": "connect_config",

|

||||||

|

}

|

||||||

|

|

||||||

|

sqlite_db_path = "pilot/meta_data/dbgpt.db"

|

||||||

|

|

||||||

|

conn_sqlite = sqlite3.connect(sqlite_db_path)

|

||||||

|

|

||||||

|

|

||||||

|

def migrate_table(duckdb_file_path, source_table, destination_table, conn_sqlite):

|

||||||

|

conn_duckdb = duckdb.connect(duckdb_file_path)

|

||||||

|

try:

|

||||||

|

cursor_duckdb = conn_duckdb.cursor()

|

||||||

|

cursor_duckdb.execute(f"SELECT * FROM {source_table}")

|

||||||

|

column_names = [

|

||||||

|

desc[0] for desc in cursor_duckdb.description if desc[0].lower() != "id"

|

||||||

|

]

|

||||||

|

select_columns = ", ".join(column_names)

|

||||||

|

|

||||||

|

cursor_duckdb.execute(f"SELECT {select_columns} FROM {source_table}")

|

||||||

|

results = cursor_duckdb.fetchall()

|

||||||

|

|

||||||

|

cursor_sqlite = conn_sqlite.cursor()

|

||||||

|

for row in results:

|

||||||

|

placeholders = ", ".join(["?"] * len(row))

|

||||||

|

insert_query = f"INSERT INTO {destination_table} ({', '.join(column_names)}) VALUES ({placeholders})"

|

||||||

|

cursor_sqlite.execute(insert_query, row)

|

||||||

|

conn_sqlite.commit()

|

||||||

|

cursor_sqlite.close()

|

||||||

|

finally:

|

||||||

|

conn_duckdb.close()

|

||||||

|

|

||||||

|

|

||||||

|

try:

|

||||||

|

for duckdb_file, table in duckdb_files_to_tables.items():

|

||||||

|

print(f"Migrating table {table} from {duckdb_file} to SQLite...")

|

||||||

|

migrate_table(duckdb_file, table, table, conn_sqlite)

|

||||||

|

print(f"Table {table} migrated to SQLite successfully.")

|

||||||

|

finally:

|

||||||

|

conn_sqlite.close()

|

||||||

|

|

||||||

|

print("Migration to SQLite completed.")

|

||||||

@ -1 +1,111 @@

|

|||||||

# RAG Parameter Adjustment

|

# RAG Parameter Adjustment

|

||||||

|

Each knowledge space supports argument customization, including the relevant arguments for vector retrieval and the arguments for knowledge question-answering prompts.

|

||||||

|

|

||||||

|

As shown in the figure below, clicking on the "Knowledge" will trigger a pop-up dialog box. Click the "Arguments" button to enter the parameter tuning interface.

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

<Tabs

|

||||||

|

defaultValue="Embedding"

|

||||||

|

values={[

|

||||||

|

{label: 'Embedding Argument', value: 'Embedding'},

|

||||||

|

{label: 'Prompt Argument', value: 'Prompt'},

|

||||||

|

{label: 'Summary Argument', value: 'Summary'},

|

||||||

|

]}>

|

||||||

|

<TabItem value="Embedding" label="Embedding Argument">

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

:::tip Embedding Arguments

|

||||||

|

* topk:the top k vectors based on similarity score.

|

||||||

|

* recall_score:set a similarity threshold score for the retrieval of similar vectors. between 0 and 1. default 0.3.

|

||||||

|

* recall_type:recall type. now nly support topk by vector similarity.

|

||||||

|

* model:A model used to create vector representations of text or other data.

|

||||||

|

* chunk_size:The size of the data chunks used in processing.default 500.

|

||||||

|

* chunk_overlap:The amount of overlap between adjacent data chunks.default 50.

|

||||||

|

:::

|

||||||

|

</TabItem>

|

||||||

|

|

||||||

|

<TabItem value="Prompt" label="Prompt Argument">

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

:::tip Prompt Arguments

|

||||||

|

* scene:A contextual parameter used to define the setting or environment in which the prompt is being used.

|

||||||

|

* template:A pre-defined structure or format for the prompt, which can help ensure that the AI system generates responses that are consistent with the desired style or tone.

|

||||||

|

* max_token:The maximum number of tokens or words allowed in a prompt.

|

||||||

|

:::

|

||||||

|

|

||||||

|

</TabItem>

|

||||||

|

|

||||||

|

<TabItem value="Summary" label="Summary Argument">

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

:::tip summary arguments

|

||||||

|

* max_iteration: summary max iteration call with llm, default 5. the bigger and better for document summary but time will cost longer.

|

||||||

|

* concurrency_limit: default summary concurrency call with llm, default 3.

|

||||||

|

:::

|

||||||

|

|

||||||

|

</TabItem>

|

||||||

|

|

||||||

|

</Tabs>

|

||||||

|

|

||||||

|

# Knowledge Query Rewrite

|

||||||

|

set ``KNOWLEDGE_SEARCH_REWRITE=True`` in ``.env`` file, and restart the server.

|

||||||

|

|

||||||

|

```shell

|

||||||

|

# Whether to enable Chat Knowledge Search Rewrite Mode

|

||||||

|

KNOWLEDGE_SEARCH_REWRITE=True

|

||||||

|

```

|

||||||

|

|

||||||

|

# Change Vector Database

|

||||||

|

import Tabs from '@theme/Tabs';

|

||||||

|

import TabItem from '@theme/TabItem';

|

||||||

|

|

||||||

|

<Tabs

|

||||||

|

defaultValue="Chroma"

|

||||||

|

values={[

|

||||||

|

{label: 'Chroma', value: 'Chroma'},

|

||||||

|

{label: 'Milvus', value: 'Milvus'},

|

||||||

|

{label: 'Weaviate', value: 'Weaviate'},

|

||||||

|

]}>

|

||||||

|

<TabItem value="Chroma" label="Chroma">

|

||||||

|

|

||||||

|

set ``VECTOR_STORE_TYPE`` in ``.env`` file.

|

||||||

|

|

||||||

|

```shell

|

||||||

|

### Chroma vector db config

|

||||||

|

VECTOR_STORE_TYPE=Chroma

|

||||||

|

#CHROMA_PERSIST_PATH=/root/DB-GPT/pilot/data

|

||||||

|

```

|

||||||

|

</TabItem>

|

||||||

|

|

||||||

|

<TabItem value="Milvus" label="Milvus">

|

||||||

|

|

||||||

|

|

||||||

|

set ``VECTOR_STORE_TYPE`` in ``.env`` file

|

||||||

|

|

||||||

|

```shell

|

||||||

|

### Milvus vector db config

|

||||||

|

VECTOR_STORE_TYPE=Milvus

|

||||||

|

MILVUS_URL=127.0.0.1

|

||||||

|

MILVUS_PORT=19530

|

||||||

|

#MILVUS_USERNAME

|

||||||

|

#MILVUS_PASSWORD

|

||||||

|

#MILVUS_SECURE=

|

||||||

|

```

|

||||||

|

</TabItem>

|

||||||

|

|

||||||

|

<TabItem value="Weaviate" label="Weaviate">

|

||||||

|

|

||||||

|

set ``VECTOR_STORE_TYPE`` in ``.env`` file

|

||||||

|

|

||||||

|

```shell

|

||||||

|

### Weaviate vector db config

|

||||||

|

VECTOR_STORE_TYPE=Weaviate

|

||||||

|

#WEAVIATE_URL=https://kt-region-m8hcy0wc.weaviate.network

|

||||||

|

```

|

||||||

|

|

||||||

|

</TabItem>

|

||||||

|

</Tabs>

|

||||||

|

|||||||

@ -1,2 +0,0 @@

|

|||||||

# FAQ

|

|

||||||

If you encounter any problems, you can submit an [issue](https://github.com/eosphoros-ai/DB-GPT/issues) on Github.

|

|

||||||

55

docs/docs/faq/chatdata.md

Normal file

55

docs/docs/faq/chatdata.md

Normal file

@ -0,0 +1,55 @@

|

|||||||

|

ChatData & ChatDB

|

||||||

|

==================================

|

||||||

|

ChatData generates SQL from natural language and executes it. ChatDB involves conversing with metadata from the

|

||||||

|

Database, including metadata about databases, tables, and

|

||||||

|

fields.

|

||||||

|

|

||||||

|

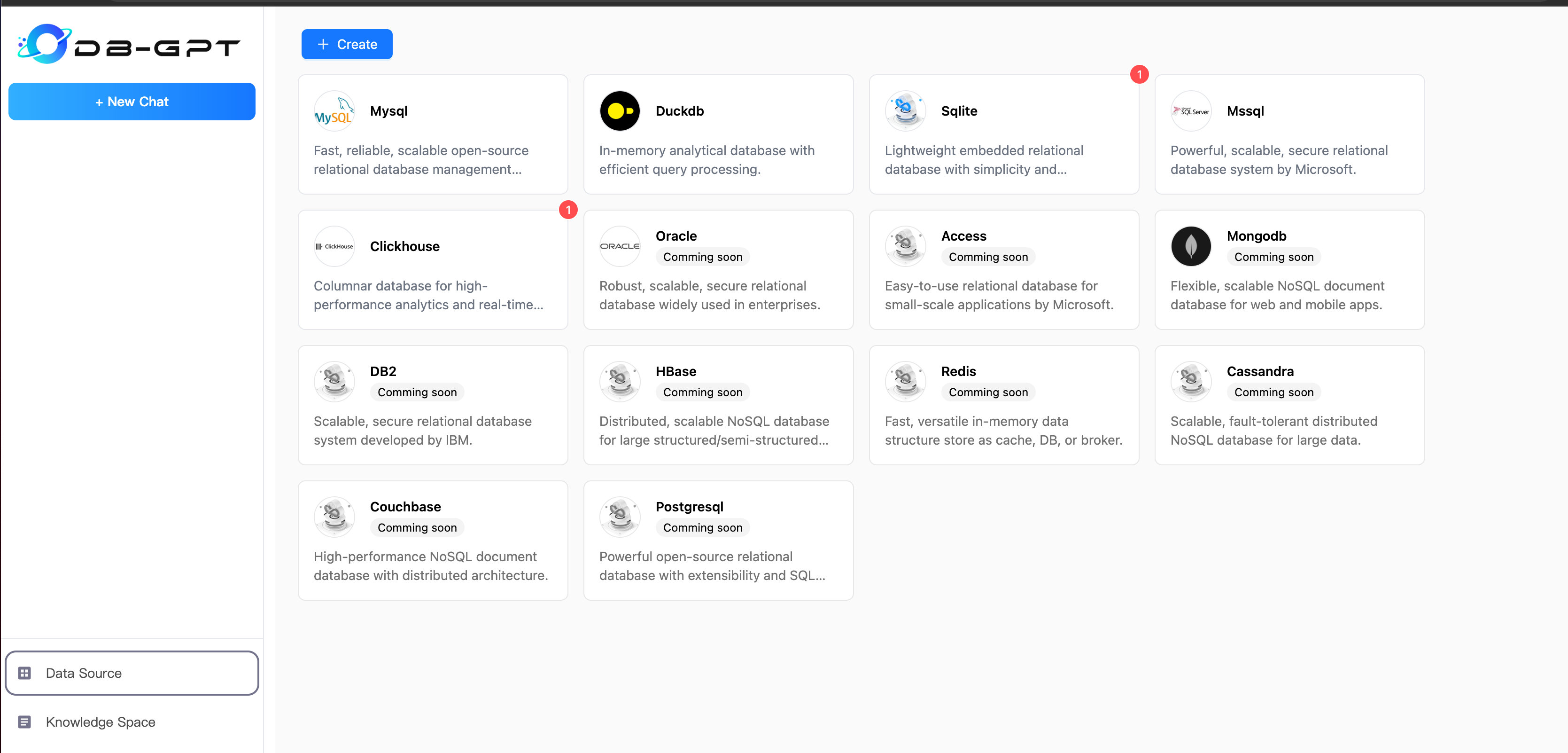

### 1.Choose Datasource

|

||||||

|

|

||||||

|

If you are using DB-GPT for the first time, you need to add a data source and set the relevant connection information

|

||||||

|

for the data source.

|

||||||

|

|

||||||

|

```{tip}

|

||||||

|

there are some example data in DB-GPT-NEW/DB-GPT/docker/examples

|

||||||

|

|

||||||

|

you can execute sql script to generate data.

|

||||||

|

```

|

||||||

|

|

||||||

|

#### 1.1 Datasource management

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

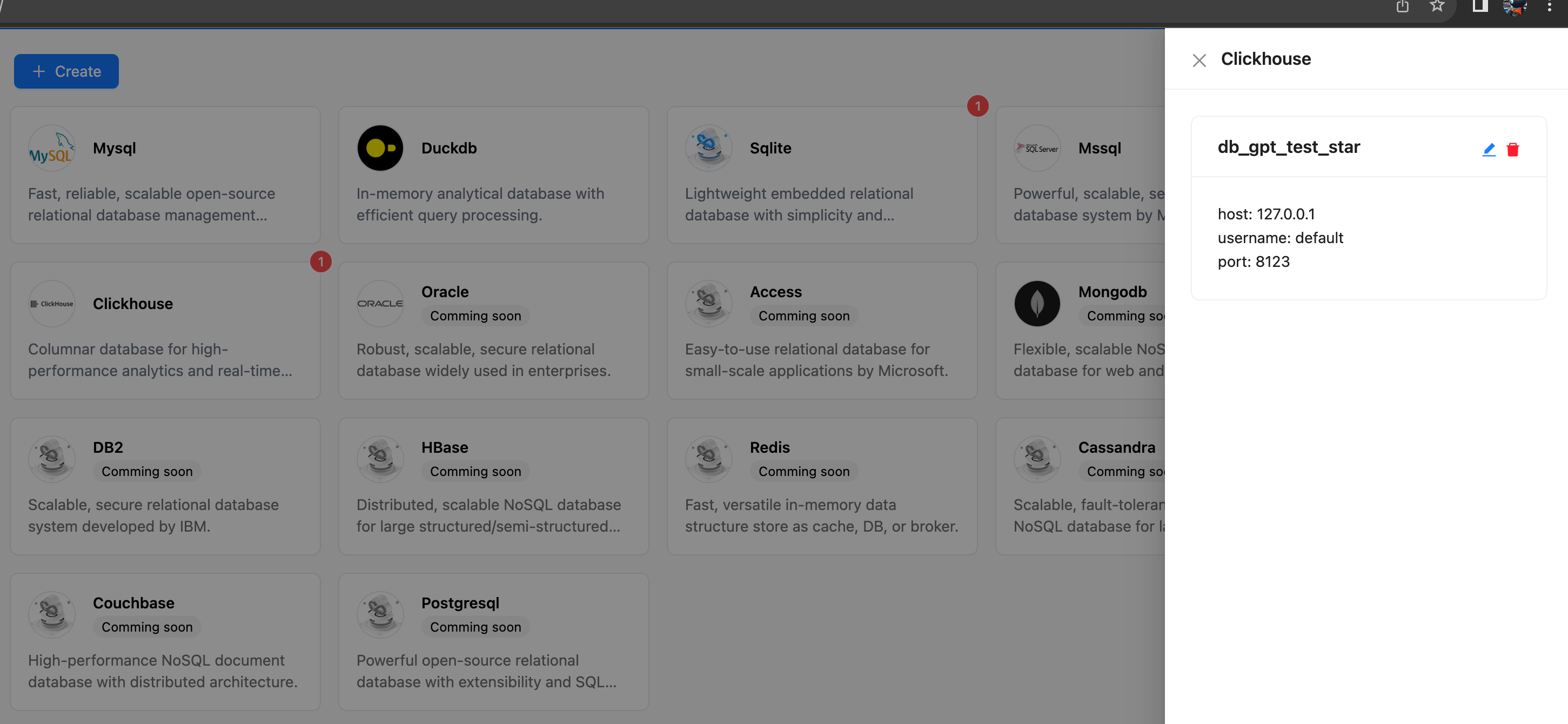

#### 1.2 Connection management

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

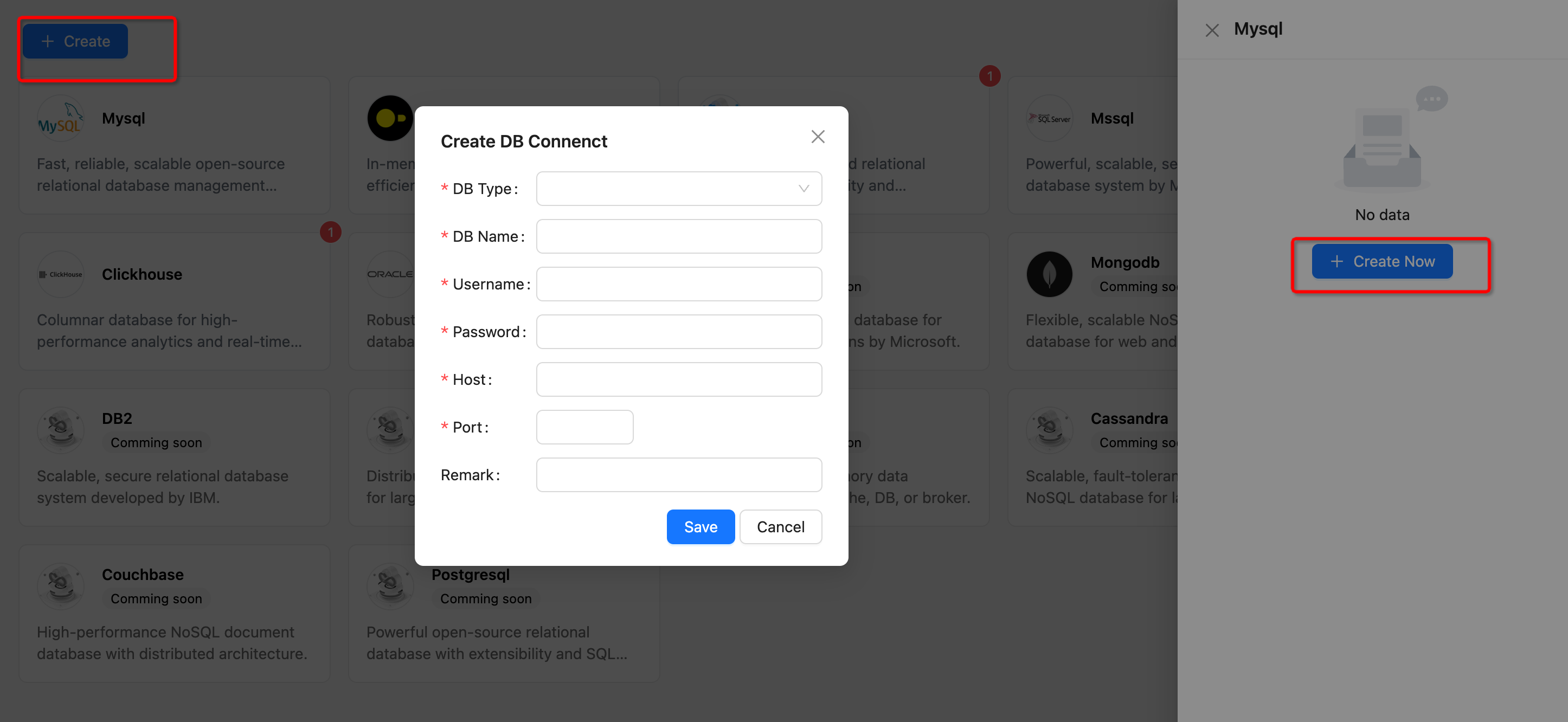

#### 1.3 Add Datasource

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

```{note}

|

||||||

|

now DB-GPT support Datasource Type

|

||||||

|

|

||||||

|

* Mysql

|

||||||

|

* Sqlite

|

||||||

|

* DuckDB

|

||||||

|

* Clickhouse

|

||||||

|

* Mssql

|

||||||

|

```

|

||||||

|

|

||||||

|

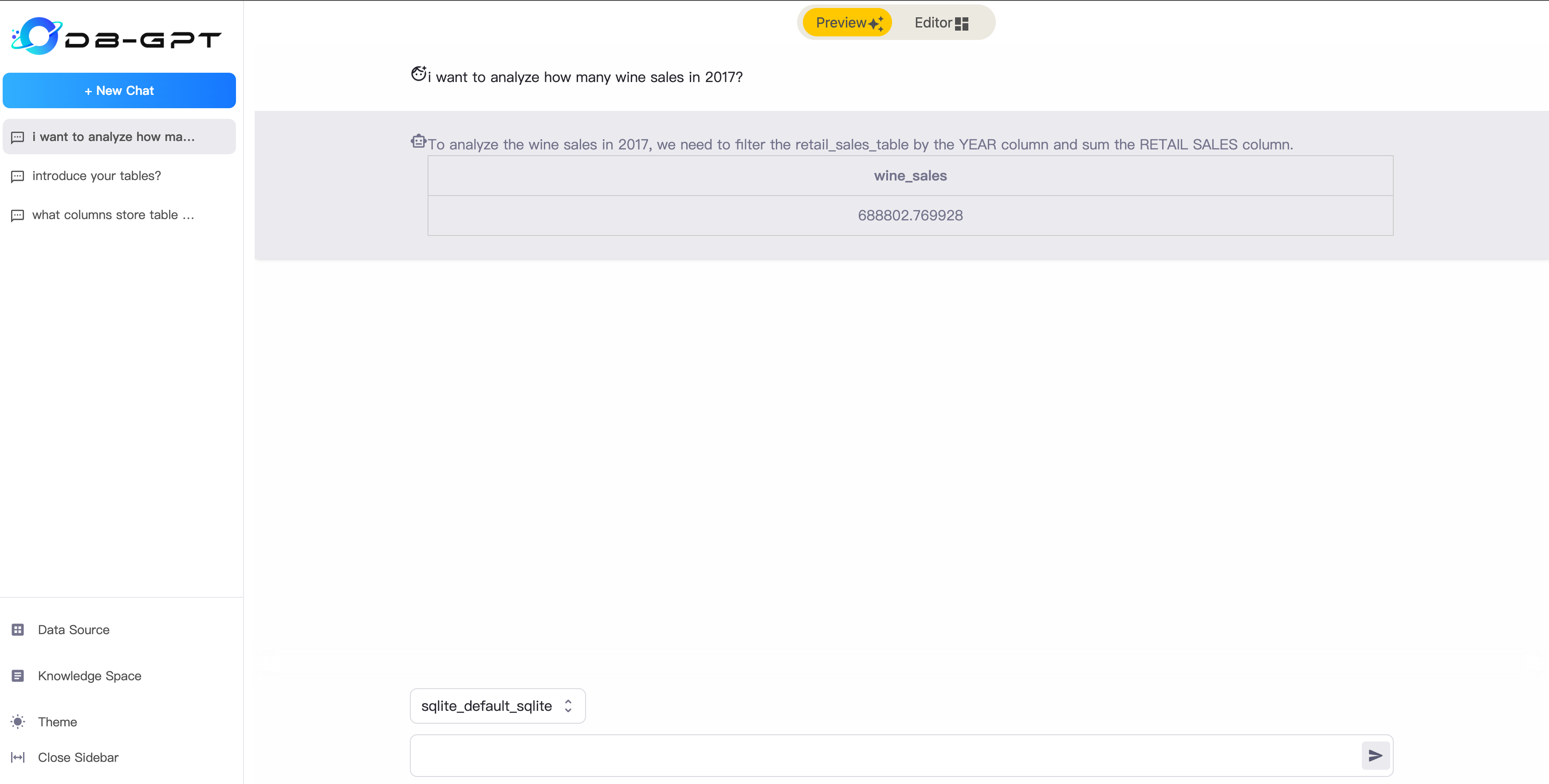

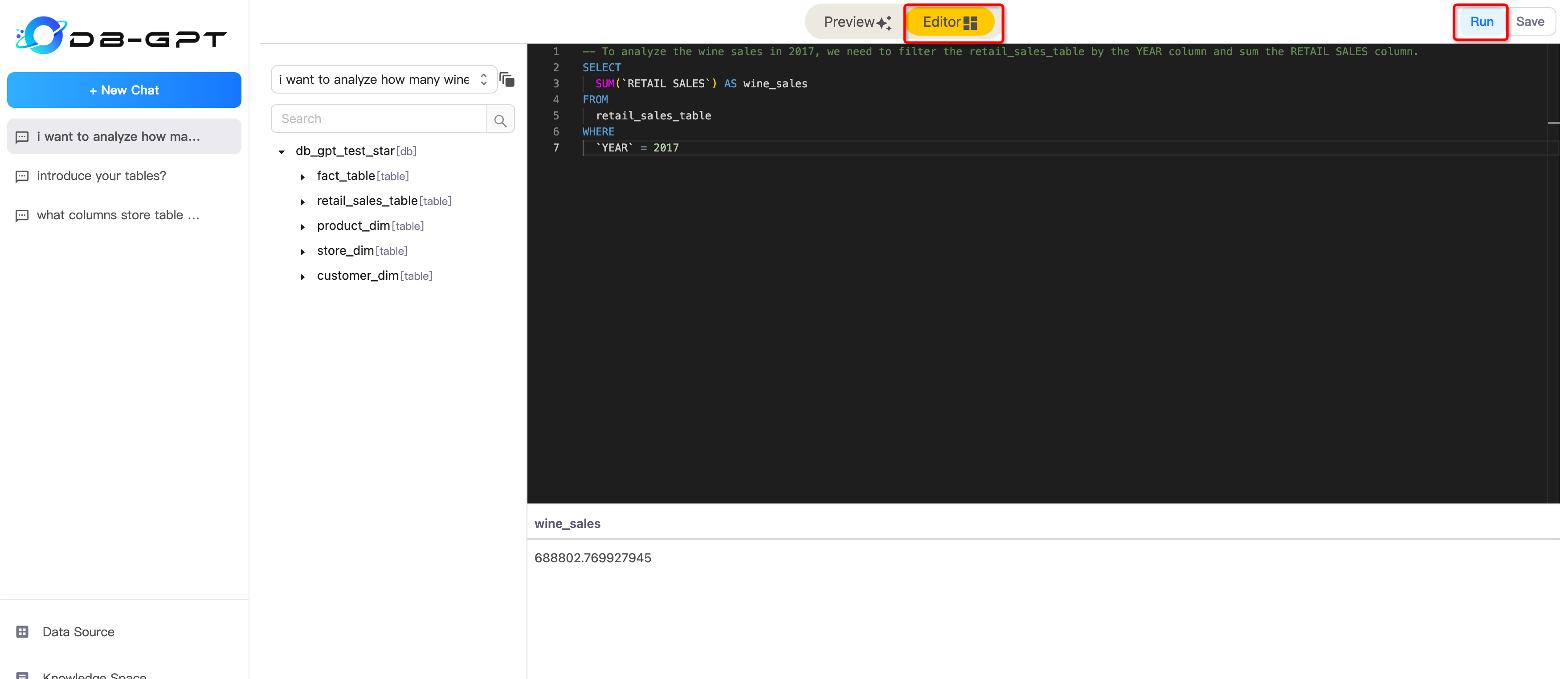

### 2.ChatData

|

||||||

|

##### Preview Mode

|

||||||

|

After successfully setting up the data source, you can start conversing with the database. You can ask it to generate

|

||||||

|

SQL for you or inquire about relevant information on the database's metadata.

|

||||||

|

|

||||||

|

|

||||||

|

##### Editor Mode

|

||||||

|

In Editor Mode, you can edit your sql and execute it.

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

### 3.ChatDB

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

73

docs/docs/faq/install.md

Normal file

73

docs/docs/faq/install.md

Normal file

@ -0,0 +1,73 @@

|

|||||||

|

Installation FAQ

|

||||||

|

==================================

|

||||||

|

|

||||||

|

|

||||||

|

##### Q1: sqlalchemy.exc.OperationalError: (sqlite3.OperationalError) unable to open database file

|

||||||

|

|

||||||

|

make sure you pull latest code or create directory with mkdir pilot/data

|

||||||

|

|

||||||

|

##### Q2: The model keeps getting killed.

|

||||||

|

|

||||||

|

your GPU VRAM size is not enough, try replace your hardware or replace other llms.

|

||||||

|

|

||||||

|

##### Q3: How to access website on the public network

|

||||||

|

|

||||||

|

You can try to use gradio's [network](https://github.com/gradio-app/gradio/blob/main/gradio/networking.py) to achieve.

|

||||||

|

```python

|

||||||

|

import secrets

|

||||||

|

from gradio import networking

|

||||||

|

token=secrets.token_urlsafe(32)

|

||||||

|

local_port=5000

|

||||||

|

url = networking.setup_tunnel('0.0.0.0', local_port, token)

|

||||||

|

print(f'Public url: {url}')

|

||||||

|

time.sleep(60 * 60 * 24)

|

||||||

|

```

|

||||||

|

|

||||||

|

Open `url` with your browser to see the website.

|

||||||

|

|

||||||

|

##### Q4: (Windows) execute `pip install -e .` error

|

||||||

|

|

||||||

|

The error log like the following:

|

||||||

|

```

|

||||||

|

× python setup.py bdist_wheel did not run successfully.

|

||||||

|

│ exit code: 1

|

||||||

|

╰─> [11 lines of output]

|

||||||

|

running bdist_wheel

|

||||||

|

running build

|

||||||

|

running build_py

|

||||||

|

creating build

|

||||||

|

creating build\lib.win-amd64-cpython-310

|

||||||

|

creating build\lib.win-amd64-cpython-310\cchardet

|

||||||

|

copying src\cchardet\version.py -> build\lib.win-amd64-cpython-310\cchardet

|

||||||

|

copying src\cchardet\__init__.py -> build\lib.win-amd64-cpython-310\cchardet

|

||||||

|

running build_ext

|

||||||

|

building 'cchardet._cchardet' extension

|

||||||

|

error: Microsoft Visual C++ 14.0 or greater is required. Get it with "Microsoft C++ Build Tools": https://visualstudio.microsoft.com/visual-cpp-build-tools/

|

||||||

|

[end of output]

|

||||||

|

```

|

||||||

|

|

||||||

|

Download and install `Microsoft C++ Build Tools` from [visual-cpp-build-tools](https://visualstudio.microsoft.com/visual-cpp-build-tools/)

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

##### Q5: `Torch not compiled with CUDA enabled`

|

||||||

|

|

||||||

|

```

|

||||||

|

2023-08-19 16:24:30 | ERROR | stderr | raise AssertionError("Torch not compiled with CUDA enabled")

|

||||||

|

2023-08-19 16:24:30 | ERROR | stderr | AssertionError: Torch not compiled with CUDA enabled

|

||||||

|

```

|

||||||

|

|

||||||

|

1. Install [CUDA Toolkit](https://developer.nvidia.com/cuda-toolkit-archive)

|

||||||

|

2. Reinstall PyTorch [start-locally](https://pytorch.org/get-started/locally/#start-locally) with CUDA support.

|

||||||

|

|

||||||

|

|

||||||

|

##### Q6: `How to migrate meta table chat_history and connect_config from duckdb to sqlite`

|

||||||

|

```commandline

|

||||||

|

python docker/examples/metadata/duckdb2sqlite.py

|

||||||

|

```

|

||||||

|

|

||||||

|

##### Q7: `How to migrate meta table chat_history and connect_config from duckdb to mysql`

|

||||||

|

```commandline

|

||||||

|

1. update your mysql username and password in docker/examples/metadata/duckdb2mysql.py

|

||||||

|

2. python docker/examples/metadata/duckdb2mysql.py

|

||||||

|

```

|

||||||

70

docs/docs/faq/kbqa.md

Normal file

70

docs/docs/faq/kbqa.md

Normal file

@ -0,0 +1,70 @@

|

|||||||

|

KBQA FAQ

|

||||||

|

==================================

|

||||||

|

|

||||||

|

##### Q1: text2vec-large-chinese not found

|

||||||

|

|

||||||

|

make sure you have download text2vec-large-chinese embedding model in right way

|

||||||

|

|

||||||

|

```tip

|

||||||

|

centos:yum install git-lfs

|

||||||

|

ubuntu:apt-get install git-lfs -y

|

||||||

|

macos:brew install git-lfs

|

||||||

|

```

|

||||||

|

```bash

|

||||||

|

cd models

|

||||||

|

git lfs clone https://huggingface.co/GanymedeNil/text2vec-large-chinese

|

||||||

|

```

|

||||||

|

|

||||||

|

##### Q2:How to change Vector DB Type in DB-GPT.

|

||||||

|

|

||||||

|

Update .env file and set VECTOR_STORE_TYPE.

|

||||||

|

|

||||||

|

DB-GPT currently support Chroma(Default), Milvus(>2.1), Weaviate vector database.

|

||||||

|

If you want to change vector db, Update your .env, set your vector store type, VECTOR_STORE_TYPE=Chroma (now only support Chroma and Milvus(>2.1), if you set Milvus, please set MILVUS_URL and MILVUS_PORT)

|

||||||

|

If you want to support more vector db, you can integrate yourself.[how to integrate](https://db-gpt.readthedocs.io/en/latest/modules/vector.html)

|

||||||

|

```commandline

|

||||||

|

#*******************************************************************#

|

||||||

|

#** VECTOR STORE SETTINGS **#

|

||||||

|

#*******************************************************************#

|

||||||

|

VECTOR_STORE_TYPE=Chroma

|

||||||

|

#MILVUS_URL=127.0.0.1

|

||||||

|

#MILVUS_PORT=19530

|

||||||

|

#MILVUS_USERNAME

|

||||||

|

#MILVUS_PASSWORD

|

||||||

|

#MILVUS_SECURE=

|

||||||

|

|

||||||

|

#WEAVIATE_URL=https://kt-region-m8hcy0wc.weaviate.network

|

||||||

|

```

|

||||||

|

##### Q3:When I use vicuna-13b, found some illegal character like this.

|

||||||

|

<p align="left">

|

||||||

|

<img src="https://github.com/eosphoros-ai/DB-GPT/assets/13723926/088d1967-88e3-4f72-9ad7-6c4307baa2f8" width="800px" />

|

||||||

|

</p>

|

||||||

|

|

||||||

|

Set KNOWLEDGE_SEARCH_TOP_SIZE smaller or set KNOWLEDGE_CHUNK_SIZE smaller, and reboot server.

|

||||||

|

|

||||||

|

##### Q4:space add error (pymysql.err.OperationalError) (1054, "Unknown column 'knowledge_space.context' in 'field list'")

|

||||||

|

|

||||||

|

1.shutdown dbgpt_server(ctrl c)

|

||||||

|

|

||||||

|

2.add column context for table knowledge_space

|

||||||

|

|

||||||

|

```commandline

|

||||||

|

mysql -h127.0.0.1 -uroot -p {your_password}

|

||||||

|

```

|

||||||

|

|

||||||

|

3.execute sql ddl

|

||||||

|

|

||||||

|

```commandline

|

||||||

|

mysql> use knowledge_management;

|

||||||

|

mysql> ALTER TABLE knowledge_space ADD COLUMN context TEXT COMMENT "arguments context";

|

||||||

|

```

|

||||||

|

|

||||||

|

4.restart dbgpt serve

|

||||||

|

|

||||||

|

##### Q5:Use Mysql, how to use DB-GPT KBQA

|

||||||

|

|

||||||

|

build Mysql KBQA system database schema.

|

||||||

|

|

||||||

|

```bash

|

||||||

|

$ mysql -h127.0.0.1 -uroot -p{your_password} < ./assets/schema/knowledge_management.sql

|

||||||

|

```

|

||||||

52

docs/docs/faq/llm.md

Normal file

52

docs/docs/faq/llm.md

Normal file

@ -0,0 +1,52 @@

|

|||||||

|

LLM USE FAQ

|

||||||

|

==================================

|

||||||

|

##### Q1:how to use openai chatgpt service

|

||||||

|

change your LLM_MODEL

|

||||||

|

````shell

|

||||||

|

LLM_MODEL=proxyllm

|

||||||

|

````

|

||||||

|

|

||||||

|

set your OPENAPI KEY

|

||||||

|

|

||||||

|

````shell

|

||||||

|

PROXY_API_KEY={your-openai-sk}

|

||||||

|

PROXY_SERVER_URL=https://api.openai.com/v1/chat/completions

|

||||||

|

````

|

||||||

|

|

||||||

|

make sure your openapi API_KEY is available

|

||||||

|

|

||||||

|

##### Q2 What difference between `python dbgpt_server --light` and `python dbgpt_server`

|

||||||

|

|

||||||

|

:::tip

|

||||||

|

python dbgpt_server --light` dbgpt_server does not start the llm service. Users can deploy the llm service separately by using `python llmserver`, and dbgpt_server accesses the llm service through set the LLM_SERVER environment variable in .env. The purpose is to allow for the separate deployment of dbgpt's backend service and llm service.

|

||||||

|

|

||||||

|

python dbgpt_server service and the llm service are deployed on the same instance. when dbgpt_server starts the service, it also starts the llm service at the same time.

|

||||||

|

:::

|

||||||

|

|

||||||

|

##### Q3 how to use MultiGPUs

|

||||||

|

|

||||||

|

DB-GPT will use all available gpu by default. And you can modify the setting `CUDA_VISIBLE_DEVICES=0,1` in `.env` file

|

||||||

|

to use the specific gpu IDs.

|

||||||

|

|

||||||

|

Optionally, you can also specify the gpu ID to use before the starting command, as shown below:

|

||||||

|

|

||||||

|

````shell

|

||||||

|

# Specify 1 gpu

|

||||||

|

CUDA_VISIBLE_DEVICES=0 python3 pilot/server/dbgpt_server.py

|

||||||

|

|

||||||

|

# Specify 4 gpus

|

||||||

|

CUDA_VISIBLE_DEVICES=3,4,5,6 python3 pilot/server/dbgpt_server.py

|

||||||

|

````

|

||||||

|

|

||||||

|

You can modify the setting `MAX_GPU_MEMORY=xxGib` in `.env` file to configure the maximum memory used by each GPU.

|

||||||

|

|

||||||

|

##### Q4 Not Enough Memory

|

||||||

|

|

||||||

|

DB-GPT supported 8-bit quantization and 4-bit quantization.

|

||||||

|

|

||||||

|

You can modify the setting `QUANTIZE_8bit=True` or `QUANTIZE_4bit=True` in `.env` file to use quantization(8-bit quantization is enabled by default).

|

||||||

|

|

||||||

|

Llama-2-70b with 8-bit quantization can run with 80 GB of VRAM, and 4-bit quantization can run with 48 GB of VRAM.

|

||||||

|

|

||||||

|

Note: you need to install the latest dependencies according to [requirements.txt](https://github.com/eosphoros-ai/DB-GPT/blob/main/requirements.txt).

|

||||||

|

Note: you need to install the latest dependencies according to [requirements.txt](https://github.com/eosphoros-ai/DB-GPT/blob/main/requirements.txt).

|

||||||

@ -200,8 +200,27 @@ const sidebars = {

|

|||||||

},

|

},

|

||||||

|

|

||||||

{

|

{

|

||||||

type: "doc",

|

type: "category",

|

||||||

id:"faq"

|

label: "FAQ",

|

||||||

|

collapsed: true,

|

||||||

|

items: [

|

||||||

|

{

|

||||||

|

type: 'doc',

|

||||||

|

id: 'faq/install',

|

||||||

|

}

|

||||||

|

,{

|

||||||

|

type: 'doc',

|

||||||

|

id: 'faq/llm',

|

||||||

|

}

|

||||||

|

,{

|

||||||

|

type: 'doc',

|

||||||

|

id: 'faq/kbqa',

|

||||||

|

}

|

||||||

|

,{

|

||||||

|

type: 'doc',

|

||||||

|

id: 'faq/chatdata',

|

||||||

|

},

|

||||||

|

],

|

||||||

},

|

},

|

||||||

|

|

||||||

{

|

{

|

||||||

|

|||||||

@ -14,7 +14,7 @@ from pilot.configs.config import Config

|

|||||||

|

|

||||||

|

|

||||||

logger = logging.getLogger(__name__)

|

logger = logging.getLogger(__name__)

|

||||||

|

# DB-GPT meta_data database config, now support mysql and sqlite

|

||||||

CFG = Config()

|

CFG = Config()

|

||||||

default_db_path = os.path.join(os.getcwd(), "meta_data")

|

default_db_path = os.path.join(os.getcwd(), "meta_data")

|

||||||

|

|

||||||

@ -26,6 +26,7 @@ db_name = META_DATA_DATABASE

|

|||||||

db_path = default_db_path + f"/{db_name}.db"

|

db_path = default_db_path + f"/{db_name}.db"

|

||||||

connection = sqlite3.connect(db_path)

|

connection = sqlite3.connect(db_path)

|

||||||

|

|

||||||

|

|

||||||

if CFG.LOCAL_DB_TYPE == "mysql":

|

if CFG.LOCAL_DB_TYPE == "mysql":

|

||||||

engine_temp = create_engine(

|

engine_temp = create_engine(

|

||||||

f"mysql+pymysql://"

|

f"mysql+pymysql://"

|

||||||

@ -81,18 +82,10 @@ os.makedirs(default_db_path + "/alembic/versions", exist_ok=True)

|

|||||||

|

|

||||||

alembic_cfg.set_main_option("script_location", default_db_path + "/alembic")

|

alembic_cfg.set_main_option("script_location", default_db_path + "/alembic")

|

||||||

|

|

||||||

# 将模型和会话传递给Alembic配置

|

|

||||||

alembic_cfg.attributes["target_metadata"] = Base.metadata

|

alembic_cfg.attributes["target_metadata"] = Base.metadata

|

||||||

alembic_cfg.attributes["session"] = session

|

alembic_cfg.attributes["session"] = session

|

||||||

|

|

||||||

|

|

||||||

# # 创建表

|

|

||||||

# Base.metadata.create_all(engine)

|

|

||||||

#

|

|

||||||

# # 删除表

|

|

||||||

# Base.metadata.drop_all(engine)

|

|

||||||

|

|

||||||

|

|

||||||

def ddl_init_and_upgrade(disable_alembic_upgrade: bool):

|

def ddl_init_and_upgrade(disable_alembic_upgrade: bool):

|

||||||

"""Initialize and upgrade database metadata

|

"""Initialize and upgrade database metadata

|

||||||

|

|

||||||

@ -105,10 +98,6 @@ def ddl_init_and_upgrade(disable_alembic_upgrade: bool):

|

|||||||

)

|

)

|

||||||

return

|

return

|

||||||

|

|

||||||

# Base.metadata.create_all(bind=engine)

|

|

||||||

# 生成并应用迁移脚本

|

|

||||||

# command.upgrade(alembic_cfg, 'head')

|

|

||||||

# subprocess.run(["alembic", "revision", "--autogenerate", "-m", "Added account table"])

|

|

||||||

with engine.connect() as connection:

|

with engine.connect() as connection:

|

||||||

alembic_cfg.attributes["connection"] = connection

|

alembic_cfg.attributes["connection"] = connection

|

||||||

heads = command.heads(alembic_cfg)

|

heads = command.heads(alembic_cfg)

|

||||||

|

|||||||

@ -104,13 +104,6 @@ class Config(metaclass=Singleton):

|

|||||||

self.use_mac_os_tts = False

|

self.use_mac_os_tts = False

|

||||||

self.use_mac_os_tts = os.getenv("USE_MAC_OS_TTS")

|

self.use_mac_os_tts = os.getenv("USE_MAC_OS_TTS")

|

||||||

|

|

||||||

# milvus or zilliz cloud configuration

|

|

||||||

self.milvus_addr = os.getenv("MILVUS_ADDR", "localhost:19530")

|

|

||||||

self.milvus_username = os.getenv("MILVUS_USERNAME")

|

|

||||||

self.milvus_password = os.getenv("MILVUS_PASSWORD")

|

|

||||||

self.milvus_collection = os.getenv("MILVUS_COLLECTION", "dbgpt")

|

|

||||||

self.milvus_secure = os.getenv("MILVUS_SECURE", "False").lower() == "true"

|

|

||||||

|

|

||||||

self.authorise_key = os.getenv("AUTHORISE_COMMAND_KEY", "y")

|

self.authorise_key = os.getenv("AUTHORISE_COMMAND_KEY", "y")

|

||||||

self.exit_key = os.getenv("EXIT_KEY", "n")

|

self.exit_key = os.getenv("EXIT_KEY", "n")

|

||||||

self.image_provider = os.getenv("IMAGE_PROVIDER", True)

|

self.image_provider = os.getenv("IMAGE_PROVIDER", True)

|

||||||

@ -190,7 +183,7 @@ class Config(metaclass=Singleton):

|

|||||||

self.LOCAL_DB_PASSWORD = os.getenv("LOCAL_DB_PASSWORD", "aa123456")

|

self.LOCAL_DB_PASSWORD = os.getenv("LOCAL_DB_PASSWORD", "aa123456")

|

||||||

self.LOCAL_DB_POOL_SIZE = int(os.getenv("LOCAL_DB_POOL_SIZE", 10))

|

self.LOCAL_DB_POOL_SIZE = int(os.getenv("LOCAL_DB_POOL_SIZE", 10))

|

||||||

|

|

||||||

self.CHAT_HISTORY_STORE_TYPE = os.getenv("CHAT_HISTORY_STORE_TYPE", "duckdb")

|

self.CHAT_HISTORY_STORE_TYPE = os.getenv("CHAT_HISTORY_STORE_TYPE", "db")

|

||||||

|

|

||||||

### LLM Model Service Configuration

|

### LLM Model Service Configuration

|

||||||

self.LLM_MODEL = os.getenv("LLM_MODEL", "vicuna-13b-v1.5")

|

self.LLM_MODEL = os.getenv("LLM_MODEL", "vicuna-13b-v1.5")

|

||||||

@ -232,10 +225,18 @@ class Config(metaclass=Singleton):

|

|||||||

self.KNOWLEDGE_CHUNK_SIZE = int(os.getenv("KNOWLEDGE_CHUNK_SIZE", 100))

|

self.KNOWLEDGE_CHUNK_SIZE = int(os.getenv("KNOWLEDGE_CHUNK_SIZE", 100))

|

||||||

self.KNOWLEDGE_CHUNK_OVERLAP = int(os.getenv("KNOWLEDGE_CHUNK_OVERLAP", 50))

|

self.KNOWLEDGE_CHUNK_OVERLAP = int(os.getenv("KNOWLEDGE_CHUNK_OVERLAP", 50))

|

||||||

self.KNOWLEDGE_SEARCH_TOP_SIZE = int(os.getenv("KNOWLEDGE_SEARCH_TOP_SIZE", 5))

|

self.KNOWLEDGE_SEARCH_TOP_SIZE = int(os.getenv("KNOWLEDGE_SEARCH_TOP_SIZE", 5))

|

||||||

|

# default recall similarity score, between 0 and 1

|

||||||

|

self.KNOWLEDGE_SEARCH_RECALL_SCORE = float(

|

||||||

|

os.getenv("KNOWLEDGE_SEARCH_RECALL_SCORE", 0.3)

|

||||||

|

)

|

||||||

self.KNOWLEDGE_SEARCH_MAX_TOKEN = int(

|

self.KNOWLEDGE_SEARCH_MAX_TOKEN = int(

|

||||||

os.getenv("KNOWLEDGE_SEARCH_MAX_TOKEN", 2000)

|

os.getenv("KNOWLEDGE_SEARCH_MAX_TOKEN", 2000)

|

||||||

)

|

)

|

||||||

### Control whether to display the source document of knowledge on the front end.

|

# Whether to enable Chat Knowledge Search Rewrite Mode

|

||||||

|

self.KNOWLEDGE_SEARCH_REWRITE = (

|

||||||

|

os.getenv("KNOWLEDGE_SEARCH_REWRITE", "False").lower() == "true"

|

||||||

|

)

|

||||||

|

# Control whether to display the source document of knowledge on the front end.

|

||||||

self.KNOWLEDGE_CHAT_SHOW_RELATIONS = (

|

self.KNOWLEDGE_CHAT_SHOW_RELATIONS = (

|

||||||

os.getenv("KNOWLEDGE_CHAT_SHOW_RELATIONS", "False").lower() == "true"

|

os.getenv("KNOWLEDGE_CHAT_SHOW_RELATIONS", "False").lower() == "true"

|

||||||

)

|

)

|

||||||

|

|||||||

@ -1,5 +1,4 @@

|

|||||||

from typing import List

|

from sqlalchemy import Column, Integer, String, Index, Text, text

|

||||||

from sqlalchemy import Column, Integer, String, Index, DateTime, func, Boolean, Text

|

|

||||||

from sqlalchemy import UniqueConstraint

|

from sqlalchemy import UniqueConstraint

|

||||||

|

|

||||||

from pilot.base_modules.meta_data.base_dao import BaseDao

|

from pilot.base_modules.meta_data.base_dao import BaseDao

|

||||||

@ -12,15 +11,18 @@ from pilot.base_modules.meta_data.meta_data import (

|

|||||||

|

|

||||||

|

|

||||||

class ConnectConfigEntity(Base):

|

class ConnectConfigEntity(Base):

|

||||||

|

"""db connect config entity"""

|

||||||

|

|

||||||

__tablename__ = "connect_config"

|

__tablename__ = "connect_config"

|

||||||

id = Column(

|

id = Column(

|

||||||

Integer, primary_key=True, autoincrement=True, comment="autoincrement id"

|

Integer, primary_key=True, autoincrement=True, comment="autoincrement id"

|

||||||

)

|

)

|

||||||

|

|

||||||

db_type = Column(String(255), nullable=False, comment="db type")

|

db_type = Column(String(255), nullable=False, comment="db type")

|

||||||

db_name = Column(String(255), nullable=False, comment="db name")

|

db_name = Column(String(255), nullable=False, comment="db name")

|

||||||

db_path = Column(String(255), nullable=True, comment="file db path")

|

db_path = Column(String(255), nullable=True, comment="file db path")

|

||||||

db_host = Column(String(255), nullable=True, comment="db connect host(not file db)")

|

db_host = Column(String(255), nullable=True, comment="db connect host(not file db)")

|

||||||

db_port = Column(String(255), nullable=True, comment="db cnnect port(not file db)")

|

db_port = Column(String(255), nullable=True, comment="db connect port(not file db)")

|

||||||

db_user = Column(String(255), nullable=True, comment="db user")

|

db_user = Column(String(255), nullable=True, comment="db user")

|

||||||

db_pwd = Column(String(255), nullable=True, comment="db password")

|

db_pwd = Column(String(255), nullable=True, comment="db password")

|

||||||

comment = Column(Text, nullable=True, comment="db comment")

|

comment = Column(Text, nullable=True, comment="db comment")

|

||||||

@ -29,10 +31,13 @@ class ConnectConfigEntity(Base):

|

|||||||

__table_args__ = (

|

__table_args__ = (

|

||||||

UniqueConstraint("db_name", name="uk_db"),

|

UniqueConstraint("db_name", name="uk_db"),

|

||||||

Index("idx_q_db_type", "db_type"),

|

Index("idx_q_db_type", "db_type"),

|

||||||

|

{"mysql_charset": "utf8mb4", "mysql_collate": "utf8mb4_unicode_ci"},

|

||||||

)

|

)

|

||||||

|

|

||||||

|

|

||||||

class ConnectConfigDao(BaseDao[ConnectConfigEntity]):

|

class ConnectConfigDao(BaseDao[ConnectConfigEntity]):

|

||||||

|

"""db connect config dao"""

|

||||||

|

|

||||||

def __init__(self):

|

def __init__(self):

|

||||||

super().__init__(

|

super().__init__(

|

||||||

database=META_DATA_DATABASE,

|

database=META_DATA_DATABASE,

|

||||||

@ -42,6 +47,7 @@ class ConnectConfigDao(BaseDao[ConnectConfigEntity]):

|

|||||||

)

|

)

|

||||||

|

|

||||||

def update(self, entity: ConnectConfigEntity):

|

def update(self, entity: ConnectConfigEntity):

|

||||||

|

"""update db connect info"""

|

||||||

session = self.get_session()

|

session = self.get_session()

|

||||||

try:

|

try:

|

||||||

updated = session.merge(entity)

|

updated = session.merge(entity)

|

||||||

@ -51,6 +57,7 @@ class ConnectConfigDao(BaseDao[ConnectConfigEntity]):

|

|||||||

session.close()

|

session.close()

|

||||||

|

|

||||||

def delete(self, db_name: int):

|

def delete(self, db_name: int):

|

||||||

|

""" "delete db connect info"""

|

||||||

session = self.get_session()

|

session = self.get_session()

|

||||||

if db_name is None:

|

if db_name is None:

|

||||||

raise Exception("db_name is None")

|

raise Exception("db_name is None")

|

||||||

@ -61,10 +68,177 @@ class ConnectConfigDao(BaseDao[ConnectConfigEntity]):

|

|||||||

session.commit()

|

session.commit()

|

||||||

session.close()

|

session.close()

|

||||||

|

|

||||||

def get_by_name(self, db_name: str) -> ConnectConfigEntity:

|

def get_by_names(self, db_name: str) -> ConnectConfigEntity:

|

||||||

|

"""get db connect info by name"""

|

||||||

session = self.get_session()

|

session = self.get_session()

|

||||||

db_connect = session.query(ConnectConfigEntity)

|

db_connect = session.query(ConnectConfigEntity)

|

||||||

db_connect = db_connect.filter(ConnectConfigEntity.db_name == db_name)

|

db_connect = db_connect.filter(ConnectConfigEntity.db_name == db_name)

|

||||||

result = db_connect.first()

|

result = db_connect.first()

|

||||||

session.close()

|

session.close()

|

||||||

return result

|

return result

|

||||||

|

|

||||||

|

def add_url_db(

|

||||||

|

self,

|

||||||

|

db_name,

|

||||||

|

db_type,

|

||||||

|

db_host: str,

|

||||||

|

db_port: int,

|

||||||

|

db_user: str,

|

||||||

|

db_pwd: str,

|

||||||

|

comment: str = "",

|

||||||

|

):

|

||||||

|

"""

|

||||||

|

add db connect info

|

||||||

|

Args:

|

||||||

|

db_name: db name

|

||||||

|

db_type: db type

|

||||||

|

db_host: db host

|

||||||

|

db_port: db port

|

||||||

|

db_user: db user

|

||||||

|

db_pwd: db password

|

||||||

|

comment: comment

|

||||||

|

"""

|

||||||

|

try:

|

||||||

|

session = self.get_session()

|

||||||

|

|

||||||

|

from sqlalchemy import text

|

||||||

|

|

||||||

|

insert_statement = text(

|

||||||

|

"""

|

||||||

|

INSERT INTO connect_config (

|

||||||

|

db_name, db_type, db_path, db_host, db_port, db_user, db_pwd, comment

|

||||||

|

) VALUES (

|

||||||

|

:db_name, :db_type, :db_path, :db_host, :db_port, :db_user, :db_pwd, :comment

|

||||||

|

)

|

||||||

|

"""

|

||||||

|

)

|

||||||

|

|

||||||

|

params = {

|

||||||

|

"db_name": db_name,

|

||||||

|

"db_type": db_type,

|

||||||

|

"db_path": "",

|

||||||

|

"db_host": db_host,

|

||||||

|

"db_port": db_port,

|

||||||

|

"db_user": db_user,

|

||||||

|

"db_pwd": db_pwd,

|

||||||

|

"comment": comment,

|

||||||

|

}

|

||||||

|

session.execute(insert_statement, params)

|

||||||

|

session.commit()

|

||||||

|

session.close()

|

||||||

|

except Exception as e:

|

||||||

|

print("add db connect info error!" + str(e))

|

||||||

|

|

||||||

|

def update_db_info(

|

||||||

|

self,

|

||||||

|

db_name,

|

||||||

|

db_type,

|

||||||

|

db_path: str = "",

|

||||||

|

db_host: str = "",

|

||||||

|

db_port: int = 0,

|

||||||

|

db_user: str = "",

|

||||||

|

db_pwd: str = "",

|

||||||

|

comment: str = "",

|

||||||

|

):

|

||||||

|

"""update db connect info"""

|

||||||

|

old_db_conf = self.get_db_config(db_name)

|

||||||

|

if old_db_conf:

|

||||||

|

try:

|

||||||

|

session = self.get_session()

|

||||||

|

if not db_path:

|

||||||

|

update_statement = text(

|

||||||

|

f"UPDATE connect_config set db_type='{db_type}', db_host='{db_host}', db_port={db_port}, db_user='{db_user}', db_pwd='{db_pwd}', comment='{comment}' where db_name='{db_name}'"

|

||||||

|

)

|

||||||

|

else:

|

||||||

|

update_statement = text(

|

||||||

|

f"UPDATE connect_config set db_type='{db_type}', db_path='{db_path}', comment='{comment}' where db_name='{db_name}'"

|

||||||

|

)

|

||||||

|

session.execute(update_statement)

|

||||||

|

session.commit()

|

||||||

|

session.close()

|

||||||

|

except Exception as e:

|

||||||

|

print("edit db connect info error!" + str(e))

|

||||||

|

return True

|

||||||

|

raise ValueError(f"{db_name} not have config info!")

|

||||||

|

|

||||||

|

def add_file_db(self, db_name, db_type, db_path: str, comment: str = ""):

|

||||||

|

"""add file db connect info"""

|

||||||

|

try:

|

||||||

|

session = self.get_session()

|

||||||

|

insert_statement = text(

|

||||||

|

"""

|

||||||

|

INSERT INTO connect_config(

|

||||||

|

db_name, db_type, db_path, db_host, db_port, db_user, db_pwd, comment

|

||||||

|

) VALUES (

|

||||||

|

:db_name, :db_type, :db_path, :db_host, :db_port, :db_user, :db_pwd, :comment

|

||||||

|

)

|

||||||

|

"""

|

||||||

|

)

|

||||||

|

params = {

|

||||||

|

"db_name": db_name,

|

||||||

|

"db_type": db_type,

|

||||||

|

"db_path": db_path,

|

||||||

|

"db_host": "",

|

||||||

|

"db_port": 0,

|

||||||

|

"db_user": "",

|

||||||

|

"db_pwd": "",

|

||||||

|

"comment": comment,

|

||||||

|

}

|

||||||

|

|

||||||

|

session.execute(insert_statement, params)

|

||||||

|

|

||||||

|

session.commit()

|

||||||

|

session.close()

|

||||||

|

except Exception as e:

|

||||||

|

print("add db connect info error!" + str(e))

|

||||||

|

|

||||||

|

def get_db_config(self, db_name):

|

||||||

|

"""get db config by name"""

|

||||||

|

session = self.get_session()

|

||||||

|

if db_name:

|

||||||

|

select_statement = text(

|

||||||

|

"""

|

||||||

|

SELECT

|

||||||

|

*

|

||||||

|

FROM

|

||||||

|

connect_config

|

||||||

|

WHERE

|

||||||

|

db_name = :db_name

|

||||||

|

"""

|

||||||

|

)

|

||||||

|

params = {"db_name": db_name}

|

||||||

|

result = session.execute(select_statement, params)

|

||||||

|

|

||||||

|

else:

|

||||||

|

raise ValueError("Cannot get database by name" + db_name)

|

||||||

|

|

||||||

|

fields = [field[0] for field in result.cursor.description]

|

||||||

|

row_dict = {}

|

||||||

|

row_1 = list(result.cursor.fetchall()[0])

|

||||||

|

for i, field in enumerate(fields):

|

||||||

|

row_dict[field] = row_1[i]

|

||||||

|

return row_dict

|

||||||

|

|

||||||

|

def get_db_list(self):

|

||||||

|

"""get db list"""

|

||||||

|

session = self.get_session()

|

||||||

|

result = session.execute(text("SELECT * FROM connect_config"))

|

||||||

|

|

||||||

|

fields = [field[0] for field in result.cursor.description]

|

||||||

|

data = []

|

||||||

|

for row in result.cursor.fetchall():

|

||||||

|

row_dict = {}

|

||||||

|

for i, field in enumerate(fields):

|

||||||

|

row_dict[field] = row[i]

|

||||||

|

data.append(row_dict)

|

||||||

|

return data

|

||||||

|

|

||||||

|

def delete_db(self, db_name):

|

||||||

|

"""delete db connect info"""

|

||||||

|

session = self.get_session()

|

||||||

|

delete_statement = text("""DELETE FROM connect_config where db_name=:db_name""")

|

||||||

|

params = {"db_name": db_name}

|

||||||

|

session.execute(delete_statement, params)

|

||||||

|

session.commit()

|

||||||

|

session.close()

|

||||||

|

return True

|

||||||

|

|||||||

@ -2,6 +2,7 @@ import threading

|

|||||||

import asyncio

|

import asyncio

|

||||||

|

|

||||||

from pilot.configs.config import Config

|

from pilot.configs.config import Config

|

||||||

|

from pilot.connections import ConnectConfigDao

|

||||||

from pilot.connections.manages.connect_storage_duckdb import DuckdbConnectConfig

|

from pilot.connections.manages.connect_storage_duckdb import DuckdbConnectConfig

|

||||||

from pilot.common.schema import DBType

|

from pilot.common.schema import DBType

|

||||||

from pilot.component import SystemApp, ComponentType

|

from pilot.component import SystemApp, ComponentType

|

||||||

@ -28,6 +29,8 @@ CFG = Config()

|

|||||||

|

|

||||||

|

|

||||||

class ConnectManager:

|

class ConnectManager:

|

||||||

|

"""db connect manager"""

|

||||||

|

|

||||||

def get_all_subclasses(self, cls):

|

def get_all_subclasses(self, cls):

|

||||||

subclasses = cls.__subclasses__()

|

subclasses = cls.__subclasses__()

|

||||||

for subclass in subclasses:

|

for subclass in subclasses:

|

||||||

@ -49,45 +52,32 @@ class ConnectManager:

|

|||||||

if cls.db_type == db_type:

|

if cls.db_type == db_type:

|

||||||

result = cls

|

result = cls

|

||||||

if not result:

|

if not result:

|

||||||

raise ValueError("Unsupport Db Type!" + db_type)

|

raise ValueError("Unsupported Db Type!" + db_type)

|

||||||

return result

|

return result

|

||||||

|

|

||||||

def __init__(self, system_app: SystemApp):

|

def __init__(self, system_app: SystemApp):

|

||||||

self.storage = DuckdbConnectConfig()

|

"""metadata database management initialization"""

|

||||||

|

# self.storage = DuckdbConnectConfig()

|

||||||

|

self.storage = ConnectConfigDao()

|

||||||

self.db_summary_client = DBSummaryClient(system_app)

|

self.db_summary_client = DBSummaryClient(system_app)

|

||||||

# self.__load_config_db()

|

# self.__load_config_db()

|

||||||

|

|

||||||

def __load_config_db(self):

|

# def __load_config_db(self):

|

||||||

if CFG.LOCAL_DB_HOST:

|

# if CFG.LOCAL_DB_HOST:

|

||||||

# default mysql

|

# # default mysql

|

||||||

if CFG.LOCAL_DB_NAME:

|

# if CFG.LOCAL_DB_NAME:

|

||||||

self.storage.add_url_db(

|

# self.storage.add_url_db(

|

||||||

CFG.LOCAL_DB_NAME,

|

# CFG.LOCAL_DB_NAME,

|

||||||

DBType.Mysql.value(),

|

# DBType.Mysql.value(),

|

||||||

CFG.LOCAL_DB_HOST,

|

# CFG.LOCAL_DB_HOST,

|

||||||

CFG.LOCAL_DB_PORT,

|

# CFG.LOCAL_DB_PORT,

|

||||||

CFG.LOCAL_DB_USER,

|

# CFG.LOCAL_DB_USER,

|

||||||

CFG.LOCAL_DB_PASSWORD,

|

# CFG.LOCAL_DB_PASSWORD,

|

||||||

"",

|

# "",

|

||||||

)

|

# )

|

||||||

else:

|

# else:

|

||||||

# get all default mysql database

|

# # get all default mysql database

|

||||||

default_mysql = Database.from_uri(

|

# default_mysql = Database.from_uri(

|

||||||

"mysql+pymysql://"

|

|

||||||

+ CFG.LOCAL_DB_USER

|

|

||||||

+ ":"

|

|

||||||

+ CFG.LOCAL_DB_PASSWORD

|

|

||||||

+ "@"

|

|

||||||

+ CFG.LOCAL_DB_HOST

|

|

||||||

+ ":"

|

|

||||||

+ str(CFG.LOCAL_DB_PORT),

|

|

||||||

engine_args={

|

|

||||||

"pool_size": CFG.LOCAL_DB_POOL_SIZE,

|

|

||||||

"pool_recycle": 3600,

|

|

||||||

"echo": True,

|

|

||||||

},

|

|

||||||

)

|

|

||||||

# default_mysql = MySQLConnect.from_uri(

|

|

||||||

# "mysql+pymysql://"

|

# "mysql+pymysql://"

|

||||||

# + CFG.LOCAL_DB_USER

|

# + CFG.LOCAL_DB_USER

|

||||||

# + ":"

|

# + ":"

|

||||||

@ -96,43 +86,47 @@ class ConnectManager:

|

|||||||

# + CFG.LOCAL_DB_HOST

|

# + CFG.LOCAL_DB_HOST

|

||||||

# + ":"

|

# + ":"

|

||||||

# + str(CFG.LOCAL_DB_PORT),

|

# + str(CFG.LOCAL_DB_PORT),

|

||||||

# engine_args={"pool_size": 10, "pool_recycle": 3600, "echo": True},

|

# engine_args={

|

||||||

|

# "pool_size": CFG.LOCAL_DB_POOL_SIZE,

|

||||||

|

# "pool_recycle": 3600,

|

||||||

|

# "echo": True,

|

||||||

|

# },

|

||||||

# )

|

# )

|

||||||

dbs = default_mysql.get_database_list()

|

# dbs = default_mysql.get_database_list()

|

||||||

for name in dbs:

|

# for name in dbs:

|

||||||

self.storage.add_url_db(

|

# self.storage.add_url_db(

|

||||||

name,

|

# name,

|

||||||

DBType.Mysql.value(),

|

# DBType.Mysql.value(),

|

||||||

CFG.LOCAL_DB_HOST,

|

# CFG.LOCAL_DB_HOST,

|

||||||

CFG.LOCAL_DB_PORT,

|

# CFG.LOCAL_DB_PORT,

|

||||||

CFG.LOCAL_DB_USER,

|

# CFG.LOCAL_DB_USER,

|

||||||

CFG.LOCAL_DB_PASSWORD,

|

# CFG.LOCAL_DB_PASSWORD,

|

||||||

"",

|

# "",

|

||||||

)

|

# )

|

||||||

db_type = DBType.of_db_type(CFG.LOCAL_DB_TYPE)

|

# db_type = DBType.of_db_type(CFG.LOCAL_DB_TYPE)

|

||||||

if db_type.is_file_db():

|

# if db_type.is_file_db():

|

||||||

db_name = CFG.LOCAL_DB_NAME

|

# db_name = CFG.LOCAL_DB_NAME

|

||||||

db_type = CFG.LOCAL_DB_TYPE

|

# db_type = CFG.LOCAL_DB_TYPE

|

||||||

db_path = CFG.LOCAL_DB_PATH

|

# db_path = CFG.LOCAL_DB_PATH

|

||||||

if not db_type:

|

# if not db_type:

|

||||||

# Default file database type

|

# # Default file database type

|

||||||

db_type = DBType.DuckDb.value()

|

# db_type = DBType.DuckDb.value()

|

||||||

if not db_name:

|

# if not db_name:

|

||||||

db_type, db_name = self._parse_file_db_info(db_type, db_path)

|

# db_type, db_name = self._parse_file_db_info(db_type, db_path)

|

||||||

if db_name:

|

# if db_name:

|

||||||

print(

|

# print(

|

||||||

f"Add file db, db_name: {db_name}, db_type: {db_type}, db_path: {db_path}"

|

# f"Add file db, db_name: {db_name}, db_type: {db_type}, db_path: {db_path}"

|

||||||

)

|

# )

|

||||||

self.storage.add_file_db(db_name, db_type, db_path)

|

# self.storage.add_file_db(db_name, db_type, db_path)

|

||||||

|

|

||||||

def _parse_file_db_info(self, db_type: str, db_path: str):

|

# def _parse_file_db_info(self, db_type: str, db_path: str):

|

||||||

if db_type is None or db_type == DBType.DuckDb.value():

|

# if db_type is None or db_type == DBType.DuckDb.value():

|

||||||

# file db is duckdb

|

# # file db is duckdb

|

||||||

db_name = self.storage.get_file_db_name(db_path)

|

# db_name = self.storage.get_file_db_name(db_path)

|

||||||

db_type = DBType.DuckDb.value()

|

# db_type = DBType.DuckDb.value()

|

||||||

else:

|

# else:

|

||||||

db_name = DBType.parse_file_db_name_from_path(db_type, db_path)

|

# db_name = DBType.parse_file_db_name_from_path(db_type, db_path)

|

||||||

return db_type, db_name

|

# return db_type, db_name

|

||||||

|

|

||||||

def get_connect(self, db_name):

|

def get_connect(self, db_name):

|

||||||

db_config = self.storage.get_db_config(db_name)

|

db_config = self.storage.get_db_config(db_name)

|

||||||

@ -178,7 +172,7 @@ class ConnectManager:

|

|||||||

return self.storage.get_db_list()

|

return self.storage.get_db_list()

|

||||||

|

|

||||||

def get_db_names(self):

|

def get_db_names(self):

|

||||||

return self.storage.get_db_names()

|

return self.storage.get_by_name()

|

||||||

|

|

||||||

def delete_db(self, db_name: str):

|

def delete_db(self, db_name: str):

|

||||||

return self.storage.delete_db(db_name)

|

return self.storage.delete_db(db_name)

|

||||||

|

|||||||

@ -16,6 +16,27 @@ class EmbeddingEngine:

|

|||||||

2.similar_search: similarity search from vector_store

|

2.similar_search: similarity search from vector_store

|

||||||

how to use reference:https://db-gpt.readthedocs.io/en/latest/modules/knowledge.html

|

how to use reference:https://db-gpt.readthedocs.io/en/latest/modules/knowledge.html

|

||||||

how to integrate:https://db-gpt.readthedocs.io/en/latest/modules/knowledge/pdf/pdf_embedding.html

|

how to integrate:https://db-gpt.readthedocs.io/en/latest/modules/knowledge/pdf/pdf_embedding.html

|

||||||

|

Example:

|

||||||

|

.. code-block:: python

|

||||||

|

embedding_model = "your_embedding_model"

|

||||||

|

vector_store_type = "Chroma"

|

||||||

|

chroma_persist_path = "your_persist_path"

|

||||||

|

vector_store_config = {

|

||||||

|

"vector_store_name": "document_test",

|

||||||

|

"vector_store_type": vector_store_type,

|

||||||

|

"chroma_persist_path": chroma_persist_path,

|

||||||

|

}

|

||||||

|

|

||||||

|

# it can be .md,.pdf,.docx, .csv, .html

|

||||||

|

document_path = "your_path/test.md"

|

||||||

|

embedding_engine = EmbeddingEngine(

|

||||||

|

knowledge_source=document_path,

|

||||||

|

knowledge_type=KnowledgeType.DOCUMENT.value,

|

||||||

|

model_name=embedding_model,

|

||||||

|

vector_store_config=vector_store_config,

|

||||||

|

)

|

||||||

|

# embedding document content to vector store

|

||||||

|

embedding_engine.knowledge_embedding()

|

||||||

"""

|

"""

|

||||||

|

|

||||||

def __init__(

|

def __init__(

|

||||||

@ -74,7 +95,8 @@ class EmbeddingEngine:

|

|||||||

)

|

)

|

||||||

|

|

||||||

def similar_search(self, text, topk):

|

def similar_search(self, text, topk):

|

||||||

"""vector db similar search

|

"""vector db similar search in vector database.

|

||||||

|

Return topk docs.

|

||||||

Args:

|

Args:

|

||||||

- text: query text

|

- text: query text

|

||||||

- topk: top k

|

- topk: top k

|

||||||

@ -84,8 +106,22 @@ class EmbeddingEngine:

|

|||||||

)

|

)

|

||||||

# https://github.com/chroma-core/chroma/issues/657

|

# https://github.com/chroma-core/chroma/issues/657

|

||||||

ans = vector_client.similar_search(text, topk)

|

ans = vector_client.similar_search(text, topk)

|

||||||

# except NotEnoughElementsException:

|

return ans

|

||||||

# ans = vector_client.similar_search(text, 1)

|

|

||||||

|

def similar_search_with_scores(self, text, topk, score_threshold: float = 0.3):

|

||||||

|

"""

|

||||||

|

similar_search_with_score in vector database.

|

||||||

|

Return docs and relevance scores in the range [0, 1].

|

||||||

|

Args:

|

||||||

|

doc(str): query text

|

||||||

|

topk(int): return docs nums. Defaults to 4.

|

||||||

|

score_threshold(float): score_threshold: Optional, a floating point value between 0 to 1 to

|

||||||

|

filter the resulting set of retrieved docs,0 is dissimilar, 1 is most similar.

|

||||||

|

"""

|

||||||

|

vector_client = VectorStoreConnector(

|

||||||

|

self.vector_store_config["vector_store_type"], self.vector_store_config

|

||||||

|

)

|

||||||

|

ans = vector_client.similar_search_with_scores(text, topk, score_threshold)

|

||||||

return ans

|

return ans

|

||||||

|

|

||||||

def vector_exist(self):

|

def vector_exist(self):

|

||||||

|

|||||||

0

pilot/rag/extracter/__init__.py

Normal file

0

pilot/rag/extracter/__init__.py

Normal file

19

pilot/rag/extracter/base.py

Normal file

19

pilot/rag/extracter/base.py

Normal file

@ -0,0 +1,19 @@

|

|||||||

|

from abc import abstractmethod, ABC

|

||||||

|

from typing import List, Dict

|

||||||

|

|

||||||

|

from langchain.schema import Document

|

||||||

|

|

||||||

|

|

||||||

|

class Extractor(ABC):

|

||||||

|

"""Extractor Base class, it's apply for Summary Extractor, Keyword Extractor, Triplets Extractor, Question Extractor, etc."""

|

||||||

|

|

||||||

|

def __init__(self):

|

||||||

|

pass

|

||||||

|

|

||||||

|

@abstractmethod

|

||||||

|

def extract(self, chunks: List[Document]) -> List[Dict]:

|

||||||

|

"""Extracts chunks.

|

||||||

|

|

||||||

|

Args:

|

||||||

|

nodes (Sequence[Document]): nodes to extract metadata from

|

||||||

|

"""

|

||||||

95

pilot/rag/extracter/summary.py

Normal file

95

pilot/rag/extracter/summary.py

Normal file

@ -0,0 +1,95 @@

|

|||||||

|

from typing import List, Dict

|

||||||

|

|

||||||

|

from langchain.schema import Document

|

||||||

|

|

||||||

|

from pilot.common.llm_metadata import LLMMetadata

|

||||||

|

from pilot.rag.extracter.base import Extractor

|

||||||

|

|

||||||

|

|

||||||

|

class SummaryExtractor(Extractor):

|

||||||

|

"""Summary Extractor, it can extract document summary."""

|

||||||

|

|

||||||

|

def __init__(self, model_name: str = None, llm_metadata: LLMMetadata = None):

|

||||||

|

self.model_name = (model_name,)

|

||||||

|

self.llm_metadata = (llm_metadata or LLMMetadata,)

|

||||||

|

|

||||||

|

async def extract(self, chunks: List[Document]) -> str:

|

||||||

|

"""async document extract summary

|

||||||

|

Args:

|

||||||

|

- model_name: str

|

||||||

|

- chunk_docs: List[Document]

|

||||||

|

"""

|

||||||

|

texts = [doc.page_content for doc in chunks]

|

||||||

|

from pilot.common.prompt_util import PromptHelper

|

||||||

|

|

||||||

|

prompt_helper = PromptHelper()

|

||||||

|

from pilot.scene.chat_knowledge.summary.prompt import prompt

|

||||||

|

|

||||||

|

texts = prompt_helper.repack(prompt_template=prompt.template, text_chunks=texts)

|

||||||

|

return await self._mapreduce_extract_summary(

|

||||||

|

docs=texts, model_name=self.model_name, llm_metadata=self.llm_metadata

|

||||||

|

)

|

||||||

|

|

||||||

|

async def _mapreduce_extract_summary(

|

||||||