Mirror of https://github.com/hpcaitech/ColossalAI.git, synced 2025-04-29 20:25:29 +00:00

Compare commits

134 Commits

| SHA1 |
|---|
| 46ed5d856b |
| 7ecdf9a211 |
| 44d4053fec |
| 6d676ee0e9 |
| 56fe130b15 |
| f32861ccc5 |
| b9e60559b8 |
| 7595c453a5 |
| 53834b74b9 |
| 0171884664 |
| 9379cbd668 |
| 24dee8f0b7 |
| f73ae55394 |
| f8b9e88484 |
| d54642a263 |
| d20c8ffd97 |
| ce0ec40811 |
| 5ff5323538 |
| 014837e725 |
| ec73f1b5e2 |
| 5c09d726a6 |
| 2b415e5999 |
| 17062c83b9 |
| ca0aa2365d |
| 97e60cbbcb |
| 5b094a836b |
| ee81366cac |
| 479067e9bc |
| 7fdef9fd6b |
| a9bedc7a43 |
| af06d162cf |
| 836992438f |
| 8b0ed61490 |
| 5f82bfa636 |
| fa9d0318e4 |
| 130229fdcb |
| aaafb38851 |
| e994c64568 |
| de3d371f65 |
| 8d826a336e |
| 6280cb18b8 |
| ab856fd308 |
| 8ecff0cb7f |
| 8fddbab04c |
| 152162a80e |
| cf519dac6a |
| 5caad13055 |
| e0c68ab6d3 |
| 184a653704 |
| 5fa657f0a1 |
| eb69e640e5 |
| b90835bd32 |
| 8e08c27e19 |
| d4a436051d |
| 5a03d2696d |
| cc40fe0e6f |
| c2fe3137e2 |
| a2596519fd |
| 30a9443132 |
| 7a60161035 |
| a15ab139ad |
| 13ffa08cfa |
| 2f583c1549 |
| c2e8f61592 |
| 89a9a600bc |
| 4294ae83bb |
| 80a8ca916a |
| dee63cc5ef |
| 6d6cafabe2 |
| b10339df7c |
| 19baab5fd5 |
| 58d8b8a2dd |
| 5ddad486ca |
| 3b1d7d1ae8 |
| 2bcd0b6844 |
| cd61353bae |
| 62c13e7969 |
| dcd41d0973 |
| 83cf2f84fb |
| bc7eeade33 |
| fd92789af2 |
| 6be9862aaf |
| 3dc08c8a5a |
| 8ff7d0c780 |
| fe9208feac |
| 3201377e94 |
| 23199e34cc |
| d891e50617 |
| e1e86f9f1f |
| 4c8e85ee0d |
| 703bb5c18d |
| 4e0e99bb6a |
| 1507a7528f |
| 0002ae5956 |
| dc2cdaf3e8 |
| efe3042bb2 |
| 6b2c506fc5 |
| 5ecc27e150 |
| f98384aef6 |
| 646b3c5a90 |
| b635dd0669 |
| 3532f77b90 |
| 3fab92166e |
| f4daf04270 |
| 6705dad41b |
| 91ed32c256 |
| 6fb1322db1 |
| 65c8297710 |
| cfd9eda628 |
| cbaa104216 |
| dabc2e7430 |
| f9546ba0be |
| 4fa6b9509c |
| 63314ce4e4 |
| 10e4f7da72 |
| 37e35230ff |
| 827ef3ee9a |
| bdb125f83f |
| f20b066c59 |
| b582319273 |
| 0ad3129cb9 |
| 0b14a5512e |
| 696fced0d7 |
| dc032172c3 |
| f393867cff |
| 6eb8832366 |
| 683179cefd |
| 0a01e2a453 |
| 216d54e374 |
| fdd84b9087 |
| a35a078f08 |
| 13946c4448 |
| c54c4fcd15 |
| 8fd25d6e09 |

@@ -1,3 +1,3 @@
-2.2.2-12.1.0
 2.3.0-12.1.0
 2.4.0-12.4.1
+2.5.1-12.4.1

@@ -1,11 +1,11 @@
 {
 "build": [
 {
-"torch_command": "pip install torch==2.1.0 torchvision==0.16.0 torchaudio==2.1.0 --index-url https://download.pytorch.org/whl/cu121",
+"torch_command": "pip install torch==2.3.0 torchvision==0.18.0 torchaudio==2.3.0 --index-url https://download.pytorch.org/whl/cu121",
 "cuda_image": "hpcaitech/cuda-conda:12.1"
 },
 {
-"torch_command": "pip install torch==2.4.0 torchvision==0.19.0 torchaudio==2.4.0 --index-url https://download.pytorch.org/whl/cu124",
+"torch_command": "pip install torch==2.5.1 torchvision==0.20.1 torchaudio==2.5.1 --index-url https://download.pytorch.org/whl/cu124",
 "cuda_image": "hpcaitech/cuda-conda:12.4"
 }
 ]
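The two hunks above pair each PyTorch release with a CUDA version, using entries such as `2.5.1-12.4.1`. They then install the wheels from the matching `cu121`/`cu124` index.

The sketch below is a minimal illustration of that correspondence. It assumes two things:
- every entry has the form `<torch>-<cuda>`;
- the wheel tag is `cu` followed by the CUDA major and minor digits.

`parse_entry` and `index_url` are hypothetical helpers, not part of ColossalAI.

```python
from typing import Tuple


def parse_entry(entry: str) -> Tuple[str, str, str]:
    """Split a hypothetical "<torch>-<cuda>" entry into (torch, cuda, wheel tag)."""
    torch_version, cuda_version = entry.strip().split("-", 1)
    # Assumed convention: the wheel tag keeps only the CUDA major and minor numbers.
    major, minor = cuda_version.split(".")[:2]
    return torch_version, cuda_version, f"cu{major}{minor}"


def index_url(cuda_tag: str) -> str:
    # Same URL pattern as the --index-url values in the torch_command strings.
    return f"https://download.pytorch.org/whl/{cuda_tag}"


if __name__ == "__main__":
    for line in ["2.3.0-12.1.0", "2.4.0-12.4.1", "2.5.1-12.4.1"]:
        torch_v, cuda_v, tag = parse_entry(line)
        print(f"torch {torch_v} / CUDA {cuda_v} -> {index_url(tag)}")
```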

.github/ISSUE_TEMPLATE/bug-report.yml (vendored, 20 lines changed)
@@ -15,6 +15,26 @@ body:
 options:
 - label: I have searched the existing issues
 required: true
+
+- type: checkboxes
+attributes:
+label: The bug has not been fixed in the latest main branch
+options:
+- label: I have checked the latest main branch
+required: true
+
+- type: dropdown
+id: share_script
+attributes:
+label: Do you feel comfortable sharing a concise (minimal) script that reproduces the error? :)
+description: If not, please share your setting/training config, and/or point to the line in the repo that throws the error.
+If the issue is not easily reproducible by us, it will reduce the likelihood of getting responses.
+options:
+- Yes, I will share a minimal reproducible script.
+- No, I prefer not to share.
+validations:
+required: true
+
 - type: textarea
 attributes:
 label: 🐛 Describe the bug

.github/workflows/build_on_pr.yml (vendored, 11 lines changed)
@@ -87,10 +87,10 @@ jobs:
 name: Build and Test Colossal-AI
 needs: detect
 if: needs.detect.outputs.anyLibraryFileChanged == 'true'
-runs-on: [self-hosted, gpu]
+runs-on: ubuntu-latest
 container:
-image: hpcaitech/pytorch-cuda:2.2.2-12.1.0
+image: image-cloud.luchentech.com/hpcaitech/pytorch-cuda:2.2.2-12.1.0
-options: --gpus all --rm -v /dev/shm -v /data/scratch:/data/scratch
+options: --gpus all --shm-size=2g --rm -v /dev/shm -v /data/scratch:/data/scratch
 timeout-minutes: 90
 defaults:
 run:
@@ -117,7 +117,7 @@ jobs:
 cd TensorNVMe
 conda install cmake
 pip install -r requirements.txt
-DISABLE_URING=1 pip install -v .
+DISABLE_URING=1 pip install -v --no-cache-dir .
 
 - name: Store TensorNVMe Cache
 run: |
@@ -166,6 +166,7 @@ jobs:
 LD_LIBRARY_PATH: /github/home/.tensornvme/lib:/usr/local/nvidia/lib:/usr/local/nvidia/lib64
 LLAMA_PATH: /data/scratch/llama-tiny
 MOE_TENSOR_PATH: /data/scratch/moe_tensors
+HF_ENDPOINT: https://hf-mirror.com
 
 - name: Collate artifact
 env:
@@ -199,7 +200,7 @@ jobs:
 fi
 
 - name: Upload test coverage artifact
-uses: actions/upload-artifact@v3
+uses: actions/upload-artifact@v4
 with:
 name: report
 path: report/

.github/workflows/build_on_schedule.yml (vendored, 1 line changed)
@@ -70,6 +70,7 @@ jobs:
 LD_LIBRARY_PATH: /github/home/.tensornvme/lib:/usr/local/nvidia/lib:/usr/local/nvidia/lib64
 LLAMA_PATH: /data/scratch/llama-tiny
 MOE_TENSOR_PATH: /data/scratch/moe_tensors
+HF_ENDPOINT: https://hf-mirror.com
 
 - name: Notify Lark
 id: message-preparation

@@ -79,3 +79,4 @@ jobs:
 LD_LIBRARY_PATH: /github/home/.tensornvme/lib
 LLAMA_PATH: /data/scratch/llama-tiny
 MOE_TENSOR_PATH: /data/scratch/moe_tensors
+HF_ENDPOINT: https://hf-mirror.com

@@ -73,3 +73,4 @@ jobs:
 LD_LIBRARY_PATH: /github/home/.tensornvme/lib
 LLAMA_PATH: /data/scratch/llama-tiny
 MOE_TENSOR_PATH: /data/scratch/moe_tensors
+HF_ENDPOINT: https://hf-mirror.com

@@ -67,6 +67,7 @@ jobs:
 LD_LIBRARY_PATH: /github/home/.tensornvme/lib
 LLAMA_PATH: /data/scratch/llama-tiny
 MOE_TENSOR_PATH: /data/scratch/moe_tensors
+HF_ENDPOINT: https://hf-mirror.com
 
 - name: Notify Lark
 id: message-preparation

.github/workflows/doc_check_on_pr.yml (vendored, 1 line changed)
@@ -58,6 +58,7 @@ jobs:
 # there is no main branch, so it's safe to checkout the main branch from the merged branch
 # docer will rebase the remote main branch to the merged branch, so we have to config user
 - name: Make the merged branch main
+
 run: |
 cd ColossalAI
 git checkout -b main

@@ -49,6 +49,7 @@ jobs:
 # we need to install the requirements.txt first
 # as test-pypi may not contain the distributions for libs listed in the txt file
 pip install -r requirements/requirements.txt
+pip install -U setuptools==68.2.2 wheel
 pip install --index-url https://test.pypi.org/simple/ --extra-index-url https://pypi.python.org/pypi colossalai==$VERSION
 env:
 VERSION: ${{ steps.prep-version.outputs.version }}

.github/workflows/run_chatgpt_examples.yml (vendored, 4 lines changed)
@@ -31,13 +31,12 @@ jobs:
 
 - name: Install Colossal-AI
 run: |
-BUILD_EXT=1 pip install --no-cache-dir -v -e .
+pip install --no-cache-dir -v -e .
 
 - name: Install ChatGPT
 run: |
 cd applications/ColossalChat
 pip install --no-cache-dir -v .
-export BUILD_EXT=1
 pip install --no-cache-dir -r examples/requirements.txt
 
 - name: Install Transformers
@@ -61,5 +60,6 @@ jobs:
 PRETRAINED_MODEL_PATH: ./models
 SFT_DATASET: ./sft_data
 PROMPT_DATASET: ./prompt_data
+PROMPT_RLVR_DATASET: ./prompt_data
 PREFERENCE_DATASET: ./preference_data
 KTO_DATASET: ./kto_data

@@ -15,21 +15,21 @@ repos:
 args: ["--profile", "black"] # avoid conflict with black
 
 - repo: https://github.com/psf/black-pre-commit-mirror
-rev: 24.8.0
+rev: 24.10.0
 hooks:
 - id: black
 name: black formatter
 args: ['--line-length=120', '--target-version=py37', '--target-version=py38', '--target-version=py39','--target-version=py310']
 
 - repo: https://github.com/pre-commit/mirrors-clang-format
-rev: v18.1.8
+rev: v19.1.5
 hooks:
 - id: clang-format
 name: clang formatter
 types_or: [c++, c]
 
 - repo: https://github.com/pre-commit/pre-commit-hooks
-rev: v4.6.0
+rev: v5.0.0
 hooks:
 - id: check-yaml
 - id: check-merge-conflict

README.md (30 lines changed)
@@ -9,7 +9,7 @@
 <a href="https://www.colossalai.org/"> Documentation </a> |
 <a href="https://github.com/hpcaitech/ColossalAI/tree/main/examples"> Examples </a> |
 <a href="https://github.com/hpcaitech/ColossalAI/discussions"> Forum </a> |
-<a href="https://cloud.luchentech.com/">GPU Cloud Playground </a> |
+<a href="https://colossalai.org/zh-Hans/docs/get_started/bonus/">GPU Cloud Playground </a> |
 <a href="https://hpc-ai.com/blog"> Blog </a></h3>
 
 [](https://github.com/hpcaitech/ColossalAI/stargazers)
@@ -25,16 +25,34 @@
 
 </div>
 
+## Get Started with Colossal-AI Without Setup
+
+Access high-end, on-demand compute for your research instantly—no setup needed.
+
+Sign up now and get $10 in credits!
+
+Limited Academic Bonuses:
+
+* Top up $1,000 and receive 300 credits
+* Top up $500 and receive 100 credits
+
+<div align="center">
+<a href="https://hpc-ai.com/?utm_source=github&utm_medium=social&utm_campaign=promotion-colossalai">
+<img src="https://github.com/hpcaitech/public_assets/blob/main/colossalai/img/2-2.gif" width="850" />
+</a>
+</div>
+
+
 ## Latest News
+* [2025/02] [DeepSeek 671B Fine-Tuning Guide Revealed—Unlock the Upgraded DeepSeek Suite with One Click, AI Players Ecstatic!](https://company.hpc-ai.com/blog/shocking-release-deepseek-671b-fine-tuning-guide-revealed-unlock-the-upgraded-deepseek-suite-with-one-click-ai-players-ecstatic)
+* [2024/12] [The development cost of video generation models has saved by 50%! Open-source solutions are now available with H200 GPU vouchers](https://company.hpc-ai.com/blog/the-development-cost-of-video-generation-models-has-saved-by-50-open-source-solutions-are-now-available-with-h200-gpu-vouchers) [[code]](https://github.com/hpcaitech/Open-Sora/blob/main/scripts/train.py) [[vouchers]](https://colossalai.org/zh-Hans/docs/get_started/bonus/)
+* [2024/10] [How to build a low-cost Sora-like app? Solutions for you](https://company.hpc-ai.com/blog/how-to-build-a-low-cost-sora-like-app-solutions-for-you)
+* [2024/09] [Singapore Startup HPC-AI Tech Secures 50 Million USD in Series A Funding to Build the Video Generation AI Model and GPU Platform](https://company.hpc-ai.com/blog/singapore-startup-hpc-ai-tech-secures-50-million-usd-in-series-a-funding-to-build-the-video-generation-ai-model-and-gpu-platform)
+* [2024/09] [Reducing AI Large Model Training Costs by 30% Requires Just a Single Line of Code From FP8 Mixed Precision Training Upgrades](https://company.hpc-ai.com/blog/reducing-ai-large-model-training-costs-by-30-requires-just-a-single-line-of-code-from-fp8-mixed-precision-training-upgrades)
 * [2024/06] [Open-Sora Continues Open Source: Generate Any 16-Second 720p HD Video with One Click, Model Weights Ready to Use](https://hpc-ai.com/blog/open-sora-from-hpc-ai-tech-team-continues-open-source-generate-any-16-second-720p-hd-video-with-one-click-model-weights-ready-to-use)
 * [2024/05] [Large AI Models Inference Speed Doubled, Colossal-Inference Open Source Release](https://hpc-ai.com/blog/colossal-inference)
 * [2024/04] [Open-Sora Unveils Major Upgrade: Embracing Open Source with Single-Shot 16-Second Video Generation and 720p Resolution](https://hpc-ai.com/blog/open-soras-comprehensive-upgrade-unveiled-embracing-16-second-video-generation-and-720p-resolution-in-open-source)
 * [2024/04] [Most cost-effective solutions for inference, fine-tuning and pretraining, tailored to LLaMA3 series](https://hpc-ai.com/blog/most-cost-effective-solutions-for-inference-fine-tuning-and-pretraining-tailored-to-llama3-series)
-* [2024/03] [314 Billion Parameter Grok-1 Inference Accelerated by 3.8x, Efficient and Easy-to-Use PyTorch+HuggingFace version is Here](https://hpc-ai.com/blog/314-billion-parameter-grok-1-inference-accelerated-by-3.8x-efficient-and-easy-to-use-pytorchhuggingface-version-is-here)
-* [2024/03] [Open-Sora: Revealing Complete Model Parameters, Training Details, and Everything for Sora-like Video Generation Models](https://hpc-ai.com/blog/open-sora-v1.0)
-* [2024/03] [Open-Sora:Sora Replication Solution with 46% Cost Reduction, Sequence Expansion to Nearly a Million](https://hpc-ai.com/blog/open-sora)
-* [2024/01] [Inference Performance Improved by 46%, Open Source Solution Breaks the Length Limit of LLM for Multi-Round Conversations](https://hpc-ai.com/blog/Colossal-AI-SwiftInfer)
-* [2023/07] [HPC-AI Tech Raises 22 Million USD in Series A Funding](https://www.hpc-ai.tech/blog/hpc-ai-tech-raises-22-million-usd-in-series-a-funding-to-fuel-team-expansion-and-business-growth)
 
 ## Table of Contents
 <ul>

@@ -100,7 +100,7 @@ LLaMA3_Conv = Conversation(
 messages=[],
 offset=0,
 sep_style=SeparatorStyle.ADD_BOS_EOS_TOKEN,
-seps=["<|begin_of_text|>", "<|end_of_text|>"],
+seps=["<|begin_of_text|>", "<|eot_id|>"],
 )
 
 default_conversation = LLaMA3_Conv

@@ -88,7 +88,7 @@ def supervised_tokenize_sft(
 
 assert (
 tokenizer.bos_token == conversation_template.seps[0] and tokenizer.eos_token == conversation_template.seps[1]
-), "`bos_token` and `eos_token` should be the same with `conversation_template.seps`."
+), f"`bos_token`{tokenizer.bos_token} and `eos_token`{tokenizer.eos_token} should be the same with `conversation_template.seps`{conversation_template.seps}."
 
 if ignore_index is None:
 ignore_index = IGNORE_INDEX
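In the LLaMA3 template, turns now end with `<|eot_id|>` instead of `<|end_of_text|>`. The updated assertion message also prints the actual tokens, so a template/tokenizer mismatch is easier to diagnose.

Below is a minimal stand-alone sketch of the same check. `check_seps` is a hypothetical name. Whether a given Llama 3 checkpoint's tokenizer reports `<|eot_id|>` as its `eos_token` depends on that checkpoint's tokenizer configuration; the sketch simply assumes it does.

```python
from typing import Sequence


def check_seps(bos_token: str, eos_token: str, seps: Sequence[str]) -> None:
    """Hypothetical stand-in for the assertion in supervised_tokenize_sft."""
    # Mirrors the updated message: include the actual values on mismatch.
    assert bos_token == seps[0] and eos_token == seps[1], (
        f"`bos_token`{bos_token} and `eos_token`{eos_token} "
        f"should be the same with `conversation_template.seps`{list(seps)}."
    )


if __name__ == "__main__":
    llama3_seps = ["<|begin_of_text|>", "<|eot_id|>"]
    check_seps("<|begin_of_text|>", "<|eot_id|>", llama3_seps)  # matches, no error
    try:
        # A tokenizer whose eos_token is still <|end_of_text|> no longer matches.
        check_seps("<|begin_of_text|>", "<|end_of_text|>", llama3_seps)
    except AssertionError as err:
        print(err)
```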

@@ -43,6 +43,7 @@ def save_checkpoint(
 step: int,
 batch_size: int,
 coordinator: DistCoordinator,
+use_lora: bool = False,
 ) -> None:
 """
 Save model checkpoint, optimizer, LR scheduler and intermedidate running states.
@@ -51,7 +52,10 @@ def save_checkpoint(
 save_dir = os.path.join(save_dir, f"epoch-{epoch}_step-{step}")
 os.makedirs(os.path.join(save_dir, "modeling"), exist_ok=True)
 
-booster.save_model(model, os.path.join(save_dir, "modeling"), shard=True)
+if use_lora:
+booster.save_lora_as_pretrained(model, os.path.join(save_dir, "modeling"))
+else:
+booster.save_model(model, os.path.join(save_dir, "modeling"), shard=True)
 
 booster.save_optimizer(optimizer, os.path.join(save_dir, "optimizer"), shard=True)
 booster.save_lr_scheduler(lr_scheduler, os.path.join(save_dir, "lr_scheduler"))

@@ -21,6 +21,7 @@ from colossal_llama.utils.ckpt_io import load_checkpoint, save_checkpoint
 from colossal_llama.utils.froze import freeze_non_embeds_parameters
 from colossal_llama.utils.neftune_patch import activate_neftune, deactivate_neftune
 from colossal_llama.utils.utils import all_reduce_mean, format_numel_str, get_model_numel
+from peft import LoraConfig
 from torch.utils.tensorboard import SummaryWriter
 from tqdm import tqdm
 from transformers import AutoModelForCausalLM, AutoTokenizer

@@ -65,7 +66,7 @@ def train(args) -> None:
 initial_scale=2**16,
 max_norm=args.grad_clip,
 enable_gradient_accumulation=(args.accumulation_steps > 1),
-enable_fused_normalization=torch.cuda.is_available(),
+enable_fused_normalization=get_accelerator().is_available(),
 enable_flash_attention=args.use_flash_attn,
 )
 elif args.plugin == "gemini_auto":

@@ -75,7 +76,7 @@ def train(args) -> None:
 initial_scale=2**16,
 max_norm=args.grad_clip,
 enable_gradient_accumulation=(args.accumulation_steps > 1),
-enable_fused_normalization=torch.cuda.is_available(),
+enable_fused_normalization=get_accelerator().is_available(),
 enable_flash_attention=args.use_flash_attn,
 )
 elif args.plugin == "zero2":

@@ -101,10 +102,9 @@ def train(args) -> None:
 sequence_parallelism_mode=args.sp_mode,
 zero_stage=args.zero_stage,
 enable_flash_attention=args.use_flash_attn,
-enable_fused_normalization=torch.cuda.is_available(),
+enable_fused_normalization=get_accelerator().is_available(),
 enable_sequence_parallelism=args.enable_sequence_parallelism,
 cpu_offload=True if args.zero_stage >= 1 and args.zero_cpu_offload else False,
-parallel_output=False,
 max_norm=args.grad_clip,
 precision=args.mixed_precision,
 microbatch_size=args.microbatch_size,

@@ -117,11 +117,17 @@ def train(args) -> None:
 # ======================================================
 # Initialize Tokenizer, Dataset, Collator and Dataloader
 # ======================================================
-tokenizer = AutoTokenizer.from_pretrained(args.pretrained)
+tokenizer = AutoTokenizer.from_pretrained(args.pretrained, trust_remote_code=True)
 if args.pad_token == "eos":
-tokenizer.pad_token = tokenizer.eos_token
+try:
+tokenizer.pad_token = tokenizer.eos_token
+except AttributeError:
+coordinator.print_on_master(f"pad_token can't be set")
 elif args.pad_token == "unk":
-tokenizer.pad_token = tokenizer.unk_token
+try:
+tokenizer.pad_token = tokenizer.unk_token
+except AttributeError:
+coordinator.print_on_master(f"pad_token can't be set")
 tokenizer.add_bos_token = False
 tokenizer.add_eos_token = False
 
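The new try/except lets training continue when a tokenizer refuses assignment to `pad_token`. This can happen with tokenizers loaded through `trust_remote_code=True` that define `pad_token` as a read-only property. That is an assumption about such tokenizers; the diff itself does not say so.

Below is a minimal sketch using two hypothetical tokenizer classes:
- `set_pad_token` is an illustrative name, not part of the training script.
- `log` stands in for `coordinator.print_on_master`.

```python
from typing import Callable


class PlainTokenizer:
    """Hypothetical tokenizer whose pad_token can be reassigned."""
    eos_token = "</s>"
    unk_token = "<unk>"
    pad_token = None


class ReadOnlyPadTokenizer:
    """Hypothetical tokenizer exposing pad_token as a property with no setter."""
    eos_token = "</s>"
    unk_token = "<unk>"

    @property
    def pad_token(self) -> str:
        return "<pad>"


def set_pad_token(tokenizer, choice: str, log: Callable[[str], None] = print) -> bool:
    """Alias pad_token to the eos or unk token; log and continue if assignment fails."""
    source = {"eos": "eos_token", "unk": "unk_token"}.get(choice)
    if source is None:
        return False
    try:
        tokenizer.pad_token = getattr(tokenizer, source)
        return True
    except AttributeError:
        # Assigning to a property without a setter raises AttributeError.
        log("pad_token can't be set")
        return False


if __name__ == "__main__":
    print(set_pad_token(PlainTokenizer(), "eos"))
    print(set_pad_token(ReadOnlyPadTokenizer(), "unk"))
```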

@@ -164,33 +170,31 @@ def train(args) -> None:
 # ======================================================
 # Initialize Model, Objective, Optimizer and LR Scheduler
 # ======================================================
+# When training the ChatGLM model, LoRA and gradient checkpointing are incompatible.
 init_ctx = (
 LazyInitContext(default_device=get_current_device())
-if isinstance(plugin, (GeminiPlugin, HybridParallelPlugin))
+if isinstance(plugin, (GeminiPlugin, HybridParallelPlugin)) and args.lora_rank == 0
 else nullcontext()
 )
 with init_ctx:
-if args.use_flash_attn:
-model = AutoModelForCausalLM.from_pretrained(
-args.pretrained,
-attn_implementation="flash_attention_2",
-torch_dtype=torch.bfloat16 if args.mixed_precision == "bf16" else torch.float16,
-trust_remote_code=True,
-)
-else:
-model = AutoModelForCausalLM.from_pretrained(
-args.pretrained,
-torch_dtype=torch.bfloat16 if args.mixed_precision == "bf16" else torch.float16,
-trust_remote_code=True,
-)
+model = AutoModelForCausalLM.from_pretrained(
+args.pretrained,
+torch_dtype=torch.bfloat16 if args.mixed_precision == "bf16" else torch.float16,
+trust_remote_code=True,
+)
 # Freeze part of parameters.
 if args.freeze_non_embeds_params:
 freeze_non_embeds_parameters(model=model)
+
+if args.lora_rank > 0:
+lora_config = LoraConfig(task_type="CAUSAL_LM", r=args.lora_rank, lora_alpha=32, lora_dropout=0.1)
+model = booster.enable_lora(model, lora_config=lora_config)
+
 # this is essential, otherwise the grad checkpoint will not work.
 model.train()
 
 if args.use_grad_checkpoint:
-model.gradient_checkpointing_enable()
+model.gradient_checkpointing_enable(gradient_checkpointing_kwargs={"use_reentrant": False})
 coordinator.print_on_master(msg="Gradient checkpointing enabled successfully")
 
 model_numel = get_model_numel(model)

@@ -327,6 +331,7 @@ def train(args) -> None:
 step=step + 1,
 batch_size=args.batch_size,
 coordinator=coordinator,
+use_lora=(args.lora_rank > 0),
 )
 coordinator.print_on_master(
 f"Saved checkpoint at epoch {epoch} step {step + 1} at folder {args.save_dir}"

@@ -371,44 +376,45 @@ def train(args) -> None:

|

|||||||

total_loss.fill_(0.0)

|

total_loss.fill_(0.0)

|

||||||

pbar.update()

|

pbar.update()

|

||||||

|

|

||||||

# Save modeling.

|

# Save modeling.

|

||||||

save_model_condition = (

|

save_model_condition = (

|

||||||

args.save_interval > 0 and (step + 1) % (args.save_interval * args.accumulation_steps) == 0

|

args.save_interval > 0 and (step + 1) % (args.save_interval * args.accumulation_steps) == 0

|

||||||

)

|

|

||||||

|

|

||||||

if not args.skip_save_each_epoch:

|

|

||||||

save_model_condition = save_model_condition or (step + 1) == len(dataloader)

|

|

||||||

|

|

||||||

if save_model_condition and not args.benchmark:

|

|

||||||

coordinator.print_on_master("\nStart saving model checkpoint with running states")

|

|

||||||

|

|

||||||

if args.use_neft:

|

|

||||||

coordinator.print_on_master("Deactivate NEFTune before saving model.")

|

|

||||||

deactivate_neftune(model, handle)

|

|

||||||

|

|

||||||

accelerator.empty_cache()

|

|

||||||

save_checkpoint(

|

|

||||||

save_dir=args.save_dir,

|

|

||||||

booster=booster,

|

|

||||||

model=model,

|

|

||||||

optimizer=optimizer,

|

|

||||||

lr_scheduler=lr_scheduler,

|

|

||||||

epoch=epoch,

|

|

||||||

step=step + 1,

|

|

||||||

batch_size=args.batch_size,

|

|

||||||

coordinator=coordinator,

|

|

||||||

)

|

|

||||||

coordinator.print_on_master(

|

|

||||||

f"Saved checkpoint at epoch {epoch} step {step + 1} at folder {args.save_dir}"

|

|

||||||

)

|

)

|

||||||

|

|

||||||

if args.use_neft:

|

if not args.skip_save_each_epoch:

|

||||||

coordinator.print_on_master("Activate NEFTune.")

|

save_model_condition = save_model_condition or (step + 1) == len(dataloader)

|

||||||

model, handle = activate_neftune(model)

|

|

||||||

|

|

||||||

# Delete cache.

|

if save_model_condition and not args.benchmark:

|

||||||

# del batch, batch_labels, batch_output, loss

|

coordinator.print_on_master("\nStart saving model checkpoint with running states")

|

||||||

accelerator.empty_cache()

|

|

||||||

|

if args.use_neft:

|

||||||

|

coordinator.print_on_master("Deactivate NEFTune before saving model.")

|

||||||

|

deactivate_neftune(model, handle)

|

||||||

|

|

||||||

|

accelerator.empty_cache()

|

||||||

|

save_checkpoint(

|

||||||

|

save_dir=args.save_dir,

|

||||||

|

booster=booster,

|

||||||

|

model=model,

|

||||||

|

optimizer=optimizer,

|

||||||

|

lr_scheduler=lr_scheduler,

|

||||||

|

epoch=epoch,

|

||||||

|

step=step + 1,

|

||||||

|

batch_size=args.batch_size,

|

||||||

|

coordinator=coordinator,

|

||||||

|

use_lora=(args.lora_rank > 0),

|

||||||

|

)

|

||||||

|

coordinator.print_on_master(

|

||||||

|

f"Saved checkpoint at epoch {epoch} step {step + 1} at folder {args.save_dir}"

|

||||||

|

)

|

||||||

|

|

||||||

|

if args.use_neft:

|

||||||

|

coordinator.print_on_master("Activate NEFTune.")

|

||||||

|

model, handle = activate_neftune(model)

|

||||||

|

|

||||||

|

# Delete cache.

|

||||||

|

# del batch, batch_labels, batch_output, loss

|

||||||

|

accelerator.empty_cache()

|

||||||

|

|

||||||

# the continue epochs are not resumed, so we need to reset the sampler start index and start step

|

# the continue epochs are not resumed, so we need to reset the sampler start index and start step

|

||||||

dataloader.sampler.set_start_index(start_index=0)

|

dataloader.sampler.set_start_index(start_index=0)

|

||||||
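
The reworked saving logic in this hunk reduces to a single predicate. The function below is a hypothetical plain-Python restatement of that logic. The name `should_save_checkpoint` and its parameters are illustrative, and `num_batches` stands in for `len(dataloader)`. Writing it this way makes it easy to check which steps trigger a save.

```python
def should_save_checkpoint(
    step: int,
    num_batches: int,
    save_interval: int,
    accumulation_steps: int,
    skip_save_each_epoch: bool = False,
    benchmark: bool = False,
) -> bool:
    """Restates the `save_model_condition` logic from the diff (0-indexed `step`)."""
    # Periodic save: every `save_interval` optimizer updates, i.e. every
    # save_interval * accumulation_steps micro-steps.
    save = save_interval > 0 and (step + 1) % (save_interval * accumulation_steps) == 0
    # End-of-epoch save, unless explicitly skipped.
    if not skip_save_each_epoch:
        save = save or (step + 1) == num_batches
    # Benchmark runs never write checkpoints.
    return save and not benchmark


# 10 micro-batches per epoch, save every 2 updates, 2 accumulation steps.
print([s for s in range(10) if should_save_checkpoint(s, 10, 2, 2)])  # [3, 7, 9]
```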

@@ -522,6 +528,7 @@ if __name__ == "__main__":

|

|||||||

parser.add_argument(

|

parser.add_argument(

|

||||||

"--microbatch_size", type=int, default=1, help="Batch size for each process in PP, used for 3d plugin."

|

"--microbatch_size", type=int, default=1, help="Batch size for each process in PP, used for 3d plugin."

|

||||||

)

|

)

|

||||||

|

parser.add_argument("--lora_rank", type=int, default=0, help="lora rank when using lora to train.")

|

||||||

|

|

||||||

# Additional arguments for benchmark.

|

# Additional arguments for benchmark.

|

||||||

parser.add_argument("--num_samples", type=int, default=500, help="Number of samples for benchmarking.")

|

parser.add_argument("--num_samples", type=int, default=500, help="Number of samples for benchmarking.")

|

||||||
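
The new `--lora_rank` flag also acts as an on/off switch. The training code only builds a `LoraConfig` when the rank is positive, and it passes `use_lora=(args.lora_rank > 0)` to `save_checkpoint`. Below is a minimal argparse sketch of that convention; the helper `lora_enabled` is hypothetical.

```python
import argparse
from typing import List

parser = argparse.ArgumentParser()
# Same definition as in the diff: 0 (the default) disables LoRA.
parser.add_argument("--lora_rank", type=int, default=0, help="lora rank when using lora to train.")


def lora_enabled(argv: List[str]) -> bool:
    """Return True when the parsed --lora_rank is positive."""
    args = parser.parse_args(argv)
    return args.lora_rank > 0


print(lora_enabled([]))                    # False
print(lora_enabled(["--lora_rank", "8"]))  # True
```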

|

|||||||

applications/ColossalChat/.gitignore

@@ -158,6 +158,7 @@ temp/

|

|||||||

applications/ColossalChat/logs

|

applications/ColossalChat/logs

|

||||||

applications/ColossalChat/models

|

applications/ColossalChat/models

|

||||||

applications/ColossalChat/sft_data

|

applications/ColossalChat/sft_data

|

||||||

|

applications/ColossalChat/kto_data

|

||||||

applications/ColossalChat/prompt_data

|

applications/ColossalChat/prompt_data

|

||||||

applications/ColossalChat/preference_data

|

applications/ColossalChat/preference_data

|

||||||

applications/ColossalChat/temp

|

applications/ColossalChat/temp

|

||||||

|

|||||||

@@ -7,31 +7,23 @@

|

|||||||

## Table of Contents

|

## Table of Contents

|

||||||

|

|

||||||

- [Table of Contents](#table-of-contents)

|

- [Table of Contents](#table-of-contents)

|

||||||

- [What is ColossalChat and Coati ?](#what-is-colossalchat-and-coati-)

|

- [What is ColossalChat?](#what-is-colossalchat)

|

||||||

- [Online demo](#online-demo)

|

- [Online demo](#online-demo)

|

||||||

- [Install](#install)

|

- [Install](#install)

|

||||||

- [Install the environment](#install-the-environment)

|

- [Install the environment](#install-the-environment)

|

||||||

- [Install the Transformers](#install-the-transformers)

|

- [Install the Transformers](#install-the-transformers)

|

||||||

- [How to use?](#how-to-use)

|

- [Introduction](#introduction)

|

||||||

- [Supervised datasets collection](#step-1-data-collection)

|

- [Supervised datasets collection](#step-1-data-collection)

|

||||||

- [RLHF Training Stage1 - Supervised instructs tuning](#rlhf-training-stage1---supervised-instructs-tuning)

|

- [RLHF Training Stage1 - Supervised instructs tuning](#rlhf-training-stage1---supervised-instructs-tuning)

|

||||||

- [RLHF Training Stage2 - Training reward model](#rlhf-training-stage2---training-reward-model)

|

- [RLHF Training Stage2 - Training reward model](#rlhf-training-stage2---training-reward-model)

|

||||||

- [RLHF Training Stage3 - Training model with reinforcement learning by human feedback](#rlhf-training-stage3---proximal-policy-optimization)

|

- [RLHF Training Stage3 - Training model with reinforcement learning by human feedback](#rlhf-training-stage3---proximal-policy-optimization)

|

||||||

|

- [Alternative Option for RLHF: GRPO](#alternative-option-for-rlhf-group-relative-policy-optimization-grpo)

|

||||||

|

- [Alternative Option For RLHF: DPO](#alternative-option-for-rlhf-direct-preference-optimization-dpo)

|

||||||

|

- [Alternative Option For RLHF: SimPO](#alternative-option-for-rlhf-simple-preference-optimization-simpo)

|

||||||

|

- [Alternative Option For RLHF: ORPO](#alternative-option-for-rlhf-odds-ratio-preference-optimization-orpo)

|

||||||

|

- [Alternative Option For RLHF: KTO](#alternative-option-for-rlhf-kahneman-tversky-optimization-kto)

|

||||||

|

- [SFT for DeepSeek V3/R1](#sft-for-deepseek-v3)

|

||||||

- [Inference Quantization and Serving - After Training](#inference-quantization-and-serving---after-training)

|

- [Inference Quantization and Serving - After Training](#inference-quantization-and-serving---after-training)

|

||||||

- [Coati7B examples](#coati7b-examples)

|

|

||||||

- [Generation](#generation)

|

|

||||||

- [Open QA](#open-qa)

|

|

||||||

- [Limitation for LLaMA-finetuned models](#limitation)

|

|

||||||

- [Limitation of dataset](#limitation)

|

|

||||||

- [Alternative Option For RLHF: DPO](#alternative-option-for-rlhf-direct-preference-optimization-dpo)

|

|

||||||

- [Alternative Option For RLHF: SimPO](#alternative-option-for-rlhf-simple-preference-optimization-simpo)

|

|

||||||

- [Alternative Option For RLHF: ORPO](#alternative-option-for-rlhf-odds-ratio-preference-optimization-orpo)

|

|

||||||

- [Alternative Option For RLHF: KTO](#alternative-option-for-rlhf-kahneman-tversky-optimization-kto)

|

|

||||||

- [FAQ](#faq)

|

|

||||||

- [How to save/load checkpoint](#faq)

|

|

||||||

- [How to train with limited resources](#faq)

|

|

||||||

- [The Plan](#the-plan)

|

|

||||||

- [Real-time progress](#real-time-progress)

|

|

||||||

- [Invitation to open-source contribution](#invitation-to-open-source-contribution)

|

- [Invitation to open-source contribution](#invitation-to-open-source-contribution)

|

||||||

- [Quick Preview](#quick-preview)

|

- [Quick Preview](#quick-preview)

|

||||||

- [Authors](#authors)

|

- [Authors](#authors)

|

||||||

@@ -40,9 +32,9 @@

|

|||||||

|

|

||||||

---

|

---

|

||||||

|

|

||||||

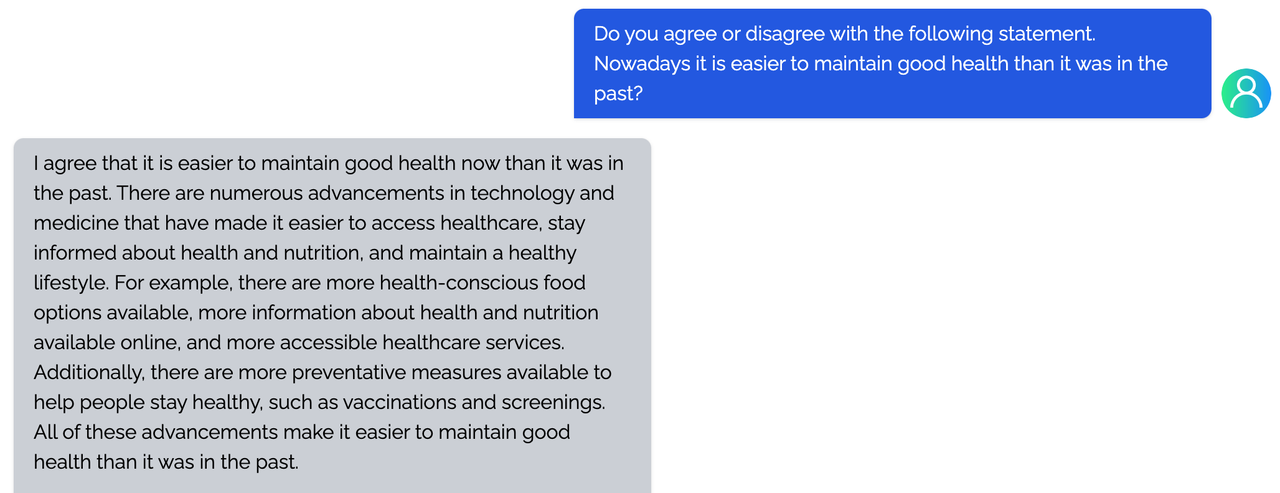

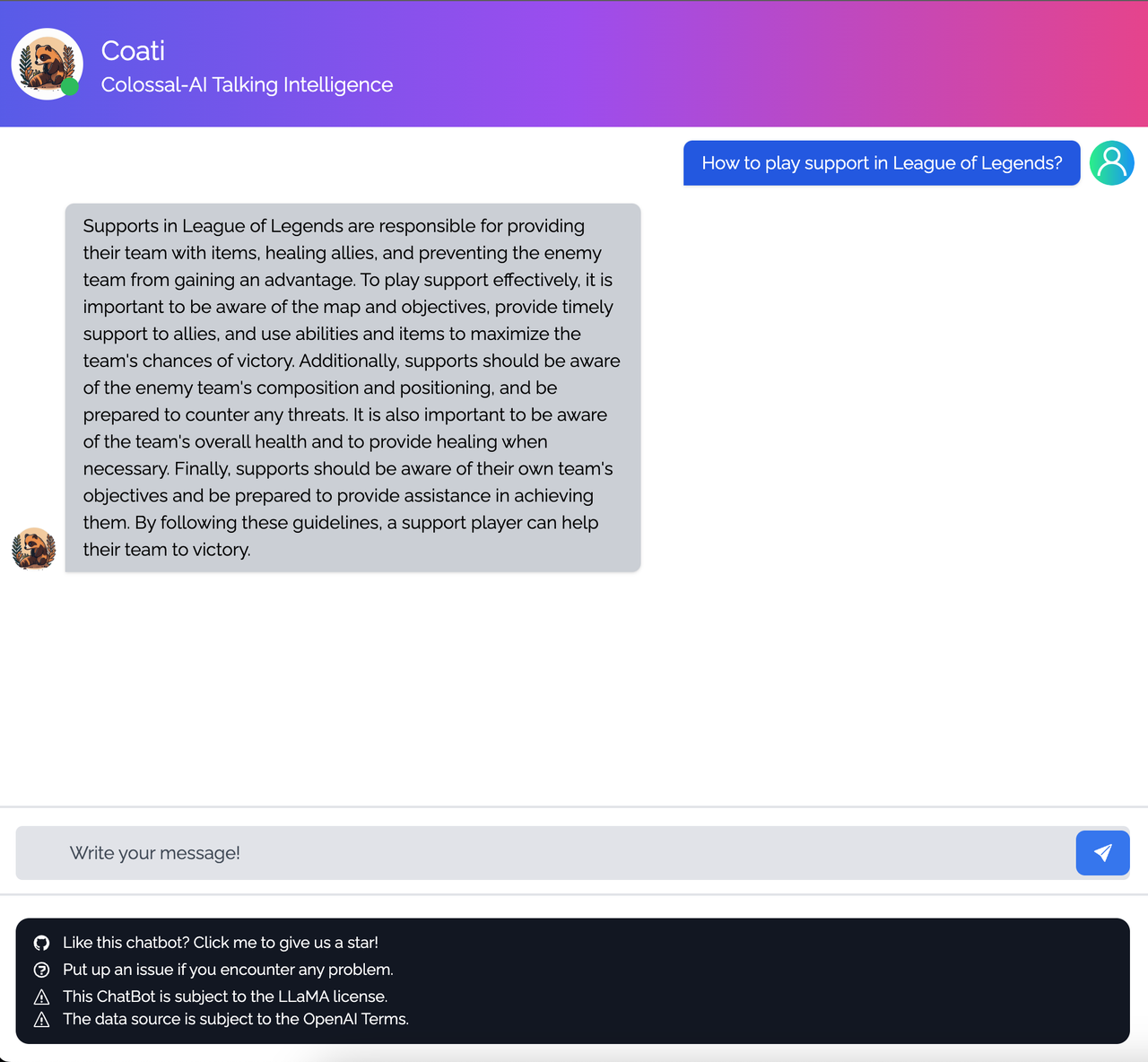

## What Is ColossalChat And Coati ?

|

## What is ColossalChat?

|

||||||

|

|

||||||

[ColossalChat](https://github.com/hpcaitech/ColossalAI/tree/main/applications/Chat) is the project to implement LLM with RLHF, powered by the [Colossal-AI](https://github.com/hpcaitech/ColossalAI) project.

|

[ColossalChat](https://github.com/hpcaitech/ColossalAI/tree/main/applications/ColossalChat) is a project to implement LLM with RLHF, powered by the [Colossal-AI](https://github.com/hpcaitech/ColossalAI).

|

||||||

|

|

||||||

Coati stands for `ColossalAI Talking Intelligence`. It is the name for the module implemented in this project and is also the name of the large language model developed by the ColossalChat project.

|

Coati stands for `ColossalAI Talking Intelligence`. It is the name for the module implemented in this project and is also the name of the large language model developed by the ColossalChat project.

|

||||||

|

|

||||||

@@ -53,8 +45,6 @@ The Coati package provides a unified large language model framework that has imp

|

|||||||

- Supervised instructions fine-tuning

|

- Supervised instructions fine-tuning

|

||||||

- Training reward model

|

- Training reward model

|

||||||

- Reinforcement learning with human feedback

|

- Reinforcement learning with human feedback

|

||||||

- Quantization inference

|

|

||||||

- Fast model deploying

|

|

||||||

- Perfectly integrated with the Hugging Face ecosystem, a high degree of model customization

|

- Perfectly integrated with the Hugging Face ecosystem, a high degree of model customization

|

||||||

|

|

||||||

<div align="center">

|

<div align="center">

|

||||||

@@ -114,77 +104,16 @@ cd $COLOSSAL_AI_ROOT/applications/ColossalChat

|

|||||||

pip install .

|

pip install .

|

||||||

```

|

```

|

||||||

|

|

||||||

## How To Use?

|

## Introduction

|

||||||

|

|

||||||

### RLHF Training Stage1 - Supervised Instructs Tuning

|

### RLHF Training Stage1 - Supervised Instructs Tuning

|

||||||

|

|

||||||

Stage1 is supervised instructs fine-tuning (SFT). This step is a crucial part of the RLHF training process, as it involves training a machine learning model using human-provided instructions to learn the initial behavior for the task at hand. Here's a detailed guide on how to SFT your LLM with ColossalChat. More details can be found in [example guideline](./examples/README.md).

|

Stage1 is supervised instructs fine-tuning (SFT). This step is a crucial part of the RLHF training process, as it involves training a machine learning model using human-provided instructions to learn the initial behavior for the task at hand. More details can be found in [example guideline](./examples/README.md).

|

||||||

|

|

||||||

#### Step 1: Data Collection

|

|

||||||

The first step in Stage 1 is to collect a dataset of human demonstrations in the following format.

|

|

||||||

|

|

||||||

```json

|

|

||||||

[

|

|

||||||

{"messages":

|

|

||||||

[

|

|

||||||

{

|

|

||||||

"from": "user",

|

|

||||||

"content": "what are some pranks with a pen i can do?"

|

|

||||||

},

|

|

||||||

{

|

|

||||||

"from": "assistant",

|

|

||||||

"content": "Are you looking for practical joke ideas?"

|

|

||||||

},

|

|

||||||

]

|

|

||||||

},

|

|

||||||

]

|

|

||||||

```

|

|

||||||
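
The sample above is illustrative. Its trailing commas after the last element are not valid strict JSON, and Python's `json` module rejects them. The sketch below is a minimal validator that assumes the layout shown: a list of objects, each with a `messages` list of `from`/`content` turns. `validate_sft_samples` is a hypothetical helper and is not part of Coati.

```python
import json
from typing import Any, List


def validate_sft_samples(samples: List[Any]) -> None:
    """Raise ValueError if a sample does not match {"messages": [{"from": ..., "content": ...}, ...]}."""
    for i, sample in enumerate(samples):
        messages = sample.get("messages") if isinstance(sample, dict) else None
        if not isinstance(messages, list) or not messages:
            raise ValueError(f"sample {i}: 'messages' must be a non-empty list")
        for j, turn in enumerate(messages):
            ok = isinstance(turn, dict) and isinstance(turn.get("from"), str) and isinstance(turn.get("content"), str)
            if not ok:
                raise ValueError(f"sample {i}, turn {j}: expected string 'from' and 'content' fields")


# Same sample as above, without the trailing commas.
raw = """[{"messages": [
    {"from": "user", "content": "what are some pranks with a pen i can do?"},
    {"from": "assistant", "content": "Are you looking for practical joke ideas?"}
]}]"""
validate_sft_samples(json.loads(raw))  # passes silently
```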

|

|

||||||

#### Step 2: Preprocessing

|

|

||||||

Once you have collected your SFT dataset, you will need to preprocess it. This involves four steps: data cleaning, data deduplication, formatting and tokenization. In this section, we will focus on formatting and tokenization.

|

|

||||||

|

|

||||||

We provide a flexible way for users to set the conversation template used to format chat data, based on the chat template feature of Hugging Face. Please follow the [example guideline](./examples/README.md) to learn how to format and tokenize data.

|

|

||||||
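
Hugging Face chat templates consume messages as `{"role": ..., "content": ...}` dictionaries. The dataset above instead stores the speaker under `from`, and the examples in this README use both `user` and `human` for the user side. The sketch below converts between the two, assuming that mapping. `ROLE_MAP` and `to_chat_template_messages` are hypothetical names.

```python
from typing import Dict, List

# Hypothetical mapping from the dataset's "from" values to chat-template roles.
ROLE_MAP = {"human": "user", "user": "user", "assistant": "assistant", "system": "system"}


def to_chat_template_messages(messages: List[Dict[str, str]]) -> List[Dict[str, str]]:
    """Rename `from` to `role` (normalizing speaker names) and keep `content` as-is."""
    converted = []
    for turn in messages:
        role = ROLE_MAP.get(turn["from"])
        if role is None:
            raise ValueError(f"unknown speaker: {turn['from']!r}")
        converted.append({"role": role, "content": turn["content"]})
    return converted


print(to_chat_template_messages([{"from": "human", "content": "hi"}]))
```

The converted list could then be passed to a tokenizer's `apply_chat_template` method from the `transformers` library. The example guideline describes how Coati itself handles this step.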

|

|

||||||

#### Step 3: Training

|

|

||||||

Choose a suitable model architecture for your task. Note that your model should be compatible with the tokenizer that you used to tokenize the SFT dataset. You can run [train_sft.sh](./examples/training_scripts/train_sft.sh) to start a supervised instructs fine-tuning. More details can be found in [example guideline](./examples/README.md).

|

|

||||||

|

|

||||||

### RLHF Training Stage2 - Training Reward Model

|

### RLHF Training Stage2 - Training Reward Model

|

||||||

|

|

||||||

Stage2 trains a reward model. Different outputs for the same prompt are ranked manually, the rankings are converted into corresponding scores, and these scores supervise the training of the reward model.

|

Stage2 trains a reward model. Different outputs for the same prompt are ranked manually, the rankings are converted into corresponding scores, and these scores supervise the training of the reward model.

|

||||||
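
A common way to turn such rankings into a training signal is the pairwise (Bradley-Terry style) loss popularized by InstructGPT: `-log(sigmoid(r_chosen - r_rejected))`. We use this loss form here as an assumption for illustration only; the example guideline lists the losses Coati actually provides. The scalar sketch below computes it in a numerically stable way.

```python
import math


def pairwise_reward_loss(r_chosen: float, r_rejected: float) -> float:
    """-log(sigmoid(r_chosen - r_rejected)), i.e. log(1 + exp(-(r_chosen - r_rejected)))."""
    diff = r_chosen - r_rejected
    if diff >= 0:
        return math.log1p(math.exp(-diff))
    # Rewritten to avoid overflowing exp() when the rejected score is much larger.
    return -diff + math.log1p(math.exp(diff))


print(round(pairwise_reward_loss(0.0, 0.0), 4))  # 0.6931 (no preference learned yet)
```

The loss shrinks toward zero as the reward model scores the chosen response increasingly above the rejected one.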

|

|

||||||

#### Step 1: Data Collection

|

|

||||||

The preference dataset format used to train the reward model is shown below.

|

|

||||||

|

|

||||||

```json

|

|

||||||

[

|

|

||||||

{"context": [

|

|

||||||

{

|

|

||||||

"from": "human",

|

|

||||||

"content": "Introduce butterflies species in Oregon."

|

|

||||||

}

|

|

||||||

],

|

|

||||||

"chosen": [

|

|

||||||

{

|

|

||||||

"from": "assistant",

|

|

||||||

"content": "About 150 species of butterflies live in Oregon, with about 100 species are moths..."

|

|

||||||

},

|

|

||||||

],

|

|

||||||

"rejected": [

|

|

||||||

{

|

|

||||||

"from": "assistant",

|

|

||||||

"content": "Are you interested in just the common butterflies? There are a few common ones which will be easy to find..."

|

|

||||||

},

|

|

||||||

]

|

|

||||||

},

|

|

||||||

]

|

|

||||||

```

|

|

||||||
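
The sketch below is a hypothetical sanity check for the preference layout shown above. Every sample needs non-empty `context`, `chosen` and `rejected` lists of `from`/`content` turns. The extra check that the two responses differ is our own assumption and is not a documented Coati rule.

```python
from typing import Any, Dict

REQUIRED_KEYS = ("context", "chosen", "rejected")


def validate_preference_sample(sample: Dict[str, Any]) -> None:
    """Raise ValueError if the sample does not follow the context/chosen/rejected layout."""
    for key in REQUIRED_KEYS:
        turns = sample.get(key)
        if not isinstance(turns, list) or not turns:
            raise ValueError(f"'{key}' must be a non-empty list of turns")
        for turn in turns:
            ok = isinstance(turn, dict) and isinstance(turn.get("from"), str) and isinstance(turn.get("content"), str)
            if not ok:
                raise ValueError(f"'{key}' turns need string 'from' and 'content' fields")
    # Illustrative extra check: identical responses carry no preference signal.
    if sample["chosen"] == sample["rejected"]:
        raise ValueError("'chosen' and 'rejected' are identical")


sample = {
    "context": [{"from": "human", "content": "Introduce butterflies species in Oregon."}],
    "chosen": [{"from": "assistant", "content": "About 150 species of butterflies live in Oregon..."}],
    "rejected": [{"from": "assistant", "content": "Are you interested in just the common butterflies?..."}],
}
validate_preference_sample(sample)  # passes silently
```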

|

|

||||||

#### Step 2: Preprocessing

|

|

||||||

As in step 2 of the SFT stage, we format the reward data into the same structured format. You can run [prepare_preference_dataset.sh](./examples/data_preparation_scripts/prepare_preference_dataset.sh) to prepare the preference data for reward model training.

|

|

||||||

|

|

||||||

#### Step 3: Training

|

|

||||||

You can run [train_rm.sh](./examples/training_scripts/train_rm.sh) to start the reward model training. More details can be found in [example guideline](./examples/README.md).

|

|

||||||

|

|

||||||

### RLHF Training Stage3 - Proximal Policy Optimization

|

### RLHF Training Stage3 - Proximal Policy Optimization

|

||||||

|

|

||||||

In Stage3 we use a reinforcement learning algorithm, Proximal Policy Optimization (PPO), which is the most complex part of the training process:

|

In Stage3 we use a reinforcement learning algorithm, Proximal Policy Optimization (PPO), which is the most complex part of the training process:

|

||||||

@@ -193,85 +122,25 @@ In stage3 we will use reinforcement learning algorithm--- Proximal Policy Optimi

|

|||||||

<img src="https://raw.githubusercontent.com/hpcaitech/public_assets/main/applications/chat/stage-3.jpeg" width=800/>

|

<img src="https://raw.githubusercontent.com/hpcaitech/public_assets/main/applications/chat/stage-3.jpeg" width=800/>

|

||||||

</p>

|

</p>

|

||||||

|

|

||||||

#### Step 1: Data Collection

|

|

||||||

PPO uses two kinds of training data: the prompt data and the SFT data (optional). The prompt dataset is mandatory. Each sample in the prompt dataset ends with a line from "human", so the "assistant" needs to generate a response to the "human". You can still use conversations that end with a line from the "assistant"; in that case, the last line is dropped. Here is an example of the prompt dataset format.

|

|

||||||

|

|

||||||

```json

|

### Alternative Option For RLHF: Direct Preference Optimization (DPO)

|

||||||

[

|

|

||||||

{"messages":

|

|

||||||

[

|

|

||||||

{

|

|

||||||

"from": "human",

|

|

||||||

"content": "what are some pranks with a pen i can do?"

|

|

||||||

}

|

|

||||||

]

|

|

||||||

},

|

|

||||||

]

|

|

||||||

```

|

|

||||||
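
The rule described above can be sketched as follows: a prompt must end with a `human` turn, and a trailing `assistant` turn is dropped. `to_prompt` is a hypothetical helper, and treating `user` as equivalent to `human` is an assumption.

```python
from typing import Dict, List

Turn = Dict[str, str]


def to_prompt(messages: List[Turn]) -> List[Turn]:
    """Return the conversation prefix that the actor should continue."""
    # A conversation that ends with the assistant loses its last line.
    if messages and messages[-1]["from"] == "assistant":
        messages = messages[:-1]
    # What remains must end with the human side, so the actor has something to answer.
    if not messages or messages[-1]["from"] not in ("human", "user"):
        raise ValueError("a prompt must end with a human turn")
    return messages


conv = [{"from": "human", "content": "what are some pranks with a pen i can do?"},
        {"from": "assistant", "content": "Are you looking for practical joke ideas?"}]
print(to_prompt(conv))  # only the human turn remains
```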

|

|

||||||

#### Step 2: Data Preprocessing

|

|

||||||

To prepare the prompt dataset for PPO training, simply run [prepare_prompt_dataset.sh](./examples/data_preparation_scripts/prepare_prompt_dataset.sh).

|

|

||||||

|

|

||||||

#### Step 3: Training

|

|

||||||

You can run [train_ppo.sh](./examples/training_scripts/train_ppo.sh) to start PPO training. The arguments specific to PPO are listed below; please refer to the training configuration section for the other training arguments. More details can be found in the [example guideline](./examples/README.md).

|

|

||||||

|

|

||||||

```bash

|

|

||||||

--pretrain $PRETRAINED_MODEL_PATH \

|

|

||||||

--rm_pretrain $PRETRAINED_MODEL_PATH \ # reward model architecture

|

|

||||||

--tokenizer_dir $PRETRAINED_TOKENIZER_PATH \

|

|

||||||

--rm_checkpoint_path $REWARD_MODEL_PATH \ # reward model checkpoint path

|

|

||||||

--prompt_dataset ${prompt_dataset[@]} \ # list of strings, the prompt dataset

|

|

||||||

--ptx_dataset ${ptx_dataset[@]} \ # list of strings, the SFT data used in the SFT stage

|

|

||||||

--ptx_batch_size 1 \ # batch size for calculating the ptx loss

|

|

||||||

--ptx_coef 0.0 \ # non-zero if the ptx loss is enabled

|

|

||||||

--num_episodes 2000 \ # number of episodes to train

|

|

||||||

--num_collect_steps 1 \

|

|

||||||

--num_update_steps 1 \

|

|

||||||

--experience_batch_size 8 \

|

|

||||||

--train_batch_size 4 \

|

|

||||||

--accumulation_steps 2

|

|

||||||

```

|

|

||||||

|

|

||||||

Each episode has two phases: the collect phase and the update phase. During the collect phase, we collect experiences (answers generated by the actor) and store them in the ExperienceBuffer. During the update phase, the data in the ExperienceBuffer is used to update the parameters of the actor and the critic.

|

|

||||||

|

|

||||||

- Without tensor parallelism,

|

|

||||||

```

|

|

||||||

experience buffer size

|

|

||||||

= num_process * num_collect_steps * experience_batch_size

|

|

||||||

= train_batch_size * accumulation_steps * num_process

|

|

||||||

```

|

|

||||||

|

|

||||||

- With tensor parallelism,

|

|

||||||

```

|

|

||||||

num_tp_group = num_process / tp

|

|

||||||

experience buffer size

|

|

||||||

= num_tp_group * num_collect_steps * experience_batch_size

|

|

||||||

= train_batch_size * accumulation_steps * num_tp_group

|

|

||||||

```

|

|

||||||
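
Plugging the example arguments above into these formulas gives a quick consistency check. The sketch below is a hypothetical helper. It assumes the two expressions are meant to agree and that `num_process` is divisible by `tp`. It computes the buffer size and raises an error if the collect and update sides disagree.

```python
def experience_buffer_size(
    num_process: int,
    num_collect_steps: int,
    experience_batch_size: int,
    train_batch_size: int,
    accumulation_steps: int,
    tp: int = 1,
) -> int:
    """Experience buffer size; tp=1 reduces to the formula without tensor parallelism."""
    if num_process % tp != 0:
        raise ValueError("num_process must be divisible by tp")
    num_groups = num_process // tp  # num_tp_group; equals num_process when tp == 1
    collected = num_groups * num_collect_steps * experience_batch_size
    consumed = train_batch_size * accumulation_steps * num_groups
    if collected != consumed:
        raise ValueError(f"collect phase produces {collected} samples but update phase consumes {consumed}")
    return collected


# Values from the train_ppo.sh snippet above, assuming 8 processes.
print(experience_buffer_size(8, 1, 8, 4, 2))        # 64
print(experience_buffer_size(8, 1, 8, 4, 2, tp=2))  # 32
```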

|

|

||||||

## Alternative Option For RLHF: Direct Preference Optimization (DPO)

|

|

||||||

For those seeking an alternative to Reinforcement Learning from Human Feedback (RLHF), Direct Preference Optimization (DPO) presents a compelling option. As detailed in this [paper](https://arxiv.org/abs/2305.18290), DPO offers a low-cost way to perform RLHF and usually requires fewer computational resources than PPO. Read this [README](./examples/README.md) for more information.

|

For those seeking an alternative to Reinforcement Learning from Human Feedback (RLHF), Direct Preference Optimization (DPO) presents a compelling option. As detailed in this [paper](https://arxiv.org/abs/2305.18290), DPO offers a low-cost way to perform RLHF and usually requires fewer computational resources than PPO. Read this [README](./examples/README.md) for more information.

|

||||||
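
The DPO objective from the linked paper measures how much more the policy prefers the chosen response over the rejected one, compared with a frozen reference model: `-log sigmoid(beta * ((logp_w - ref_logp_w) - (logp_l - ref_logp_l)))`. Below is a scalar sketch in plain Python. It assumes the sequence-level log-probabilities (sums over response tokens) are already computed. The function names and the `beta=0.1` default are illustrative and are not Coati's API.

```python
import math


def log_sigmoid(z: float) -> float:
    """Numerically stable log(sigmoid(z))."""
    if z >= 0:
        return -math.log1p(math.exp(-z))
    return z - math.log1p(math.exp(z))


def dpo_loss(
    policy_chosen_logp: float,
    policy_rejected_logp: float,
    ref_chosen_logp: float,
    ref_rejected_logp: float,
    beta: float = 0.1,
) -> float:
    """DPO loss for a single preference pair."""
    chosen_margin = policy_chosen_logp - ref_chosen_logp      # implicit reward of y_w (up to beta)
    rejected_margin = policy_rejected_logp - ref_rejected_logp  # implicit reward of y_l (up to beta)
    return -log_sigmoid(beta * (chosen_margin - rejected_margin))


# Policy identical to the reference: the loss starts at log(2).
print(round(dpo_loss(-12.0, -15.0, -12.0, -15.0), 4))  # 0.6931
```

Only the policy receives gradients in practice; the reference log-probabilities act as constants.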

|

|

||||||

### DPO Training Stage1 - Supervised Instructs Tuning

|

### Alternative Option For RLHF: Simple Preference Optimization (SimPO)

|

||||||

|

|

||||||

Please refer to the [SFT section](#rlhf-training-stage1---supervised-instructs-tuning) in the PPO part.

|

|

||||||

|

|

||||||

### DPO Training Stage2 - DPO Training

|

|

||||||

#### Step 1: Data Collection & Preparation

|

|

||||||

For DPO training, you only need the preference dataset. Please follow the instruction in the [preference dataset preparation section](#rlhf-training-stage2---training-reward-model) to prepare the preference data for DPO training.

|

|

||||||

|

|

||||||

#### Step 2: Training

|

|

||||||

You can run [train_dpo.sh](./examples/training_scripts/train_dpo.sh) to start DPO training. More details can be found in the [example guideline](./examples/README.md).

|

|

||||||

|

|

||||||

## Alternative Option For RLHF: Simple Preference Optimization (SimPO)

|

|

||||||

Simple Preference Optimization (SimPO), from this [paper](https://arxiv.org/pdf/2405.14734), is similar to DPO but abandons the reference model, which makes training more efficient. It also adds a reward-shaping term called the target reward margin to improve training stability, and uses length normalization to better align with the inference process. Read this [README](./examples/README.md) for more information.

|

Simple Preference Optimization (SimPO), from this [paper](https://arxiv.org/pdf/2405.14734), is similar to DPO but abandons the reference model, which makes training more efficient. It also adds a reward-shaping term called the target reward margin to improve training stability, and uses length normalization to better align with the inference process. Read this [README](./examples/README.md) for more information.

|

||||||
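
In the SimPO paper's formulation, the reward is the length-normalized log-probability scaled by `beta`, and the target reward margin `gamma` is subtracted inside the sigmoid: `-log sigmoid(beta * logp_w / |y_w| - beta * logp_l / |y_l| - gamma)`. No reference model appears. Below is a scalar sketch of that loss. The defaults `beta=2.0` and `gamma=0.5` are placeholder values, not Coati's defaults.

```python
import math


def log_sigmoid(z: float) -> float:
    """Numerically stable log(sigmoid(z))."""
    if z >= 0:
        return -math.log1p(math.exp(-z))
    return z - math.log1p(math.exp(z))


def simpo_loss(
    chosen_logp_sum: float,
    chosen_len: int,
    rejected_logp_sum: float,
    rejected_len: int,
    beta: float = 2.0,
    gamma: float = 0.5,
) -> float:
    """SimPO loss for one preference pair; log-prob sums are over response tokens."""
    chosen_reward = beta * chosen_logp_sum / chosen_len  # length-normalized reward
    rejected_reward = beta * rejected_logp_sum / rejected_len
    return -log_sigmoid(chosen_reward - rejected_reward - gamma)


# Same total log-prob, but the chosen answer is twice as long,
# so its per-token average (and therefore its reward) is higher.
print(round(simpo_loss(-10.0, 10, -10.0, 5), 4))  # 0.2014
```

Because of `gamma`, two responses with equal per-token likelihood still produce a loss above `log(2)`, which pushes the chosen reward to exceed the rejected one by a margin.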

|

|

||||||

## Alternative Option For RLHF: Odds Ratio Preference Optimization (ORPO)

|

### Alternative Option For RLHF: Odds Ratio Preference Optimization (ORPO)

|

||||||

Odds Ratio Preference Optimization (ORPO), from this [paper](https://arxiv.org/pdf/2403.07691), is a reference-model-free alignment method. It uses a mixture of the SFT loss and a reinforcement learning loss calculated from an odds-ratio-based implicit reward, which makes training more efficient and stable. Read this [README](./examples/README.md) for more information.

|

Odds Ratio Preference Optimization (ORPO), from this [paper](https://arxiv.org/pdf/2403.07691), is a reference-model-free alignment method. It uses a mixture of the SFT loss and a reinforcement learning loss calculated from an odds-ratio-based implicit reward, which makes training more efficient and stable. Read this [README](./examples/README.md) for more information.

|

||||||
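
This scalar sketch follows the paper's definitions and combines the two ORPO terms. `P(y|x)` is the length-normalized likelihood `exp(mean token log-prob)`, and `odds = P / (1 - P)`. The total loss is the SFT negative log-likelihood of the chosen response plus `lam * -log sigmoid(log(odds_w / odds_l))`. The function names and `lam=0.1` are illustrative assumptions, not Coati's API.

```python
import math


def log_sigmoid(z: float) -> float:
    """Numerically stable log(sigmoid(z))."""
    if z >= 0:
        return -math.log1p(math.exp(-z))
    return z - math.log1p(math.exp(z))


def log_odds(mean_logp: float) -> float:
    """log(P / (1 - P)) where P = exp(mean per-token log-probability)."""
    if mean_logp >= 0:
        raise ValueError("mean log-probability must be negative (P < 1)")
    return mean_logp - math.log1p(-math.exp(mean_logp))


def orpo_loss(chosen_mean_logp: float, rejected_mean_logp: float, lam: float = 0.1) -> float:
    """SFT loss on the chosen response plus lam times the odds-ratio loss."""
    sft_loss = -chosen_mean_logp  # per-token negative log-likelihood of y_w
    log_odds_ratio = log_odds(chosen_mean_logp) - log_odds(rejected_mean_logp)
    return sft_loss + lam * -log_sigmoid(log_odds_ratio)


print(round(orpo_loss(-0.5, -2.0), 4))
```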

|

|

||||||

## Alternative Option For RLHF: Kahneman-Tversky Optimization (KTO)

|

### Alternative Option For RLHF: Kahneman-Tversky Optimization (KTO)

|

||||||

We support the method introduced in the paper [KTO: Model Alignment as Prospect Theoretic Optimization](https://arxiv.org/pdf/2402.01306) (KTO), an alignment method that directly maximizes the "human utility" of generation results. Read this [README](./examples/README.md) for more information.

|

We support the method introduced in the paper [KTO: Model Alignment as Prospect Theoretic Optimization](https://arxiv.org/pdf/2402.01306) (KTO), an alignment method that directly maximizes the "human utility" of generation results. Read this [README](./examples/README.md) for more information.

|

||||||
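
KTO needs no chosen/rejected pairs. Each example is simply labeled desirable or undesirable. The per-example loss below follows the paper's formulation. The implied reward is `r = log pi(y|x) - log pi_ref(y|x)`. The reference point `z0` is a non-negative estimate of `KL(pi || pi_ref)`. The loss is `lambda_D * (1 - sigmoid(beta * (r - z0)))` for desirable outputs and `lambda_U * (1 - sigmoid(beta * (z0 - r)))` for undesirable ones. The KL estimate is taken as an input here, and the default values are placeholders.

```python
import math


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def kto_loss(
    policy_logp: float,
    ref_logp: float,
    desirable: bool,
    kl_estimate: float,
    beta: float = 0.1,
    lambda_d: float = 1.0,
    lambda_u: float = 1.0,
) -> float:
    """Per-example KTO loss, lambda_y - v(x, y)."""
    reward = policy_logp - ref_logp  # implied reward log(pi / pi_ref)
    z0 = max(kl_estimate, 0.0)       # reference point, clamped at zero
    if desirable:
        return lambda_d * (1.0 - sigmoid(beta * (reward - z0)))
    return lambda_u * (1.0 - sigmoid(beta * (z0 - reward)))


print(kto_loss(-5.0, -5.0, desirable=True, kl_estimate=0.0))  # 0.5
```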

|

|

||||||

|

### Alternative Option For RLHF: Group Relative Policy Optimization (GRPO)

|

||||||

|

We support GRPO, the main algorithm used to train the DeepSeek R1 model. It is a variant of Proximal Policy Optimization (PPO) that enhances mathematical reasoning abilities while also optimizing the memory usage of PPO. Read this [README](./examples/README.md) for more information.

|

||||||
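
Much of GRPO's memory saving comes from dropping PPO's learned critic. For each prompt, GRPO samples a group of responses and uses their normalized rewards as advantages: `A_i = (r_i - mean(r)) / std(r)`. The sketch below shows only that normalization step; clipping, the KL penalty and the policy update are omitted. The population standard deviation and the `eps` guard are our own choices, and implementations differ on these details.

```python
import statistics
from typing import List


def group_relative_advantages(rewards: List[float], eps: float = 1e-6) -> List[float]:
    """Normalize the rewards of one prompt's sampled group: (r - mean) / (std + eps)."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)  # population std; some implementations use the sample std
    return [(r - mean) / (std + eps) for r in rewards]


# Four sampled answers to one prompt, rewarded 1 if correct and 0 otherwise.
print(group_relative_advantages([1.0, 0.0, 1.0, 0.0]))  # approximately [1, -1, 1, -1]
```

If every answer in a group receives the same reward, all advantages are zero, so that prompt contributes no policy-gradient signal.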

|

|

||||||

|

### SFT for DeepSeek V3

|

||||||

|

We support fine-tuning the DeepSeek V3/R1 model with LoRA. Read this [README](./examples/README.md) for more information.

|

||||||

|

|

||||||

### Inference Quantization and Serving - After Training

|

### Inference Quantization and Serving - After Training

|

||||||

|

|

||||||

We provide an online inference server and a benchmark. We aim to run inference on a single GPU, so quantization is essential when using large models.

|

We provide an online inference server and a benchmark. We aim to run inference on a single GPU, so quantization is essential when using large models.

|

||||||

@@ -281,167 +150,7 @@ We support 8-bit quantization (RTN), 4-bit quantization (GPTQ), and FP16 inferen

|

|||||||

Online inference server scripts can help you deploy your own services.

|

Online inference server scripts can help you deploy your own services.

|

||||||

For more details, see [`inference/`](https://github.com/hpcaitech/ColossalAI/tree/main/applications/Chat/inference).

|

For more details, see [`inference/`](https://github.com/hpcaitech/ColossalAI/tree/main/applications/Chat/inference).

|

||||||
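
The hunk header above lists 8-bit round-to-nearest (RTN) quantization among the supported modes. The sketch below illustrates what RTN means. It uses symmetric, per-tensor quantization in plain Python and is not the code used by the inference scripts. Each weight is divided by a scale, rounded to the nearest integer, and clipped to the int8 range. The helper names are hypothetical.

```python
from typing import List, Tuple


def quantize_int8_rtn(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor round-to-nearest quantization to int8."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Python's round() breaks exact ties toward the nearest even integer.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Map int8 codes back to approximate float weights."""
    return [v * scale for v in q]


weights = [0.5, -1.0, 0.25, 0.0]
q, scale = quantize_int8_rtn(weights)
restored = dequantize(q, scale)
print(q, scale)
```

Each restored weight is within half a quantization step (`scale / 2`) of the original. GPTQ, also listed in the hunk header, instead chooses the rounding with the help of calibration data.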

|

|

||||||

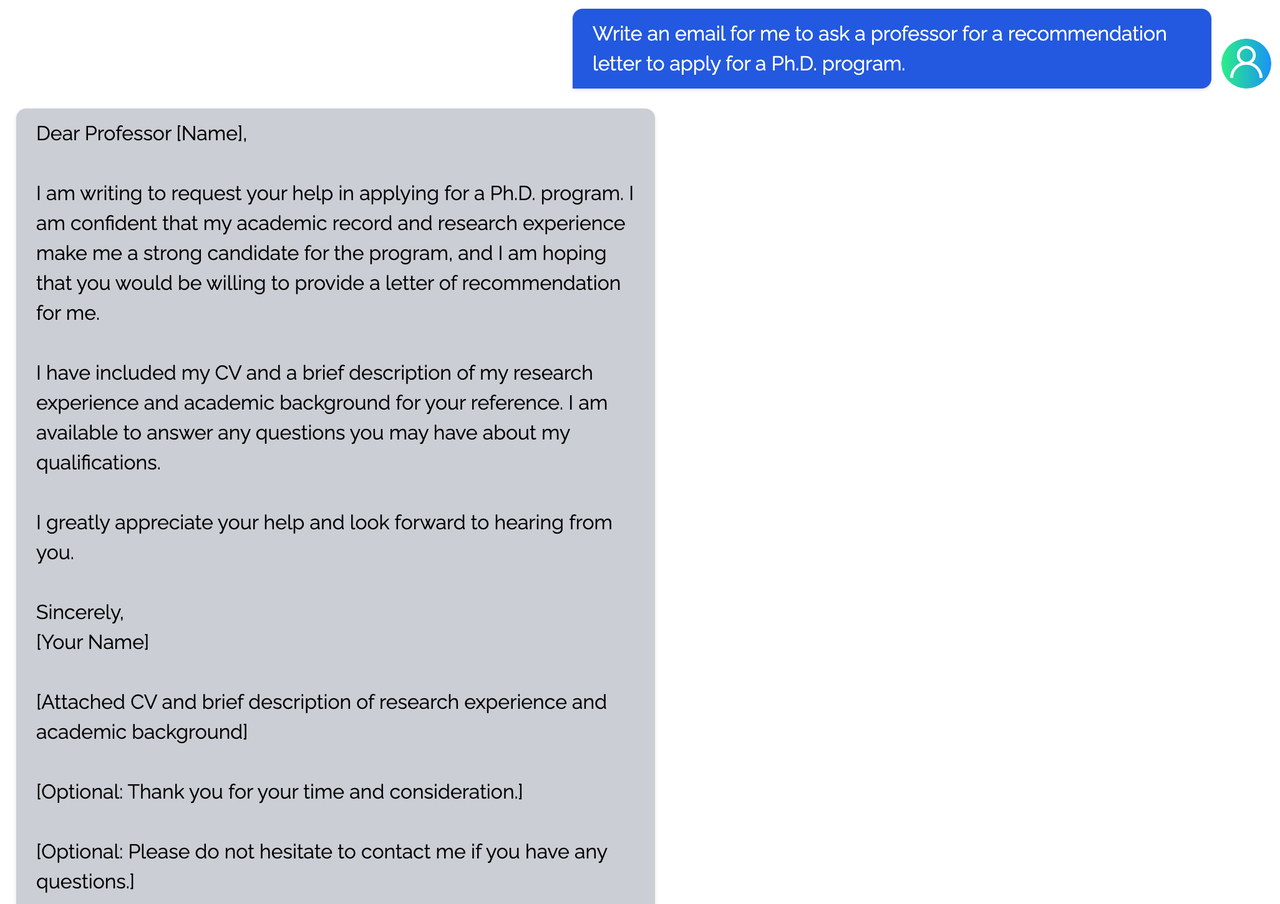

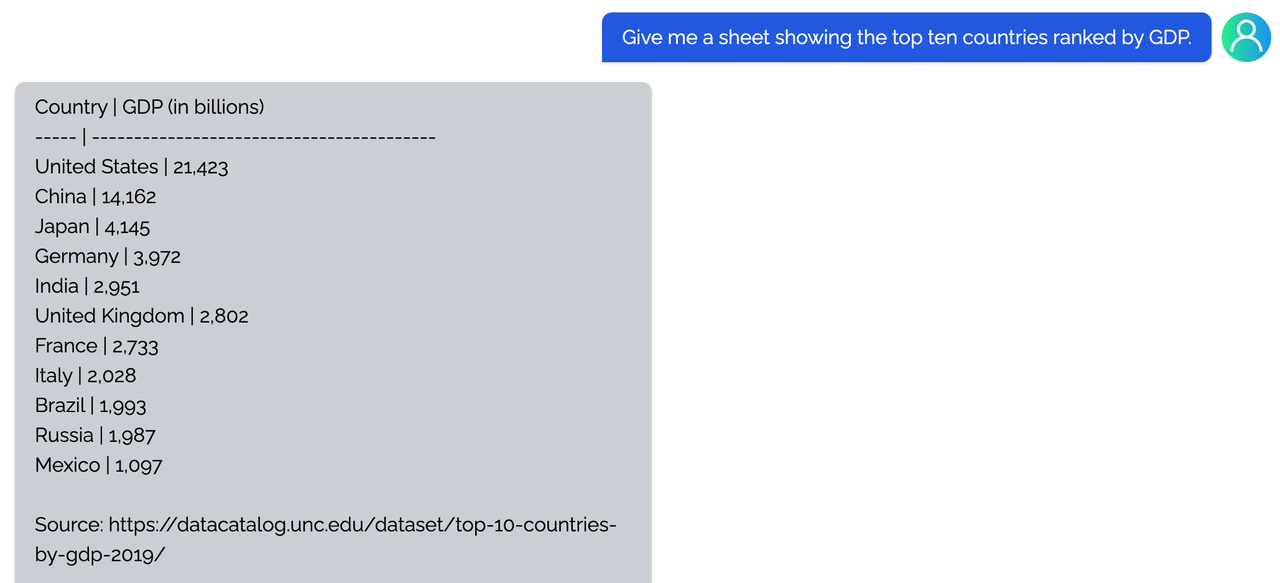

## Coati7B examples

|

|

||||||

|

|

||||||

### Generation

|

|

||||||

|

|

||||||

<details><summary><b>E-mail</b></summary>

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

</details>

|

|

||||||

|

|

||||||

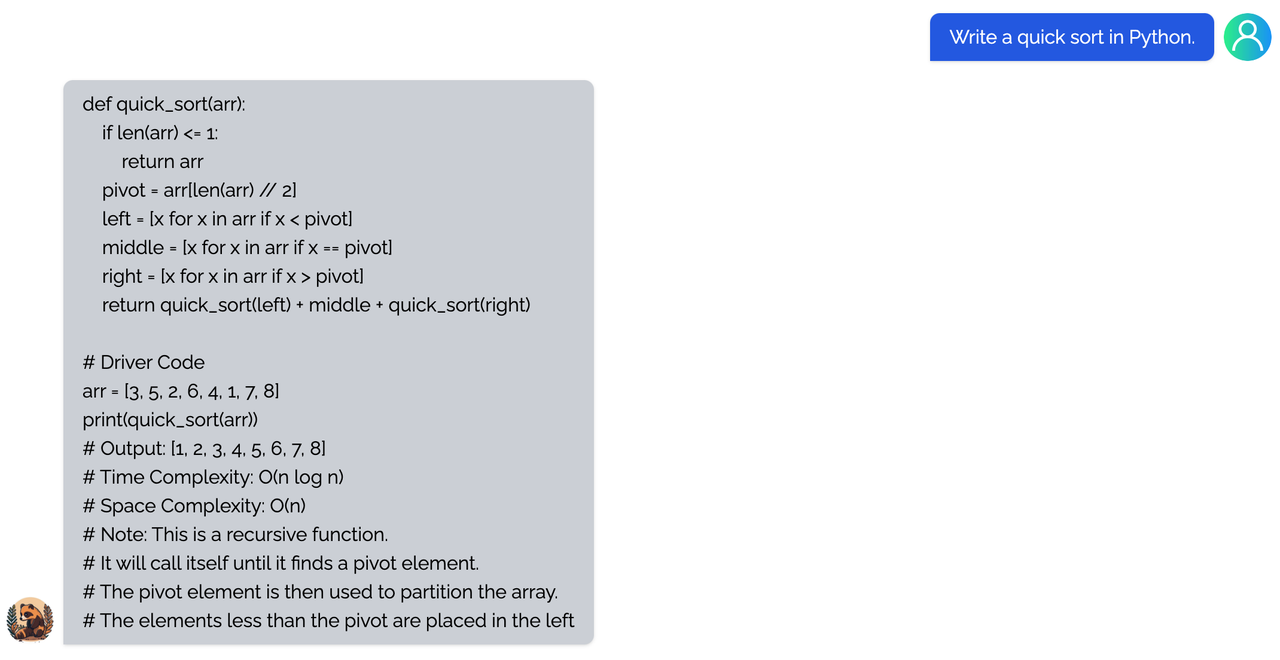

<details><summary><b>coding</b></summary>

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

</details>

|

|

||||||

|

|

||||||

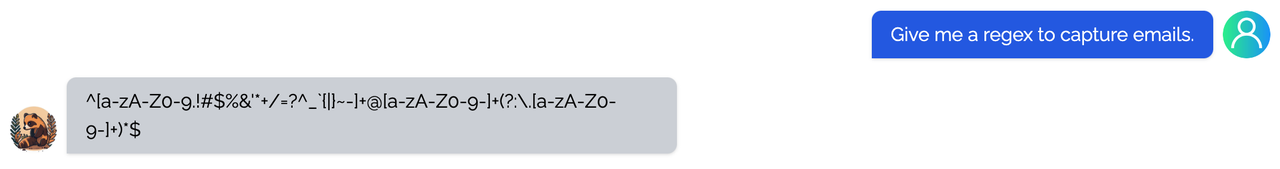

<details><summary><b>regex</b></summary>

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

</details>

|

|

||||||

|

|

||||||

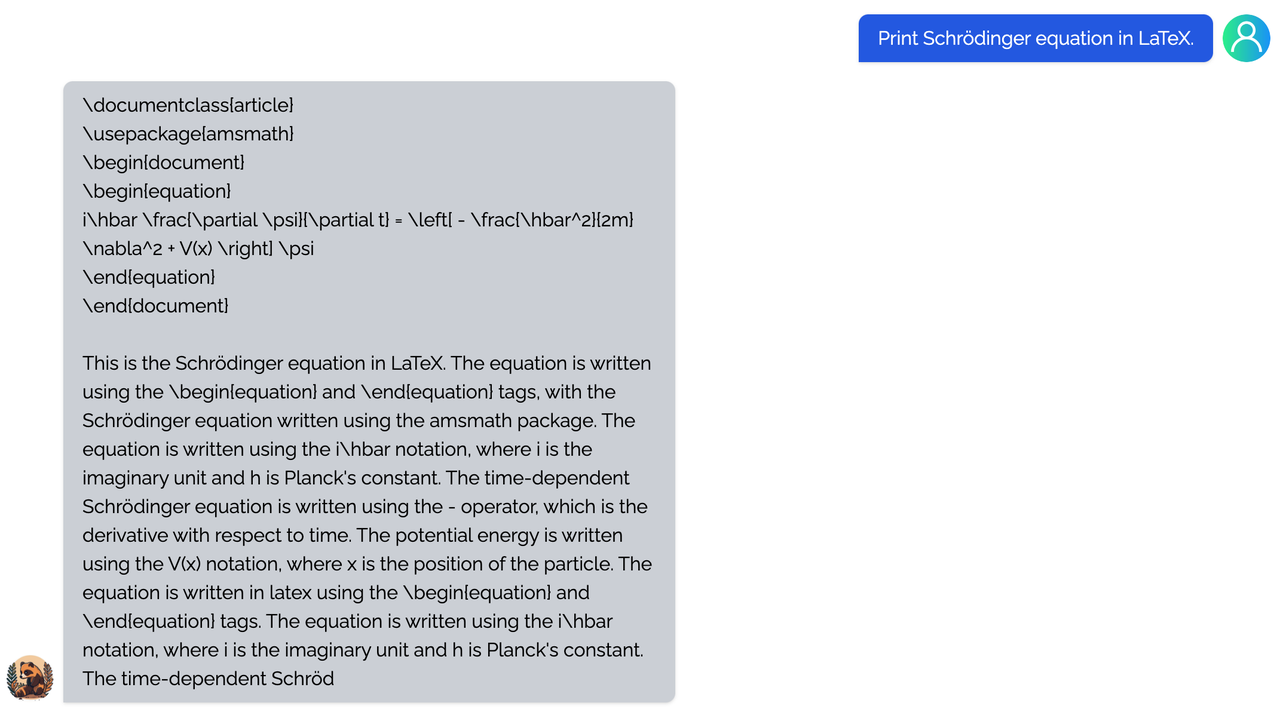

<details><summary><b>Tex</b></summary>

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

</details>

|

|

||||||

|

|

||||||

<details><summary><b>writing</b></summary>

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

</details>

|

|

||||||

|

|

||||||

<details><summary><b>Table</b></summary>

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

</details>

|

|

||||||

|

|

||||||

### Open QA

|

|

||||||

|

|

||||||

<details><summary><b>Game</b></summary>

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

</details>

|

|

||||||

|

|

||||||

<details><summary><b>Travel</b></summary>

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

</details>

|

|

||||||

|

|

||||||

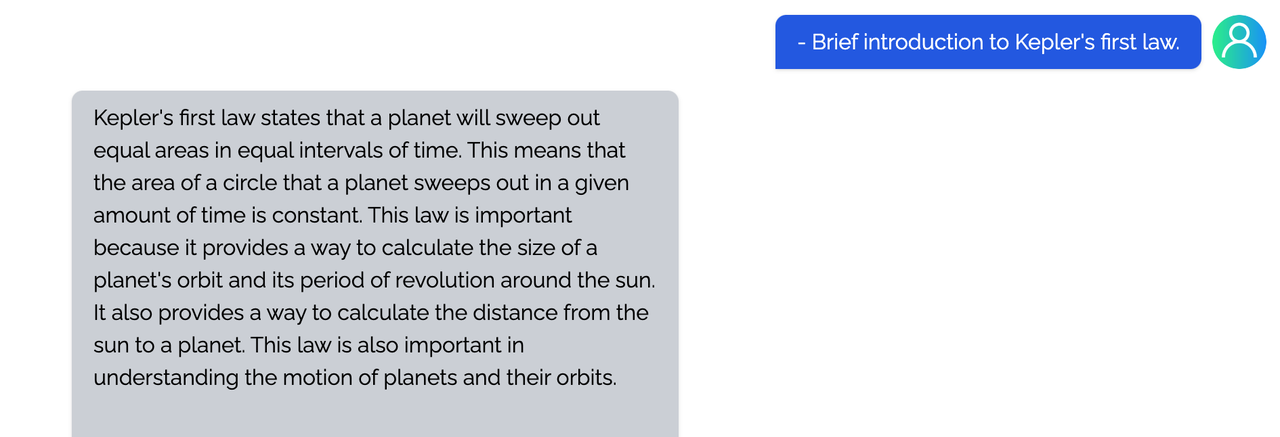

<details><summary><b>Physical</b></summary>

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

</details>

|

|

||||||

|

|

||||||

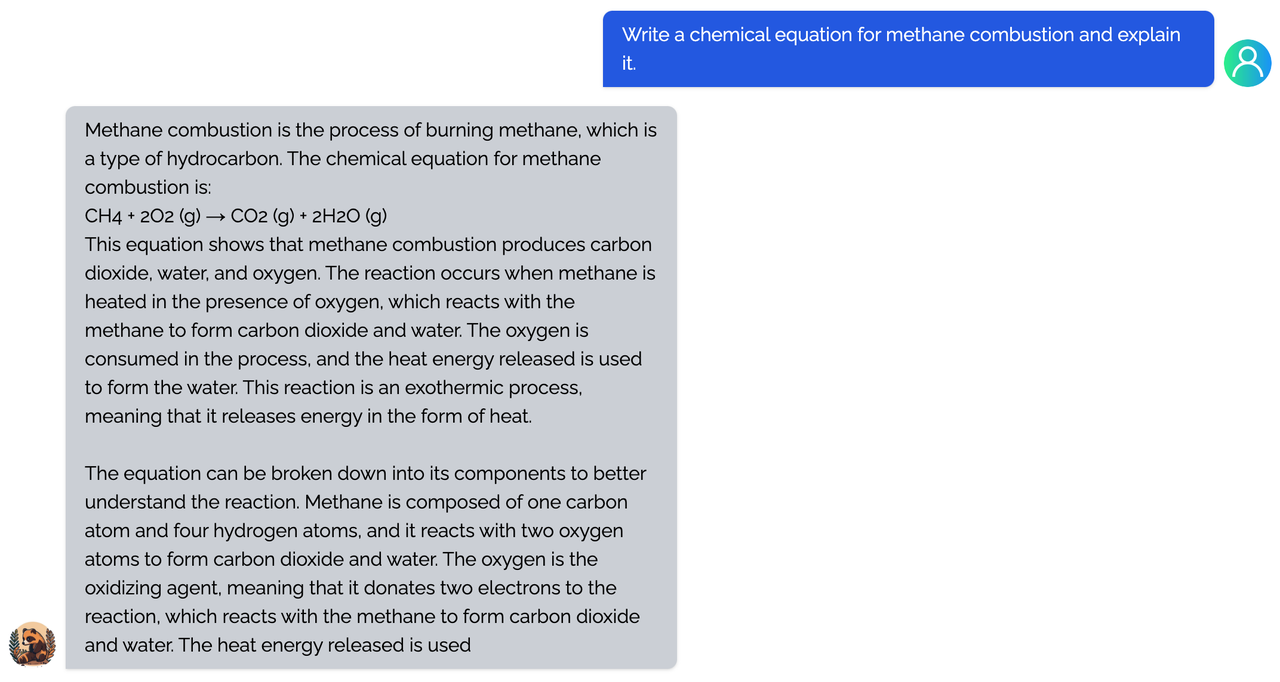

<details><summary><b>Chemical</b></summary>

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

</details>

|

|

||||||

|

|

||||||

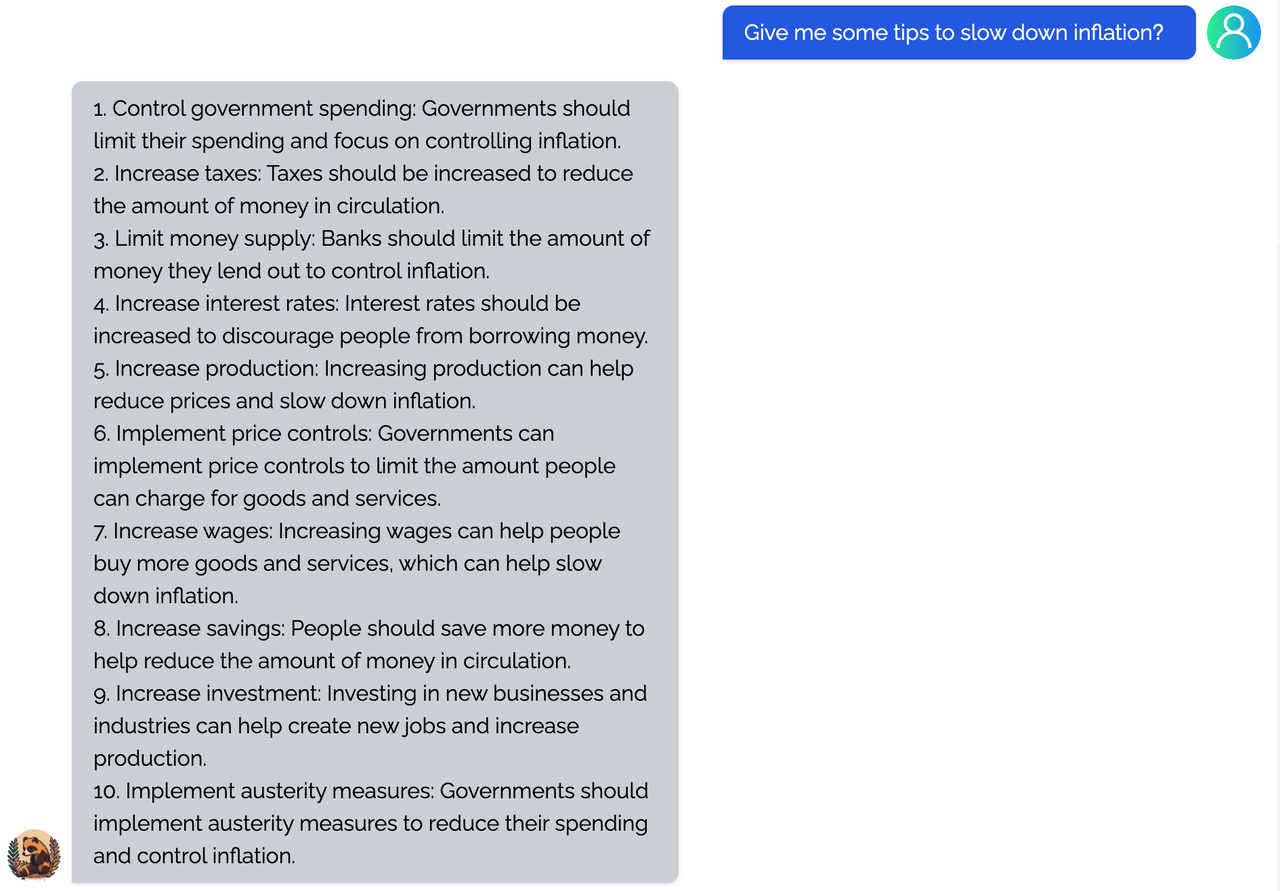

<details><summary><b>Economy</b></summary>

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

</details>

|

|

||||||

|

|

||||||