Mirror of https://github.com/jumpserver/jumpserver.git (synced 2025-12-16 00:52:41 +00:00)

Compare commits: 19 commits

| SHA1 |
|---|
| dd50a1faff |
| 86dab4fc6e |
| a85a80a945 |
| 349edc10aa |
| 44918e3cb5 |
| 9a2f6c0d70 |
| 934969a8f1 |
| 57162c1628 |
| 32fb36867f |
| 158b589028 |
| d64277353c |
| bff6f397ce |
| 0ad461a804 |
| a1dcef0ba0 |
| dbb1ee3a75 |
| d6bd207a17 |
| e69ba27ff4 |
| adbe7c07c6 |
| d1eacf53d4 |

`.github/ISSUE_TEMPLATE/bug---.md` (vendored, 8 changed lines)

```diff
@@ -7,7 +7,7 @@ assignees: wojiushixiaobai

 ---

-**JumpServer 版本( v2.28 之前的版本不再支持 )**
+**JumpServer 版本(v1.5.9以下不再支持)**


 **浏览器版本**
@@ -17,6 +17,6 @@ assignees: wojiushixiaobai


 **Bug 重现步骤(有截图更好)**
-1.
-2.
-3.
+1.
+2.
+3.
```

`.github/workflows/jms-build-test.yml` (vendored, 1 changed line)

```diff
@@ -24,7 +24,6 @@ jobs:
           build-args: |
             APT_MIRROR=http://deb.debian.org
             PIP_MIRROR=https://pypi.org/simple
-            PIP_JMS_MIRROR=https://pypi.org/simple
           cache-from: type=gha
           cache-to: type=gha,mode=max

```

`.github/workflows/sync-gitee.yml` (vendored, 2 changed lines)

```diff
@@ -20,4 +20,4 @@ jobs:
         SSH_PRIVATE_KEY: ${{ secrets.GITEE_SSH_PRIVATE_KEY }}
       with:
         source-repo: 'git@github.com:jumpserver/jumpserver.git'
-        destination-repo: 'git@gitee.com:fit2cloud-feizhiyun/JumpServer.git'
+        destination-repo: 'git@gitee.com:jumpserver/jumpserver.git'
```

16

README.md

16

README.md

@@ -10,17 +10,6 @@

|

||||

<a href="https://github.com/jumpserver/jumpserver"><img src="https://img.shields.io/github/stars/jumpserver/jumpserver?color=%231890FF&style=flat-square" alt="Stars"></a>

|

||||

</p>

|

||||

|

||||

|

||||

<p align="center">

|

||||

JumpServer <a href="https://github.com/jumpserver/jumpserver/releases/tag/v3.0.0">v3.0</a> 正式发布。

|

||||

<br>

|

||||

9 年时间,倾情投入,用心做好一款开源堡垒机。

|

||||

</p>

|

||||

|

||||

| :warning: 注意 :warning: |

|

||||

|:-------------------------------------------------------------------------------------------------------------------------:|

|

||||

| 3.0 架构上和 2.0 变化较大,建议全新安装一套环境来体验。如需升级,请务必升级前进行备份,并[查阅文档](https://kb.fit2cloud.com/?p=06638d69-f109-4333-b5bf-65b17b297ed9) |

|

||||

|

||||

--------------------------

|

||||

|

||||

JumpServer 是广受欢迎的开源堡垒机,是符合 4A 规范的专业运维安全审计系统。

|

||||

@@ -38,7 +27,7 @@ JumpServer 是广受欢迎的开源堡垒机,是符合 4A 规范的专业运

|

||||

|

||||

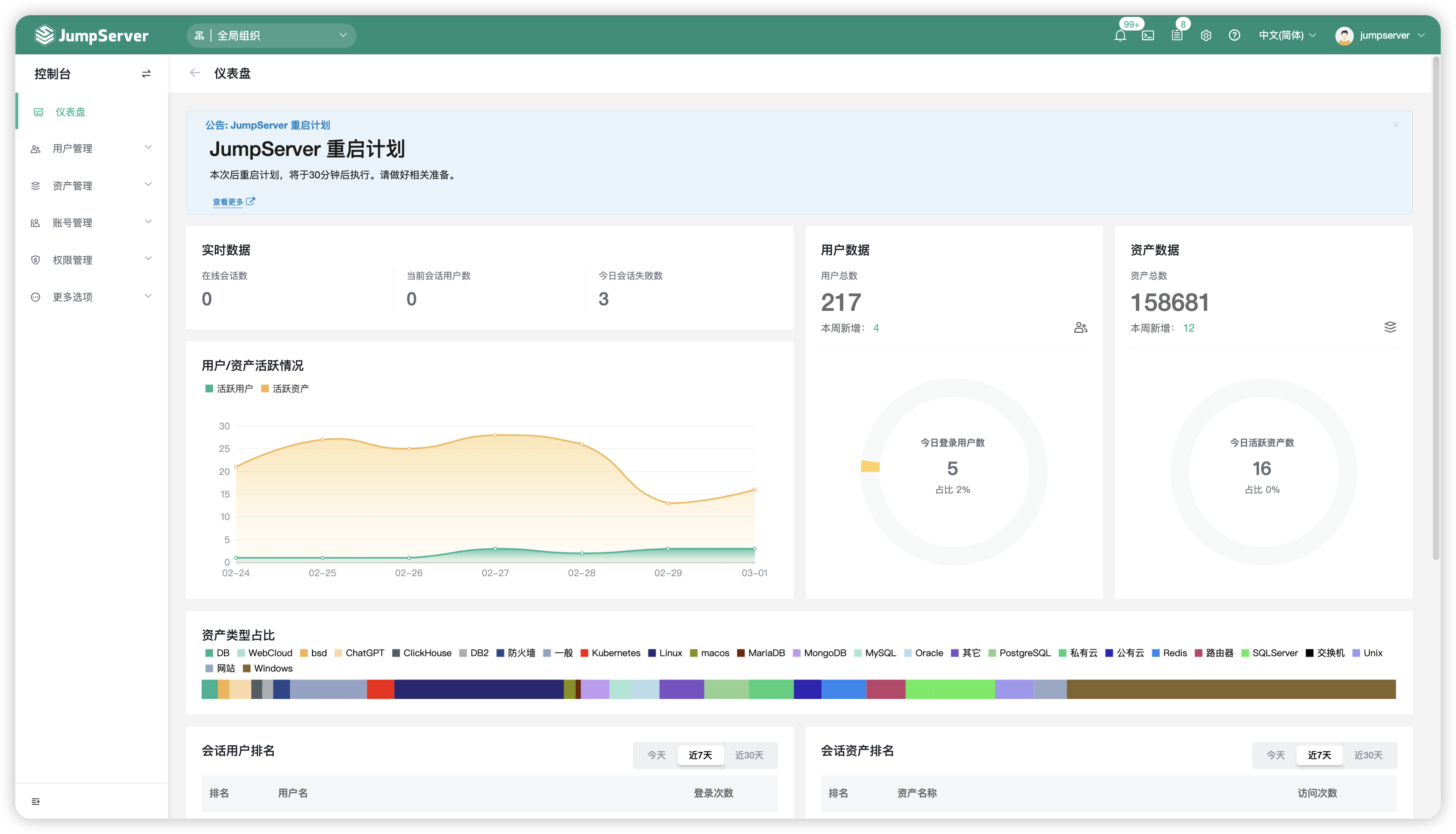

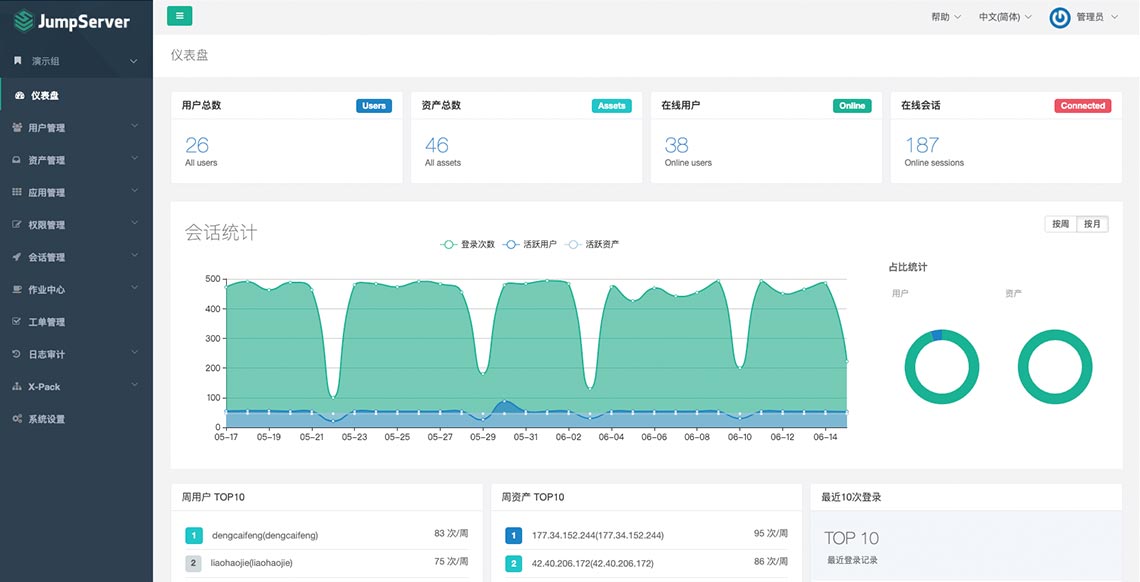

## UI 展示

|

||||

|

||||

|

||||

|

||||

|

||||

## 在线体验

|

||||

|

||||

@@ -52,7 +41,8 @@ JumpServer 是广受欢迎的开源堡垒机,是符合 4A 规范的专业运

|

||||

|

||||

## 快速开始

|

||||

|

||||

- [快速入门](https://docs.jumpserver.org/zh/v3/quick_start/)

|

||||

- [极速安装](https://docs.jumpserver.org/zh/master/install/setup_by_fast/)

|

||||

- [手动安装](https://github.com/jumpserver/installer)

|

||||

- [产品文档](https://docs.jumpserver.org)

|

||||

- [知识库](https://kb.fit2cloud.com/categories/jumpserver)

|

||||

|

||||

|

||||

```diff
@@ -43,7 +43,7 @@ class AccountViewSet(OrgBulkModelViewSet):
             asset = get_object_or_404(Asset, pk=asset_id)
             accounts = asset.accounts.all()
         else:
-            accounts = Account.objects.none()
+            accounts = []
         accounts = self.filter_queryset(accounts)
         serializer = serializers.AccountSerializer(accounts, many=True)
         return Response(data=serializer.data)
```

```diff
@@ -1,39 +1,15 @@
-from django_filters import rest_framework as drf_filters
-
-from assets.const import Protocol
-from accounts import serializers
-from accounts.models import AccountTemplate
-from orgs.mixins.api import OrgBulkModelViewSet
 from rbac.permissions import RBACPermission
 from common.permissions import UserConfirmation, ConfirmType

 from common.views.mixins import RecordViewLogMixin
-from common.drf.filters import BaseFilterSet
-
-
-class AccountTemplateFilterSet(BaseFilterSet):
-    protocols = drf_filters.CharFilter(method='filter_protocols')
-
-    class Meta:
-        model = AccountTemplate
-        fields = ('username', 'name')
-
-    @staticmethod
-    def filter_protocols(queryset, name, value):
-        secret_types = set()
-        protocols = value.split(',')
-        protocol_secret_type_map = Protocol.settings()
-        for p in protocols:
-            if p not in protocol_secret_type_map:
-                continue
-            _st = protocol_secret_type_map[p].get('secret_types', [])
-            secret_types.update(_st)
-        queryset = queryset.filter(secret_type__in=secret_types)
-        return queryset
+from orgs.mixins.api import OrgBulkModelViewSet
+from accounts import serializers
+from accounts.models import AccountTemplate
+

 class AccountTemplateViewSet(OrgBulkModelViewSet):
     model = AccountTemplate
-    filterset_class = AccountTemplateFilterSet
+    filterset_fields = ("username", 'name')
     search_fields = ('username', 'name')
     serializer_classes = {
         'default': serializers.AccountTemplateSerializer
```
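
`AccountTemplateFilterSet.filter_protocols` takes a comma-separated list of protocols, collects the union of the secret types each protocol allows, and keeps only templates whose `secret_type` falls in that union. The sketch below restates the same logic on plain lists. `PROTOCOL_SETTINGS` is a hypothetical stand-in for `Protocol.settings()`; the real keys and secret types may differ.

```python
# Hypothetical stand-in for Protocol.settings(): protocol -> settings dict.
PROTOCOL_SETTINGS = {
    'ssh': {'secret_types': ['password', 'ssh_key']},
    'rdp': {'secret_types': ['password']},
    'mysql': {'secret_types': ['password']},
}


def secret_types_for_protocols(value, settings=PROTOCOL_SETTINGS):
    """Union the secret types allowed by each comma-separated protocol."""
    secret_types = set()
    for p in value.split(','):
        if p not in settings:
            continue  # unknown protocols are skipped, as in filter_protocols
        secret_types.update(settings[p].get('secret_types', []))
    return secret_types


def filter_templates(templates, value):
    """Keep templates whose secret_type is allowed for the given protocols."""
    allowed = secret_types_for_protocols(value)
    return [t for t in templates if t['secret_type'] in allowed]


if __name__ == '__main__':
    templates = [
        {'name': 'root-pw', 'secret_type': 'password'},
        {'name': 'root-key', 'secret_type': 'ssh_key'},
        {'name': 'api', 'secret_type': 'token'},
    ]
    print([t['name'] for t in filter_templates(templates, 'rdp,unknown')])
```

If no known protocol is given, the union is empty, so nothing matches. This is the list-based equivalent of `secret_type__in=set()`.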

```diff
@@ -9,12 +9,12 @@
         name: "{{ account.username }}"
         password: "{{ account.secret | password_hash('des') }}"
         update_password: always
-      when: account.secret_type == "password"
+      when: secret_type == "password"

     - name: create user If it already exists, no operation will be performed
       ansible.builtin.user:
         name: "{{ account.username }}"
-      when: account.secret_type == "ssh_key"
+      when: secret_type == "ssh_key"

     - name: remove jumpserver ssh key
       ansible.builtin.lineinfile:
@@ -22,7 +22,7 @@
         regexp: "{{ kwargs.regexp }}"
         state: absent
       when:
-        - account.secret_type == "ssh_key"
+        - secret_type == "ssh_key"
         - kwargs.strategy == "set_jms"

     - name: Change SSH key
@@ -30,7 +30,7 @@
         user: "{{ account.username }}"
         key: "{{ account.secret }}"
         exclusive: "{{ kwargs.exclusive }}"
-      when: account.secret_type == "ssh_key"
+      when: secret_type == "ssh_key"

     - name: Refresh connection
       ansible.builtin.meta: reset_connection
@@ -42,7 +42,7 @@
         ansible_user: "{{ account.username }}"
         ansible_password: "{{ account.secret }}"
         ansible_become: no
-      when: account.secret_type == "password"
+      when: secret_type == "password"

     - name: Verify SSH key
       ansible.builtin.ping:
@@ -51,4 +51,4 @@
         ansible_user: "{{ account.username }}"
         ansible_ssh_private_key_file: "{{ account.private_key_path }}"
         ansible_become: no
-      when: account.secret_type == "ssh_key"
+      when: secret_type == "ssh_key"
```

```diff
@@ -9,12 +9,12 @@
         name: "{{ account.username }}"
         password: "{{ account.secret | password_hash('sha512') }}"
         update_password: always
-      when: account.secret_type == "password"
+      when: secret_type == "password"

     - name: create user If it already exists, no operation will be performed
       ansible.builtin.user:
         name: "{{ account.username }}"
-      when: account.secret_type == "ssh_key"
+      when: secret_type == "ssh_key"

     - name: remove jumpserver ssh key
       ansible.builtin.lineinfile:
@@ -22,7 +22,7 @@
         regexp: "{{ kwargs.regexp }}"
         state: absent
       when:
-        - account.secret_type == "ssh_key"
+        - secret_type == "ssh_key"
         - kwargs.strategy == "set_jms"

     - name: Change SSH key
@@ -30,7 +30,7 @@
         user: "{{ account.username }}"
         key: "{{ account.secret }}"
         exclusive: "{{ kwargs.exclusive }}"
-      when: account.secret_type == "ssh_key"
+      when: secret_type == "ssh_key"

     - name: Refresh connection
       ansible.builtin.meta: reset_connection
@@ -42,7 +42,7 @@
         ansible_user: "{{ account.username }}"
         ansible_password: "{{ account.secret }}"
         ansible_become: no
-      when: account.secret_type == "password"
+      when: secret_type == "password"

     - name: Verify SSH key
       ansible.builtin.ping:
@@ -51,4 +51,4 @@
         ansible_user: "{{ account.username }}"
         ansible_ssh_private_key_file: "{{ account.private_key_path }}"
         ansible_become: no
-      when: account.secret_type == "ssh_key"
+      when: secret_type == "ssh_key"
```

```diff
@@ -12,7 +12,7 @@ from accounts.models import ChangeSecretRecord
 from accounts.notifications import ChangeSecretExecutionTaskMsg
 from accounts.serializers import ChangeSecretRecordBackUpSerializer
 from assets.const import HostTypes
-from common.utils import get_logger
+from common.utils import get_logger, lazyproperty
 from common.utils.file import encrypt_and_compress_zip_file
 from common.utils.timezone import local_now_display
 from users.models import User
@@ -28,23 +28,23 @@ class ChangeSecretManager(AccountBasePlaybookManager):
     def __init__(self, *args, **kwargs):
         super().__init__(*args, **kwargs)
         self.method_hosts_mapper = defaultdict(list)
-        self.secret_type = self.execution.snapshot.get('secret_type')
+        self.secret_type = self.execution.snapshot['secret_type']
         self.secret_strategy = self.execution.snapshot.get(
             'secret_strategy', SecretStrategy.custom
         )
         self.ssh_key_change_strategy = self.execution.snapshot.get(
             'ssh_key_change_strategy', SSHKeyStrategy.add
         )
-        self.account_ids = self.execution.snapshot['accounts']
+        self.snapshot_account_usernames = self.execution.snapshot['accounts']
         self.name_recorder_mapper = {} # 做个映射,方便后面处理

     @classmethod
     def method_type(cls):
         return AutomationTypes.change_secret

-    def get_kwargs(self, account, secret, secret_type):
+    def get_kwargs(self, account, secret):
         kwargs = {}
-        if secret_type != SecretType.SSH_KEY:
+        if self.secret_type != SecretType.SSH_KEY:
             return kwargs
         kwargs['strategy'] = self.ssh_key_change_strategy
         kwargs['exclusive'] = 'yes' if kwargs['strategy'] == SSHKeyStrategy.set else 'no'
```

```diff
@@ -54,29 +54,18 @@ class ChangeSecretManager(AccountBasePlaybookManager):
             kwargs['regexp'] = '.*{}$'.format(secret.split()[2].strip())
         return kwargs

-    def secret_generator(self, secret_type):
+    @lazyproperty
+    def secret_generator(self):
         return SecretGenerator(
-            self.secret_strategy, secret_type,
+            self.secret_strategy, self.secret_type,
             self.execution.snapshot.get('password_rules')
         )

-    def get_secret(self, secret_type):
+    def get_secret(self):
         if self.secret_strategy == SecretStrategy.custom:
             return self.execution.snapshot['secret']
         else:
-            return self.secret_generator(secret_type).get_secret()
-
-    def get_accounts(self, privilege_account):
-        if not privilege_account:
-            print(f'not privilege account')
-            return []
-
-        asset = privilege_account.asset
-        accounts = asset.accounts.exclude(username=privilege_account.username)
-        accounts = accounts.filter(id__in=self.account_ids)
-        if self.secret_type:
-            accounts = accounts.filter(secret_type=self.secret_type)
-        return accounts
+            return self.secret_generator.get_secret()

     def host_callback(
             self, host, asset=None, account=None,
```
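
In `get_kwargs`, the `regexp` passed to the "remove jumpserver ssh key" playbook task is built from the third whitespace-separated field of the public key, which is its comment. The standalone sketch below restates the kwargs computation. Several details are assumptions:

- The plain-string values `'ssh_key'`, `'add'`, `'set'` and `'set_jms'` are illustrative; the diff refers to `SecretType.SSH_KEY` and `SSHKeyStrategy.set` by name only.
- Setting `regexp` only for `set_jms` is inferred from the playbook's `when: kwargs.strategy == "set_jms"`, because the lines between the two hunks are not shown.

```python
import re


def ssh_key_kwargs(secret_type, strategy, public_key):
    """Sketch of the playbook kwargs for an SSH-key change (not JumpServer code).

    Assumed values: secret_type 'ssh_key'; strategy 'add', 'set' or 'set_jms'.
    """
    kwargs = {}
    if secret_type != 'ssh_key':
        return kwargs  # password changes need no extra kwargs
    kwargs['strategy'] = strategy
    # authorized_key's `exclusive` removes all other keys, so only 'set' enables it.
    kwargs['exclusive'] = 'yes' if strategy == 'set' else 'no'
    if strategy == 'set_jms':
        # Match any authorized_keys line ending with this key's comment field
        # (third token of '<type> <base64> <comment>').
        kwargs['regexp'] = '.*{}$'.format(public_key.split()[2].strip())
    return kwargs


if __name__ == '__main__':
    key = 'ssh-ed25519 AAAAC3NzaC1lZDI1NTE5AAAAIExample jumpserver@host'
    kw = ssh_key_kwargs('ssh_key', 'set_jms', key)
    old_line = 'ssh-ed25519 AAAAOldKeyMaterial jumpserver@host'
    print(kw['exclusive'], bool(re.match(kw['regexp'], old_line)))
```

The comment is inserted into the pattern without `re.escape`, so characters such as `.` in the comment match any character.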

```diff
@@ -89,10 +78,17 @@ class ChangeSecretManager(AccountBasePlaybookManager):
         if host.get('error'):
             return host

-        accounts = self.get_accounts(account)
+        accounts = asset.accounts.all()
+        if account:
+            accounts = accounts.exclude(username=account.username)
+
+        if '*' not in self.snapshot_account_usernames:
+            accounts = accounts.filter(username__in=self.snapshot_account_usernames)
+
+        accounts = accounts.filter(secret_type=self.secret_type)
         if not accounts:
-            print('没有发现待改密账号: %s 用户ID: %s 类型: %s' % (
-                asset.name, self.account_ids, self.secret_type
+            print('没有发现待改密账号: %s 用户名: %s 类型: %s' % (
+                asset.name, self.snapshot_account_usernames, self.secret_type
             ))
             return []

```
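
The username-based version of `host_callback` in this hunk selects accounts in three steps:

1. Exclude the privileged account used to connect.
2. Apply the snapshot's usernames unless they contain the `'*'` wildcard.
3. Keep only the execution's single `secret_type`.

The sketch below restates this on plain lists. The `Account` dataclass is a hypothetical stand-in for the Django model, and the sketch does not query any database.

```python
from dataclasses import dataclass


@dataclass
class Account:
    """Hypothetical stand-in for the Account model (only the fields used)."""
    username: str
    secret_type: str


def select_accounts(accounts, privileged_username, snapshot_usernames, secret_type):
    """List-based sketch of the username-driven account selection."""
    selected = list(accounts)
    if privileged_username:
        # Skip the privileged account used to connect to the asset.
        selected = [a for a in selected if a.username != privileged_username]
    if '*' not in snapshot_usernames:
        wanted = set(snapshot_usernames)
        selected = [a for a in selected if a.username in wanted]
    return [a for a in selected if a.secret_type == secret_type]


if __name__ == '__main__':
    accs = [Account('root', 'password'), Account('web', 'password'),
            Account('web', 'ssh_key'), Account('db', 'password')]
    print([a.username for a in select_accounts(accs, 'root', ['*'], 'password')])
```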

```diff
@@ -101,16 +97,16 @@ class ChangeSecretManager(AccountBasePlaybookManager):
         method_hosts = [h for h in method_hosts if h != host['name']]
         inventory_hosts = []
         records = []
+        host['secret_type'] = self.secret_type

         if asset.type == HostTypes.WINDOWS and self.secret_type == SecretType.SSH_KEY:
-            print(f'Windows {asset} does not support ssh key push')
+            print(f'Windows {asset} does not support ssh key push \n')
             return inventory_hosts

         for account in accounts:
             h = deepcopy(host)
-            secret_type = account.secret_type
             h['name'] += '(' + account.username + ')'
-            new_secret = self.get_secret(secret_type)
+            new_secret = self.get_secret()

             recorder = ChangeSecretRecord(
                 asset=asset, account=account, execution=self.execution,
@@ -120,15 +116,15 @@ class ChangeSecretManager(AccountBasePlaybookManager):
             self.name_recorder_mapper[h['name']] = recorder

             private_key_path = None
-            if secret_type == SecretType.SSH_KEY:
+            if self.secret_type == SecretType.SSH_KEY:
                 private_key_path = self.generate_private_key_path(new_secret, path_dir)
                 new_secret = self.generate_public_key(new_secret)

-            h['kwargs'] = self.get_kwargs(account, new_secret, secret_type)
+            h['kwargs'] = self.get_kwargs(account, new_secret)
             h['account'] = {
                 'name': account.name,
                 'username': account.username,
-                'secret_type': secret_type,
+                'secret_type': account.secret_type,
                 'secret': new_secret,
                 'private_key_path': private_key_path
             }
```

```diff
@@ -60,6 +60,4 @@ class GatherAccountsFilter:
         if not run_method_name:
             return info

-        if hasattr(self, f'{run_method_name}_filter'):
-            return getattr(self, f'{run_method_name}_filter')(info)
-        return info
+        return getattr(self, f'{run_method_name}_filter')(info)
```
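
`GatherAccountsFilter.run` looks up a method named `<run_method_name>_filter` at run time. The variant guarded by `hasattr` returns `info` unchanged when no such method exists. The single-line variant raises `AttributeError` instead. The sketch below shows the fallback form using `getattr` with a default, which is equivalent to the `hasattr` check. The class name and the `posix_filter` method are hypothetical.

```python
class ResultFilter:
    """Hypothetical example of name-based filter dispatch with a fallback."""

    def posix_filter(self, info):
        # Example filter: drop keys that start with an underscore.
        return {k: v for k, v in info.items() if not k.startswith('_')}

    def run(self, method_name, info):
        if not method_name:
            return info
        handler = getattr(self, f'{method_name}_filter', None)
        if handler is None:
            return info  # no dedicated filter: pass the result through unchanged
        return handler(info)


if __name__ == '__main__':
    f = ResultFilter()
    print(f.run('posix', {'root': 1, '_meta': 2}))
    print(f.run('windows', {'Administrator': 1}))
```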

```diff
@@ -22,8 +22,8 @@ class GatherAccountsManager(AccountBasePlaybookManager):
         self.host_asset_mapper[host['name']] = asset
         return host

-    def filter_success_result(self, tp, result):
-        result = GatherAccountsFilter(tp).run(self.method_id_meta_mapper, result)
+    def filter_success_result(self, host, result):
+        result = GatherAccountsFilter(host).run(self.method_id_meta_mapper, result)
         return result

     @staticmethod
```

```diff
@@ -1,6 +1,9 @@
 from copy import deepcopy

+from django.db.models import QuerySet
+
 from accounts.const import AutomationTypes, SecretType
+from accounts.models import Account
 from assets.const import HostTypes
 from common.utils import get_logger
 from ..base.manager import AccountBasePlaybookManager
@@ -16,6 +19,36 @@ class PushAccountManager(ChangeSecretManager, AccountBasePlaybookManager):
     def method_type(cls):
         return AutomationTypes.push_account

+    def create_nonlocal_accounts(self, accounts, snapshot_account_usernames, asset):
+        secret_type = self.secret_type
+        usernames = accounts.filter(secret_type=secret_type).values_list(
+            'username', flat=True
+        )
+        create_usernames = set(snapshot_account_usernames) - set(usernames)
+        create_account_objs = [
+            Account(
+                name=f'{username}-{secret_type}', username=username,
+                secret_type=secret_type, asset=asset,
+            )
+            for username in create_usernames
+        ]
+        Account.objects.bulk_create(create_account_objs)
+
+    def get_accounts(self, privilege_account, accounts: QuerySet):
+        if not privilege_account:
+            print(f'not privilege account')
+            return []
+        snapshot_account_usernames = self.execution.snapshot['accounts']
+        if '*' in snapshot_account_usernames:
+            return accounts.exclude(username=privilege_account.username)
+
+        asset = privilege_account.asset
+        self.create_nonlocal_accounts(accounts, snapshot_account_usernames, asset)
+        accounts = asset.accounts.exclude(username=privilege_account.username).filter(
+            username__in=snapshot_account_usernames, secret_type=self.secret_type
+        )
+        return accounts
+
     def host_callback(self, host, asset=None, account=None, automation=None, path_dir=None, **kwargs):
         host = super(ChangeSecretManager, self).host_callback(
             host, asset=asset, account=account, automation=automation,
```
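
`create_nonlocal_accounts` in `PushAccountManager` works as follows:

1. It finds every requested username that the asset does not yet have with the execution's `secret_type`.
2. It creates one `Account` per such username, named `<username>-<secret_type>`.

The set arithmetic can be sketched without Django. Representing `existing` as `(username, secret_type)` pairs and the rows as dicts is a hypothetical stand-in for the queryset and the model instances passed to `bulk_create`.

```python
def usernames_to_create(existing, requested, secret_type):
    """Requested usernames that have no account of `secret_type` yet.

    `existing` is an iterable of (username, secret_type) pairs.
    """
    have = {u for u, st in existing if st == secret_type}
    return set(requested) - have


def build_account_rows(usernames, secret_type, asset_id):
    """Mimic the Account(...) objects handed to bulk_create, as plain dicts."""
    return [
        {'name': f'{u}-{secret_type}', 'username': u,
         'secret_type': secret_type, 'asset_id': asset_id}
        for u in sorted(usernames)  # sorted only to make the output deterministic
    ]


if __name__ == '__main__':
    existing = [('root', 'password'), ('web', 'ssh_key')]
    todo = usernames_to_create(existing, ['root', 'web', 'app'], 'password')
    print(build_account_rows(todo, 'password', 'asset-1'))
```

An existing `web` account with an `ssh_key` secret does not count when pushing a password. This matches the `secret_type` filter applied before `values_list` in the original.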

```diff
@@ -24,36 +57,34 @@ class PushAccountManager(ChangeSecretManager, AccountBasePlaybookManager):
         if host.get('error'):
             return host

-        accounts = self.get_accounts(account)
+        accounts = asset.accounts.all()
+        accounts = self.get_accounts(account, accounts)
         inventory_hosts = []
+        host['secret_type'] = self.secret_type
         if asset.type == HostTypes.WINDOWS and self.secret_type == SecretType.SSH_KEY:
-            msg = f'Windows {asset} does not support ssh key push'
+            msg = f'Windows {asset} does not support ssh key push \n'
             print(msg)
             return inventory_hosts

         for account in accounts:
             h = deepcopy(host)
-            secret_type = account.secret_type
             h['name'] += '(' + account.username + ')'
-            if self.secret_type is None:
-                new_secret = account.secret
-            else:
-                new_secret = self.get_secret(secret_type)
+            new_secret = self.get_secret()

             self.name_recorder_mapper[h['name']] = {
                 'account': account, 'new_secret': new_secret,
             }

             private_key_path = None
-            if secret_type == SecretType.SSH_KEY:
+            if self.secret_type == SecretType.SSH_KEY:
                 private_key_path = self.generate_private_key_path(new_secret, path_dir)
                 new_secret = self.generate_public_key(new_secret)

-            h['kwargs'] = self.get_kwargs(account, new_secret, secret_type)
+            h['kwargs'] = self.get_kwargs(account, new_secret)
             h['account'] = {
                 'name': account.name,
                 'username': account.username,
-                'secret_type': secret_type,
+                'secret_type': account.secret_type,
                 'secret': new_secret,
                 'private_key_path': private_key_path
             }
@@ -81,9 +112,9 @@ class PushAccountManager(ChangeSecretManager, AccountBasePlaybookManager):
             logger.error("Pust account error: ", e)

     def run(self, *args, **kwargs):
-        if self.secret_type and not self.check_secret():
+        if not self.check_secret():
             return
-        super(ChangeSecretManager, self).run(*args, **kwargs)
+        super().run(*args, **kwargs)

     # @classmethod
     # def trigger_by_asset_create(cls, asset):
```

```diff
@@ -25,15 +25,6 @@ class VerifyAccountManager(AccountBasePlaybookManager):
             f.write('ssh_args = -o ControlMaster=no -o ControlPersist=no\n')
         return path

-    @classmethod
-    def method_type(cls):
-        return AutomationTypes.verify_account
-
-    def get_accounts(self, privilege_account, accounts: QuerySet):
-        account_ids = self.execution.snapshot['accounts']
-        accounts = accounts.filter(id__in=account_ids)
-        return accounts
-
     def host_callback(self, host, asset=None, account=None, automation=None, path_dir=None, **kwargs):
         host = super().host_callback(
             host, asset=asset, account=account,
@@ -71,6 +62,16 @@ class VerifyAccountManager(AccountBasePlaybookManager):
             inventory_hosts.append(h)
         return inventory_hosts

+    @classmethod
+    def method_type(cls):
+        return AutomationTypes.verify_account
+
+    def get_accounts(self, privilege_account, accounts: QuerySet):
+        snapshot_account_usernames = self.execution.snapshot['accounts']
+        if '*' not in snapshot_account_usernames:
+            accounts = accounts.filter(username__in=snapshot_account_usernames)
+        return accounts
+
     def on_host_success(self, host, result):
         account = self.host_account_mapper.get(host)
         account.set_connectivity(Connectivity.OK)
```

```diff
@@ -1,6 +1,6 @@
-from common.utils import get_logger
 from accounts.const import AutomationTypes
 from assets.automations.ping_gateway.manager import PingGatewayManager
+from common.utils import get_logger

 logger = get_logger(__name__)

@@ -16,6 +16,6 @@ class VerifyGatewayAccountManager(PingGatewayManager):
         logger.info(">>> 开始执行测试网关账号可连接性任务")

     def get_accounts(self, gateway):
-        account_ids = self.execution.snapshot['accounts']
-        accounts = gateway.accounts.filter(id__in=account_ids)
+        usernames = self.execution.snapshot['accounts']
+        accounts = gateway.accounts.filter(username__in=usernames)
         return accounts
```

```diff
@@ -1,69 +0,0 @@
-# Generated by Django 3.2.16 on 2023-03-07 07:36
-
-from django.db import migrations
-from django.db.models import Q
-
-
-def get_nodes_all_assets(apps, *nodes):
-    node_model = apps.get_model('assets', 'Node')
-    asset_model = apps.get_model('assets', 'Asset')
-    node_ids = set()
-    descendant_node_query = Q()
-    for n in nodes:
-        node_ids.add(n.id)
-        descendant_node_query |= Q(key__istartswith=f'{n.key}:')
-    if descendant_node_query:
-        _ids = node_model.objects.order_by().filter(descendant_node_query).values_list('id', flat=True)
-        node_ids.update(_ids)
-    return asset_model.objects.order_by().filter(nodes__id__in=node_ids).distinct()
-
-
-def get_all_assets(apps, snapshot):
-    node_model = apps.get_model('assets', 'Node')
-    asset_model = apps.get_model('assets', 'Asset')
-    asset_ids = snapshot.get('assets', [])
-    node_ids = snapshot.get('nodes', [])
-
-    nodes = node_model.objects.filter(id__in=node_ids)
-    node_asset_ids = get_nodes_all_assets(apps, *nodes).values_list('id', flat=True)
-    asset_ids = set(list(asset_ids) + list(node_asset_ids))
-    return asset_model.objects.filter(id__in=asset_ids)
-
-
-def migrate_account_usernames_to_ids(apps, schema_editor):
-    db_alias = schema_editor.connection.alias
-    execution_model = apps.get_model('accounts', 'AutomationExecution')
-    account_model = apps.get_model('accounts', 'Account')
-    executions = execution_model.objects.using(db_alias).all()
-    executions_update = []
-    for execution in executions:
-        snapshot = execution.snapshot
-        accounts = account_model.objects.none()
-        account_usernames = snapshot.get('accounts', [])
-        for asset in get_all_assets(apps, snapshot):
-            accounts = accounts | asset.accounts.all()
-        secret_type = snapshot.get('secret_type')
-        if secret_type:
-            ids = accounts.filter(
-                username__in=account_usernames,
-                secret_type=secret_type
-            ).values_list('id', flat=True)
-        else:
-            ids = accounts.filter(
-                username__in=account_usernames
-            ).values_list('id', flat=True)
-        snapshot['accounts'] = [str(_id) for _id in ids]
-        execution.snapshot = snapshot
-        executions_update.append(execution)
-
-    execution_model.objects.bulk_update(executions_update, ['snapshot'])
-
-
-class Migration(migrations.Migration):
-    dependencies = [
-        ('accounts', '0008_alter_gatheredaccount_options'),
-    ]
-
-    operations = [
-        migrations.RunPython(migrate_account_usernames_to_ids),
-    ]
```
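
The core of `migrate_account_usernames_to_ids` rewrites each execution snapshot's `accounts` entry from usernames to account ID strings. When the snapshot carries a `secret_type`, the rewrite also filters on it. The sketch below restates that conversion on plain data. It assumes the accounts of all the snapshot's assets are already collected into a list of dicts, so the node expansion done by `get_all_assets` is not reproduced.

```python
def usernames_to_ids(accounts, usernames, secret_type=None):
    """Translate snapshot usernames into account ID strings.

    `accounts` is a list of dicts with 'id', 'username' and 'secret_type' keys.
    A falsy `secret_type` matches every secret type (the migration's else branch).
    """
    wanted = set(usernames)
    return [
        str(a['id'])
        for a in accounts
        if a['username'] in wanted
        and (not secret_type or a['secret_type'] == secret_type)
    ]


if __name__ == '__main__':
    accounts = [
        {'id': 1, 'username': 'root', 'secret_type': 'password'},
        {'id': 2, 'username': 'root', 'secret_type': 'ssh_key'},
        {'id': 3, 'username': 'web', 'secret_type': 'password'},
    ]
    print(usernames_to_ids(accounts, ['root', 'web'], 'password'))
```

As in the migration, a `'*'` entry gets no special treatment here, so it matches no account.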

```diff
@@ -1,12 +1,11 @@
 from django.db import models
 from django.utils.translation import ugettext_lazy as _

-from common.db import fields
-from common.db.models import JMSBaseModel
 from accounts.const import (
     AutomationTypes, SecretType, SecretStrategy, SSHKeyStrategy
 )
-from accounts.models import Account
+from common.db import fields
+from common.db.models import JMSBaseModel
 from .base import AccountBaseAutomation

 __all__ = ['ChangeSecretAutomation', 'ChangeSecretRecord', 'ChangeSecretMixin']
@@ -28,35 +27,18 @@ class ChangeSecretMixin(models.Model):
         default=SSHKeyStrategy.add, verbose_name=_('SSH key change strategy')
     )

-    accounts: list[str] # account usernames
-    get_all_assets: callable # get all assets

     class Meta:
         abstract = True

-    def create_nonlocal_accounts(self, usernames, asset):
-        pass
-
-    def get_account_ids(self):
-        usernames = self.accounts
-        accounts = Account.objects.none()
-        for asset in self.get_all_assets():
-            self.create_nonlocal_accounts(usernames, asset)
-            accounts = accounts | asset.accounts.all()
-        account_ids = accounts.filter(
-            username__in=usernames, secret_type=self.secret_type
-        ).values_list('id', flat=True)
-        return [str(_id) for _id in account_ids]
-
     def to_attr_json(self):
         attr_json = super().to_attr_json()
         attr_json.update({
             'secret': self.secret,
             'secret_type': self.secret_type,
-            'accounts': self.get_account_ids(),
-            'password_rules': self.password_rules,
             'secret_strategy': self.secret_strategy,
+            'password_rules': self.password_rules,
             'ssh_key_change_strategy': self.ssh_key_change_strategy,

         })
         return attr_json
```

```diff
@@ -2,8 +2,6 @@ from django.db import models
 from django.utils.translation import ugettext_lazy as _

 from accounts.const import AutomationTypes
-from accounts.models import Account
-from jumpserver.utils import has_valid_xpack_license
 from .base import AccountBaseAutomation
 from .change_secret import ChangeSecretMixin

@@ -15,21 +13,6 @@ class PushAccountAutomation(ChangeSecretMixin, AccountBaseAutomation):
     username = models.CharField(max_length=128, verbose_name=_('Username'))
     action = models.CharField(max_length=16, verbose_name=_('Action'))

-    def create_nonlocal_accounts(self, usernames, asset):
-        secret_type = self.secret_type
-        account_usernames = asset.accounts.filter(secret_type=self.secret_type).values_list(
-            'username', flat=True
-        )
-        create_usernames = set(usernames) - set(account_usernames)
-        create_account_objs = [
-            Account(
-                name=f'{username}-{secret_type}', username=username,
-                secret_type=secret_type, asset=asset,
-            )
-            for username in create_usernames
-        ]
-        Account.objects.bulk_create(create_account_objs)
-
     def set_period_schedule(self):
         pass

@@ -44,8 +27,6 @@ class PushAccountAutomation(ChangeSecretMixin, AccountBaseAutomation):

     def save(self, *args, **kwargs):
         self.type = AutomationTypes.push_account
-        if not has_valid_xpack_license():
-            self.is_periodic = False
         super().save(*args, **kwargs)

     def to_attr_json(self):
```

```diff
@@ -12,7 +12,7 @@ from accounts.const import SecretType
 from common.db import fields
 from common.utils import (
     ssh_key_string_to_obj, ssh_key_gen, get_logger,
-    random_string, lazyproperty, parse_ssh_public_key_str, is_openssh_format_key
+    random_string, lazyproperty, parse_ssh_public_key_str
 )
 from orgs.mixins.models import JMSOrgBaseModel, OrgManager

@@ -118,13 +118,7 @@ class BaseAccount(JMSOrgBaseModel):
         key_name = '.' + md5(self.private_key.encode('utf-8')).hexdigest()
         key_path = os.path.join(tmp_dir, key_name)
         if not os.path.exists(key_path):
-            # https://github.com/ansible/ansible-runner/issues/544
-            # ssh requires OpenSSH format keys to have a full ending newline.
-            # It does not require this for old-style PEM keys.
-            with open(key_path, 'w') as f:
-                f.write(self.secret)
-                if is_openssh_format_key(self.secret.encode('utf-8')):
-                    f.write("\n")
+            self.private_key_obj.write_private_key_file(key_path)
             os.chmod(key_path, 0o400)
         return key_path

```
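
The comment in `BaseAccount` notes that ssh needs OpenSSH-format private keys to end with a newline, but does not need one for PEM keys. It cites ansible-runner issue #544. The sketch below writes a key file that way and then restricts it to owner read-only (`0o400`). Two assumptions apply:

- `is_openssh_key` is a hypothetical header check, not JumpServer's `is_openssh_format_key`.
- The permission check assumes a POSIX filesystem.

```python
import os
import stat
import tempfile

OPENSSH_HEADER = '-----BEGIN OPENSSH PRIVATE KEY-----'


def is_openssh_key(text):
    """Hypothetical stand-in for is_openssh_format_key: look at the header."""
    return text.lstrip().startswith(OPENSSH_HEADER)


def write_private_key(key_text, key_path):
    """Write a private key once, then make it owner read-only (0o400)."""
    if not os.path.exists(key_path):
        with open(key_path, 'w') as f:
            f.write(key_text)
            # OpenSSH-format keys must end with a newline; PEM keys are left as-is.
            if is_openssh_key(key_text) and not key_text.endswith('\n'):
                f.write('\n')
        os.chmod(key_path, 0o400)
    return key_path


if __name__ == '__main__':
    with tempfile.TemporaryDirectory() as d:
        key = OPENSSH_HEADER + '\nabc\n-----END OPENSSH PRIVATE KEY-----'
        path = write_private_key(key, os.path.join(d, 'demo_key'))
        print(oct(stat.S_IMODE(os.stat(path).st_mode)))
```

Unlike the removed lines in the diff, which append `"\n"` unconditionally for OpenSSH keys, this sketch adds the newline only when it is missing.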

```diff
@@ -81,7 +81,7 @@ class AccountAssetSerializer(serializers.ModelSerializer):

     def to_internal_value(self, data):
         if isinstance(data, dict):
-            i = data.get('id') or data.get('pk')
+            i = data.get('id')
         else:
             i = data

```
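
`AccountAssetSerializer.to_internal_value` accepts either a bare primary key or a dict. One variant reads only `'id'`; the other falls back to `'pk'`. The function below isolates that extraction step. The function name is hypothetical, and the sketch omits the asset lookup that follows in the serializer.

```python
def extract_pk(data):
    """Return the primary key from a bare value or a dict with 'id' / 'pk'."""
    if isinstance(data, dict):
        return data.get('id') or data.get('pk')
    return data


if __name__ == '__main__':
    print(extract_pk({'pk': 'a1'}), extract_pk({'id': 'b2'}), extract_pk('c3'))
```

Because the fallback uses `or`, a falsy `id` such as `''` also falls through to `'pk'`.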

```diff
@@ -135,15 +135,8 @@ class AccountHistorySerializer(serializers.ModelSerializer):

     class Meta:
         model = Account.history.model
-        fields = [
-            'id', 'secret', 'secret_type', 'version', 'history_date',
-            'history_user'
-        ]
+        fields = ['id', 'secret', 'secret_type', 'version', 'history_date', 'history_user']
         read_only_fields = fields
-        extra_kwargs = {
-            'history_user': {'label': _('User')},
-            'history_date': {'label': _('Date')},
-        }


 class AccountTaskSerializer(serializers.Serializer):
```

@@ -33,8 +33,7 @@ class AuthValidateMixin(serializers.Serializer):
return secret
elif secret_type == SecretType.SSH_KEY:
passphrase = passphrase if passphrase else None
secret = validate_ssh_key(secret, passphrase)
return secret
return validate_ssh_key(secret, passphrase)
else:
return secret

@@ -42,9 +41,8 @@ class AuthValidateMixin(serializers.Serializer):
secret_type = validated_data.get('secret_type')
passphrase = validated_data.get('passphrase')
secret = validated_data.pop('secret', None)
validated_data['secret'] = self.handle_secret(
secret, secret_type, passphrase
)
self.handle_secret(secret, secret_type, passphrase)
validated_data['secret'] = secret
for field in ('secret',):
value = validated_data.get(field)
if not value:
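
The two variants of this hunk differ in whether the value returned by handle_secret() is stored. The sketch below shows why that matters when the validator transforms the secret. normalize_ssh_key is a hypothetical stand-in for validate_ssh_key() that only trims whitespace and appends a newline, and the literal `'ssh_key'` is assumed to match SecretType.SSH_KEY.

```python
from typing import Optional


def normalize_ssh_key(secret: str, passphrase: Optional[str]) -> str:
    # Hypothetical stand-in for validate_ssh_key(): it only trims whitespace
    # and guarantees a trailing newline; the real validator also parses the key.
    return secret.strip() + '\n'


def handle_secret(secret: str, secret_type: str, passphrase: Optional[str]) -> str:
    if secret_type == 'ssh_key':  # assumed value of SecretType.SSH_KEY
        passphrase = passphrase or None  # treat '' the same as "no passphrase"
        return normalize_ssh_key(secret, passphrase)
    return secret


def build_validated(data: dict) -> dict:
    validated = dict(data)
    secret = validated.pop('secret', None)
    # Store the *returned* value: calling handle_secret() and then saving the
    # raw `secret` would silently discard any normalization it performed.
    validated['secret'] = handle_secret(
        secret, validated.get('secret_type'), validated.get('passphrase')
    )
    return validated
```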

@@ -23,10 +23,12 @@ def push_accounts_to_assets_task(account_ids):
task_name = gettext_noop("Push accounts to assets")
task_name = PushAccountAutomation.generate_unique_name(task_name)

task_snapshot = {
'accounts': [str(account.id) for account in accounts],
'assets': [str(account.asset_id) for account in accounts],
}

tp = AutomationTypes.push_account
quickstart_automation_by_snapshot(task_name, tp, task_snapshot)
for account in accounts:
task_snapshot = {
'secret': account.secret,
'secret_type': account.secret_type,
'accounts': [account.username],
'assets': [str(account.asset_id)],
}
tp = AutomationTypes.push_account
quickstart_automation_by_snapshot(task_name, tp, task_snapshot)
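
This hunk shows two shapes for the snapshot handed to quickstart_automation_by_snapshot(): one batched snapshot keyed by account ids, or one snapshot per account keyed by username and carrying that account's secret. The sketch below only builds the two shapes side by side. The Account dataclass is a hypothetical stand-in for the Django model, and nothing here calls the real automation API.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Account:  # hypothetical stand-in for the Django model
    id: str
    username: str
    asset_id: str
    secret: str
    secret_type: str


def batched_snapshot(accounts: List[Account]) -> Dict[str, List[str]]:
    # One automation run covering every account/asset pair, referenced by id.
    return {
        'accounts': [str(a.id) for a in accounts],
        'assets': [str(a.asset_id) for a in accounts],
    }


def per_account_snapshots(accounts: List[Account]) -> List[dict]:
    # One automation run per account, each carrying its own username and secret.
    return [
        {
            'secret': a.secret,
            'secret_type': a.secret_type,
            'accounts': [a.username],
            'assets': [str(a.asset_id)],
        }
        for a in accounts
    ]
```

The batched form starts a single task; the per-account form starts N tasks but lets each one push a different secret.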

@@ -17,9 +17,9 @@ __all__ = [
def verify_connectivity_util(assets, tp, accounts, task_name):
if not assets or not accounts:
return
account_ids = [str(account.id) for account in accounts]
account_usernames = list(accounts.values_list('username', flat=True))
task_snapshot = {
'accounts': account_ids,
'accounts': account_usernames,
'assets': [str(asset.id) for asset in assets],
}
quickstart_automation_by_snapshot(task_name, tp, task_snapshot)

@@ -12,7 +12,8 @@ from django.utils.translation import gettext as _
from sshtunnel import SSHTunnelForwarder, BaseSSHTunnelForwarderError

from assets.automations.methods import platform_automation_methods
from common.utils import get_logger, lazyproperty, is_openssh_format_key, ssh_pubkey_gen
from common.utils import get_logger, lazyproperty
from common.utils import ssh_pubkey_gen, ssh_key_string_to_obj
from ops.ansible import JMSInventory, PlaybookRunner, DefaultCallback

logger = get_logger(__name__)

@@ -126,13 +127,7 @@ class BasePlaybookManager:
key_path = os.path.join(path_dir, key_name)

if not os.path.exists(key_path):
# https://github.com/ansible/ansible-runner/issues/544
# ssh requires OpenSSH format keys to have a full ending newline.
# It does not require this for old-style PEM keys.
with open(key_path, 'w') as f:
f.write(secret)
if is_openssh_format_key(secret.encode('utf-8')):
f.write("\n")
ssh_key_string_to_obj(secret, password=None).write_private_key_file(key_path)
os.chmod(key_path, 0o400)
return key_path

@@ -1,35 +0,0 @@
__all__ = ['FormatAssetInfo']


class FormatAssetInfo:

def __init__(self, tp):
self.tp = tp

@staticmethod
def posix_format(info):
for cpu_model in info.get('cpu_model', []):
if cpu_model.endswith('GHz') or cpu_model.startswith("Intel"):
break
else:
cpu_model = ''
info['cpu_model'] = cpu_model[:48]
info['cpu_count'] = info.get('cpu_count', 0)
return info

def run(self, method_id_meta_mapper, info):
for k, v in info.items():
info[k] = v.strip() if isinstance(v, str) else v

run_method_name = None
for k, v in method_id_meta_mapper.items():
if self.tp not in v['type']:
continue
run_method_name = k.replace(f'{v["method"]}_', '')

if not run_method_name:
return info

if hasattr(self, f'{run_method_name}_format'):
return getattr(self, f'{run_method_name}_format')(info)
return info
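
The deleted posix_format() relies on Python's for/else: the else branch runs only when the loop ends without break. The sketch below reproduces that logic as a free function, with type hints and comments added. The sample input assumes the Linux form of Ansible's ansible_processor fact, which interleaves processor indexes, vendor strings and model names.

```python
from typing import Any, Dict


def posix_format(info: Dict[str, Any]) -> Dict[str, Any]:
    """Collapse a processor list to a single, short CPU model string."""
    for cpu_model in info.get('cpu_model', []):
        # Keep the first entry that looks like a model name rather than an
        # index ("0") or a vendor string ("GenuineIntel").
        if cpu_model.endswith('GHz') or cpu_model.startswith('Intel'):
            break
    else:
        # for/else: reached only when no entry matched (or the list was empty).
        cpu_model = ''
    info['cpu_model'] = cpu_model[:48]
    info['cpu_count'] = info.get('cpu_count', 0)
    return info
```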

@@ -11,7 +11,7 @@
cpu_count: "{{ ansible_processor_count }}"
cpu_cores: "{{ ansible_processor_cores }}"
cpu_vcpus: "{{ ansible_processor_vcpus }}"
memory: "{{ ansible_memtotal_mb / 1024 | round(2) }}"
memory: "{{ ansible_memtotal_mb }}"
disk_total: "{{ (ansible_mounts | map(attribute='size_total') | sum / 1024 / 1024 / 1024) | round(2) }}"
distribution: "{{ ansible_distribution }}"
distribution_version: "{{ ansible_distribution_version }}"
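
In Jinja2, filters bind more tightly than arithmetic operators, so `ansible_memtotal_mb / 1024 | round(2)` applies `round(2)` to `1024` rather than to the quotient. The `disk_total` line avoids this by parenthesizing the arithmetic first. The Python sketch below mirrors both readings (Jinja2's default `round` filter delegates to Python's `round()`); the function names are illustrative only.

```python
def memory_gib_unparenthesized(memtotal_mb: int) -> float:
    # Mirrors "{{ ansible_memtotal_mb / 1024 | round(2) }}": the filter binds
    # to 1024 only, so the quotient itself is never rounded.
    return memtotal_mb / round(1024, 2)


def memory_gib(memtotal_mb: int) -> float:
    # Mirrors "{{ (ansible_memtotal_mb / 1024) | round(2) }}".
    return round(memtotal_mb / 1024, 2)


print(memory_gib_unparenthesized(7821), memory_gib(7821))
```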

@@ -1,6 +1,5 @@
from assets.const import AutomationTypes
from common.utils import get_logger
from .format_asset_info import FormatAssetInfo
from assets.const import AutomationTypes
from ..base.manager import BasePlaybookManager

logger = get_logger(__name__)

@@ -20,16 +19,13 @@ class GatherFactsManager(BasePlaybookManager):
self.host_asset_mapper[host['name']] = asset
return host

def format_asset_info(self, tp, info):
info = FormatAssetInfo(tp).run(self.method_id_meta_mapper, info)
return info

def on_host_success(self, host, result):
info = result.get('debug', {}).get('res', {}).get('info', {})
asset = self.host_asset_mapper.get(host)
if asset and info:
info = self.format_asset_info(asset.type, info)
for k, v in info.items():
info[k] = v.strip() if isinstance(v, str) else v
asset.info = info
asset.save(update_fields=['info'])
asset.save()
else:
logger.error("Not found info: {}".format(host))

@@ -1,12 +1,10 @@
from django.utils.translation import gettext_lazy as _

from .base import BaseType


class CloudTypes(BaseType):
PUBLIC = 'public', _('Public cloud')
PRIVATE = 'private', _('Private cloud')
K8S = 'k8s', _('Kubernetes')
PUBLIC = 'public', 'Public cloud'
PRIVATE = 'private', 'Private cloud'
K8S = 'k8s', 'Kubernetes'

@classmethod
def _get_base_constrains(cls) -> dict:

@@ -1,5 +1,3 @@
from django.utils.translation import gettext_lazy as _

from .base import BaseType

GATEWAY_NAME = 'Gateway'

@@ -9,7 +7,7 @@ class HostTypes(BaseType):
LINUX = 'linux', 'Linux'
WINDOWS = 'windows', 'Windows'
UNIX = 'unix', 'Unix'
OTHER_HOST = 'other', _("Other")
OTHER_HOST = 'other', "Other"

@classmethod
def _get_base_constrains(cls) -> dict:

@@ -39,7 +39,7 @@ class Protocol(ChoicesMixin, models.TextChoices):
'port': 3389,
'secret_types': ['password'],
'setting': {
'console': False,
'console': True,
'security': 'any',
}
},

@@ -214,13 +214,10 @@ class AllTypes(ChoicesMixin):
tp_node = cls.choice_to_node(tp, category_node['id'], opened=False, meta=meta)
tp_count = category_type_mapper.get(category + '_' + tp, 0)
tp_node['name'] += f'({tp_count})'
platforms = tp_platforms.get(category + '_' + tp, [])
if not platforms:
tp_node['isParent'] = False
nodes.append(tp_node)

# Platform formatting
for p in platforms:
for p in tp_platforms.get(category + '_' + tp, []):
platform_node = cls.platform_to_node(p, tp_node['id'], include_asset)
platform_node['name'] += f'({platform_count.get(p.id, 0)})'
nodes.append(platform_node)

@@ -309,11 +306,10 @@ class AllTypes(ChoicesMixin):
protocols_data = deepcopy(default_protocols)
if _protocols:
protocols_data = [p for p in protocols_data if p['name'] in _protocols]

for p in protocols_data:
setting = _protocols_setting.get(p['name'], {})
p['required'] = setting.pop('required', False)
p['default'] = setting.pop('default', False)
p['required'] = p.pop('required', False)
p['default'] = p.pop('default', False)
p['setting'] = {**p.get('setting', {}), **setting}

platform_data = {
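
The first variant of this loop lifts `required`/`default` out of the per-platform overrides and merges the remaining keys over the protocol's built-in `setting`, with overrides winning. Below is a standalone sketch of that merge. The function name build_protocols and its parameters are hypothetical. Unlike the diffed code, it copies each override dict before popping from it, so the caller's data is not mutated.

```python
from copy import deepcopy
from typing import Any, Dict, List, Optional


def build_protocols(default_protocols: List[Dict[str, Any]],
                    enabled: Optional[List[str]],
                    overrides: Dict[str, Dict[str, Any]]) -> List[Dict[str, Any]]:
    protocols = deepcopy(default_protocols)  # never mutate the shared defaults
    if enabled:
        protocols = [p for p in protocols if p['name'] in enabled]
    for p in protocols:
        setting = dict(overrides.get(p['name'], {}))  # copy before popping
        # 'required' and 'default' are top-level flags, not protocol settings.
        p['required'] = setting.pop('required', False)
        p['default'] = setting.pop('default', False)
        # Later keys win in a dict merge, so per-platform settings take priority.
        p['setting'] = {**p.get('setting', {}), **setting}
    return protocols
```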

@@ -34,9 +34,8 @@ def migrate_database_to_asset(apps, *args):
_attrs = app.attrs or {}
attrs.update(_attrs)

name = 'DB-{}'.format(app.name)
db = db_model(
id=app.id, name=name, address=attrs['host'],
id=app.id, name=app.name, address=attrs['host'],
protocols='{}/{}'.format(app.type, attrs['port']),
db_name=attrs['database'] or '',
platform=platforms_map[app.type],

@@ -62,9 +61,8 @@ def migrate_cloud_to_asset(apps, *args):
for app in applications:
attrs = app.attrs
print("\t- Create cloud: {}".format(app.name))
name = 'Cloud-{}'.format(app.name)
cloud = cloud_model(
id=app.id, name=name,
id=app.id, name=app.name,
address=attrs.get('cluster', ''),
protocols='k8s/443', platform=platform,
org_id=app.org_id,

@@ -1,29 +0,0 @@
# Generated by Django 3.2.17 on 2023-03-15 09:41

from django.db import migrations


def set_windows_platform_non_console(apps, schema_editor):
Platform = apps.get_model('assets', 'Platform')
names = ['Windows', 'Windows-RDP', 'Windows-TLS', 'RemoteAppHost']
windows = Platform.objects.filter(name__in=names)
if not windows:
return

for p in windows:
rdp = p.protocols.filter(name='rdp').first()
if not rdp:
continue
rdp.setting['console'] = False
rdp.save()


class Migration(migrations.Migration):

dependencies = [
('assets', '0109_alter_asset_options'),
]

operations = [
migrations.RunPython(set_windows_platform_non_console)
]

@@ -108,7 +108,7 @@ class Asset(NodesRelationMixin, AbsConnectivity, JMSOrgBaseModel):
verbose_name=_("Nodes"))
is_active = models.BooleanField(default=True, verbose_name=_('Is active'))
labels = models.ManyToManyField('assets.Label', blank=True, related_name='assets', verbose_name=_("Labels"))
info = models.JSONField(verbose_name=_('Info'), default=dict, blank=True) # Some information about the asset, e.g. hardware info
info = models.JSONField(verbose_name='Info', default=dict, blank=True) # Some information about the asset, e.g. hardware info

objects = AssetManager.from_queryset(AssetQuerySet)()

@@ -11,7 +11,7 @@ __all__ = ['Platform', 'PlatformProtocol', 'PlatformAutomation']

class PlatformProtocol(models.Model):
SETTING_ATTRS = {
'console': False,
'console': True,
'security': 'any,tls,rdp',
'sftp_enabled': True,
'sftp_home': '/tmp'

@@ -26,13 +26,6 @@ __all__ = [
class AssetProtocolsSerializer(serializers.ModelSerializer):
port = serializers.IntegerField(required=False, allow_null=True, max_value=65535, min_value=1)

def to_file_representation(self, data):
return '{name}/{port}'.format(**data)

def to_file_internal_value(self, data):
name, port = data.split('/')
return {'name': name, 'port': port}

class Meta:
model = Protocol
fields = ['name', 'port']
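
The removed to_file_representation/to_file_internal_value pair serializes a protocol as `name/port` text for file import and export. A standalone round-trip sketch follows, with two assumed hardening tweaks that are not in the diffed code: it splits only on the last slash, and it converts the port back to int (the original returns it as a string).

```python
from typing import Dict, Union


def to_file_representation(data: Dict[str, Union[str, int]]) -> str:
    return '{name}/{port}'.format(**data)


def to_file_internal_value(text: str) -> Dict[str, Union[str, int]]:
    # rsplit('/', 1) splits on the last slash only; int() restores the numeric
    # port (raises ValueError on malformed input, as the original split does).
    name, port = text.rsplit('/', 1)
    return {'name': name, 'port': int(port)}
```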

@@ -71,8 +64,7 @@ class AssetAccountSerializer(
template = serializers.BooleanField(
default=False, label=_("Template"), write_only=True
)
name = serializers.CharField(max_length=128, required=False, label=_("Name"))
secret_type = serializers.CharField(max_length=64, default='password', label=_("Secret type"))
name = serializers.CharField(max_length=128, required=True, label=_("Name"))

class Meta:
model = Account

@@ -81,7 +73,7 @@ class AssetAccountSerializer(
'is_active', 'version', 'secret_type',
]
fields_write_only = [
'secret', 'passphrase', 'push_now', 'template'
'secret', 'push_now', 'template'
]
fields = fields_mini + fields_write_only
extra_kwargs = {

@@ -129,8 +121,7 @@ class AssetSerializer(BulkOrgResourceModelSerializer, WritableNestedModelSeriali
type = LabeledChoiceField(choices=AllTypes.choices(), read_only=True, label=_('Type'))
labels = AssetLabelSerializer(many=True, required=False, label=_('Label'))
protocols = AssetProtocolsSerializer(many=True, required=False, label=_('Protocols'), default=())
accounts = AssetAccountSerializer(many=True, required=False, allow_null=True, write_only=True, label=_('Account'))
nodes_display = serializers.ListField(read_only=True, label=_("Node path"))
accounts = AssetAccountSerializer(many=True, required=False, write_only=True, label=_('Account'))

class Meta:
model = Asset

@@ -142,11 +133,11 @@ class AssetSerializer(BulkOrgResourceModelSerializer, WritableNestedModelSeriali
'nodes_display', 'accounts'
]
read_only_fields = [
'category', 'type', 'connectivity', 'auto_info',
'category', 'type', 'connectivity',
'date_verified', 'created_by', 'date_created',
'auto_info',
]
fields = fields_small + fields_fk + fields_m2m + read_only_fields
fields_unexport = ['auto_info']
extra_kwargs = {
'auto_info': {'label': _('Auto info')},
'name': {'label': _("Name")},

@@ -159,7 +150,7 @@ class AssetSerializer(BulkOrgResourceModelSerializer, WritableNestedModelSeriali
self._init_field_choices()

def _get_protocols_required_default(self):
platform = self._asset_platform
platform = self._initial_data_platform
platform_protocols = platform.protocols.all()
protocols_default = [p for p in platform_protocols if p.default]
protocols_required = [p for p in platform_protocols if p.required or p.primary]

@@ -215,22 +206,20 @@ class AssetSerializer(BulkOrgResourceModelSerializer, WritableNestedModelSeriali
instance.nodes.set(nodes_to_set)

@property
def _asset_platform(self):
def _initial_data_platform(self):
if self.instance:
return self.instance.platform

platform_id = self.initial_data.get('platform')
if isinstance(platform_id, dict):
platform_id = platform_id.get('id') or platform_id.get('pk')

if not platform_id and self.instance:
platform = self.instance.platform
else:
platform = Platform.objects.filter(id=platform_id).first()

platform = Platform.objects.filter(id=platform_id).first()
if not platform:
raise serializers.ValidationError({'platform': _("Platform not exist")})
return platform

def validate_domain(self, value):
platform = self._asset_platform
platform = self._initial_data_platform
if platform.domain_enabled:
return value
else:

@@ -274,8 +263,6 @@ class AssetSerializer(BulkOrgResourceModelSerializer, WritableNestedModelSeriali

@staticmethod
def accounts_create(accounts_data, asset):
if not accounts_data:
return
for data in accounts_data:
data['asset'] = asset
AssetAccountSerializer().create(data)

@@ -1,25 +1,26 @@
from django.utils.translation import gettext_lazy as _
from rest_framework import serializers
from django.utils.translation import gettext_lazy as _

from assets.models import Host
from .common import AssetSerializer


__all__ = ['HostInfoSerializer', 'HostSerializer']


class HostInfoSerializer(serializers.Serializer):
vendor = serializers.CharField(max_length=64, required=False, allow_blank=True, label=_('Vendor'))
model = serializers.CharField(max_length=54, required=False, allow_blank=True, label=_('Model'))
sn = serializers.CharField(max_length=128, required=False, allow_blank=True, label=_('Serial number'))
cpu_model = serializers.CharField(max_length=64, allow_blank=True, required=False, label=_('CPU model'))
cpu_count = serializers.CharField(max_length=64, required=False, allow_blank=True, label=_('CPU count'))
cpu_cores = serializers.CharField(max_length=64, required=False, allow_blank=True, label=_('CPU cores'))
cpu_vcpus = serializers.CharField(max_length=64, required=False, allow_blank=True, label=_('CPU vcpus'))
model = serializers.CharField(max_length=54, required=False, allow_blank=True, label=_('Model'))
sn = serializers.CharField(max_length=128, required=False, allow_blank=True, label=_('Serial number'))
cpu_model = serializers.ListField(child=serializers.CharField(max_length=64, allow_blank=True), required=False, label=_('CPU model'))
cpu_count = serializers.IntegerField(required=False, label=_('CPU count'))
cpu_cores = serializers.IntegerField(required=False, label=_('CPU cores'))
cpu_vcpus = serializers.IntegerField(required=False, label=_('CPU vcpus'))
memory = serializers.CharField(max_length=64, allow_blank=True, required=False, label=_('Memory'))
disk_total = serializers.CharField(max_length=1024, allow_blank=True, required=False, label=_('Disk total'))

distribution = serializers.CharField(max_length=128, allow_blank=True, required=False, label=_('OS'))
distribution_version = serializers.CharField(max_length=16, allow_blank=True, required=False, label=_('OS version'))
distribution_version = serializers.CharField(max_length=16, allow_blank=True, required=False, label=_('OS version'))
arch = serializers.CharField(max_length=16, allow_blank=True, required=False, label=_('OS arch'))

@@ -35,3 +36,5 @@ class HostSerializer(AssetSerializer):
'label': _("IP/Host")
},
}

@@ -29,8 +29,7 @@ class LabelSerializer(BulkOrgResourceModelSerializer):

@classmethod
def setup_eager_loading(cls, queryset):
queryset = queryset.prefetch_related('assets') \
.annotate(asset_count=Count('assets'))
queryset = queryset.annotate(asset_count=Count('assets'))
return queryset

@@ -1,5 +1,6 @@
from django.utils.translation import gettext_lazy as _
from rest_framework import serializers
from django.core import validators

from assets.const.web import FillType
from common.serializers import WritableNestedModelSerializer

@@ -18,7 +19,7 @@ class ProtocolSettingSerializer(serializers.Serializer):
("nla", "NLA"),
]
# RDP
console = serializers.BooleanField(required=False, default=False)
console = serializers.BooleanField(required=False)
security = serializers.ChoiceField(choices=SECURITY_CHOICES, default="any")

# SFTP

@@ -1,11 +1,14 @@
# -*- coding: utf-8 -*-
from urllib.parse import urlencode
from urllib3.exceptions import MaxRetryError, LocationParseError

from kubernetes import client
from kubernetes.client import api_client
from kubernetes.client.api import core_v1_api
from kubernetes.client.exceptions import ApiException

from common.utils import get_logger
from common.exceptions import JMSException
from ..const import CloudTypes, Category

logger = get_logger(__file__)

@@ -17,8 +20,7 @@ class KubernetesClient:
self.token = token
self.proxy = proxy

@property
def api(self):
def get_api(self):
configuration = client.Configuration()
configuration.host = self.url
configuration.proxy = self.proxy

@@ -28,29 +30,64 @@ class KubernetesClient:
api = core_v1_api.CoreV1Api(c)
return api

def get_namespaces(self):
namespaces = []
resp = self.api.list_namespace()
for ns in resp.items:
namespaces.append(ns.metadata.name)
return namespaces
def get_namespace_list(self):
api = self.get_api()
namespace_list = []
for ns in api.list_namespace().items:
namespace_list.append(ns.metadata.name)
return namespace_list

def get_pods(self, namespace):
pods = []
resp = self.api.list_namespaced_pod(namespace)
for pd in resp.items:
pods.append(pd.metadata.name)
return pods
def get_services(self):
api = self.get_api()
ret = api.list_service_for_all_namespaces(watch=False)
for i in ret.items:
print("%s \t%s \t%s \t%s \t%s \n" % (
i.kind, i.metadata.namespace, i.metadata.name, i.spec.cluster_ip, i.spec.ports))

def get_containers(self, namespace, pod_name):
containers = []
resp = self.api.read_namespaced_pod(pod_name, namespace)
for container in resp.spec.containers:
containers.append(container.name)
return containers
def get_pod_info(self, namespace, pod):
api = self.get_api()
resp = api.read_namespaced_pod(namespace=namespace, name=pod)
return resp

@staticmethod
def get_proxy_url(asset):
def get_pod_logs(self, namespace, pod):
api = self.get_api()
log_content = api.read_namespaced_pod_log(pod, namespace, pretty=True, tail_lines=200)
return log_content

def get_pods(self):
api = self.get_api()
try:
ret = api.list_pod_for_all_namespaces(watch=False, _request_timeout=(3, 3))
except LocationParseError as e:
logger.warning("Kubernetes API request url error: {}".format(e))
raise JMSException(code='k8s_tree_error', detail=e)
except MaxRetryError:
msg = "Kubernetes API request timeout"
logger.warning(msg)
raise JMSException(code='k8s_tree_error', detail=msg)
except ApiException as e:
if e.status == 401:
msg = "Kubernetes API request unauthorized"
logger.warning(msg)
else:
msg = e
logger.warning(msg)
raise JMSException(code='k8s_tree_error', detail=msg)
data = {}
for i in ret.items:
namespace = i.metadata.namespace
pod_info = {
'pod_name': i.metadata.name,
'containers': [j.name for j in i.spec.containers]
}
if namespace in data:
data[namespace].append(pod_info)
else:
data[namespace] = [pod_info, ]
return data

@classmethod
def get_proxy_url(cls, asset):
if not asset.domain:
return None
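
One version of get_pods() fetches every pod with a single list_pod_for_all_namespaces() call and groups the results into `{namespace: [pod_info, ...]}`. The sketch below isolates only the grouping step. It assumes plain `(namespace, pod_name, container_names)` tuples in place of the client's V1Pod objects, so it runs without a cluster, and it uses `defaultdict` instead of the explicit if/else.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def group_pods_by_namespace(
        pods: Iterable[Tuple[str, str, List[str]]]) -> Dict[str, List[dict]]:
    """Group (namespace, pod_name, container_names) tuples by namespace."""
    data: Dict[str, List[dict]] = defaultdict(list)
    for namespace, pod_name, containers in pods:
        data[namespace].append({'pod_name': pod_name, 'containers': list(containers)})
    return dict(data)  # plain dict, so missing namespaces raise KeyError again
```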

@@ -60,14 +97,11 @@ class KubernetesClient:
return f'{gateway.address}:{gateway.port}'

@classmethod
def run(cls, asset, secret, tp, *args):
def get_kubernetes_data(cls, asset, secret):
k8s_url = f'{asset.address}'
proxy_url = cls.get_proxy_url(asset)
k8s = cls(k8s_url, secret, proxy=proxy_url)
func_name = f'get_{tp}s'
if hasattr(k8s, func_name):
return getattr(k8s, func_name)(*args)
return []
return k8s.get_pods()


class KubernetesTree:
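
The run() variant dispatches on a resource type by building the method name `f'get_{tp}s'` and looking it up dynamically; the get_kubernetes_data() variant simply calls get_pods(). A minimal sketch of the dynamic dispatch follows, with a hypothetical FakeClient holding canned data in place of KubernetesClient.

```python
from typing import Any, List


class FakeClient:
    """Hypothetical stand-in for KubernetesClient with canned data."""

    def get_namespaces(self) -> List[str]:
        return ['default', 'kube-system']

    def get_pods(self, namespace: str) -> List[str]:
        return [f'{namespace}-pod']


def run(client: Any, tp: str, *args: Any) -> List[str]:
    # Map a resource type such as "namespace" to the method "get_namespaces";
    # unknown types fall back to an empty list instead of raising.
    func = getattr(client, f'get_{tp}s', None)
    return func(*args) if callable(func) else []
```

Naive pluralization only works for regular nouns, and any `get_*s` method becomes reachable through `tp`. If `tp` can come from a request, an explicit allowlist of types is the safer design.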

@@ -83,15 +117,17 @@ class KubernetesTree:
)
return node
