Update python.py (experimental: Added code for PythonREPL)

Added code for PythonREPL, defining a static method `sanitize_input` that takes the string `query` as input and returns a sanitized string.

The purpose of this method is to remove unwanted characters from the input string. Specifically:

1. Remove whitespace (`\s`) at the beginning and end of the string.

2. Remove backticks (`` ` ``) at the beginning and end of the string.

3. Remove the keyword "python" at the beginning of the string (case-insensitive), because the user may have typed it.

This method uses regular expressions (regex) to perform the sanitization.
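The three steps above can be expressed as two regex substitutions. The following is a minimal sketch under that assumption; the exact patterns are illustrative and not necessarily the code merged into `langchain_experimental`.

```python
import re


def sanitize_input(query: str) -> str:
    """Sketch: strip surrounding whitespace/backticks and a leading 'python' tag."""
    # Leading whitespace/backticks, then an optional case-insensitive "python"
    # language tag, then any whitespace that follows the tag.
    query = re.sub(r"^(\s|`)*(?i:python)?\s*", "", query)
    # Trailing whitespace/backticks.
    query = re.sub(r"(\s|`)*$", "", query)
    return query


print(sanitize_input("```python\nprint('hello')\n```"))  # print('hello')
```

One design caveat: because a leading "python" is stripped whenever it appears, input such as `python_version = 3` would become `_version = 3`, so a stricter pattern may be preferable in practice.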

It all started with this code:

from langchain.agents import Tool
from langchain_experimental.utilities import PythonREPL

python_repl = PythonREPL()
repl_tool = Tool(
    name="python_repl",
    description=(
        "Remove redundant formatting marks at the beginning and end of source code "
        "from input. Use a Python shell to execute python commands. If you want to "
        "see the output of a value, you should print it out with `print(...)`."
    ),
    func=python_repl.run,
)

When I asked the agent to write a piece of code and execute it with the tool defined above, I always got an error: SyntaxError('invalid syntax', ('<string>', 1, 1,'In', 1, 2))

After investigating, I found that PythonREPL does less formatting of the input code than the soon-to-be-deprecated PythonREPL tool, so I added this step to it. Now, no matter what code I ask the agent to write, it executes smoothly and returns its output.

I had previously tried modifying the prompt to solve this problem, but it did not work; adding a simple format check resolved it.

<img width="1271" alt="image"

src="https://github.com/langchain-ai/langchain/assets/164149097/c49a685f-d246-4b11-b655-fd952fc2f04c">

---------

Co-authored-by: Bagatur <22008038+baskaryan@users.noreply.github.com>

Co-authored-by: Bagatur <baskaryan@gmail.com>

Implemented `bind_tools` for `OllamaFunctions`.

Made `OllamaFunctions` a subclass of `ChatOllama`.

Implemented `with_structured_output` for `OllamaFunctions`.

The integration unit test has been updated.

The notebook has been updated.

---------

Co-authored-by: Bagatur <baskaryan@gmail.com>

**Description:** Fixes a bug in the HuggingGPT task execution logic

here:

except Exception as e:

self.status = "failed"

self.message = str(e)

self.status = "completed"

self.save_product()

where a caught exception effectively only sets `self.message`; execution then continues past the `except` block and can throw another exception if, e.g., `self.product` is not defined.
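One common way to keep the success path from running after a failure is Python's `try`/`except`/`else`. The sketch below uses a hypothetical `Task` class: the attribute names mirror the snippet above, but the class, its `action` callable, and the `save_product` behavior are assumptions, not the HuggingGPT code.

```python
from typing import Any, Callable, Optional


class Task:
    """Hypothetical stand-in for a HuggingGPT task (names are illustrative)."""

    def __init__(self, action: Callable[[], Any]) -> None:
        self.action = action
        self.status = "pending"
        self.message = ""
        self.product: Optional[Any] = None
        self.saved = False

    def save_product(self) -> None:
        # Like the real code may, this raises when no product exists.
        if self.product is None:
            raise ValueError("no product to save")
        self.saved = True

    def run(self) -> str:
        try:
            self.product = self.action()
        except Exception as e:
            # Record the failure and stop; do not fall through to "completed".
            self.status = "failed"
            self.message = str(e)
        else:
            # Only reached when the action succeeded.
            self.status = "completed"
            self.save_product()
        return self.status
```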

**Issue:** None that I'm aware of.

**Dependencies:** None

**Twitter handle:** https://twitter.com/michaeljschock

Co-authored-by: Bagatur <22008038+baskaryan@users.noreply.github.com>

- **Description:** Currently, the regex is static (`r"(?<=[.?!])\s+"`), which is only useful for certain use cases. This change moves it into a parameter of `split_text()`, which adds flexibility without adding complexity, since the default regex is unchanged.

- **Issue:** Not applicable (I searched, no one seems to have created

this issue yet).

- **Dependencies:** None.
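Making the sentence-splitting regex a parameter with the old pattern as its default can be sketched as follows; the helper and parameter names are hypothetical and only illustrate the idea.

```python
import re
from typing import List

# Default mirrors the previously hardcoded pattern: split after ., ? or !
DEFAULT_SENTENCE_SPLIT_REGEX = r"(?<=[.?!])\s+"


def split_sentences(
    text: str, sentence_split_regex: str = DEFAULT_SENTENCE_SPLIT_REGEX
) -> List[str]:
    """Split text into sentences with a caller-supplied regex (hypothetical helper)."""
    return re.split(sentence_split_regex, text)


print(split_sentences("Hello there. How are you? Fine!"))
# ['Hello there.', 'How are you?', 'Fine!']
print(split_sentences("para one\n\npara two", sentence_split_regex=r"\n{2,}"))
# ['para one', 'para two']
```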


---------

Co-authored-by: Bagatur <22008038+baskaryan@users.noreply.github.com>

Co-authored-by: Bagatur <baskaryan@gmail.com>

- **Description:** In January, Laiyer.ai became part of ProtectAI, so the model is now owned by ProtectAI. In addition, yesterday we released a new version of the model that addresses the false positives reported to us by the LangChain community and others. The new model is more accurate than the previous version, and we think the LangChain community would benefit from using the [latest version of the model](https://huggingface.co/protectai/deberta-v3-base-prompt-injection-v2).

- **Issue:** N/A

- **Dependencies:** N/A

- **Twitter handle:** @alex_yaremchuk

Replaced `from langchain.prompts` with `from langchain_core.prompts`

where it is appropriate.

Most of the changes are in `langchain_experimental`.

Similar to #20348

Replaced all `from langchain.callbacks` imports with `from langchain_core.callbacks`.

The changes are in the `langchain` and `langchain_experimental` packages.

---------

Co-authored-by: Erick Friis <erick@langchain.dev>

LLMs sometimes return invalid responses to the LLM graph transformer. Instead of failing on pydantic validation, we now skip it, check the output manually, and fix errors where possible, so that more information gets extracted.
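The "skip, check, and fix" approach can be sketched as a lenient cleanup pass over raw relationship dicts. The field names (`head`, `head_type`, `relation`, `tail`, `tail_type`) and the default node type are assumptions for illustration, not the transformer's actual schema or code.

```python
from typing import Any, Dict, List

REQUIRED_KEYS = ("head", "relation", "tail")


def clean_relationships(raw: List[Any], default_type: str = "Node") -> List[Dict[str, str]]:
    """Keep recoverable relationships, repair missing types, drop the rest."""
    cleaned: List[Dict[str, str]] = []
    for item in raw:
        if not isinstance(item, dict):
            continue  # skip entries that are not even dicts
        if any(not item.get(key) for key in REQUIRED_KEYS):
            continue  # cannot recover without head, relation and tail
        cleaned.append(
            {
                "head": str(item["head"]),
                "head_type": str(item.get("head_type") or default_type),
                "relation": str(item["relation"]),
                "tail": str(item["tail"]),
                "tail_type": str(item.get("tail_type") or default_type),
            }
        )
    return cleaned
```

Compared with strict validation, this trades some precision for recall: partially valid output still contributes nodes and edges instead of discarding the whole response.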

Removes the required usages of `requests` from `langchain-core`, all of which were deprecated:

- removes Tracer V1 implementations

- removes old `try_load_from_hub` github-based hub implementations

The removal is done so that imports still succeed, but usage fails with a `RuntimeError`.
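The "imports succeed, usage fails" pattern amounts to keeping the public name as a stub that raises when called. A minimal sketch, assuming a generic `*args, **kwargs` signature; the error message wording is an assumption, not the text used in `langchain-core`.

```python
from typing import Any


def try_load_from_hub(*args: Any, **kwargs: Any) -> Any:
    """Stub kept so existing imports still work; any call fails loudly."""
    raise RuntimeError(
        "try_load_from_hub has been removed; loading from the old "
        "github-based hub is no longer supported."
    )
```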

Description: Video imagery to text (Closed Captioning)

This pull request introduces the VideoCaptioningChain, a tool for

automated video captioning. It processes audio and video to generate

subtitles and closed captions, merging them into a single SRT output.

Issue: https://github.com/langchain-ai/langchain/issues/11770

Dependencies: opencv-python, ffmpeg-python, assemblyai, transformers,

pillow, torch, openai

Tag maintainer:

@baskaryan

@hwchase17
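Merging subtitles and closed captions into one SRT output boils down to sorting timed entries and writing numbered `HH:MM:SS,mmm --> HH:MM:SS,mmm` blocks. The sketch below assumes each caption is a `(start_seconds, end_seconds, text)` tuple; that data shape and the helper names are hypothetical, not `VideoCaptioningChain` internals.

```python
from typing import List, Tuple


def format_timestamp(seconds: float) -> str:
    """Format seconds as an SRT timestamp HH:MM:SS,mmm."""
    total_ms = int(round(seconds * 1000))
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def to_srt(captions: List[Tuple[float, float, str]]) -> str:
    """Merge (start, end, text) entries, ordered by start time, into one SRT string."""
    blocks = []
    for index, (start, end, text) in enumerate(sorted(captions), start=1):
        blocks.append(
            f"{index}\n{format_timestamp(start)} --> {format_timestamp(end)}\n{text}\n"
        )
    # SRT entries are separated by a blank line.
    return "\n".join(blocks)


# Speech subtitle and a sound-effect caption, given out of order.
print(to_srt([(2.0, 3.0, "[door slams]"), (0.0, 1.5, "Hello")]))
```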

Hello! We are a group of students from the University of Toronto (@LunarECL, @TomSadan, @nicoledroi1, @A2113S) who want to contribute to the LangChain community! We ran `make format`, `make lint`, and `make test` locally before submitting the PR. To our knowledge, our changes do not introduce any new errors.

Thank you for taking the time to review our PR!

---------

Co-authored-by: Bagatur <baskaryan@gmail.com>

**Description:**

When using `SQLDatabaseChain` with the Llama2-70b LLM and a SQLite database, I was getting `Warning: You can only execute one statement at a time.`

```

from langchain.sql_database import SQLDatabase

from langchain_experimental.sql import SQLDatabaseChain

sql_database_path = '/dccstor/mmdataretrieval/mm_dataset/swimming_record/rag_data/swimmingdataset.db'

sql_db = get_database(sql_database_path)

db_chain = SQLDatabaseChain.from_llm(mistral, sql_db, verbose=True, callbacks = [callback_obj])

db_chain.invoke({

"query": "What is the best time of Lance Larson in men's 100 meter butterfly competition?"

})

```

Error:

```

Warning Traceback (most recent call last)

Cell In[31], line 3

1 import langchain

2 langchain.debug=False

----> 3 db_chain.invoke({

4 "query": "What is the best time of Lance Larson in men's 100 meter butterfly competition?"

5 })

File ~/.conda/envs/guardrails1/lib/python3.9/site-packages/langchain/chains/base.py:162, in Chain.invoke(self, input, config, **kwargs)

160 except BaseException as e:

161 run_manager.on_chain_error(e)

--> 162 raise e

163 run_manager.on_chain_end(outputs)

164 final_outputs: Dict[str, Any] = self.prep_outputs(

165 inputs, outputs, return_only_outputs

166 )

File ~/.conda/envs/guardrails1/lib/python3.9/site-packages/langchain/chains/base.py:156, in Chain.invoke(self, input, config, **kwargs)

149 run_manager = callback_manager.on_chain_start(

150 dumpd(self),

151 inputs,

152 name=run_name,

153 )

154 try:

155 outputs = (

--> 156 self._call(inputs, run_manager=run_manager)

157 if new_arg_supported

158 else self._call(inputs)

159 )

160 except BaseException as e:

161 run_manager.on_chain_error(e)

File ~/.conda/envs/guardrails1/lib/python3.9/site-packages/langchain_experimental/sql/base.py:198, in SQLDatabaseChain._call(self, inputs, run_manager)

194 except Exception as exc:

195 # Append intermediate steps to exception, to aid in logging and later

196 # improvement of few shot prompt seeds

197 exc.intermediate_steps = intermediate_steps # type: ignore

--> 198 raise exc

File ~/.conda/envs/guardrails1/lib/python3.9/site-packages/langchain_experimental/sql/base.py:143, in SQLDatabaseChain._call(self, inputs, run_manager)

139 intermediate_steps.append(

140 sql_cmd

141 ) # output: sql generation (no checker)

142 intermediate_steps.append({"sql_cmd": sql_cmd}) # input: sql exec

--> 143 result = self.database.run(sql_cmd)

144 intermediate_steps.append(str(result)) # output: sql exec

145 else:

File ~/.conda/envs/guardrails1/lib/python3.9/site-packages/langchain_community/utilities/sql_database.py:436, in SQLDatabase.run(self, command, fetch, include_columns)

425 def run(

426 self,

427 command: str,

428 fetch: Literal["all", "one"] = "all",

429 include_columns: bool = False,

430 ) -> str:

431 """Execute a SQL command and return a string representing the results.

432

433 If the statement returns rows, a string of the results is returned.

434 If the statement returns no rows, an empty string is returned.

435 """

--> 436 result = self._execute(command, fetch)

438 res = [

439 {

440 column: truncate_word(value, length=self._max_string_length)

(...)

443 for r in result

444 ]

446 if not include_columns:

File ~/.conda/envs/guardrails1/lib/python3.9/site-packages/langchain_community/utilities/sql_database.py:413, in SQLDatabase._execute(self, command, fetch)

410 elif self.dialect == "postgresql": # postgresql

411 connection.exec_driver_sql("SET search_path TO %s", (self._schema,))

--> 413 cursor = connection.execute(text(command))

414 if cursor.returns_rows:

415 if fetch == "all":

File ~/.conda/envs/guardrails1/lib/python3.9/site-packages/sqlalchemy/engine/base.py:1416, in Connection.execute(self, statement, parameters, execution_options)

1414 raise exc.ObjectNotExecutableError(statement) from err

1415 else:

-> 1416 return meth(

1417 self,

1418 distilled_parameters,

1419 execution_options or NO_OPTIONS,

1420 )

File ~/.conda/envs/guardrails1/lib/python3.9/site-packages/sqlalchemy/sql/elements.py:516, in ClauseElement._execute_on_connection(self, connection, distilled_params, execution_options)

514 if TYPE_CHECKING:

515 assert isinstance(self, Executable)

--> 516 return connection._execute_clauseelement(

517 self, distilled_params, execution_options

518 )

519 else:

520 raise exc.ObjectNotExecutableError(self)

File ~/.conda/envs/guardrails1/lib/python3.9/site-packages/sqlalchemy/engine/base.py:1639, in Connection._execute_clauseelement(self, elem, distilled_parameters, execution_options)

1627 compiled_cache: Optional[CompiledCacheType] = execution_options.get(

1628 "compiled_cache", self.engine._compiled_cache

1629 )

1631 compiled_sql, extracted_params, cache_hit = elem._compile_w_cache(

1632 dialect=dialect,

1633 compiled_cache=compiled_cache,

(...)

1637 linting=self.dialect.compiler_linting | compiler.WARN_LINTING,

1638 )

-> 1639 ret = self._execute_context(

1640 dialect,

1641 dialect.execution_ctx_cls._init_compiled,

1642 compiled_sql,

1643 distilled_parameters,

1644 execution_options,

1645 compiled_sql,

1646 distilled_parameters,

1647 elem,

1648 extracted_params,

1649 cache_hit=cache_hit,

1650 )

1651 if has_events:

1652 self.dispatch.after_execute(

1653 self,

1654 elem,

(...)

1658 ret,

1659 )

File ~/.conda/envs/guardrails1/lib/python3.9/site-packages/sqlalchemy/engine/base.py:1848, in Connection._execute_context(self, dialect, constructor, statement, parameters, execution_options, *args, **kw)

1843 return self._exec_insertmany_context(

1844 dialect,

1845 context,

1846 )

1847 else:

-> 1848 return self._exec_single_context(

1849 dialect, context, statement, parameters

1850 )

File ~/.conda/envs/guardrails1/lib/python3.9/site-packages/sqlalchemy/engine/base.py:1988, in Connection._exec_single_context(self, dialect, context, statement, parameters)

1985 result = context._setup_result_proxy()

1987 except BaseException as e:

-> 1988 self._handle_dbapi_exception(

1989 e, str_statement, effective_parameters, cursor, context

1990 )

1992 return result

File ~/.conda/envs/guardrails1/lib/python3.9/site-packages/sqlalchemy/engine/base.py:2346, in Connection._handle_dbapi_exception(self, e, statement, parameters, cursor, context, is_sub_exec)

2344 else:

2345 assert exc_info[1] is not None

-> 2346 raise exc_info[1].with_traceback(exc_info[2])

2347 finally:

2348 del self._reentrant_error

File ~/.conda/envs/guardrails1/lib/python3.9/site-packages/sqlalchemy/engine/base.py:1969, in Connection._exec_single_context(self, dialect, context, statement, parameters)

1967 break

1968 if not evt_handled:

-> 1969 self.dialect.do_execute(

1970 cursor, str_statement, effective_parameters, context

1971 )

1973 if self._has_events or self.engine._has_events:

1974 self.dispatch.after_cursor_execute(

1975 self,

1976 cursor,

(...)

1980 context.executemany,

1981 )

File ~/.conda/envs/guardrails1/lib/python3.9/site-packages/sqlalchemy/engine/default.py:922, in DefaultDialect.do_execute(self, cursor, statement, parameters, context)

921 def do_execute(self, cursor, statement, parameters, context=None):

--> 922 cursor.execute(statement, parameters)

Warning: You can only execute one statement at a time.

```

**Issue:**

The error occurs because, although `llm_inputs` includes the stop sequence `"\nSQLResult:"`, the LLM's response for this user query still ends with it: **SELECT Time FROM men_butterfly_100m WHERE Swimmer = 'Lance Larson';\nSQLResult:**. The `SQLResult:` suffix must be removed from the LLM response before the query is executed on the database.

```

llm_inputs = {
    "input": input_text,
    "top_k": str(self.top_k),
    "dialect": self.database.dialect,
    "table_info": table_info,
    "stop": ["\nSQLResult:"],
}
sql_cmd = self.llm_chain.predict(
    callbacks=_run_manager.get_child(),
    **llm_inputs,
).strip()
# Drop a trailing "SQLResult:" marker that the LLM may echo back
if SQL_RESULT in sql_cmd:
    sql_cmd = sql_cmd.split(SQL_RESULT)[0].strip()
result = self.database.run(sql_cmd)

```
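The suffix-stripping step can be checked in isolation with a small standalone function. This sketch assumes the marker constant is the string `"SQLResult:"`; the helper name is hypothetical.

```python
SQL_RESULT = "SQLResult:"  # assumed value of the marker constant


def strip_sql_result_suffix(llm_output: str, marker: str = SQL_RESULT) -> str:
    """Keep only the SQL before the first marker, trimmed of whitespace."""
    sql_cmd = llm_output.strip()
    if marker in sql_cmd:
        sql_cmd = sql_cmd.split(marker)[0].strip()
    return sql_cmd


raw = "SELECT Time FROM men_butterfly_100m WHERE Swimmer = 'Lance Larson';\nSQLResult:"
print(strip_sql_result_suffix(raw))
# SELECT Time FROM men_butterfly_100m WHERE Swimmer = 'Lance Larson';
```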


---------

Co-authored-by: Bagatur <22008038+baskaryan@users.noreply.github.com>

**Description:**

While not technically incorrect, the TypeVar used for the `@beta` decorator prevented pyright (and thus most VS Code users) from correctly seeing the types of functions and classes decorated with `@beta`.

This is partly due to a small bug in pyright (https://github.com/microsoft/pyright/issues/7448). However, the `Type` option in the TypeVar `C = TypeVar("C", Type, Callable)` does nothing: classes are callable by default, so, as far as I understand, including `Type` does not provide any additional safety. The modified annotation still works correctly for functions, properties, and classes.
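The point that a single `Callable` bound already covers classes can be illustrated with a hypothetical marker decorator. This is a sketch of the typing pattern only; `mark_beta` and the `__is_beta__` attribute are illustrative and not LangChain's `@beta` implementation.

```python
from typing import Any, Callable, TypeVar

# Classes are callable, so this single bound accepts both functions and classes.
T = TypeVar("T", bound=Callable[..., Any])


def mark_beta(obj: T) -> T:
    """Tag obj and return it unchanged, so type checkers keep its exact type."""
    setattr(obj, "__is_beta__", True)  # attribute name is illustrative
    return obj


@mark_beta
def add(a: int, b: int) -> int:
    return a + b


@mark_beta
class Widget:
    def __init__(self, name: str) -> None:
        self.name = name
```

Because the decorator returns `T`, a checker such as pyright should still see `add` as `(a: int, b: int) -> int` and `Widget` as the class itself.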

---------

Co-authored-by: Bagatur <22008038+baskaryan@users.noreply.github.com>

Co-authored-by: Bagatur <baskaryan@gmail.com>

- [x] **PR title**: "experimental: Enhance LLMGraphTransformer with

async processing and improved readability"

- [x] **PR message**:

- **Description:** This pull request refactors the `process_response`

and `convert_to_graph_documents` methods in the LLMGraphTransformer

class to improve code readability and adds async versions of these

methods for concurrent processing.

The main changes include:

- Simplifying list comprehensions and conditional logic in the `process_response` method for better readability.

- Adding async versions, `aprocess_response` and `aconvert_to_graph_documents`, to enable concurrent processing of documents.

These enhancements aim to improve the overall efficiency and

maintainability of the `LLMGraphTransformer` class.

- **Issue:** N/A

- **Dependencies:** No additional dependencies required.

- **Twitter handle:** @jjovalle99

- [x] **Add tests and docs**: N/A (This PR does not introduce a new

integration)

- [x] **Lint and test**: Ran make format, make lint, and make test from

the root of the modified package(s). All tests pass successfully.

Additional notes:

- The changes made in this PR are backwards compatible and do not

introduce any breaking changes.

- The PR touches only the `LLMGraphTransformer` class within the

experimental package.
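Concurrent processing of documents with async methods typically means scheduling one coroutine per document and awaiting them together with `asyncio.gather`. In this sketch `aprocess_document` is a hypothetical stand-in for the per-document LLM call; it only simulates latency and does not reflect `LLMGraphTransformer` internals.

```python
import asyncio
from typing import Dict, List


async def aprocess_document(text: str) -> Dict[str, object]:
    """Hypothetical async stand-in for one LLM extraction call."""
    await asyncio.sleep(0.01)  # simulate network latency
    return {"source": text, "nodes": text.split()}


async def aconvert_documents(texts: List[str]) -> List[Dict[str, object]]:
    # All documents are in flight at once; gather returns results in input order.
    return list(await asyncio.gather(*(aprocess_document(t) for t in texts)))


results = asyncio.run(aconvert_documents(["Alice knows Bob", "Bob likes tea"]))
print([r["nodes"] for r in results])
```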

---------

Co-authored-by: Bagatur <22008038+baskaryan@users.noreply.github.com>

**Description**

Adding different threshold types to the semantic chunker. I’ve had much better and more predictable performance when using standard deviations instead of percentiles.

For all the documents I’ve tried, the distribution of distances looks similar to the above: a positively skewed normal distribution. All the skews I’ve seen are less than 1, which explains why standard deviations perform well, but I’ve included IQR for anyone who wants something more robust.

Also, by using the percentile method in reverse, you can specify the number of clusters and use semantic chunking to get an ‘optimal’ split.
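The three threshold types can be sketched as different ways of turning the list of adjacent-sentence distances into a breakpoint cutoff. The formulas (mean plus a multiple of the standard deviation or of the IQR) and the default amounts below are assumptions for illustration, not necessarily what the chunker uses.

```python
from typing import Optional, Sequence

import numpy as np

# Assumed defaults for each method.
DEFAULT_AMOUNTS = {"percentile": 95.0, "standard_deviation": 3.0, "interquartile": 1.5}


def breakpoint_threshold(
    distances: Sequence[float], method: str = "percentile", amount: Optional[float] = None
) -> float:
    """Distance above which a new chunk starts (hypothetical helper)."""
    d = np.asarray(distances, dtype=float)
    if amount is None:
        amount = DEFAULT_AMOUNTS[method]
    if method == "percentile":
        return float(np.percentile(d, amount))
    if method == "standard_deviation":
        return float(np.mean(d) + amount * np.std(d))
    if method == "interquartile":
        q1, q3 = np.percentile(d, [25, 75])
        return float(np.mean(d) + amount * (q3 - q1))
    raise ValueError(f"unknown method: {method}")


distances = [0.1, 0.2, 0.3, 0.4, 0.5]
for m in DEFAULT_AMOUNTS:
    print(m, round(breakpoint_threshold(distances, m), 4))
```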

---------

Co-authored-by: Harrison Chase <hw.chase.17@gmail.com>

## Amazon Personalize support on Langchain

This PR is a successor to this PR -

https://github.com/langchain-ai/langchain/pull/13216

This PR introduces an integration with [Amazon Personalize](https://aws.amazon.com/personalize/) to help you retrieve recommendations and use them in your natural language applications. This integration provides two new components:

1. An `AmazonPersonalize` client that provides a wrapper around the Amazon Personalize API.

2. An `AmazonPersonalizeChain` that pulls in recommendations using the client and then generates a response in natural language.

We have added this to langchain_experimental since there was feedback

from the previous PR about having this support in experimental rather

than the core or community extensions.

Here is some sample code to explain the usage.

```python

from langchain_experimental.recommenders import AmazonPersonalize

from langchain_experimental.recommenders import AmazonPersonalizeChain

from langchain.llms.bedrock import Bedrock

recommender_arn = "<insert_arn>"

client=AmazonPersonalize(

credentials_profile_name="default",

region_name="us-west-2",

recommender_arn=recommender_arn

)

bedrock_llm = Bedrock(

model_id="anthropic.claude-v2",

region_name="us-west-2"

)

chain = AmazonPersonalizeChain.from_llm(

llm=bedrock_llm,

client=client

)

response = chain({'user_id': '1'})

```

Reviewer: @3coins

Noticed and fixed a few typos in the `SmartLLMChain` default ideation and critique prompts.

---------

Co-authored-by: Harrison Chase <hw.chase.17@gmail.com>

- **Description:**

[AS-IS] When dealing with a YAML file, the extension must be `.yaml`.

[TO-BE] Since operating systems no longer impose extension-length limits, the canonical extension for YAML files is `.yaml`, but `.yml` files must still be handled.

Rejecting `.yml` is like treating a `.jpg` file as an error in a loader that supports JPEG.

- **Issue:** -

- **Dependencies:** No dependencies required for this change.
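Accepting both extensions comes down to comparing the file suffix against a set. A minimal sketch with a hypothetical helper; where the check lives in the actual loader is not shown here.

```python
from pathlib import Path

YAML_SUFFIXES = {".yaml", ".yml"}


def is_yaml_file(path: str) -> bool:
    """True for .yaml and .yml files, ignoring case (hypothetical helper)."""
    return Path(path).suffix.lower() in YAML_SUFFIXES


print(is_yaml_file("prompt.yml"), is_yaml_file("PROMPT.YAML"), is_yaml_file("prompt.json"))
# True True False
```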

As described in issue #17060, the following function fails when the text contains only one sentence. Checking for that case and returning early fixed the issue.

```python

def split_text(self, text: str) -> List[str]:
    """Split text into multiple components."""
    # Splitting the essay on '.', '?', and '!'
    single_sentences_list = re.split(r"(?<=[.?!])\s+", text)
    sentences = [
        {"sentence": x, "index": i} for i, x in enumerate(single_sentences_list)
    ]
    sentences = combine_sentences(sentences)
    embeddings = self.embeddings.embed_documents(
        [x["combined_sentence"] for x in sentences]
    )
    for i, sentence in enumerate(sentences):
        sentence["combined_sentence_embedding"] = embeddings[i]
    distances, sentences = calculate_cosine_distances(sentences)
    start_index = 0

    # Create a list to hold the grouped sentences
    chunks = []
    breakpoint_percentile_threshold = 95
    breakpoint_distance_threshold = np.percentile(
        distances, breakpoint_percentile_threshold
    )  # If you want more chunks, lower the percentile cutoff

    indices_above_thresh = [
        i for i, x in enumerate(distances) if x > breakpoint_distance_threshold
    ]  # The indices of those breakpoints on your list

    # Iterate through the breakpoints to slice the sentences
    for index in indices_above_thresh:
        # The end index is the current breakpoint
        end_index = index

        # Slice the sentence_dicts from the current start index to the end index
        group = sentences[start_index : end_index + 1]
        combined_text = " ".join([d["sentence"] for d in group])
        chunks.append(combined_text)

        # Update the start index for the next group
        start_index = index + 1

    # The last group, if any sentences remain
    if start_index < len(sentences):
        combined_text = " ".join([d["sentence"] for d in sentences[start_index:]])
        chunks.append(combined_text)
    return chunks

```
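The fix can be illustrated with a simplified, standalone version of the chunker that returns early when there is only one sentence, since a single sentence has no adjacent pair to compute a distance for. This sketch omits the sentence-combining buffer and takes a hypothetical `embed` callable in place of `self.embeddings.embed_documents`; it is not the patched method itself.

```python
import re
from typing import Callable, List

import numpy as np


def semantic_split(
    text: str,
    embed: Callable[[List[str]], List[List[float]]],
    percentile: float = 95.0,
) -> List[str]:
    sentences = re.split(r"(?<=[.?!])\s+", text)
    # Guard: one sentence means no distances, so return it as the only chunk.
    if len(sentences) == 1:
        return sentences
    vectors = np.asarray(embed(sentences), dtype=float)
    a, b = vectors[:-1], vectors[1:]
    # Cosine distance between consecutive sentence embeddings (assumes non-zero vectors).
    sims = (a * b).sum(axis=1) / (np.linalg.norm(a, axis=1) * np.linalg.norm(b, axis=1))
    distances = 1.0 - sims
    threshold = np.percentile(distances, percentile)
    chunks, start = [], 0
    for i, dist in enumerate(distances):
        if dist > threshold:
            chunks.append(" ".join(sentences[start : i + 1]))
            start = i + 1
    if start < len(sentences):
        chunks.append(" ".join(sentences[start:]))
    return chunks


def fake_embed(sentences: List[str]) -> List[List[float]]:
    # Toy embeddings: the first two sentences point the same way, the third differs.
    return [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]][: len(sentences)]


print(semantic_split("Only one sentence here.", fake_embed))  # ['Only one sentence here.']
print(semantic_split("A. B. C.", fake_embed))  # ['A. B.', 'C.']
```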

Co-authored-by: Giulio Zani <salamanderxing@Giulios-MBP.homenet.telecomitalia.it>

- **Description:** Presidio-based anonymizers were not working because `_remove_conflicts_and_get_text_manipulation_data` was being called without a conflict resolution strategy. This PR fixes this issue. In addition, it removes some mutable default arguments (an antipattern).

To reproduce the issue, just run the very first cell of this [notebook](https://python.langchain.com/docs/guides/privacy/2/) from LangChain's documentation.
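The mutable-default-argument antipattern, and the usual `None` sentinel fix, look like this. The function names are hypothetical and unrelated to the Presidio anonymizer code; they only show why shared defaults leak state between calls.

```python
from typing import List, Optional


def add_entity_bad(entity: str, seen: List[str] = []) -> List[str]:
    # The default list is created once and reused by every call.
    seen.append(entity)
    return seen


def add_entity_good(entity: str, seen: Optional[List[str]] = None) -> List[str]:
    # A fresh list is created on each call unless the caller passes one in.
    if seen is None:
        seen = []
    seen.append(entity)
    return seen


print(add_entity_bad("alice"))   # ['alice']
print(add_entity_bad("bob"))     # ['alice', 'bob']  <- state leaked from the first call
print(add_entity_good("alice"))  # ['alice']
print(add_entity_good("bob"))    # ['bob']
```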


…tch]: import models from community

ran

```bash

git grep -l 'from langchain\.chat_models' | xargs -L 1 sed -i '' "s/from\ langchain\.chat_models/from\ langchain_community.chat_models/g"

git grep -l 'from langchain\.llms' | xargs -L 1 sed -i '' "s/from\ langchain\.llms/from\ langchain_community.llms/g"

git grep -l 'from langchain\.embeddings' | xargs -L 1 sed -i '' "s/from\ langchain\.embeddings/from\ langchain_community.embeddings/g"

git checkout master libs/langchain/tests/unit_tests/llms

git checkout master libs/langchain/tests/unit_tests/chat_models

git checkout master libs/langchain/tests/unit_tests/embeddings/test_imports.py

make format

cd libs/langchain; make format

cd ../experimental; make format

cd ../core; make format

```

Added missing docstrings. Fixed inconsistencies in docstrings.

**Note** CC @efriis

There were PR errors in `langchain_experimental/prompt_injection_identifier/hugging_face_identifier.py`, but I didn't touch this file in this PR! Could it be a caching problem? I fixed this error.

- **Description:** This is an addition to [my previous PR](https://github.com/langchain-ai/langchain/pull/13930). It improves flexibility by allowing different models to be used, and updates the notebook to use the ONNX runtime for faster inference. Since the last PR, [our model](https://huggingface.co/laiyer/deberta-v3-base-prompt-injection) has been downloaded more than 660k times, and the [public benchmark](https://huggingface.co/spaces/laiyer/prompt-injection-benchmark) shows far fewer false positives than the previous model from deepset. Additionally, with the ONNX runtime it can run 3x faster on the CPU, which might be handy for builders using LangChain.

- **Issue:** N/A

- **Dependencies:** N/A

- **Tag maintainer:** N/A

- **Twitter handle:** `@laiyer_ai`

**Description**

The output key of `SmartLLMChain` was fixed to "resolution". Unfortunately, this prevents using multiple `SmartLLMChain`s in a `SequentialChain` because their output keys collide. This change simply adds an option to customize the output key to allow sequential chaining. The default behavior is unchanged.

Now, it's possible to do the following:

```

from langchain.chat_models import ChatOpenAI

from langchain.prompts import PromptTemplate

from langchain_experimental.smart_llm import SmartLLMChain

from langchain.chains import SequentialChain

joke_prompt = PromptTemplate(

input_variables=["content"],

template="Tell me a joke about {content}.",

)

review_prompt = PromptTemplate(

input_variables=["scale", "joke"],

template="Rate the following joke from 1 to {scale}: {joke}"

)

llm = ChatOpenAI(temperature=0.9, model_name="gpt-4-32k")

joke_chain = SmartLLMChain(llm=llm, prompt=joke_prompt, output_key="joke")

review_chain = SmartLLMChain(llm=llm, prompt=review_prompt, output_key="review")

chain = SequentialChain(

chains=[joke_chain, review_chain],

input_variables=["content", "scale"],

output_variables=["review"],

verbose=True

)

response = chain.run({"content": "chickens", "scale": "10"})

print(response)

```

---------

Co-authored-by: Erick Friis <erick@langchain.dev>


- **Description:** Fix #11737 (the `extra_tools` option of `create_pandas_dataframe_agent` is not working).

- **Issue:** #11737

- **Dependencies:** No

- **Tag maintainer:** @baskaryan, @eyurtsev, @hwchase17 I needed this method at work, so I modified it myself and used it. There is a similar issue (#11737) and PR (#13018) by @PyroGenesis, so I combined my code with that original PR.

You may be busy, but it would be a great help to me if you could take a look. Thank you.

- **Twitter handle:** @lunara_x

If you need an .ipynb example for this, please tag me. I will share what I am working on after removing any work-related content.

---------

Co-authored-by: Harrison Chase <hw.chase.17@gmail.com>

See PR title.

From what I can see, `poetry` will auto-include this. Please let me know

if I am missing something here.

---------

Co-authored-by: Bagatur <baskaryan@gmail.com>

CC @baskaryan @hwchase17 @jmorganca

Having a bit of trouble importing `langchain_experimental` from a

notebook, will figure it out tomorrow

~Ah and also is blocked by #13226~

---------

Co-authored-by: Lance Martin <lance@langchain.dev>

Co-authored-by: Bagatur <baskaryan@gmail.com>

It was:

`from langchain.schema.prompts import BasePromptTemplate`

but because of the breaking change in the namespace, it is now

`from langchain.schema.prompt_template import BasePromptTemplate`

This bug prevented building the API Reference for `langchain_experimental`.

- **Description:** Updates to `AnthropicFunctions` to be compatible with

the OpenAI `function_call` functionality.

- **Issue:** The functionality to indicate `auto`, `none` and a forced

function_call was not completely implemented in the existing code.

- **Dependencies:** None

- **Tag maintainer:** @baskaryan , and any of the other maintainers if

needed.

- **Twitter handle:** None

I have specifically tested this functionality via AWS Bedrock with the

Claude-2 and Claude-Instant models.
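The OpenAI-style `function_call` values map to three behaviours: `"auto"` lets the model decide whether to call a function, `"none"` tells it not to call one, and `{"name": ...}` forces a specific function. The sketch below shows one way a wrapper could resolve those values; `resolve_function_call` is a hypothetical helper, not the `AnthropicFunctions` code.

```python
from typing import Any, Dict, List, Optional, Tuple, Union

FunctionCall = Union[str, Dict[str, str], None]


def resolve_function_call(
    function_call: FunctionCall, functions: List[Dict[str, Any]]
) -> Tuple[List[Dict[str, Any]], Optional[str]]:
    """Return (functions offered to the model, name of a forced function or None)."""
    if function_call is None or function_call == "auto":
        return functions, None  # the model decides whether to call a function
    if function_call == "none":
        return [], None  # the model must answer without calling a function
    if isinstance(function_call, dict) and "name" in function_call:
        name = function_call["name"]
        matching = [f for f in functions if f.get("name") == name]
        if not matching:
            raise ValueError(f"function {name!r} not found")
        return matching, name  # force this specific function
    raise ValueError(f"unsupported function_call value: {function_call!r}")
```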

- **Description:** The existing model used for prompt-injection detection is quite outdated, so we fine-tuned and open-sourced a new model based on the same base model, Microsoft's deberta-v3-base: [laiyer/deberta-v3-base-prompt-injection](https://huggingface.co/laiyer/deberta-v3-base-prompt-injection). It detects more up-to-date injections and is less prone to false positives.

- **Dependencies:** No

- **Tag maintainer:** -

- **Twitter handle:** @alex_yaremchuk

---------

Co-authored-by: Bagatur <baskaryan@gmail.com>

- **Description:** The experimental package needs to stay compatible with the old way of importing agents.

For example, if I use `from langchain.agents import create_pandas_dataframe_agent`, running the program prints the following:

```

Traceback (most recent call last):

File "/Users/dongwm/test/main.py", line 1, in <module>

from langchain.agents import create_pandas_dataframe_agent

File "/Users/dongwm/test/venv/lib/python3.11/site-packages/langchain/agents/__init__.py", line 87, in __getattr__

raise ImportError(

ImportError: create_pandas_dataframe_agent has been moved to langchain experimental. See https://github.com/langchain-ai/langchain/discussions/11680 for more information.

Please update your import statement from: `langchain.agents.create_pandas_dataframe_agent` to `langchain_experimental.agents.create_pandas_dataframe_agent`.

```

But when I changed it to `from langchain_experimental.agents import create_pandas_dataframe_agent`, the import failed:

```

Traceback (most recent call last):

File "/Users/dongwm/test/main.py", line 2, in <module>

from langchain_experimental.agents import create_pandas_dataframe_agent

ImportError: cannot import name 'create_pandas_dataframe_agent' from 'langchain_experimental.agents' (/Users/dongwm/test/venv/lib/python3.11/site-packages/langchain_experimental/agents/__init__.py)

```

I had to use `from langchain_experimental.agents.agent_toolkits import create_pandas_dataframe_agent` instead. To solve the problem and keep things compatible, I added an extra import to the `langchain_experimental` package, so `from langchain_experimental.agents import create_pandas_dataframe_agent` now works.

- **Twitter handle:** [lin_bob57617](https://twitter.com/lin_bob57617)

Fix some circular deps:

- move `PromptValue` into a top-level module, because both the prompt templates and the output parsers import it

- move tracer context vars to `tracers.context` and import them in

functions in `callbacks.manager`

- add core import tests
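Why importing inside a function breaks such a cycle can be shown with a self-contained toy. The module names `demo_context` and `demo_manager` are hypothetical stand-ins loosely modeled on `tracers.context` and `callbacks.manager`; they are not LangChain's actual files. The context module imports the manager at module level, and the manager needs a `ContextVar` defined in the context module.

```python
import importlib
import sys
import tempfile
from pathlib import Path

# demo_context imports demo_manager at module level, *before* defining tracing_var.
CONTEXT_SRC = """
from contextvars import ContextVar
from demo_manager import trace_enabled  # noqa: F401

tracing_var = ContextVar("tracing_var", default=False)
"""

# Broken: this module-level import closes the cycle while demo_context is
# only partially initialized, so tracing_var does not exist yet.
MANAGER_TOP_LEVEL_SRC = """
from demo_context import tracing_var

def trace_enabled():
    return tracing_var.get()
"""

# Fixed: the import runs only when the function is called, after both
# modules have finished loading.
MANAGER_DEFERRED_SRC = """
def trace_enabled():
    from demo_context import tracing_var
    return tracing_var.get()
"""


def run(manager_src: str) -> bool:
    """Write both modules to a temp dir, import demo_context, call trace_enabled()."""
    with tempfile.TemporaryDirectory() as tmp:
        Path(tmp, "demo_context.py").write_text(CONTEXT_SRC)
        Path(tmp, "demo_manager.py").write_text(manager_src)
        sys.path.insert(0, tmp)
        for name in ("demo_context", "demo_manager"):
            sys.modules.pop(name, None)
        importlib.invalidate_caches()
        try:
            importlib.import_module("demo_context")
            return sys.modules["demo_manager"].trace_enabled()
        finally:
            sys.path.remove(tmp)


print(run(MANAGER_DEFERRED_SRC))  # False
try:
    run(MANAGER_TOP_LEVEL_SRC)
except ImportError as exc:
    print("circular import:", exc)
```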

## Update 2023-09-08

This PR now supports additional models besides Llama-2 chat models.

See [this comment](#issuecomment-1668988543) for further details. The

title of this PR has been updated accordingly.

## Original PR description

This PR adds a generic `Llama2Chat` model, a wrapper for LLMs able to

serve Llama-2 chat models (like `LlamaCPP`,

`HuggingFaceTextGenInference`, ...). It implements `BaseChatModel`,

converts a list of chat messages into the [required Llama-2 chat prompt

format](https://huggingface.co/blog/llama2#how-to-prompt-llama-2) and

forwards the formatted prompt as `str` to the wrapped `LLM`. Usage

example:

```python
from langchain.chains import LLMChain
from langchain.llms import HuggingFaceTextGenInference
from langchain.memory import ConversationBufferMemory
from langchain.prompts.chat import (
    ChatPromptTemplate,
    HumanMessagePromptTemplate,
    MessagesPlaceholder,
)
from langchain.schema import SystemMessage
from langchain_experimental.chat_models import Llama2Chat

# uses a locally hosted Llama2 chat model
llm = HuggingFaceTextGenInference(
    inference_server_url="http://127.0.0.1:8080/",
    max_new_tokens=512,
    top_k=50,
    temperature=0.1,
    repetition_penalty=1.03,
)

# Wrap llm to support Llama2 chat prompt format.
# Resulting model is a chat model
model = Llama2Chat(llm=llm)

messages = [
    SystemMessage(content="You are a helpful assistant."),
    MessagesPlaceholder(variable_name="chat_history"),
    HumanMessagePromptTemplate.from_template("{text}"),
]

prompt = ChatPromptTemplate.from_messages(messages)

memory = ConversationBufferMemory(memory_key="chat_history", return_messages=True)
chain = LLMChain(llm=model, prompt=prompt, memory=memory)

# use chat model in a conversation
# ...
```
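The message-to-prompt conversion described above targets the template from the linked Hugging Face blog post. The following minimal standalone sketch performs that conversion over plain `(role, content)` tuples; `format_llama2_prompt` is a hypothetical helper, not `Llama2Chat`'s actual implementation, and it assumes an optional system message followed by strictly alternating user/assistant turns.

```python
from typing import List, Tuple

B_INST, E_INST = "[INST]", "[/INST]"
B_SYS, E_SYS = "<<SYS>>\n", "\n<</SYS>>\n\n"
BOS, EOS = "<s>", "</s>"


def format_llama2_prompt(messages: List[Tuple[str, str]]) -> str:
    """Convert (role, content) pairs into a Llama-2 chat prompt string."""
    system = ""
    if messages and messages[0][0] == "system":
        # The system prompt is folded into the first [INST] block.
        system = B_SYS + messages[0][1] + E_SYS
        messages = messages[1:]

    prompt = ""
    for i in range(0, len(messages), 2):
        role, user_text = messages[i]
        if role != "user":
            raise ValueError(f"expected a user message at position {i}, got {role!r}")
        prefix = system if i == 0 else ""
        prompt += f"{BOS}{B_INST} {prefix}{user_text} {E_INST}"
        if i + 1 < len(messages):
            role, answer = messages[i + 1]
            if role != "assistant":
                raise ValueError(f"expected an assistant message at position {i + 1}, got {role!r}")
            # Completed turns are closed with the end-of-sequence token.
            prompt += f" {answer} {EOS}"
    return prompt


print(format_llama2_prompt([
    ("system", "You are a helpful assistant."),
    ("user", "Hi"),
    ("assistant", "Hello!"),
    ("user", "How are you?"),
]))
```

The final user turn is left open (no answer, no `</s>`) so the wrapped LLM generates the next assistant reply.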

Also part of this PR are tests and a demo notebook.

- Tag maintainer: @hwchase17

- Twitter handle: `@mrt1nz`

---------

Co-authored-by: Erick Friis <erick@langchain.dev>

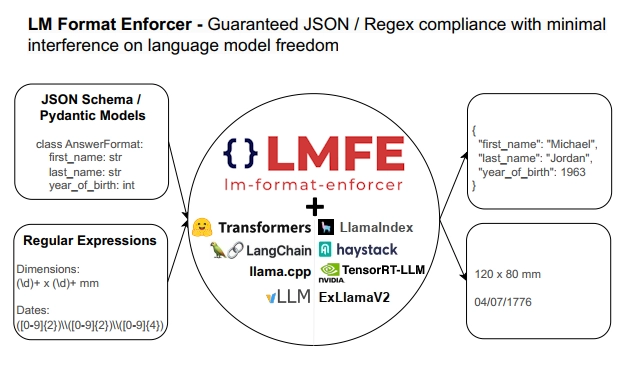

## Description

This PR adds support for

[lm-format-enforcer](https://github.com/noamgat/lm-format-enforcer) to

LangChain.

The library is similar to jsonformer and RELLM, which LangChain already supports, but has several advantages:

- Batching and beam search support

- More complete JSON Schema support

- LLM has control over whitespace, improving quality

- Better runtime performance due to only calling the LLM's generate()

function once per generate() call.
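The general idea behind format enforcement is to restrict which tokens the model may emit at each step so that the output stays valid. It can be illustrated with a toy greedy decoder over a fixed list of allowed outputs and a fake scoring function. This is a simplified sketch for intuition only. It is not lm-format-enforcer's API or algorithm, which works from JSON Schema or regex constraints and real tokenizers, and `allowed_tokens` / `constrained_greedy_decode` are hypothetical names.

```python
from typing import Callable, Dict, List


def allowed_tokens(prefix: str, vocab: List[str], valid_outputs: List[str]) -> List[str]:
    """Tokens that keep `prefix + token` a prefix of at least one valid output."""
    return [t for t in vocab if any(v.startswith(prefix + t) for v in valid_outputs)]


def constrained_greedy_decode(
    score: Callable[[str, str], float],
    vocab: List[str],
    valid_outputs: List[str],
    max_steps: int = 20,
) -> str:
    """Greedy decoding where disallowed tokens are masked out before the argmax."""
    out = ""
    for _ in range(max_steps):
        if out in valid_outputs:
            # Toy stopping rule: stop as soon as a complete valid output exists.
            return out
        candidates = allowed_tokens(out, vocab, valid_outputs)
        if not candidates:
            raise RuntimeError(f"no valid continuation for {out!r}")
        out += max(candidates, key=lambda t: score(out, t))
    raise RuntimeError("max_steps reached before a valid output was produced")


# A fake "model" that, unconstrained, would always prefer "maybe".
preferences: Dict[str, float] = {"maybe": 3.0, "hello": 2.0, "yes": 1.0, '"': 0.5, "no": 0.0}
vocab = list(preferences)
result = constrained_greedy_decode(lambda prefix, tok: preferences[tok], vocab, ['"yes"', '"no"'])
print(result)  # "yes"
```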

The integration is loosely based on the jsonformer integration in terms

of project structure.

## Dependencies

No compile-time dependency was added, but if `lm-format-enforcer` is not installed, a runtime error is raised when the integration is used.

## Tests

Because the integration modifies internal parameters of the underlying Hugging Face transformers LLM, it cannot be tested without loading a real LM, which requires internet access. So, as with the jsonformer and RELLM integrations, testing is done via the notebook.

## Twitter Handle

[@noamgat](https://twitter.com/noamgat)

Looking forward to hearing feedback!

---------

Co-authored-by: Bagatur <baskaryan@gmail.com>

Best to review one commit at a time, since two of the commits are 100%

autogenerated changes from running `ruff format`:

- Install and use `ruff format` instead of black for code formatting.

- Output of `ruff format .` in the `langchain` package.

- Use `ruff format` in experimental package.

- Format changes in experimental package by `ruff format`.

- Manual formatting fixes to make `ruff .` pass.

This PR replaces the previous `Intent` check with the new `Prompt Safety` check. The logic and steps for enabling chain moderation via the Amazon Comprehend service, which lets you detect and redact PII, toxic content, and prompt-safety issues in the LLM prompt or answer, remain unchanged.

This change updates the code and configuration types for `Prompt Safety`.

### Usage sample

```python
import boto3
from langchain.chains import LLMChain
from langchain.llms.fake import FakeListLLM
from langchain.prompts import PromptTemplate
from langchain_experimental.comprehend_moderation import (
    AmazonComprehendModerationChain,
    BaseModerationConfig,
    ModerationPiiConfig,
    ModerationPromptSafetyConfig,
    ModerationToxicityConfig,
)

# Boto3 client for Amazon Comprehend (uses your configured AWS credentials and region)
comprehend_client = boto3.client("comprehend")

# Redact SSNs, masking them with "X"
pii_config = ModerationPiiConfig(labels=["SSN"], redact=True, mask_character="X")

toxicity_config = ModerationToxicityConfig(threshold=0.5)

prompt_safety_config = ModerationPromptSafetyConfig(threshold=0.5)

moderation_config = BaseModerationConfig(
    filters=[pii_config, toxicity_config, prompt_safety_config]
)

comp_moderation_with_config = AmazonComprehendModerationChain(
    moderation_config=moderation_config,  # specify the configuration
    client=comprehend_client,  # optionally pass the Boto3 client
    verbose=True,
)

template = """Question: {question}

Answer:"""

prompt = PromptTemplate(template=template, input_variables=["question"])

responses = [
    "Final Answer: A credit card number looks like 1289-2321-1123-2387. A fake SSN number looks like 323-22-9980. John Doe's phone number is (999)253-9876.",
    "Final Answer: This is a really shitty way of constructing a birdhouse. This is fucking insane to think that any birds would actually create their motherfucking nests here.",
]
llm = FakeListLLM(responses=responses)

llm_chain = LLMChain(prompt=prompt, llm=llm)

# Moderate the prompt, run the LLM, then moderate the answer
chain = (
    prompt
    | comp_moderation_with_config
    | {llm_chain.input_keys[0]: lambda x: x["output"]}
    | llm_chain
    | {"input": lambda x: x["text"]}
    | comp_moderation_with_config
)

try:
    response = chain.invoke(
        {"question": "A sample SSN number looks like this 123-456-7890. Can you give me some more samples?"}
    )
except Exception as e:
    print(str(e))
else:
    print(response["output"])
```

### Output

```
> Entering new AmazonComprehendModerationChain chain...
Running AmazonComprehendModerationChain...
Running pii Validation...
Running toxicity Validation...
Running prompt safety Validation...

> Finished chain.

> Entering new AmazonComprehendModerationChain chain...
Running AmazonComprehendModerationChain...
Running pii Validation...
Running toxicity Validation...
Running prompt safety Validation...

> Finished chain.
Final Answer: A credit card number looks like 1289-2321-1123-2387. A fake SSN number looks like XXXXXXXXXXXX John Doe's phone number is (999)253-9876.
```

---------

Co-authored-by: Jha <nikjha@amazon.com>

Co-authored-by: Anjan Biswas <anjanavb@amazon.com>

Co-authored-by: Anjan Biswas <84933469+anjanvb@users.noreply.github.com>

Type hinting `*args` as `List[Any]` means that each positional argument should be a list. Type hinting `**kwargs` as `Dict[str, Any]` means that the value of each keyword argument should be a `Dict[str, Any]`.

This is almost never what we actually want, and it doesn't seem to be what we want in any of the cases I'm replacing here.
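A short illustration of the difference: the annotation on `*args` / `**kwargs` describes each individual argument, not the collected container. The function names below are illustrative only. A static type checker such as mypy would reject `too_narrow(1, flag=True)`, although Python itself does not enforce annotations at runtime.

```python
from typing import Any, Dict, List, Tuple


def too_narrow(*args: List[Any], **kwargs: Dict[str, Any]) -> None:
    # A type checker reads this as: EVERY positional argument must be a list,
    # and EVERY keyword argument's value must be a dict with str keys.
    pass


def collect(*args: Any, **kwargs: Any) -> Tuple[Tuple[Any, ...], Dict[str, Any]]:
    # Annotating with the per-argument type gives the intended containers:
    # args is Tuple[Any, ...] and kwargs is Dict[str, Any].
    return args, kwargs


args, kwargs = collect(1, "two", flag=True)
print(type(args).__name__, type(kwargs).__name__)  # tuple dict
```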

Minor lint dependency version upgrade to pick up latest functionality.

Ruff's new v0.1 version comes with lots of nice features, like

fix-safety guarantees and a preview mode for not-yet-stable features:

https://astral.sh/blog/ruff-v0.1.0

- **Description:** fixed a bug in PAL chain's reporting of Python code validation errors. When `node.func` has no `id` attribute (for example, when it is an `ast.Attribute` rather than an `ast.Name`), the original code still tried to print `node.func.id` while raising the `ValueError`.

- **Issue:** n/a,

- **Dependencies:** no dependencies,

- **Tag maintainer:** @hazzel-cn, @eyurtsev

- **Twitter handle:** @lazyswamp
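The underlying pitfall is that an `ast.Call`'s `func` is not always an `ast.Name`. The sketch below shows one way to report callee names without assuming `.id`; `describe_call_target` and `find_disallowed_calls` are hypothetical helpers, not PAL chain's actual validator, whose list of disallowed names and error handling are not shown here. It requires Python 3.9+ for `ast.unparse`.

```python
import ast
from typing import List, Set


def describe_call_target(func: ast.expr) -> str:
    """Return a printable name for the callee of an ast.Call without assuming `.id`."""
    if isinstance(func, ast.Name):
        return func.id  # plain call: foo(...)
    if isinstance(func, ast.Attribute):
        return func.attr  # attribute call: obj.foo(...) has no `.id`
    # Anything else (subscripts, calls on call results, lambdas): use the source text.
    return ast.unparse(func)


def find_disallowed_calls(code: str, disallowed: Set[str]) -> List[str]:
    """Collect callee names in `code` that appear in `disallowed`."""
    found: List[str] = []
    for node in ast.walk(ast.parse(code)):
        if isinstance(node, ast.Call):
            name = describe_call_target(node.func)
            if name in disallowed:
                found.append(name)
    return found


print(find_disallowed_calls("import os\nos.system('ls')\nexec('x = 1')", {"system", "exec"}))
# ['system', 'exec']
```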

---------

Co-authored-by: Bagatur <baskaryan@gmail.com>

Use `.copy()` to fix a bug where the first `llm_inputs` element in `intermediate_steps` is overwritten by the second one.

***Problem description:***

In [line 127](

c732d8fffd/libs/experimental/langchain_experimental/sql/base.py (L127C17-L127C17)),

the `llm_inputs` for the SQL generation step is appended as the first element of `intermediate_steps`:

```

intermediate_steps.append(llm_inputs) # input: sql generation

```

However, `llm_inputs` is a mutable dict that is later updated in [line

179](https://github.com/langchain-ai/langchain/blob/master/libs/experimental/langchain_experimental/sql/base.py#L179)

for the final answer step:

```

llm_inputs["input"] = input_text

```

Then, the updated `llm_inputs` is appended as another element of

`intermediate_steps` in [line

180](c732d8fffd/libs/experimental/langchain_experimental/sql/base.py (L180)):

```

intermediate_steps.append(llm_inputs) # input: final answer

```

As a result, the final `intermediate_steps` returned in [line

189](c732d8fffd/libs/experimental/langchain_experimental/sql/base.py (L189C43-L189C43))

actually contains two references to the same `llm_inputs` dict: the inputs for the SQL generation step are mistakenly overwritten by those for the final answer step. Users therefore cannot retrieve the actual `llm_inputs` for the SQL generation step from `intermediate_steps`.

Simply calling `.copy()` when appending `llm_inputs` to

`intermediate_steps` can solve this problem.
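The aliasing bug and the `.copy()` fix can be reproduced in isolation. The function `run_steps` and the prompt strings below are hypothetical stand-ins for the two appends described above, not the actual code in `sql/base.py`.

```python
from typing import Any, Dict, List


def run_steps(copy_inputs: bool) -> List[Dict[str, Any]]:
    """Toy version of the two appends to intermediate_steps (hypothetical values)."""
    intermediate_steps: List[Dict[str, Any]] = []
    llm_inputs: Dict[str, Any] = {"input": "Question + SQLQuery:", "top_k": 5}
    # Step 1: record the inputs used for SQL generation.
    intermediate_steps.append(llm_inputs.copy() if copy_inputs else llm_inputs)
    # Step 2: the same dict is mutated for the final-answer step.
    llm_inputs["input"] = "Question + SQLQuery + SQLResult + Answer:"
    intermediate_steps.append(llm_inputs.copy() if copy_inputs else llm_inputs)
    return intermediate_steps


buggy = run_steps(copy_inputs=False)
fixed = run_steps(copy_inputs=True)
print(buggy[0] is buggy[1])  # True: both entries are the same dict object
print(fixed[0]["input"])     # Question + SQLQuery:
```

A shallow `.copy()` is enough here because the values being overwritten are immutable strings; if the dict held mutable values that were modified in place, `copy.deepcopy` would be needed instead.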