Fix issue #6380

<!-- Remove if not applicable -->

Fixes #6380

#### Before submitting

<!-- If you're adding a new integration, please include:

1. a test for the integration - favor unit tests that do not rely on

network access.

2. an example notebook showing its use

See contribution guidelines for more information on how to write tests,

lint

etc:

https://github.com/hwchase17/langchain/blob/master/.github/CONTRIBUTING.md

-->

#### Who can review?

Tag maintainers/contributors who might be interested:

@hwchase17

<!-- For a quicker response, figure out the right person to tag with @

@hwchase17 - project lead

Tracing / Callbacks

- @agola11

Async

- @agola11

DataLoaders

- @eyurtsev

Models

- @hwchase17

- @agola11

Agents / Tools / Toolkits

- @hwchase17

VectorStores / Retrievers / Memory

- @dev2049

-->

---------

Co-authored-by: HubertKl <HubertKl>

Support baidu list type answer_box

According to [this document](https://serpapi.com/baidu-answer-box), the
`answer_box` attribute returned by the Baidu interface is a list that
contains a single object. The current code does not handle a list, so an
error occurs when it is executed.

When `answer_box` is a list, we now reset `res["answer_box"]` so that the
code can execute successfully.
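The normalization described above could look roughly like the sketch below. The function name `normalize_answer_box` is hypothetical, and the sketch assumes that the single object in the list is the answer box itself; it is not the code from this PR.

```python
from typing import Any, Dict


def normalize_answer_box(res: Dict[str, Any]) -> Dict[str, Any]:
    """Return a copy of `res` whose "answer_box" is a dict, not a one-item list."""
    box = res.get("answer_box")
    if isinstance(box, list):
        # Assumption: the only element of the list is the answer box object.
        res = {**res, "answer_box": box[0] if box else {}}
    return res


baidu_style = {"answer_box": [{"answer": "42"}]}
google_style = {"answer_box": {"answer": "42"}}
print(normalize_answer_box(baidu_style)["answer_box"]["answer"])
```

Returning a copy instead of editing `res` in place keeps the raw API response available to the caller.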

Caching wasn't accounting for which model was used so a result for the

first executed model would return for the same prompt on a different

model.

This was because `Replicate._identifying_params` did not include the

`model` parameter.
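To illustrate why the missing `model` entry matters, here is a minimal sketch of a cache key built from the prompt and the identifying parameters. `ToyLLM` and `cache_key` are hypothetical stand-ins, not the `Replicate` class or LangChain's cache.

```python
import json
from typing import Dict


class ToyLLM:
    """Hypothetical stand-in for an LLM wrapper; not the Replicate class."""

    def __init__(self, model: str, temperature: float = 0.0) -> None:
        self.model = model
        self.temperature = temperature

    @property
    def identifying_params(self) -> Dict[str, object]:
        # Including `model` keeps two models from sharing one cache entry.
        return {"model": self.model, "temperature": self.temperature}


def cache_key(llm: ToyLLM, prompt: str) -> str:
    """Build a cache key from the prompt and the serialized parameters."""
    return prompt + "|" + json.dumps(llm.identifying_params, sort_keys=True)


key_a = cache_key(ToyLLM("model-a"), "Hello")
key_b = cache_key(ToyLLM("model-b"), "Hello")
```

If `model` were left out of `identifying_params`, `key_a` and `key_b` would be identical and the second model would receive the first model's cached answer.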

FYI

- @cbh123

- @hwchase17

- @agola11

# Provide the latest duckduckgo_search API

This commit touches two files and upgrades the `duckduckgo-search` module
to version 3.8.3. In `duckduckgo_search.py`, a `DDGS()` class instance
replaces the previous `ddg()` function, and the time parameter in the
`get_snippets()` and `results()` methods is renamed from `time` to
`timelimit` to accommodate recent changes. In `pyproject.toml`, the
`duckduckgo-search` dependency is upgraded to version 3.8.3.

[duckduckgo_search readme

attention](https://github.com/deedy5/duckduckgo_search): Versions before

v2.9.4 no longer work as of May 12, 2023

## Who can review?

@vowelparrot

---------

Co-authored-by: Harrison Chase <hw.chase.17@gmail.com>

When using OpenAI models such as 'text-davinci-002' or
'text-davinci-003', the agent doesn't work and the error message is 'Only
supported with OpenAI models.' The error message should instead be 'Only
supported with ChatOpenAI models.'

My Twitter handle is @alonsosilva

<!--

Thank you for contributing to LangChain! Your PR will appear in our

release under the title you set. Please make sure it highlights your

valuable contribution.

Replace this with a description of the change, the issue it fixes (if

applicable), and relevant context. List any dependencies required for

this change.

After you're done, someone will review your PR. They may suggest

improvements. If no one reviews your PR within a few days, feel free to

@-mention the same people again, as notifications can get lost.

Finally, we'd love to show appreciation for your contribution - if you'd

like us to shout you out on Twitter, please also include your handle!

-->


Fixes # (issue)

#### Before submitting


#### Who can review?

Tag maintainers/contributors who might be interested:

@hwchase17


Co-authored-by: SILVA Alonso <alonso.silva@nokia-bell-labs.com>

Co-authored-by: Harrison Chase <hw.chase.17@gmail.com>

I apologize for the error: the 'ANTHROPIC_API_URL' environment variable

doesn't take effect if the 'anthropic_api_url' parameter has a default

value.
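The precedence problem can be sketched as follows: if the parameter carries a concrete default, the environment variable is never consulted, so the default has to be applied last. `resolve_api_url` and `DEFAULT_API_URL` are hypothetical names and the URLs are placeholders; this is not the code from the PR.

```python
import os
from typing import Optional

DEFAULT_API_URL = "https://api.example.invalid"  # placeholder, not a real endpoint


def resolve_api_url(api_url: Optional[str] = None) -> str:
    """Explicit argument first, then ANTHROPIC_API_URL, then the default."""
    if api_url is not None:
        return api_url
    # The default is only a fallback, so the environment variable can win.
    return os.environ.get("ANTHROPIC_API_URL", DEFAULT_API_URL)
```

Keeping the parameter default as `None` is what lets the environment variable take effect when the caller passes nothing.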

#### Who can review?

Models

- @hwchase17

- @agola11

1. Introduced new distance strategies support: **DOT_PRODUCT** and

**EUCLIDEAN_DISTANCE** for enhanced flexibility.

2. Implemented a feature to filter results based on metadata fields.

3. Incorporated connection attributes specifying "langchain python sdk"

usage for enhanced traceability and debugging.

4. Expanded the suite of integration tests for improved code

reliability.

5. Updated the existing notebook with the usage example

@dev2049

---------

Co-authored-by: Volodymyr Tkachuk <vtkachuk-ua@singlestore.com>

Co-authored-by: Harrison Chase <hw.chase.17@gmail.com>

Since recent changes, `ChatPromptTemplate` no longer accepts partial
variables. This PR fixes that issue.

Fixes #6431

#### Who can review?

@hwchase17

---------

Co-authored-by: Harrison Chase <hw.chase.17@gmail.com>

Throw `ToolException` when incorrect arguments are passed to tools so
that the agent can course correct.

# Incorrect argument count handling

I was facing an error where the agent passed incorrect arguments to

tools. As per the discussions going around, I started throwing

ToolException to allow the model to course correct.
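A rough sketch of the idea, using a local stand-in for `ToolException` so the example runs without LangChain. The helper names `run_single_input_tool` and `observe` are hypothetical.

```python
from typing import Callable, Tuple


class ToolException(Exception):
    """Local stand-in for LangChain's ToolException."""


def run_single_input_tool(func: Callable[[str], str], args: Tuple[str, ...]) -> str:
    """Call a one-argument tool, raising ToolException on a wrong argument count."""
    if len(args) != 1:
        raise ToolException(f"Expected exactly 1 argument, got {len(args)}: {args}")
    return func(args[0])


def observe(func: Callable[[str], str], args: Tuple[str, ...]) -> str:
    """Turn a ToolException into an observation the agent can react to."""
    try:
        return run_single_input_tool(func, args)
    except ToolException as exc:
        return f"Tool error: {exc}"
```

Returning the error as an observation, instead of letting it crash the run, gives the model a chance to retry with corrected arguments.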

## Before submitting


## Who can review?

Community members can review the PR once tests pass. Tag

maintainers/contributors who might be interested:


---------

Co-authored-by: Harrison Chase <hw.chase.17@gmail.com>


Fixes a link typo from `/-/route` to `/-/routes` and changes the endpoint
format from `f"{self.anyscale_service_url}/{self.anyscale_service_route}"`
to `f"{self.anyscale_service_url}{self.anyscale_service_route}"`.

Also adds documentation about the format of the endpoint.
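The effect of the format change can be sketched as below, assuming the route is supplied with a leading slash. The helper `build_endpoint` and the example host are hypothetical.

```python
def build_endpoint(service_url: str, service_route: str) -> str:
    """Join the service URL and route; the route is expected to start with '/'."""
    return f"{service_url}{service_route}"


base = "https://service.example.invalid"
route = "/-/routes"

old_style = f"{base}/{route}"        # previous format: inserts an extra slash
new_style = build_endpoint(base, route)
```

With a route that already starts with `/`, the previous format yields a doubled slash, which is why documenting the expected endpoint format helps.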

#### Before submitting


#### Who can review?

Tag maintainers/contributors who might be interested:


---------

Co-authored-by: Harrison Chase <hw.chase.17@gmail.com>

Fixed several inconsistencies:

- File names and notebook titles should be similar; otherwise the ToC on
  the [retrievers page](https://python.langchain.com/en/latest/modules/indexes/retrievers.html)
  and the ToC tab on the left differ. For example, `Self-querying with
  Chroma` is currently not sorted alphabetically because its file is named
  `chroma_self_query.ipynb`.
- `Stringing compressors and document transformers...` demoted from `#`
  to `##`. Otherwise, it appears in the ToC.
- Several formatting problems.

#### Who can review?

@hwchase17

@dev2049

Co-authored-by: Harrison Chase <hw.chase.17@gmail.com>


The `CustomOutputParser` needs to throw `OutputParserException` when it

fails to parse the response from the agent, so that the executor can

[catch it and

retry](be9371ca8f/langchain/agents/agent.py (L767))

when `handle_parsing_errors=True`.
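The catch-and-retry behavior can be sketched with a local stand-in exception. `parse_final_answer` and `run_with_retry` are hypothetical helpers, and the retry loop is a simplification rather than the executor's actual logic.

```python
from typing import Callable


class OutputParserException(ValueError):
    """Local stand-in for LangChain's OutputParserException."""


def parse_final_answer(text: str) -> str:
    """Extract the text after 'Final Answer:' or raise a parser exception."""
    marker = "Final Answer:"
    if marker not in text:
        raise OutputParserException(f"Could not parse LLM output: {text!r}")
    return text.split(marker, 1)[1].strip()


def run_with_retry(generate: Callable[[], str], max_attempts: int = 3) -> str:
    """Ask for a new response whenever parsing fails, up to max_attempts."""
    for _ in range(max_attempts):
        try:
            return parse_final_answer(generate())
        except OutputParserException:
            continue
    raise OutputParserException("No parsable output after retries")


replies = iter(["I am not sure.", "Final Answer: 4"])
answer = run_with_retry(lambda: next(replies))
```

Raising a dedicated exception type, instead of a generic `ValueError`, is what lets the caller distinguish a recoverable parse failure from other errors.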


#### Who can review?

Tag maintainers/contributors who might be interested: @hwchase17


#### Description

- Removed two backticks surrounding the phrase "chat messages as"

- This phrase stood out among other formatted words/phrases such as

`prompt`, `role`, `PromptTemplate`, etc., which all seem to have a clear

function.

- `chat messages as`, formatted as such, confused me while reading,

leading me to believe the backticks were misplaced.

#### Who can review?

@hwchase17


Minor new line character in the markdown.

Also, this option is not yet in the latest version of LangChain

(0.0.190) from Conda. Maybe in the next update.

@eyurtsev

@hwchase17

Just so it is consistent with other `VectorStore` classes.

This is a follow-up to #6056, which also discussed the potential of
adding `similarity_search_by_vector_returning_embeddings`; we will
continue that discussion here.

potentially related: #6286

#### Who can review?

Tag maintainers/contributors who might be interested: @rlancemartin


This PR adds an example of doing question answering over documents using

OpenAI Function Agents.

#### Who can review?

@hwchase17

---------

Co-authored-by: Harrison Chase <hw.chase.17@gmail.com>


Fixes: `ChatAnthropic` was mutating the input message list during
formatting, which isn't ideal because it could change the behavior of
other chat models that use the same input.
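A minimal sketch of formatting without mutation, assuming a hypothetical message shape of plain dicts with `role` and `content` keys. It is not the `ChatAnthropic` implementation; it only shows the copy-before-modify pattern.

```python
from typing import Dict, List

Message = Dict[str, str]  # hypothetical shape: {"role": ..., "content": ...}


def format_prompt(messages: List[Message]) -> str:
    """Render messages to a prompt without modifying the caller's list."""
    working = [dict(m) for m in messages]  # copy the list and each message
    if working and working[-1]["role"] != "assistant":
        # Appending to the copy leaves the caller's list untouched.
        working.append({"role": "assistant", "content": ""})
    return "\n\n".join(
        f"{m['role'].title()}: {m['content']}".rstrip() for m in working
    )


history = [{"role": "human", "content": "Hi"}]
prompt = format_prompt(history)
```

Because the trailing assistant turn is appended to the copy, the same `history` list can be passed to another chat model afterwards and still contain exactly one message.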

#### Before submitting


#### Who can review?

Tag maintainers/contributors who might be interested:

Arize released a new Generative LLM model type; this PR adjusts the
callback function to the new logging.

Added Arize imports; please delete them if not necessary.

Specifically, this change makes sure that the prompt and response pairs
from LangChain agents are logged into Arize as a Generative LLM model
instead of our previous categorical model. To do this, the callback
functions collect the necessary data and pass it into Arize using the
Python Pandas SDK.

The Arize library, specifically `pandas.logger`, is an additional
dependency.

Notebook For Test:

https://docs.arize.com/arize/resources/integrations/langchain

Who can review?

Tag maintainers/contributors who might be interested:

@hwchase17 - project lead

Tracing / Callbacks

@agola11

- return raw and full output (but keep run shortcut method functional)

- change output parser to take in generations (good for working with

messages)

- add output parser to base class, always run (default to same as

current)

---------

Co-authored-by: Eugene Yurtsev <eyurtsev@gmail.com>

#### Before submitting

Add memory support for `OpenAIFunctionsAgent` like

`StructuredChatAgent`.

#### Who can review?

@hwchase17

---------

Co-authored-by: Harrison Chase <hw.chase.17@gmail.com>

A must-include for the SiteMap Loader to avoid the SSL verification error.
Setting `verify` to False with
`sitemap_loader.requests_kwargs = {"verify": False}` does not bypass SSL
verification on some websites.

For example, on https://researchadmin.asu.edu/sitemap.xml the setting
above has no effect.

We need this merge to tell the Session to use a connector with a
specific argument about SSL:

    # For SiteMap SSL verification
    if not self.requests_kwargs['verify']:
        connector = aiohttp.TCPConnector(ssl=False)
    else:
        connector = None


Fixes #5483

#### Before submitting


#### Who can review?

Tag maintainers/contributors who might be interested:

@hwchase17

@eyurtsev

---------

Co-authored-by: Harrison Chase <hw.chase.17@gmail.com>

@agola11

Issue

#6193

I added the pricing for the new models.

Also, gpt-3.5-turbo pricing is now split into "input" and "output"
pricing, which is not supported yet.

The `system_message` argument can't be passed: the prompt always shows the
default message "System: You are a helpful AI assistant."

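```
# Import paths assume the LangChain version current at the time of this report.
from langchain.agents import AgentType, initialize_agent
from langchain.schema import SystemMessage

# `tools` and `openai_llm_chat` are defined elsewhere in the reporter's code.
system_message = SystemMessage(
    content="You are an AI that provides information to Human regarding documentation."
)

agent = initialize_agent(
    tools,
    llm=openai_llm_chat,
    agent=AgentType.OPENAI_FUNCTIONS,
    system_message=system_message,
    agent_kwargs={
        "system_message": system_message,
    },
    verbose=False,
)
```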

#### Who can review?

Tag maintainers/contributors who might be interested:


To bypass SSL verification errors during fetching, you can include the
`verify=False` parameter. This documentation note is useful, especially
for beginners in the field of web scraping.


Fixes #6079

#### Who can review?

Tag maintainers/contributors who might be interested:

@hwchase17

@eyurtsev

---------

Co-authored-by: Harrison Chase <hw.chase.17@gmail.com>

To bypass SSL verification errors during web scraping, you can include
the `ssl_verify=False` parameter along with the `headers` parameter. This
combination of arguments proves useful, especially for beginners in the
field of web scraping.


Fixes #1829

#### Before submitting


#### Who can review?

Tag maintainers/contributors who might be interested:

@hwchase17 @eyurtsev


---------

Co-authored-by: Harrison Chase <hw.chase.17@gmail.com>

Hi, I made a small improvement to `BaseOpenAI`.

I added a max_context_size attribute to BaseOpenAI so that we can get

the max context size directly instead of only getting the maximum token

size of the prompt through the max_tokens_for_prompt method.

Who can review?

@hwchase17 @agola11

I followed the [Common
Tasks](c7db9febb0/.github/CONTRIBUTING.md),
and all tests pass.

---------

Co-authored-by: Harrison Chase <hw.chase.17@gmail.com>

LLM configurations can be loaded from a Python dict (or JSON file

deserialized as dict) using the

[load_llm_from_config](8e1a7a8646/langchain/llms/loading.py (L12))

function.

However, the type string in the `type_to_cls_dict` lookup dict differs

from the type string defined in some LLM classes. This means that the

LLM object can be saved, but not loaded again, because the type strings

differ.
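The save/load mismatch can be sketched with a toy registry. `ToyLLM`, `type_to_cls`, and `load_from_config` are hypothetical stand-ins for the real classes and lookup dict; the `_type` key is likewise an assumption made for this illustration.

```python
from typing import Dict, Type


class ToyLLM:
    """Hypothetical LLM class; `llm_type` plays the role of the saved type string."""

    llm_type = "toy_llm"

    def to_config(self) -> Dict[str, str]:
        return {"_type": self.llm_type}


# The registry key must equal the class's own type string, or loading fails.
type_to_cls: Dict[str, Type[ToyLLM]] = {ToyLLM.llm_type: ToyLLM}


def load_from_config(config: Dict[str, str]) -> ToyLLM:
    """Look up the class by its saved type string and instantiate it."""
    config_type = config["_type"]
    if config_type not in type_to_cls:
        raise ValueError(f"Loading {config_type} LLM not supported")
    return type_to_cls[config_type]()


restored = load_from_config(ToyLLM().to_config())
```

Deriving the registry key from the class attribute, instead of retyping the string, removes the chance for the two to drift apart.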


The current version of chat history with DynamoDB doesn't correctly
handle the case where a table has no chat history. This change fixes the
error handling for that case.
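A sketch of the missing-history case, using an in-memory stand-in for the table so it runs without AWS. `FakeTable` and `load_history` are hypothetical, and the `SessionId` and `History` attribute names are assumptions for illustration.

```python
from typing import Any, Dict, List, Optional


class FakeTable:
    """Hypothetical in-memory stand-in for a DynamoDB table resource."""

    def __init__(self, items: Dict[str, Dict[str, Any]]) -> None:
        self._items = items

    def get_item(self, Key: Dict[str, str]) -> Dict[str, Any]:
        # Like DynamoDB, omit the "Item" key when nothing matches.
        item: Optional[Dict[str, Any]] = self._items.get(Key["SessionId"])
        return {"Item": item} if item is not None else {}


def load_history(table: FakeTable, session_id: str) -> List[Dict[str, Any]]:
    """Return the stored messages, or an empty list for an unknown session."""
    response = table.get_item(Key={"SessionId": session_id})
    item = response.get("Item")
    return item["History"] if item else []


table = FakeTable({"s1": {"SessionId": "s1", "History": [{"type": "human"}]}})
```

Using `response.get("Item")` instead of `response["Item"]` avoids a `KeyError` for a session that has never stored a message.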


Fixes https://github.com/hwchase17/langchain/issues/6088

#### Who can review?

Tag maintainers/contributors who might be interested:

@hwchase17


Fixes #6131

Simply passes kwargs forward from similarity_search to helper functions

so that search_kwargs are applied to search as originally intended. See

bug for repro steps.
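The forwarding pattern can be sketched as follows. The helper `_search_with_score`, the toy corpus, and the `prefix` option are all hypothetical; only the idea of passing `**kwargs` through comes from the description above.

```python
from typing import Any, List, Tuple


def _search_with_score(query: str, k: int = 4, **kwargs: Any) -> List[Tuple[str, float]]:
    """Hypothetical helper: filters a toy corpus by an optional `prefix` kwarg."""
    corpus = ["alpha doc", "beta doc", "alpha note"]
    prefix = kwargs.get("prefix", "")
    hits = [doc for doc in corpus if doc.startswith(prefix)]
    return [(doc, 1.0) for doc in hits[:k]]


def similarity_search(query: str, k: int = 4, **kwargs: Any) -> List[str]:
    """Public entry point that forwards extra options to the helper."""
    # Without `**kwargs` here, caller-supplied search options are silently dropped.
    return [doc for doc, _ in _search_with_score(query, k=k, **kwargs)]
```

For example, `similarity_search("q", prefix="alpha")` only returns the two documents that start with `alpha`, which would not happen if the option never reached the helper.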

#### Who can review?

@hwchase17

@dev2049

Twitter: poshporcupine

Very small typo in the Constitutional AI critique default prompt. The

negation "If there is *no* material critique of ..." is used two times,

should be used only on the first one.

Cheers,

Pierre


Fixes https://github.com/hwchase17/langchain/issues/6208


#### Before submitting


#### Who can review?

Tag maintainers/contributors who might be interested:


VectorStores / Retrievers / Memory

- @dev2049

Hot fixes for Deep Lake [would highly appreciate expedited review]

* The deeplake version was hardcoded, and since deeplake was upgraded the
  integration fails with a confusing error
* Fixed an additional integration test related to the embedding function
* Fixed the code understanding links in the docs after the docs upgrade
* Removed the public parameter from the notebook to make sure the code
  understanding notebook works

#### Who can review?

@hwchase17 @dev2049

---------

Co-authored-by: Davit Buniatyan <d@activeloop.ai>

Fixes #5807

#### Who can review?

Tag maintainers/contributors who might be interested: @dev2049


Related to this https://github.com/hwchase17/langchain/issues/6225

Just copied the implementation from the `generate` function to
`agenerate` and tested it.

Didn't run any official tests though.


Fixes #6225

#### Before submitting


#### Who can review?

Tag maintainers/contributors who might be interested:

@hwchase17, @agola11


The LLM integration

[HuggingFaceTextGenInference](https://github.com/hwchase17/langchain/blob/master/langchain/llms/huggingface_text_gen_inference.py)

already has streaming support.

However, when streaming is enabled, it always returns an empty string as

the final output text when the LLM is finished. This is because `text`

is instantiated with an empty string and never updated.

This PR fixes the collection of the final output text by concatenating

new tokens.
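The bug and the fix can be sketched in a few lines. `stream_completion` is a hypothetical function, not the `HuggingFaceTextGenInference` code; it only shows token accumulation alongside a per-token callback.

```python
from typing import Callable, Iterable, List


def stream_completion(tokens: Iterable[str], on_new_token: Callable[[str], None]) -> str:
    """Emit each token to the callback and return the full text."""
    text = ""
    for token in tokens:
        on_new_token(token)
        text += token  # without this line, the function would return ""
    return text


seen: List[str] = []
final_text = stream_completion(["Hel", "lo", "!"], seen.append)
```

The callback still receives every token as it arrives, while the accumulated string becomes the final output once the stream ends.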

Similar to https://github.com/hwchase17/langchain/pull/5818

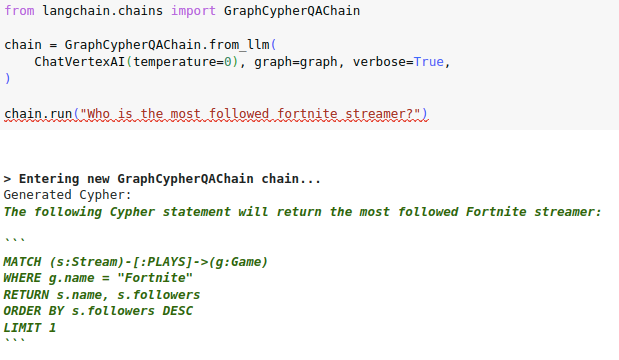

Added the functionality to save/load Graph Cypher QA Chain due to a user

reporting the following error

> raise NotImplementedError("Saving not supported for this chain

type.")\nNotImplementedError: Saving not supported for this chain

type.\n'

In LangChain, all module classes are enumerated in the `__init__.py`
file of the corresponding module. But some classes were missed and were
not included in the module `__init__.py`.

This PR:

- added the missed classes to the module `__init__.py` files
- sorted the `__init__.py:__all__` variable value (a list of the class
  names)
- renamed `langchain.tools.sql_database.tool.QueryCheckerTool` to
  `QuerySQLCheckerTool` because it conflicted with
  `langchain.tools.spark_sql.tool.QueryCheckerTool`
- changes to `pyproject.toml`:
  - added `pgvector` to `pyproject.toml:extended_testing`
  - added `pandas` to
    `pyproject.toml:[tool.poetry.group.test.dependencies]`
- commented out `streamlit` in `callbacks/__init__.py` because
  `streamlit` now requires Python >=3.7, !=3.9.7
- fixed duplicate names in `tools`
- fixed the corresponding unit tests

#### Who can review?

@hwchase17

@dev2049

Fixed a `PermissionError` that occurred when downloading PDF files via
HTTP in `BasePDFLoader` on Windows.

When downloading PDF files via HTTP in `BasePDFLoader`,
`NamedTemporaryFile` is used. On **Windows**, a file created this way
cannot be opened a second time while it is still open ([Python
Doc](https://docs.python.org/3.9/library/tempfile.html#tempfile.NamedTemporaryFile)).

So we create a **temporary directory** with `TemporaryDirectory` and
place the downloaded file there. The temporary directory is deleted in
the destructor.
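A minimal sketch of the temporary-directory approach, with the download replaced by in-memory bytes so it runs offline. `DownloadedFile` is a hypothetical class, not `BasePDFLoader`.

```python
import os
import tempfile


class DownloadedFile:
    """Keeps a downloaded payload in a temporary directory for later reopening."""

    def __init__(self, data: bytes, name: str = "download.pdf") -> None:
        self._temp_dir = tempfile.TemporaryDirectory()
        self.file_path = os.path.join(self._temp_dir.name, name)
        # The file is written and closed here, so it can be reopened anywhere.
        with open(self.file_path, "wb") as f:
            f.write(data)

    def close(self) -> None:
        """Delete the temporary directory and everything in it."""
        self._temp_dir.cleanup()

    def __del__(self) -> None:
        # Fallback cleanup if close() was never called.
        if hasattr(self, "_temp_dir"):
            self._temp_dir.cleanup()


doc = DownloadedFile(b"%PDF-1.4 example bytes")
with open(doc.file_path, "rb") as f:
    header = f.read(4)
doc.close()
```

Since no handle to the file stays open after `__init__`, a parser can reopen `file_path` by name on any platform, and the directory is removed as a unit afterwards.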

Fixes #2698

#### Who can review?

Tag maintainers/contributors who might be interested:

- @eyurtsev

- @hwchase17

This adds the ability to pass an AsyncCallbackManager (handler) to the
reducer chain, which can then stream tokens via the
`async def on_llm_new_token` callback method.

Fixes [#5532](https://github.com/hwchase17/langchain/issues/5532)

@hwchase17 @agola11

The following code snippet shows how this change would be used to
enable `reduce_llm` with streaming support in a `map_reduce` chain.

I have tested this change and it works for the streaming use case of
reducer responses. I am happy to share more information if this solution
makes sense.

```
# Import paths assume the LangChain version current at the time of this PR.
from typing import Any

from langchain.callbacks.base import AsyncCallbackHandler
from langchain.callbacks.manager import AsyncCallbackManager
from langchain.chains import ConversationalRetrievalChain
from langchain.chains.question_answering import load_qa_chain
from langchain.chat_models import ChatOpenAI
from langchain.llms import OpenAI

# `ChatResponse`, `websocket`, `manager`, `vectorstore`, `question_generator`,
# `question`, and `chat_history` come from the surrounding application.


# --- AsyncHandler ---
class StreamingLLMCallbackHandler(AsyncCallbackHandler):
    """Callback handler for streaming LLM responses."""

    def __init__(self, websocket):
        self.websocket = websocket

    # This callback method is executed asynchronously for every new token.
    async def on_llm_new_token(self, token: str, **kwargs: Any) -> None:
        resp = ChatResponse(sender="bot", message=token, type="stream")
        await self.websocket.send_json(resp.dict())


# --- Chain ---
stream_handler = StreamingLLMCallbackHandler(websocket)
stream_manager = AsyncCallbackManager([stream_handler])

streaming_llm = ChatOpenAI(
    streaming=True,
    callback_manager=stream_manager,
    verbose=False,
    temperature=0,
)
main_llm = OpenAI(
    temperature=0,
    verbose=False,
)

doc_chain = load_qa_chain(
    llm=main_llm,
    reduce_llm=streaming_llm,
    chain_type="map_reduce",
    callback_manager=manager,
)
qa_chain = ConversationalRetrievalChain(
    retriever=vectorstore.as_retriever(),
    combine_docs_chain=doc_chain,
    question_generator=question_generator,
    callback_manager=manager,
)

# `acall` triggers `acombine_docs` on `map_reduce`, which calls
# `_aprocess_result`, which in turn calls `self.combine_document_chain.arun`,
# so the async callback is awaited. Run this inside an async function.
result = await qa_chain.acall(
    {"question": question, "chat_history": chat_history}
)
```

Hi again @agola11! 🤗

## What's in this PR?

After playing around with different chains, we noticed that some chains
use different `output_key`s and that we were handling only some of them,
so we've extended the support to any output, whether it's a Python list
or a string.

Kudos to @dvsrepo for spotting this!

---------

Co-authored-by: Daniel Vila Suero <daniel@argilla.io>

<!--

Thank you for contributing to LangChain! Your PR will appear in our

release under the title you set. Please make sure it highlights your

valuable contribution.

Replace this with a description of the change, the issue it fixes (if

applicable), and relevant context. List any dependencies required for

this change.

After you're done, someone will review your PR. They may suggest

improvements. If no one reviews your PR within a few days, feel free to

@-mention the same people again, as notifications can get lost.

Finally, we'd love to show appreciation for your contribution - if you'd

like us to shout you out on Twitter, please also include your handle!

-->


Fixes https://github.com/ShreyaR/guardrails/issues/155

Enables Guardrails re-asking by specifying an LLM API in the output
parser.

Skip building the docs preview for any branch whose name doesn't start
with `__docs__`. This will eventually be updated to look at the directories
touched by the code diff, but this patches it for now.

We propose enhancing the web-based loader's initializer with a `verify`
option. This addresses the SSL verification errors encountered on certain
web pages: by setting the `verify` parameter to `False`, users can skip
certificate verification for those pages.
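A minimal sketch of what such a `verify` switch amounts to, using only the standard library rather than the loader's actual HTTP client. The `build_ssl_context` helper and its parameter are assumptions for illustration and are not part of LangChain.

```python
import ssl


def build_ssl_context(verify: bool = True) -> ssl.SSLContext:
    """Return an SSL context; certificate checks are skipped when verify is False."""
    context = ssl.create_default_context()
    if not verify:
        # Order matters: check_hostname must be disabled before CERT_NONE is set.
        context.check_hostname = False
        context.verify_mode = ssl.CERT_NONE
    return context


strict = build_ssl_context()               # default: certificates are verified
relaxed = build_ssl_context(verify=False)  # opt out for hosts with broken certificates
```

Disabling verification removes protection against man-in-the-middle attacks, so it is probably best kept opt-in and limited to the pages that need it.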


### Fixes #6079

#### Who can review?

@eyurtsev @hwchase17

---------

Co-authored-by: Harrison Chase <hw.chase.17@gmail.com>


[Feature] Users can customize the Anthropic API URL

#### Who can review?

Tag maintainers/contributors who might be interested:

Models

- @hwchase17

- @agola11


Added support for `search_by_vector` to the Qdrant vector store.


### Who can review

VectorStores / Retrievers / Memory

- @dev2049


@eyurtsev

The existing GoogleDrive implementation always needs a service account

to be available at the credentials location. When running on GCP

services such as Cloud Run, a service account already exists in the

metadata of the service, so no physical key is necessary. This change

adds a check to see if it is running in such an environment, and uses

that authentication instead.

---------

Co-authored-by: Harrison Chase <hw.chase.17@gmail.com>

Add oobabooga/text-generation-webui support as an LLM. It currently
supports text-generation-webui's non-streaming API interface.
This allows users who already have text-gen running to use the same models
with LangChain.

#### Before submitting

Simple usage, similar to other supported LLMs:

```python
from langchain.llms import TextGen

llm = TextGen(model_url="http://localhost:5000")
```

#### Who can review?

@hwchase17 - project lead

---------

Co-authored-by: Hien Ngo <Hien.Ngo@adia.ae>

Hi there:

My earlier implementation of the AnalyticDB VectorStore used two tables to
store documents. Using a single table appears to be a better approach, so this
commit improves the AnalyticDB VectorStore implementation without
affecting user-facing behavior:

1. Streamline the `post_init` behavior by creating a single table with vector indexing.
2. Update the `add_texts` API for document insertion.
3. Optimize `similarity_search_with_score_by_vector` to retrieve results directly from the table.
4. Implement `_similarity_search_with_relevance_scores`.
5. Add an `embedding_dimension` parameter to support embedding functions with different dimensions.

Users can continue using the API as before.

The existing test cases are sufficient to cover this commit.


Fixes #6039


#### Who can review?

Tag maintainers/contributors who might be interested:

@hwchase17 @agola11


## DocArray as a Retriever

[DocArray](https://github.com/docarray/docarray) is an open-source tool

for managing your multi-modal data. It offers flexibility to store and

search through your data using various document index backends. This PR

introduces `DocArrayRetriever` - which works with any available backend

and serves as a retriever for Langchain apps.

Also, I added 2 notebooks:

- DocArray Backends - intro to all 5 currently supported backends, how to initialize, index, and use them as a retriever
- DocArray Usage - showcasing what additional search parameters you can pass to create versatile retrievers

Example:

```python

from docarray.index import InMemoryExactNNIndex
from docarray import BaseDoc, DocList
from docarray.typing import NdArray
from langchain.embeddings.openai import OpenAIEmbeddings
from langchain.retrievers import DocArrayRetriever


# define document schema
class MyDoc(BaseDoc):
    description: str
    description_embedding: NdArray[1536]


embeddings = OpenAIEmbeddings()

# create documents
descriptions = ["description 1", "description 2"]
desc_embeddings = embeddings.embed_documents(texts=descriptions)
docs = DocList[MyDoc](
    [
        MyDoc(description=desc, description_embedding=embedding)
        for desc, embedding in zip(descriptions, desc_embeddings)
    ]
)

# initialize document index with data
db = InMemoryExactNNIndex[MyDoc](docs)

# create a retriever
retriever = DocArrayRetriever(
    index=db,
    embeddings=embeddings,
    search_field="description_embedding",
    content_field="description",
)

# find the relevant document
doc = retriever.get_relevant_documents("action movies")
print(doc)

```

#### Who can review?

@dev2049

---------

Signed-off-by: jupyterjazz <saba.sturua@jina.ai>


Fixes invalid links to prompt templates and example selectors on the
[Prompts](https://python.langchain.com/docs/modules/model_io/prompts/)
page.

#### Before submitting

Just a small note: before opening this PR, I tried to run `make docs_clean`
and the other related commands described
[here](https://github.com/hwchase17/langchain/blob/master/.github/CONTRIBUTING.md#build-documentation-locally),
but they give me an error:

```bash

langchain % make docs_clean

Traceback (most recent call last):

File "/Users/masafumi/Downloads/langchain/.venv/bin/make", line 5, in <module>

from scripts.proto import main

ModuleNotFoundError: No module named 'scripts'

make: *** [docs_clean] Error 1

# Poetry (version 1.5.1)

# Python 3.9.13

```

I couldn't figure out how to fix this, so I didn't run those commands,
but the links should work.

#### Who can review?

Tag maintainers/contributors who might be interested:

@hwchase17

Similar issue #6323

Co-authored-by: masafumimori <m.masafumimori@outlook.com>

# Handle Managed Motorhead Data Key

Managed Motorhead returns a payload with a `data` key. We need to
handle this to properly access messages from the server.
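As a rough sketch of the idea, the wrapper can be peeled off before the messages are read. The `unwrap_payload` helper and both payload shapes below are assumptions for illustration; the actual Motorhead responses may carry more fields.

```python
from typing import Any, Dict


def unwrap_payload(response: Dict[str, Any]) -> Dict[str, Any]:
    """Return the inner payload when the server wraps it in a `data` key."""
    return response.get("data", response)


# Hypothetical responses: the managed service wraps, a self-hosted server does not.
managed = {"data": {"messages": [{"role": "Human", "content": "hi"}]}}
self_hosted = {"messages": [{"role": "Human", "content": "hi"}]}

messages = unwrap_payload(managed)["messages"]
```

Falling back to the response itself keeps servers that do not wrap their payload working unchanged.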

Just adds some comments and docstring improvements.

There was some behaviour that was quite unclear to me at first like:

- "when do things get updated?"

- "why are there only entity names and no summaries?"

- "why do the entity names disappear?"

These points should now be much more obvious to readers.

I am lukestanley on Twitter.

1. Changed the implementation of the `add_texts` interface for the AwaDB vector store to improve performance
2. Upgraded AwaDB from 0.3.2 to 0.3.3

---------

Co-authored-by: vincent <awadb.vincent@gmail.com>

Fixes https://github.com/hwchase17/langchain/issues/6172

As described in https://github.com/hwchase17/langchain/issues/6172, I'd

love to help update the dev container in this project.

**Summary of changes:**

- Dev container now builds (the current container in this repo won't build for me)
- Dockerfile updates
  - Update image to our [currently-maintained Python image](https://github.com/devcontainers/images/tree/main/src/python/.devcontainer) (`mcr.microsoft.com/devcontainers/python`) rather than the deprecated image from vscode-dev-containers
  - Move Dockerfile to root of repo - in order for `COPY` to work properly, it needs the files (in this case, `pyproject.toml` and `poetry.toml`) in the same directory
- devcontainer.json updates
  - Removed `customizations` and `remoteUser` since they should be covered by the updated image in the Dockerfile
  - Update comments
- Update docker-compose.yaml to properly point to updated Dockerfile
- Add a .gitattributes to avoid line ending conversions, which can result in hundreds of pending changes ([info](https://code.visualstudio.com/docs/devcontainers/tips-and-tricks#_resolving-git-line-ending-issues-in-containers-resulting-in-many-modified-files))
- Add a README in the .devcontainer folder and info on the dev container in the contributing.md

**Outstanding questions:**

- Is it expected for `poetry install` to take some time? It takes about

30 minutes for this dev container to finish building in a Codespace, but

a user should only have to experience this once. Through some online

investigation, this doesn't seem unusual

- Versions of poetry newer than 1.3.2 failed every time - based on some

of the guidance in contributing.md and other online resources, it seemed

changing poetry versions might be a good solution. 1.3.2 is from Jan

2023

---------

Co-authored-by: bamurtaugh <brmurtau@microsoft.com>

Co-authored-by: Samruddhi Khandale <samruddhikhandale@github.com>

This PR refactors the `ArxivAPIWrapper` class, making the
`doc_content_chars_max` parameter optional. Additionally, tests have
been added to cover the behavior of the `doc_content_chars_max`
parameter.
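A minimal sketch of what an optional character limit could look like. The `truncate` helper and its default value are assumptions for illustration, not the wrapper's actual code.

```python
from typing import Optional


def truncate(text: str, doc_content_chars_max: Optional[int] = 4000) -> str:
    """Cut text to at most doc_content_chars_max characters; None disables the cut."""
    if doc_content_chars_max is None:
        return text
    return text[:doc_content_chars_max]


short = truncate("abcdef", doc_content_chars_max=3)
full = truncate("abcdef", doc_content_chars_max=None)
```

With `None`, the full document content is passed through, which is the case the new optional type makes expressible.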

Fixes #6027

There will likely be another change or two coming over the next couple of
weeks as we stabilize the API, but I'm putting this one in now. It just
makes the integration a bit more flexible about the response output
format.

```

(langchain) danielking@MML-1B940F4333E2 langchain % pytest tests/integration_tests/llms/test_mosaicml.py tests/integration_tests/embeddings/test_mosaicml.py

=================================================================================== test session starts ===================================================================================

platform darwin -- Python 3.10.11, pytest-7.3.1, pluggy-1.0.0

rootdir: /Users/danielking/github/langchain

configfile: pyproject.toml

plugins: asyncio-0.20.3, mock-3.10.0, dotenv-0.5.2, cov-4.0.0, anyio-3.6.2

asyncio: mode=strict

collected 12 items

tests/integration_tests/llms/test_mosaicml.py ...... [ 50%]

tests/integration_tests/embeddings/test_mosaicml.py ...... [100%]

=================================================================================== slowest 5 durations ===================================================================================

4.76s call tests/integration_tests/llms/test_mosaicml.py::test_retry_logic

4.74s call tests/integration_tests/llms/test_mosaicml.py::test_mosaicml_llm_call

4.13s call tests/integration_tests/llms/test_mosaicml.py::test_instruct_prompt

0.91s call tests/integration_tests/llms/test_mosaicml.py::test_short_retry_does_not_loop

0.66s call tests/integration_tests/llms/test_mosaicml.py::test_mosaicml_extra_kwargs

=================================================================================== 12 passed in 19.70s ===================================================================================

```

#### Who can review?

@hwchase17

@dev2049

The current implementation puts the document itself in the metadata, but each
document returned by the ChatGPT plugin retriever already has a `metadata`
field, so it's better to use that instead.

The original code throws the following exception when used with
`RetrievalQAWithSourcesChain`, because it cannot find the expected
`metadata` field:

```python

Exception has occurred: ValueError (note: full exception trace is shown but execution is paused at: _run_module_as_main)

Document prompt requires documents to have metadata variables: ['source']. Received document with missing metadata: ['source'].

File "/home/wangjie/anaconda3/envs/chatglm/lib/python3.10/site-packages/langchain/chains/combine_documents/base.py", line 27, in format_document

raise ValueError(

File "/home/wangjie/anaconda3/envs/chatglm/lib/python3.10/site-packages/langchain/chains/combine_documents/stuff.py", line 65, in <listcomp>

doc_strings = [format_document(doc, self.document_prompt) for doc in docs]

File "/home/wangjie/anaconda3/envs/chatglm/lib/python3.10/site-packages/langchain/chains/combine_documents/stuff.py", line 65, in _get_inputs

doc_strings = [format_document(doc, self.document_prompt) for doc in docs]

File "/home/wangjie/anaconda3/envs/chatglm/lib/python3.10/site-packages/langchain/chains/combine_documents/stuff.py", line 85, in combine_docs

inputs = self._get_inputs(docs, **kwargs)

File "/home/wangjie/anaconda3/envs/chatglm/lib/python3.10/site-packages/langchain/chains/combine_documents/base.py", line 84, in _call

output, extra_return_dict = self.combine_docs(

File "/home/wangjie/anaconda3/envs/chatglm/lib/python3.10/site-packages/langchain/chains/base.py", line 140, in __call__

raise e

```

Additionally, the `metadata` field in the `chatgpt plugin retriever`
has these fields by default:

```json

{

"source": "file", //email, file or chat

"source_id": "filename.docx", // the filename

"url": "",

...

}

```

So we should map `source_id` to `source` in the LangChain metadata.

```python

metadata = d.pop("metadata", d)
if metadata.get("source_id"):
    metadata["source"] = metadata.pop("source_id")

```
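For illustration, here is the same mapping as a self-contained sketch. The `split_result` helper and the `text` key are assumptions made for this example; only the `metadata`, `source` and `source_id` names come from the description above.

```python
from typing import Any, Dict, Tuple


def split_result(d: Dict[str, Any]) -> Tuple[str, Dict[str, Any]]:
    """Return (text, metadata) for one retriever result, exposing `source_id` as `source`."""
    d = dict(d)  # work on a copy so the caller's dict is untouched
    content = d.pop("text", "")  # hypothetical key holding the document text
    metadata = dict(d.pop("metadata", d))
    if metadata.get("source_id"):
        metadata["source"] = metadata.pop("source_id")
    return content, metadata


result = {
    "text": "hello",
    "metadata": {"source": "file", "source_id": "filename.docx", "url": ""},
}
content, metadata = split_result(result)
```

After the call, `metadata["source"]` holds the filename, which is the value a sources chain would want to cite.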

#### Who can review?

@dev2049


---------

Co-authored-by: wangjie <wangjie@htffund.com>

**Short Description**

Added a new argument to the `AutoGPT` class that allows persisting the chat
history to a file.

**Changes**

1. Removed the `self.full_message_history: List[BaseMessage] = []`

2. Replaced it with `chat_history_memory`, which can take any subclass
of `BaseChatMessageHistory`

---------

Co-authored-by: Harrison Chase <hw.chase.17@gmail.com>

Adding a new loader for [acreom](https://acreom.com) vaults. It's based on
the Obsidian loader, with some additional text processing for
acreom-specific markdown elements.

@eyurtsev please take a look!

---------

Co-authored-by: rlm <pexpresss31@gmail.com>

Trying to call `ChatOpenAI.get_num_tokens_from_messages` returns the

following error for the newly announced models `gpt-3.5-turbo-0613` and

`gpt-4-0613`:

```

NotImplementedError: get_num_tokens_from_messages() is not presently implemented for model gpt-3.5-turbo-0613.See https://github.com/openai/openai-python/blob/main/chatml.md for information on how messages are converted to tokens.

```

This adds support for counting tokens for those models, by counting

tokens the same way they're counted for the previous versions of

`gpt-3.5-turbo` and `gpt-4`.
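One way to express "count the same way as the previous versions" is to resolve a dated snapshot name to its model family before choosing the counting rules. The sketch below is only an assumption about how that could look; the `resolve_counting_model` helper and the prefix matching are hypothetical and not taken from the PR.

```python
def resolve_counting_model(model: str) -> str:
    """Map a dated model snapshot to the family whose counting rules it shares."""
    for family in ("gpt-3.5-turbo", "gpt-4"):
        if model.startswith(family):
            return family
    # Unknown models keep failing loudly, as before.
    raise NotImplementedError(
        f"get_num_tokens_from_messages() is not presently implemented for model {model}."
    )


family = resolve_counting_model("gpt-3.5-turbo-0613")
```

Matching on the family prefix means a future dated snapshot would not need another patch, at the cost of assuming its message format stays the same.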

#### reviewers

- @hwchase17

- @agola11

The Confluence API supports different formats of page content. The storage
format is the raw XML representation used for storage. The view format is the
HTML representation for viewing, with macros rendered as users would see
them.

Add the `content_format` parameter to `ConfluenceLoader.load()` to
specify the content format. It is set to `ContentFormat.STORAGE` by default.
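A hedged sketch of how such an enum could select the representation from a page. The member values, the `get_content` method and the page dictionary shape are assumptions modeled on the `body.storage` and `body.view` expansions of the Confluence REST API, not a copy of the loader's code.

```python
from enum import Enum
from typing import Any, Dict


class ContentFormat(str, Enum):
    STORAGE = "body.storage"  # raw XML storage representation
    VIEW = "body.view"        # rendered HTML, macros expanded

    def get_content(self, page: Dict[str, Any]) -> str:
        """Pick the matching representation out of a page dict."""
        key = self.value.split(".", 1)[1]  # "storage" or "view"
        return page["body"][key]["value"]


# Hypothetical page payload containing both representations.
page = {
    "body": {
        "storage": {"value": "<ac:structured-macro/>"},
        "view": {"value": "<p>Rendered</p>"},
    }
}
raw = ContentFormat.STORAGE.get_content(page)
rendered = ContentFormat.VIEW.get_content(page)
```

Keeping `STORAGE` as the default preserves the previous behavior for existing callers.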

#### Who can review?

Tag maintainers/contributors who might be interested: @eyurtsev

---------

Co-authored-by: Harrison Chase <hw.chase.17@gmail.com>


## Add Solidity programming language support for code splitter.

Twitter: @0xjord4n_


#### Who can review?

Tag maintainers/contributors who might be interested:

@hwchase17



This adds an implementation of MMR search for Pinecone. I also have two
semi-related observations about this vector store class:

- Maybe we should also have a
`similarity_search_by_vector_returning_embeddings` like in Supabase, but
it's not in the base `VectorStore` class, so I didn't implement it
- Speaking of the base class, it has
`similarity_search_with_relevance_scores`, but in Pinecone the method is called
`similarity_search_with_score`; maybe we should consider renaming it to
align with the `VectorStore` base class and other subclasses (or add that as an
alias for backward compatibility)
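For readers unfamiliar with MMR (maximal marginal relevance), the following is a generic, dependency-free sketch of the selection loop. It is not the Pinecone implementation from this PR; the `mmr` and `cosine` helpers and the toy vectors are assumptions for illustration.

```python
import math
from typing import List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two vectors (0.0 if either has zero length)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def mmr(
    query: Sequence[float],
    candidates: List[Sequence[float]],
    k: int = 2,
    lambda_mult: float = 0.5,
) -> List[int]:
    """Return indices of k candidates, trading relevance against redundancy."""
    selected: List[int] = []
    remaining = list(range(len(candidates)))
    while remaining and len(selected) < k:

        def score(i: int) -> float:
            relevance = cosine(query, candidates[i])
            # Similarity to the closest already-selected candidate.
            redundancy = max(
                (cosine(candidates[i], candidates[j]) for j in selected),
                default=0.0,
            )
            return lambda_mult * relevance - (1 - lambda_mult) * redundancy

        best = max(remaining, key=score)
        selected.append(best)
        remaining.remove(best)
    return selected


query = [1.0, 1.0]
candidates = [[1.0, 0.9], [1.0, 0.8], [0.2, 1.0]]
diverse = mmr(query, candidates, k=2, lambda_mult=0.5)
relevant_only = mmr(query, candidates, k=2, lambda_mult=1.0)
```

With `lambda_mult=0.5` the second pick skips the near-duplicate of the first result in favor of a less similar vector, while `lambda_mult=1.0` degenerates to plain similarity ranking.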

#### Who can review?

Tag maintainers/contributors who might be interested:

- VectorStores / Retrievers / Memory - @dev2049

# Introduces embaas document extraction API endpoints

In this PR, we add support for embaas document extraction endpoints to
Text Embedding Models (with LLMs coming in separate PRs). We currently
offer the MTEB leaderboard top performers, will continue to add top
embedding models, and will soon add support for customers to deploy their own
models. Additional documentation and information can be found
[here](https://embaas.io).

While developing this integration, I closely followed the patterns

established by other langchain integrations. Nonetheless, if there are

any aspects that require adjustments or if there's a better way to

present a new integration, let me know! :)

Additionally, I fixed some docs in the embeddings integration.

Related PR: #5976

#### Who can review?

DataLoaders

- @eyurtsev

This creates a new kind of text splitter for markdown files.

The user can supply a set of headers that they want to split the file

on.

We define a new text splitter class, `MarkdownHeaderTextSplitter`, that

does a few things:

(1) For each line, it determines the associated set of user-specified

headers

(2) It groups lines with common headers into splits

See notebook for example usage and test cases.
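The two steps above can be sketched in plain Python as follows. This is a simplified illustration, not the `MarkdownHeaderTextSplitter` code itself: the `split_on_headers` function, its return shape, and the rule that a header resets headers of the same or deeper level are assumptions.

```python
from typing import Any, Dict, List, Tuple


def split_on_headers(
    text: str, headers_to_split_on: List[Tuple[str, str]]
) -> List[Dict[str, Any]]:
    """Group lines that share the same active headers into splits."""
    # Longest markers first so "##" is not mistaken for "#".
    markers = sorted(headers_to_split_on, key=lambda h: len(h[0]), reverse=True)
    levels = {name: len(mark) for mark, name in headers_to_split_on}
    active: Dict[str, str] = {}  # header name -> current title
    splits: List[Dict[str, Any]] = []

    for line in text.splitlines():
        stripped = line.strip()
        matched = next(
            ((m, n) for m, n in markers if stripped.startswith(m + " ")), None
        )
        if matched:
            mark, name = matched
            # A new header closes every header of the same or deeper level.
            active = {n: t for n, t in active.items() if levels[n] < len(mark)}
            active[name] = stripped[len(mark):].strip()
            continue
        if not stripped:
            continue
        # (1) the line's headers are `active`; (2) merge with the previous
        # split when the headers are identical.
        if splits and splits[-1]["metadata"] == active:
            splits[-1]["content"] += "\n" + stripped
        else:
            splits.append({"content": stripped, "metadata": dict(active)})
    return splits


md = "# Intro\nHello\nWorld\n## Details\nMore\n# Outro\nBye"
headers = [("#", "Header 1"), ("##", "Header 2")]
splits = split_on_headers(md, headers)
```

Each split carries its headers as metadata, so downstream chunks keep the context of the section they came from.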

Adds a new parameter `relative_chunk_overlap` for the

`SentenceTransformersTokenTextSplitter` constructor. The parameter sets

the chunk overlap using a relative factor, e.g. for a model where the

token limit is 100, a `relative_chunk_overlap=0.5` implies that

`chunk_overlap=50`
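The arithmetic can be written out as a small sketch. The `resolve_chunk_overlap` helper, the rounding down, and the range check are assumptions for illustration rather than the splitter's actual code.

```python
def resolve_chunk_overlap(token_limit: int, relative_chunk_overlap: float) -> int:
    """Convert a relative overlap factor into an absolute number of tokens."""
    if not 0.0 <= relative_chunk_overlap < 1.0:
        raise ValueError("relative_chunk_overlap must be in [0, 1)")
    return int(token_limit * relative_chunk_overlap)


overlap = resolve_chunk_overlap(token_limit=100, relative_chunk_overlap=0.5)
```

Expressing the overlap relative to the token limit keeps it sensible when the underlying model, and therefore the limit, changes.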

Tag maintainers/contributors who might be interested:

@hwchase17, @dev2049

#### What I do

Adds the embedding API for
[DashScope](https://help.aliyun.com/product/610100.html), DAMO Academy's
unified multilingual text vector model built on an LLM
base. It supports multiple mainstream languages worldwide and offers
high-quality vector services, helping developers quickly transform text
data into vector data. Currently supported languages
include Chinese, English, Spanish, French, Portuguese, Indonesian, and
more.

#### Who can review?

Models

- @hwchase17

- @agola11

---------

Co-authored-by: Harrison Chase <hw.chase.17@gmail.com>

Added description of LangChain Decorators ✨ into the integration section


#### Who can review?

Tag maintainers/contributors who might be interested:

@hwchase17


Inspired by the filtering capability available in ChromaDB, this adds the
same functionality to the FAISS vectorstore. Since FAISS does
not have a built-in method for filtering, this uses the approach suggested in
this [thread](https://github.com/facebookresearch/faiss/issues/1079).

Langchain Issue inspiration:

https://github.com/hwchase17/langchain/issues/4572

- [x] Added filtering capability to semantic similarity search and MMR

- [x] Added test cases for filtering in

`tests/integration_tests/vectorstores/test_faiss.py`
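A common workaround for an index without native filtering is to over-fetch and then filter on metadata. The sketch below shows that idea with a brute-force search in plain Python; whether it matches the linked thread exactly is an assumption, and `filtered_search`, `fetch_k` and the tuple layout are hypothetical names for this example.

```python
from typing import Any, Dict, List, Sequence, Tuple

Doc = Tuple[str, Dict[str, Any], Sequence[float]]  # (text, metadata, embedding)


def squared_l2(a: Sequence[float], b: Sequence[float]) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def filtered_search(
    query: Sequence[float],
    docs: List[Doc],
    k: int,
    metadata_filter: Dict[str, Any],
    fetch_k: int = 20,
) -> List[str]:
    """Fetch the fetch_k nearest docs, then keep up to k that match the filter."""
    ranked = sorted(docs, key=lambda d: squared_l2(query, d[2]))[:fetch_k]
    matches = [
        text
        for text, metadata, _ in ranked
        if all(metadata.get(key) == value for key, value in metadata_filter.items())
    ]
    return matches[:k]


docs: List[Doc] = [
    ("a", {"page": 1}, [0.0, 0.0]),
    ("b", {"page": 2}, [0.1, 0.0]),
    ("c", {"page": 1}, [1.0, 1.0]),
]
hits = filtered_search([0.0, 0.0], docs, k=2, metadata_filter={"page": 1})
```

The trade-off of post-filtering is that fewer than `k` results may come back when `fetch_k` is too small for a selective filter.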

#### Who can review?

Tag maintainers/contributors who might be interested:

VectorStores / Retrievers / Memory

- @dev2049

- @hwchase17


When using `APIChain`, it sometimes failed during the intermediate step
that generates the API URL and calls the `request` function. After
some digging, I found that the URL sometimes includes a space at the
beginning, like `%20https://...api.com`, which causes the internal
`self.requests_wrapper.get` call to fail.

Adding a little string preprocessing (`.strip`) to remove the space
seems to improve the robustness of `APIChain`, so that it can send
the request and retrieve the API result more reliably.
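A tiny sketch of the preprocessing step. The `clean_api_url` helper and the example URL are placeholders for illustration and not the chain's real code.

```python
from urllib.parse import urlparse


def clean_api_url(raw_url: str) -> str:
    """Strip whitespace that the LLM may emit around a generated URL."""
    return raw_url.strip()


# Hypothetical LLM output with a leading space and trailing newline.
generated = " https://example.com/v1/forecast?city=Paris\n"
cleaned = clean_api_url(generated)
scheme = urlparse(cleaned).scheme
```

Without the strip, the leading space is percent-encoded as `%20` and the request goes to an invalid URL.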


#### Who can review?

@vowelparrot

Tag maintainers/contributors who might be interested:


HuggingFace -> Hugging Face


Obey `handler.raise_error` in `_ahandle_event_for_handler`

Exceptions raised in async callbacks were only logged as warnings, even
when `raise_error = True`.
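The intended behavior can be sketched roughly as below. Only the `raise_error` attribute comes from the description above; `FailingHandler`, `dispatch_event`, and `on_event` are hypothetical stand-ins, not LangChain's actual classes or functions.

```python
import asyncio
import logging
from typing import List

logger = logging.getLogger(__name__)


class FailingHandler:
    """Hypothetical handler whose callback always fails."""

    def __init__(self, raise_error: bool) -> None:
        self.raise_error = raise_error

    async def on_event(self, payload: str) -> None:
        raise RuntimeError(f"handler failed on {payload!r}")


async def dispatch_event(handler: FailingHandler, payload: str) -> None:
    """Run one handler; re-raise its exception only if the handler asks for it."""
    try:
        await handler.on_event(payload)
    except Exception as exc:
        if handler.raise_error:
            raise
        logger.warning("Error in %s callback: %s", type(handler).__name__, exc)


async def main() -> List[str]:
    outcomes = []
    for flag in (False, True):
        try:
            await dispatch_event(FailingHandler(raise_error=flag), "llm_start")
            outcomes.append("logged")
        except RuntimeError:
            outcomes.append("raised")
    return outcomes


outcomes = asyncio.run(main())
print(outcomes)
```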

#### Who can review?

@hwchase17

@agola11

@eyurtsev

When a Confluence document contains attachments whose content is not in
English, the extracted text is garbled.

This is mainly because no `lang` parameter is passed to pytesseract,
which supports only English by default.

So I added an `ocr_languages` parameter to `ConfluenceLoader.load()` to
support multiple languages.

Fixes an error (not previously reported) that can occur in some cases
in `RecursiveCharacterTextSplitter`.

An empty `new_separators` list (`[]`) is not `None`, so it ends up in
the `else` branch of the condition below and is passed to a function
that expects it to be non-empty.

```python
if new_separators is None:
    ...
else:
    # _split_text() expects this array to be non-empty!
    other_info = self._split_text(s, new_separators)
```

resulting in an `IndexError`:

```python
    def _split_text(self, text: str, separators: List[str]) -> List[str]:
        """Split incoming text and return chunks."""
        final_chunks = []
        # Get appropriate separator to use
>       separator = separators[-1]
E       IndexError: list index out of range

langchain/text_splitter.py:425: IndexError
```
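One way to guard against this is to test the list for truthiness rather than comparing it with `None`, so that `None` and `[]` take the same path. The following is a self-contained toy sketch of that idea, not the actual patch; `split_recursive` is a hypothetical function and much simpler than the real splitter.

```python
from typing import List, Optional


def split_recursive(text: str, separators: Optional[List[str]]) -> List[str]:
    """Toy recursive splitter that returns the text whole when no separators remain."""
    if not separators:  # covers both None and [], unlike `is None`
        return [text]
    separator, remaining = separators[0], separators[1:]
    chunks: List[str] = []
    for piece in text.split(separator):
        if piece:
            chunks.extend(split_recursive(piece, remaining))
    return chunks


no_separators = split_recursive("a b", [])
nested = split_recursive("a b\nc", ["\n", " "])
print(no_separators, nested)
```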

#### Who can review?

@hwchase17 @eyurtsev

---------

Co-authored-by: Harrison Chase <hw.chase.17@gmail.com>

This fixes a token limit bug in
`SentenceTransformersTokenTextSplitter`. Previously, the token limit was
taken from the tokenizer used by the model, on the assumption that the
two limits match. That assumption is false: for some models, the token
limit of the tokenizer (from `AutoTokenizer.from_pretrained`) does not
equal the token limit of the model.

The text splitter now takes its token limit from the sentence
transformers model instead.

Twitter: @plasmajens

#### Who can review?

@hwchase17 and/or @dev2049

---------

Co-authored-by: Harrison Chase <hw.chase.17@gmail.com>

This PR updates the Vectara integration (@hwchase17):

* Reuses a `requests.Session` to improve efficiency and speed.
* Uses Vectara's low-level API (instead of the standard API) to better
  match the user's specific chunking with LangChain.
* `add_texts` now puts all the texts into a single Vectara document, so
  indexing is much faster.
* Renames the variable `alpha` to `lambda_val` (to be consistent with
  the Vectara docs) and adds `n_context_sentence` so it is available if
  needed.
* Updates documentation and tests.

---------

Co-authored-by: Harrison Chase <hw.chase.17@gmail.com>

# Unstructured XML Loader

Adds an `UnstructuredXMLLoader` class for .xml files. Works with

unstructured>=0.6.7. A plain text representation of the text with the

XML tags will be available under the `page_content` attribute in the

doc.

### Testing

```python
from langchain.document_loaders import UnstructuredXMLLoader

loader = UnstructuredXMLLoader(
    "example_data/factbook.xml",
)
docs = loader.load()
```

## Who can review?

@hwchase17

@eyurtsev

Added an AwaDB vector store, a wrapper over AwaDB that can be used as
vector storage and provides efficient similarity search.

Added integration tests for the vector store.

Added a Jupyter notebook with an example.

Deleted an unneeded empty file and resolved the conflict
(https://github.com/hwchase17/langchain/pull/5886).

Please check, thanks!

@dev2049

@hwchase17

---------

Co-authored-by: ljeagle <vincent_jieli@yeah.net>

Co-authored-by: vincent <awadb.vincent@gmail.com>

Inspired by the SQL chain, this adds the following three parameters to
the Graph Cypher Chain:

- top_k: Limits the number of results from the database to be used as
  context
- return_direct: Returns database results without transforming them to
  natural language
- return_intermediate_steps: Returns intermediate steps
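How the three parameters might interact can be sketched with stubbed components. Only the parameter names come from the list above; `answer_question`, its callable arguments, the default `top_k`, and the output keys are assumptions made for illustration and do not reproduce the chain's real code.

```python
from typing import Any, Callable, Dict, List

Rows = List[Dict[str, Any]]


def answer_question(
    question: str,
    generate_cypher: Callable[[str], str],
    run_query: Callable[[str], Rows],
    summarize: Callable[[str, Rows], str],
    top_k: int = 10,
    return_direct: bool = False,
    return_intermediate_steps: bool = False,
) -> Dict[str, Any]:
    """Hypothetical flow: generate a query, fetch rows, optionally summarize."""
    query = generate_cypher(question)
    context = run_query(query)[:top_k]  # keep at most top_k rows as context
    # return_direct skips the natural-language step and returns the raw rows.
    result = context if return_direct else summarize(question, context)
    output: Dict[str, Any] = {"result": result}
    if return_intermediate_steps:
        output["intermediate_steps"] = [{"query": query}, {"context": context}]
    return output


# Stubs standing in for the LLM and the graph database.
rows = [{"name": f"movie-{i}"} for i in range(5)]
output = answer_question(
    "Which movies are in the graph?",
    generate_cypher=lambda q: "MATCH (m:Movie) RETURN m.name AS name",
    run_query=lambda query: rows,
    summarize=lambda q, ctx: f"Found {len(ctx)} movies.",
    top_k=2,
    return_intermediate_steps=True,
)
print(output["result"])
```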

Hi,

This is a fix for https://github.com/hwchase17/langchain/pull/5014. That
PR did not add the ability to recover from the
`ValueError(f"Could not parse LLM output: {llm_output}")` error in
`_atake_next_step`.

<!--

Fixed a simple typo on

https://python.langchain.com/en/latest/modules/indexes/retrievers/examples/vectorstore.html

where the word "use" was missing.

#### Who can review?