mirror of

https://github.com/hwchase17/langchain.git

synced 2026-02-12 04:01:05 +00:00

Compare commits

197 Commits

| Author | SHA1 | Date | |

|---|---|---|---|

|

|

6e4e7d2637 | ||

|

|

5e57496225 | ||

|

|

b9e5b27a99 | ||

|

|

79a44c8225 | ||

|

|

2f49c96532 | ||

|

|

40469eef7f | ||

|

|

125afb51d7 | ||

|

|

7bf5b0ccd3 | ||

|

|

7a4e1b72a8 | ||

|

|

f5afb60116 | ||

|

|

f7f118e021 | ||

|

|

544cc7f395 | ||

|

|

cd9336469e | ||

|

|

d8967e28d0 | ||

|

|

b4d6a425a2 | ||

|

|

fc1d48814c | ||

|

|

9b78bb7393 | ||

|

|

a32c85951e | ||

|

|

95e780d6f9 | ||

|

|

247a88f2f9 | ||

|

|

6dc86ad48f | ||

|

|

c9f93f5f74 | ||

|

|

8cded3fdad | ||

|

|

dca21078ad | ||

|

|

6dbd29e440 | ||

|

|

481de8df7f | ||

|

|

a31c9511e8 | ||

|

|

ec489599fd | ||

|

|

3d0449bb45 | ||

|

|

632c65d64b | ||

|

|

15cdfa9e7f | ||

|

|

704b0feb38 | ||

|

|

aecd1c8ee3 | ||

|

|

58a93f88da | ||

|

|

aa439ac2ff | ||

|

|

e131156805 | ||

|

|

0316900d2f | ||

|

|

5c64b86ba3 | ||

|

|

c2f21a519f | ||

|

|

629fda3957 | ||

|

|

f8e4048cd8 | ||

|

|

bd780a8223 | ||

|

|

7149d33c71 | ||

|

|

f240651bd8 | ||

|

|

13d1df2140 | ||

|

|

5b34931948 | ||

|

|

f0926bad9f | ||

|

|

b4914888a7 | ||

|

|

2ffb90b161 | ||

|

|

ad87584c35 | ||

|

|

fd69cc7e42 | ||

|

|

b6a101d121 | ||

|

|

6f47133d8a | ||

|

|

1dfb6a2a44 | ||

|

|

270384fb44 | ||

|

|

c913acdb4c | ||

|

|

1e19e004af | ||

|

|

60c837c58a | ||

|

|

3acf423de0 | ||

|

|

26314d7004 | ||

|

|

a9e637b8f5 | ||

|

|

1140bd79a0 | ||

|

|

007babb363 | ||

|

|

c9ae0c5808 | ||

|

|

3d871853df | ||

|

|

00bc8df640 | ||

|

|

a63cfad558 | ||

|

|

f0d4f36219 | ||

|

|

b410dc76aa | ||

|

|

4d730a9bbc | ||

|

|

af7f20fa42 | ||

|

|

659c67e896 | ||

|

|

e519a81a05 | ||

|

|

b026a62bc4 | ||

|

|

d6d6f322a9 | ||

|

|

41832042cc | ||

|

|

2b975de94d | ||

|

|

1f88b11c99 | ||

|

|

f5da9a5161 | ||

|

|

8a4709582f | ||

|

|

de7afc52a9 | ||

|

|

c7b083ab56 | ||

|

|

dc3ac8082b | ||

|

|

0a9f04bad9 | ||

|

|

d17dea30ce | ||

|

|

e90d007db3 | ||

|

|

585f60a5aa | ||

|

|

90973c10b1 | ||

|

|

fe1eb8ca5f | ||

|

|

10dab053b4 | ||

|

|

c969a779c9 | ||

|

|

7ed8d00bba | ||

|

|

9cceb4a02a | ||

|

|

c841b2cc51 | ||

|

|

28cedab1a4 | ||

|

|

cb5c5d1a4d | ||

|

|

fd0d631f39 | ||

|

|

3fb4997ad8 | ||

|

|

cc50a4579e | ||

|

|

00c39ea409 | ||

|

|

870cd33701 | ||

|

|

393cd3c796 | ||

|

|

347ea24524 | ||

|

|

6c13003dd3 | ||

|

|

b21c485ad5 | ||

|

|

d85f57ef9c | ||

|

|

595ebe1796 | ||

|

|

3b75b004fc | ||

|

|

3a2782053b | ||

|

|

e4cfaa5680 | ||

|

|

00d3ec5ed8 | ||

|

|

fe572a5a0d | ||

|

|

94b2f536f3 | ||

|

|

715bd06f04 | ||

|

|

337d1e78ff | ||

|

|

b4b7e8a54d | ||

|

|

8f608f4e75 | ||

|

|

134fc87e48 | ||

|

|

035aed8dc9 | ||

|

|

9a5268dc5f | ||

|

|

acfda4d1d8 | ||

|

|

a9dddd8a32 | ||

|

|

579ad85785 | ||

|

|

609b14a570 | ||

|

|

1ddd6dbf0b | ||

|

|

2d0ff1a06d | ||

|

|

09f9464254 | ||

|

|

582950291c | ||

|

|

5a0844bae1 | ||

|

|

e49284acde | ||

|

|

67dde7d893 | ||

|

|

90e388b9f8 | ||

|

|

64f44c6483 | ||

|

|

4b59bb55c7 | ||

|

|

7a8f1d2854 | ||

|

|

632c2b49da | ||

|

|

e57b045402 | ||

|

|

0ce4767076 | ||

|

|

6c66f51fb8 | ||

|

|

2eeaccf01c | ||

|

|

e6a9ee64b3 | ||

|

|

4e9ee566ef | ||

|

|

fc009f61c8 | ||

|

|

3dfe1cf60e | ||

|

|

a4a1ee6b5d | ||

|

|

2d3918c152 | ||

|

|

1c03205cc2 | ||

|

|

feec4c61f4 | ||

|

|

097684e5f2 | ||

|

|

fd1fcb5a7d | ||

|

|

3207a74829 | ||

|

|

597378d1f6 | ||

|

|

64b9843b5b | ||

|

|

5d86a6acf1 | ||

|

|

35a3218e84 | ||

|

|

65c0c73597 | ||

|

|

33a001933a | ||

|

|

fe804d2a01 | ||

|

|

68f039704c | ||

|

|

bcfd071784 | ||

|

|

7d90691adb | ||

|

|

f83c36d8fd | ||

|

|

6be67279fb | ||

|

|

3dc49a04a3 | ||

|

|

5c907d9998 | ||

|

|

1b7cfd7222 | ||

|

|

7859245fc5 | ||

|

|

529a1f39b9 | ||

|

|

f5a4bf0ce4 | ||

|

|

a0453ebcf5 | ||

|

|

ffb7de34ca | ||

|

|

09085c32e3 | ||

|

|

8b91a21e37 | ||

|

|

55b52bad21 | ||

|

|

b35260ed47 | ||

|

|

7bea3b302c | ||

|

|

b5449a866d | ||

|

|

8441cbfc03 | ||

|

|

4ab66c4f52 | ||

|

|

27f80784d0 | ||

|

|

031e32f331 | ||

|

|

ccee1aedd2 | ||

|

|

e2c26909f2 | ||

|

|

3e879b47c1 | ||

|

|

859502b16c | ||

|

|

c33e055f17 | ||

|

|

a5bf8c9b9d | ||

|

|

0874872dee | ||

|

|

ef25904ecb | ||

|

|

9d6f649ba5 | ||

|

|

c58932e8fd | ||

|

|

6e85cbcce3 | ||

|

|

b25dbcb5b3 | ||

|

|

a554e94a1a | ||

|

|

5f34dffedc | ||

|

|

aff33d52c5 | ||

|

|

f16c1fb6df |

6

.dockerignore

Normal file

6

.dockerignore

Normal file

@@ -0,0 +1,6 @@

|

||||

.venv

|

||||

.github

|

||||

.git

|

||||

.mypy_cache

|

||||

.pytest_cache

|

||||

Dockerfile

|

||||

8

.github/CONTRIBUTING.md

vendored

8

.github/CONTRIBUTING.md

vendored

@@ -46,7 +46,7 @@ good code into the codebase.

|

||||

|

||||

### 🏭Release process

|

||||

|

||||

As of now, LangChain has an ad hoc release process: releases are cut with high frequency via by

|

||||

As of now, LangChain has an ad hoc release process: releases are cut with high frequency by

|

||||

a developer and published to [PyPI](https://pypi.org/project/langchain/).

|

||||

|

||||

LangChain follows the [semver](https://semver.org/) versioning standard. However, as pre-1.0 software,

|

||||

@@ -123,6 +123,12 @@ To run unit tests:

|

||||

make test

|

||||

```

|

||||

|

||||

To run unit tests in Docker:

|

||||

|

||||

```bash

|

||||

make docker_tests

|

||||

```

|

||||

|

||||

If you add new logic, please add a unit test.

|

||||

|

||||

Integration tests cover logic that requires making calls to outside APIs (often integration with other services).

|

||||

|

||||

1

.gitignore

vendored

1

.gitignore

vendored

@@ -141,3 +141,4 @@ wandb/

|

||||

|

||||

# asdf tool versions

|

||||

.tool-versions

|

||||

/.ruff_cache/

|

||||

|

||||

44

Dockerfile

Normal file

44

Dockerfile

Normal file

@@ -0,0 +1,44 @@

|

||||

# This is a Dockerfile for running unit tests

|

||||

|

||||

# Use the Python base image

|

||||

FROM python:3.11.2-bullseye AS builder

|

||||

|

||||

# Define the version of Poetry to install (default is 1.4.2)

|

||||

ARG POETRY_VERSION=1.4.2

|

||||

|

||||

# Define the directory to install Poetry to (default is /opt/poetry)

|

||||

ARG POETRY_HOME=/opt/poetry

|

||||

|

||||

# Create a Python virtual environment for Poetry and install it

|

||||

RUN python3 -m venv ${POETRY_HOME} && \

|

||||

$POETRY_HOME/bin/pip install --upgrade pip && \

|

||||

$POETRY_HOME/bin/pip install poetry==${POETRY_VERSION}

|

||||

|

||||

# Test if Poetry is installed in the expected path

|

||||

RUN echo "Poetry version:" && $POETRY_HOME/bin/poetry --version

|

||||

|

||||

# Set the working directory for the app

|

||||

WORKDIR /app

|

||||

|

||||

# Use a multi-stage build to install dependencies

|

||||

FROM builder AS dependencies

|

||||

|

||||

# Copy only the dependency files for installation

|

||||

COPY pyproject.toml poetry.lock poetry.toml ./

|

||||

|

||||

# Install the Poetry dependencies (this layer will be cached as long as the dependencies don't change)

|

||||

RUN $POETRY_HOME/bin/poetry install --no-interaction --no-ansi --with test

|

||||

|

||||

# Use a multi-stage build to run tests

|

||||

FROM dependencies AS tests

|

||||

|

||||

# Copy the rest of the app source code (this layer will be invalidated and rebuilt whenever the source code changes)

|

||||

COPY . .

|

||||

|

||||

RUN /opt/poetry/bin/poetry install --no-interaction --no-ansi --with test

|

||||

|

||||

# Set the entrypoint to run tests using Poetry

|

||||

ENTRYPOINT ["/opt/poetry/bin/poetry", "run", "pytest"]

|

||||

|

||||

# Set the default command to run all unit tests

|

||||

CMD ["tests/unit_tests"]

|

||||

19

Makefile

19

Makefile

@@ -1,7 +1,7 @@

|

||||

.PHONY: all clean format lint test tests test_watch integration_tests help

|

||||

.PHONY: all clean format lint test tests test_watch integration_tests docker_tests help

|

||||

|

||||

all: help

|

||||

|

||||

|

||||

coverage:

|

||||

poetry run pytest --cov \

|

||||

--cov-config=.coveragerc \

|

||||

@@ -23,9 +23,13 @@ format:

|

||||

poetry run black .

|

||||

poetry run ruff --select I --fix .

|

||||

|

||||

lint:

|

||||

poetry run mypy .

|

||||

poetry run black . --check

|

||||

PYTHON_FILES=.

|

||||

lint: PYTHON_FILES=.

|

||||

lint_diff: PYTHON_FILES=$(shell git diff --name-only --diff-filter=d master | grep -E '\.py$$')

|

||||

|

||||

lint lint_diff:

|

||||

poetry run mypy $(PYTHON_FILES)

|

||||

poetry run black $(PYTHON_FILES) --check

|

||||

poetry run ruff .

|

||||

|

||||

test:

|

||||

@@ -40,6 +44,10 @@ test_watch:

|

||||

integration_tests:

|

||||

poetry run pytest tests/integration_tests

|

||||

|

||||

docker_tests:

|

||||

docker build -t my-langchain-image:test .

|

||||

docker run --rm my-langchain-image:test

|

||||

|

||||

help:

|

||||

@echo '----'

|

||||

@echo 'coverage - run unit tests and generate coverage report'

|

||||

@@ -51,3 +59,4 @@ help:

|

||||

@echo 'test - run unit tests'

|

||||

@echo 'test_watch - run unit tests in watch mode'

|

||||

@echo 'integration_tests - run integration tests'

|

||||

@echo 'docker_tests - run unit tests in docker'

|

||||

|

||||

@@ -73,7 +73,7 @@ Memory is the concept of persisting state between calls of a chain/agent. LangCh

|

||||

|

||||

[BETA] Generative models are notoriously hard to evaluate with traditional metrics. One new way of evaluating them is using language models themselves to do the evaluation. LangChain provides some prompts/chains for assisting in this.

|

||||

|

||||

For more information on these concepts, please see our [full documentation](https://langchain.readthedocs.io/en/latest/?).

|

||||

For more information on these concepts, please see our [full documentation](https://langchain.readthedocs.io/en/latest/).

|

||||

|

||||

## 💁 Contributing

|

||||

|

||||

|

||||

BIN

docs/_static/ApifyActors.png

vendored

Normal file

BIN

docs/_static/ApifyActors.png

vendored

Normal file

Binary file not shown.

|

After Width: | Height: | Size: 559 KiB |

293

docs/ecosystem/aim_tracking.ipynb

Normal file

293

docs/ecosystem/aim_tracking.ipynb

Normal file

@@ -0,0 +1,293 @@

|

||||

{

|

||||

"cells": [

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"source": [

|

||||

"# Aim\n",

|

||||

"\n",

|

||||

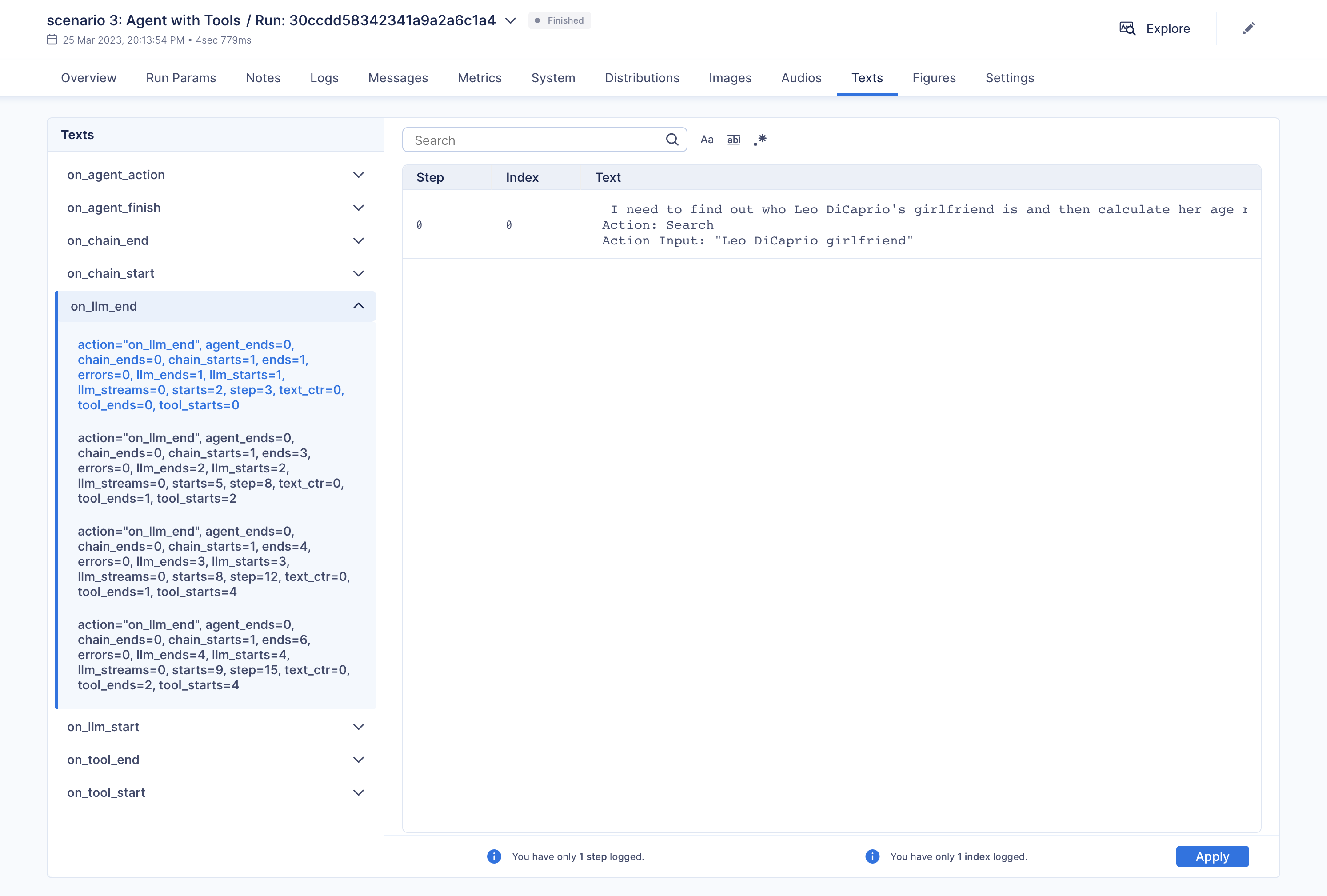

"Aim makes it super easy to visualize and debug LangChain executions. Aim tracks inputs and outputs of LLMs and tools, as well as actions of agents. \n",

|

||||

"\n",

|

||||

"With Aim, you can easily debug and examine an individual execution:\n",

|

||||

"\n",

|

||||

"\n",

|

||||

"\n",

|

||||

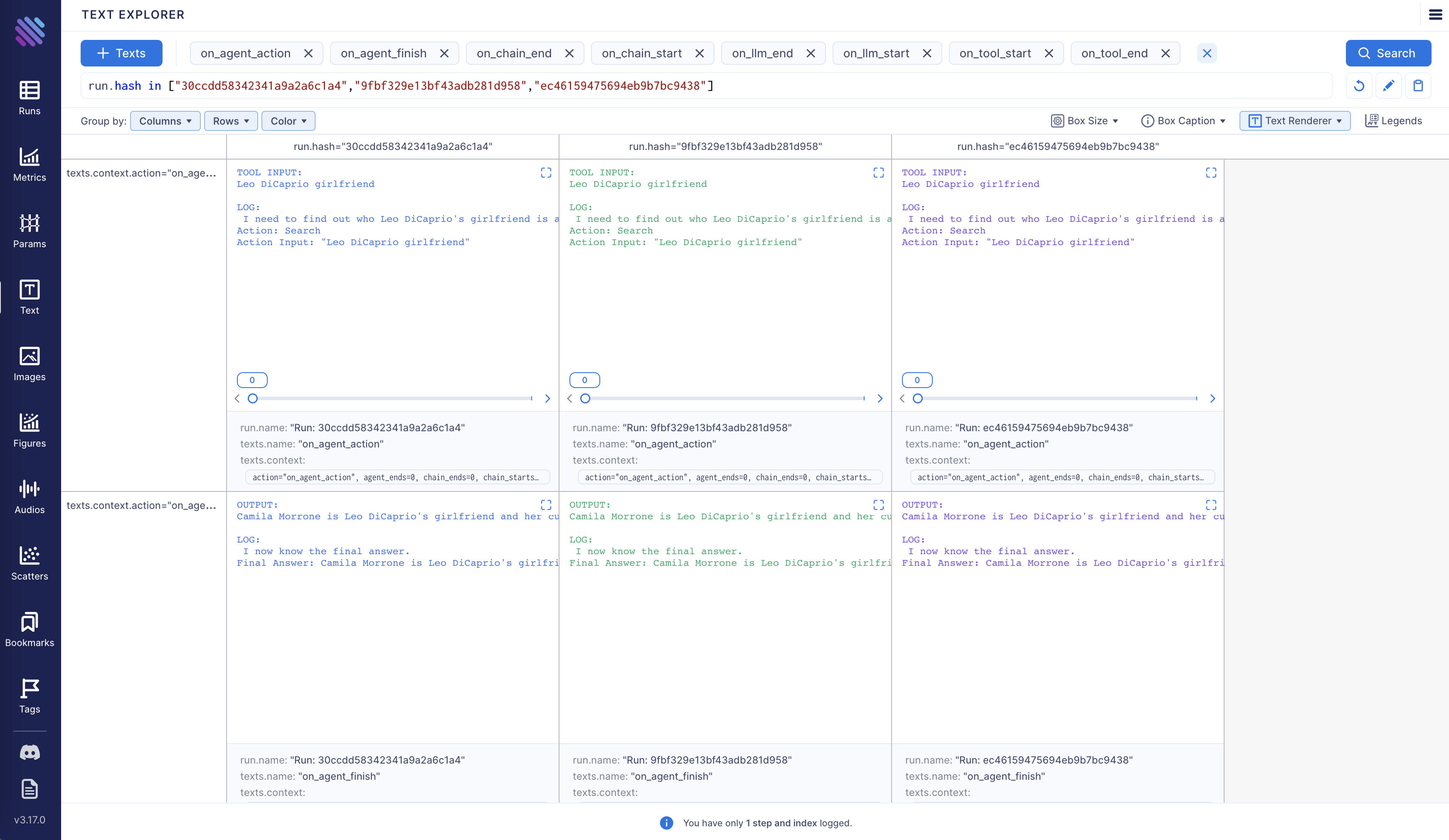

"Additionally, you have the option to compare multiple executions side by side:\n",

|

||||

"\n",

|

||||

"\n",

|

||||

"\n",

|

||||

"Aim is fully open source, [learn more](https://github.com/aimhubio/aim) about Aim on GitHub.\n",

|

||||

"\n",

|

||||

"Let's move forward and see how to enable and configure Aim callback."

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"source": [

|

||||

"<h3>Tracking LangChain Executions with Aim</h3>"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"source": [

|

||||

"In this notebook we will explore three usage scenarios. To start off, we will install the necessary packages and import certain modules. Subsequently, we will configure two environment variables that can be established either within the Python script or through the terminal."

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": null,

|

||||

"metadata": {

|

||||

"id": "mf88kuCJhbVu"

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

"!pip install aim\n",

|

||||

"!pip install langchain\n",

|

||||

"!pip install openai\n",

|

||||

"!pip install google-search-results"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": null,

|

||||

"metadata": {

|

||||

"id": "g4eTuajwfl6L"

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

"import os\n",

|

||||

"from datetime import datetime\n",

|

||||

"\n",

|

||||

"from langchain.llms import OpenAI\n",

|

||||

"from langchain.callbacks.base import CallbackManager\n",

|

||||

"from langchain.callbacks import AimCallbackHandler, StdOutCallbackHandler"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"source": [

|

||||

"Our examples use a GPT model as the LLM, and OpenAI offers an API for this purpose. You can obtain the key from the following link: https://platform.openai.com/account/api-keys .\n",

|

||||

"\n",

|

||||

"We will use the SerpApi to retrieve search results from Google. To acquire the SerpApi key, please go to https://serpapi.com/manage-api-key ."

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": null,

|

||||

"metadata": {

|

||||

"id": "T1bSmKd6V2If"

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

"os.environ[\"OPENAI_API_KEY\"] = \"...\"\n",

|

||||

"os.environ[\"SERPAPI_API_KEY\"] = \"...\""

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {

|

||||

"id": "QenUYuBZjIzc"

|

||||

},

|

||||

"source": [

|

||||

"The event methods of `AimCallbackHandler` accept the LangChain module or agent as input and log at least the prompts and generated results, as well as the serialized version of the LangChain module, to the designated Aim run."

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": null,

|

||||

"metadata": {

|

||||

"id": "KAz8weWuUeXF"

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

"session_group = datetime.now().strftime(\"%m.%d.%Y_%H.%M.%S\")\n",

|

||||

"aim_callback = AimCallbackHandler(\n",

|

||||

" repo=\".\",\n",

|

||||

" experiment_name=\"scenario 1: OpenAI LLM\",\n",

|

||||

")\n",

|

||||

"\n",

|

||||

"manager = CallbackManager([StdOutCallbackHandler(), aim_callback])\n",

|

||||

"llm = OpenAI(temperature=0, callback_manager=manager, verbose=True)"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {

|

||||

"id": "b8WfByB4fl6N"

|

||||

},

|

||||

"source": [

|

||||

"The `flush_tracker` function is used to record LangChain assets on Aim. By default, the session is reset rather than being terminated outright."

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"source": [

|

||||

"<h3>Scenario 1</h3> In the first scenario, we will use OpenAI LLM."

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": null,

|

||||

"metadata": {

|

||||

"id": "o_VmneyIUyx8"

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

"# scenario 1 - LLM\n",

|

||||

"llm_result = llm.generate([\"Tell me a joke\", \"Tell me a poem\"] * 3)\n",

|

||||

"aim_callback.flush_tracker(\n",

|

||||

" langchain_asset=llm,\n",

|

||||

" experiment_name=\"scenario 2: Chain with multiple SubChains on multiple generations\",\n",

|

||||

")\n"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"source": [

|

||||

"<h3>Scenario 2</h3> Scenario two involves chaining with multiple SubChains across multiple generations."

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": null,

|

||||

"metadata": {

|

||||

"id": "trxslyb1U28Y"

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

"from langchain.prompts import PromptTemplate\n",

|

||||

"from langchain.chains import LLMChain"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": null,

|

||||

"metadata": {

|

||||

"id": "uauQk10SUzF6"

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

"# scenario 2 - Chain\n",

|

||||

"template = \"\"\"You are a playwright. Given the title of play, it is your job to write a synopsis for that title.\n",

|

||||

"Title: {title}\n",

|

||||

"Playwright: This is a synopsis for the above play:\"\"\"\n",

|

||||

"prompt_template = PromptTemplate(input_variables=[\"title\"], template=template)\n",

|

||||

"synopsis_chain = LLMChain(llm=llm, prompt=prompt_template, callback_manager=manager)\n",

|

||||

"\n",

|

||||

"test_prompts = [\n",

|

||||

" {\"title\": \"documentary about good video games that push the boundary of game design\"},\n",

|

||||

" {\"title\": \"the phenomenon behind the remarkable speed of cheetahs\"},\n",

|

||||

" {\"title\": \"the best in class mlops tooling\"},\n",

|

||||

"]\n",

|

||||

"synopsis_chain.apply(test_prompts)\n",

|

||||

"aim_callback.flush_tracker(\n",

|

||||

" langchain_asset=synopsis_chain, experiment_name=\"scenario 3: Agent with Tools\"\n",

|

||||

")"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"source": [

|

||||

"<h3>Scenario 3</h3> The third scenario involves an agent with tools."

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": null,

|

||||

"metadata": {

|

||||

"id": "_jN73xcPVEpI"

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

"from langchain.agents import initialize_agent, load_tools\n",

|

||||

"from langchain.agents import AgentType"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": null,

|

||||

"metadata": {

|

||||

"colab": {

|

||||

"base_uri": "https://localhost:8080/"

|

||||

},

|

||||

"id": "Gpq4rk6VT9cu",

|

||||

"outputId": "68ae261e-d0a2-4229-83c4-762562263b66"

|

||||

},

|

||||

"outputs": [

|

||||

{

|

||||

"name": "stdout",

|

||||

"output_type": "stream",

|

||||

"text": [

|

||||

"\n",

|

||||

"\n",

|

||||

"\u001b[1m> Entering new AgentExecutor chain...\u001b[0m\n",

|

||||

"\u001b[32;1m\u001b[1;3m I need to find out who Leo DiCaprio's girlfriend is and then calculate her age raised to the 0.43 power.\n",

|

||||

"Action: Search\n",

|

||||

"Action Input: \"Leo DiCaprio girlfriend\"\u001b[0m\n",

|

||||

"Observation: \u001b[36;1m\u001b[1;3mLeonardo DiCaprio seemed to prove a long-held theory about his love life right after splitting from girlfriend Camila Morrone just months ...\u001b[0m\n",

|

||||

"Thought:\u001b[32;1m\u001b[1;3m I need to find out Camila Morrone's age\n",

|

||||

"Action: Search\n",

|

||||

"Action Input: \"Camila Morrone age\"\u001b[0m\n",

|

||||

"Observation: \u001b[36;1m\u001b[1;3m25 years\u001b[0m\n",

|

||||

"Thought:\u001b[32;1m\u001b[1;3m I need to calculate 25 raised to the 0.43 power\n",

|

||||

"Action: Calculator\n",

|

||||

"Action Input: 25^0.43\u001b[0m\n",

|

||||

"Observation: \u001b[33;1m\u001b[1;3mAnswer: 3.991298452658078\n",

|

||||

"\u001b[0m\n",

|

||||

"Thought:\u001b[32;1m\u001b[1;3m I now know the final answer\n",

|

||||

"Final Answer: Camila Morrone is Leo DiCaprio's girlfriend and her current age raised to the 0.43 power is 3.991298452658078.\u001b[0m\n",

|

||||

"\n",

|

||||

"\u001b[1m> Finished chain.\u001b[0m\n"

|

||||

]

|

||||

}

|

||||

],

|

||||

"source": [

|

||||

"# scenario 3 - Agent with Tools\n",

|

||||

"tools = load_tools([\"serpapi\", \"llm-math\"], llm=llm, callback_manager=manager)\n",

|

||||

"agent = initialize_agent(\n",

|

||||

" tools,\n",

|

||||

" llm,\n",

|

||||

" agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION,\n",

|

||||

" callback_manager=manager,\n",

|

||||

" verbose=True,\n",

|

||||

")\n",

|

||||

"agent.run(\n",

|

||||

" \"Who is Leo DiCaprio's girlfriend? What is her current age raised to the 0.43 power?\"\n",

|

||||

")\n",

|

||||

"aim_callback.flush_tracker(langchain_asset=agent, reset=False, finish=True)"

|

||||

]

|

||||

}

|

||||

],

|

||||

"metadata": {

|

||||

"accelerator": "GPU",

|

||||

"colab": {

|

||||

"provenance": []

|

||||

},

|

||||

"gpuClass": "standard",

|

||||

"kernelspec": {

|

||||

"display_name": "Python 3 (ipykernel)",

|

||||

"language": "python",

|

||||

"name": "python3"

|

||||

},

|

||||

"language_info": {

|

||||

"codemirror_mode": {

|

||||

"name": "ipython",

|

||||

"version": 3

|

||||

},

|

||||

"file_extension": ".py",

|

||||

"mimetype": "text/x-python",

|

||||

"name": "python",

|

||||

"nbconvert_exporter": "python",

|

||||

"pygments_lexer": "ipython3",

|

||||

"version": "3.9.1"

|

||||

}

|

||||

},

|

||||

"nbformat": 4,

|

||||

"nbformat_minor": 1

|

||||

}

|

||||

46

docs/ecosystem/apify.md

Normal file

46

docs/ecosystem/apify.md

Normal file

@@ -0,0 +1,46 @@

|

||||

# Apify

|

||||

|

||||

This page covers how to use [Apify](https://apify.com) within LangChain.

|

||||

|

||||

## Overview

|

||||

|

||||

Apify is a cloud platform for web scraping and data extraction,

|

||||

which provides an [ecosystem](https://apify.com/store) of more than a thousand

|

||||

ready-made apps called *Actors* for various scraping, crawling, and extraction use cases.

|

||||

|

||||

[](https://apify.com/store)

|

||||

|

||||

This integration enables you run Actors on the Apify platform and load their results into LangChain to feed your vector

|

||||

indexes with documents and data from the web, e.g. to generate answers from websites with documentation,

|

||||

blogs, or knowledge bases.

|

||||

|

||||

|

||||

## Installation and Setup

|

||||

|

||||

- Install the Apify API client for Python with `pip install apify-client`

|

||||

- Get your [Apify API token](https://console.apify.com/account/integrations) and either set it as

|

||||

an environment variable (`APIFY_API_TOKEN`) or pass it to the `ApifyWrapper` as `apify_api_token` in the constructor.

|

||||

|

||||

|

||||

## Wrappers

|

||||

|

||||

### Utility

|

||||

|

||||

You can use the `ApifyWrapper` to run Actors on the Apify platform.

|

||||

|

||||

```python

|

||||

from langchain.utilities import ApifyWrapper

|

||||

```

|

||||

|

||||

For a more detailed walkthrough of this wrapper, see [this notebook](../modules/agents/tools/examples/apify.ipynb).

|

||||

|

||||

|

||||

### Loader

|

||||

|

||||

You can also use our `ApifyDatasetLoader` to get data from Apify dataset.

|

||||

|

||||

```python

|

||||

from langchain.document_loaders import ApifyDatasetLoader

|

||||

```

|

||||

|

||||

For a more detailed walkthrough of this loader, see [this notebook](../modules/indexes/document_loaders/examples/apify_dataset.ipynb).

|

||||

589

docs/ecosystem/clearml_tracking.ipynb

Normal file

589

docs/ecosystem/clearml_tracking.ipynb

Normal file

@@ -0,0 +1,589 @@

|

||||

{

|

||||

"cells": [

|

||||

{

|

||||

"attachments": {},

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"source": [

|

||||

"# ClearML Integration\n",

|

||||

"\n",

|

||||

"In order to properly keep track of your langchain experiments and their results, you can enable the ClearML integration. ClearML is an experiment manager that neatly tracks and organizes all your experiment runs.\n",

|

||||

"\n",

|

||||

"<a target=\"_blank\" href=\"https://colab.research.google.com/github/hwchase17/langchain/blob/master/docs/ecosystem/clearml_tracking.ipynb\">\n",

|

||||

" <img src=\"https://colab.research.google.com/assets/colab-badge.svg\" alt=\"Open In Colab\"/>\n",

|

||||

"</a>"

|

||||

]

|

||||

},

|

||||

{

|

||||

"attachments": {},

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"source": [

|

||||

"# Getting API Credentials\n",

|

||||

"\n",

|

||||

"We'll be using quite some APIs in this notebook, here is a list and where to get them:\n",

|

||||

"\n",

|

||||

"- ClearML: https://app.clear.ml/settings/workspace-configuration\n",

|

||||

"- OpenAI: https://platform.openai.com/account/api-keys\n",

|

||||

"- SerpAPI (google search): https://serpapi.com/dashboard"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": 2,

|

||||

"metadata": {},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

"import os\n",

|

||||

"os.environ[\"CLEARML_API_ACCESS_KEY\"] = \"\"\n",

|

||||

"os.environ[\"CLEARML_API_SECRET_KEY\"] = \"\"\n",

|

||||

"\n",

|

||||

"os.environ[\"OPENAI_API_KEY\"] = \"\"\n",

|

||||

"os.environ[\"SERPAPI_API_KEY\"] = \"\""

|

||||

]

|

||||

},

|

||||

{

|

||||

"attachments": {},

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"source": [

|

||||

"# Setting Up"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": null,

|

||||

"metadata": {},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

"!pip install clearml\n",

|

||||

"!pip install pandas\n",

|

||||

"!pip install textstat\n",

|

||||

"!pip install spacy\n",

|

||||

"!python -m spacy download en_core_web_sm"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": 3,

|

||||

"metadata": {},

|

||||

"outputs": [

|

||||

{

|

||||

"name": "stdout",

|

||||

"output_type": "stream",

|

||||

"text": [

|

||||

"The clearml callback is currently in beta and is subject to change based on updates to `langchain`. Please report any issues to https://github.com/allegroai/clearml/issues with the tag `langchain`.\n"

|

||||

]

|

||||

}

|

||||

],

|

||||

"source": [

|

||||

"from datetime import datetime\n",

|

||||

"from langchain.callbacks import ClearMLCallbackHandler, StdOutCallbackHandler\n",

|

||||

"from langchain.callbacks.base import CallbackManager\n",

|

||||

"from langchain.llms import OpenAI\n",

|

||||

"\n",

|

||||

"# Setup and use the ClearML Callback\n",

|

||||

"clearml_callback = ClearMLCallbackHandler(\n",

|

||||

" task_type=\"inference\",\n",

|

||||

" project_name=\"langchain_callback_demo\",\n",

|

||||

" task_name=\"llm\",\n",

|

||||

" tags=[\"test\"],\n",

|

||||

" # Change the following parameters based on the amount of detail you want tracked\n",

|

||||

" visualize=True,\n",

|

||||

" complexity_metrics=True,\n",

|

||||

" stream_logs=True\n",

|

||||

")\n",

|

||||

"manager = CallbackManager([StdOutCallbackHandler(), clearml_callback])\n",

|

||||

"# Get the OpenAI model ready to go\n",

|

||||

"llm = OpenAI(temperature=0, callback_manager=manager, verbose=True)"

|

||||

]

|

||||

},

|

||||

{

|

||||

"attachments": {},

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"source": [

|

||||

"# Scenario 1: Just an LLM\n",

|

||||

"\n",

|

||||

"First, let's just run a single LLM a few times and capture the resulting prompt-answer conversation in ClearML"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": 5,

|

||||

"metadata": {},

|

||||

"outputs": [

|

||||

{

|

||||

"name": "stdout",

|

||||

"output_type": "stream",

|

||||

"text": [

|

||||

"{'action': 'on_llm_start', 'name': 'OpenAI', 'step': 3, 'starts': 2, 'ends': 1, 'errors': 0, 'text_ctr': 0, 'chain_starts': 0, 'chain_ends': 0, 'llm_starts': 2, 'llm_ends': 1, 'llm_streams': 0, 'tool_starts': 0, 'tool_ends': 0, 'agent_ends': 0, 'prompts': 'Tell me a joke'}\n",

|

||||

"{'action': 'on_llm_start', 'name': 'OpenAI', 'step': 3, 'starts': 2, 'ends': 1, 'errors': 0, 'text_ctr': 0, 'chain_starts': 0, 'chain_ends': 0, 'llm_starts': 2, 'llm_ends': 1, 'llm_streams': 0, 'tool_starts': 0, 'tool_ends': 0, 'agent_ends': 0, 'prompts': 'Tell me a poem'}\n",

|

||||

"{'action': 'on_llm_start', 'name': 'OpenAI', 'step': 3, 'starts': 2, 'ends': 1, 'errors': 0, 'text_ctr': 0, 'chain_starts': 0, 'chain_ends': 0, 'llm_starts': 2, 'llm_ends': 1, 'llm_streams': 0, 'tool_starts': 0, 'tool_ends': 0, 'agent_ends': 0, 'prompts': 'Tell me a joke'}\n",

|

||||

"{'action': 'on_llm_start', 'name': 'OpenAI', 'step': 3, 'starts': 2, 'ends': 1, 'errors': 0, 'text_ctr': 0, 'chain_starts': 0, 'chain_ends': 0, 'llm_starts': 2, 'llm_ends': 1, 'llm_streams': 0, 'tool_starts': 0, 'tool_ends': 0, 'agent_ends': 0, 'prompts': 'Tell me a poem'}\n",

|

||||

"{'action': 'on_llm_start', 'name': 'OpenAI', 'step': 3, 'starts': 2, 'ends': 1, 'errors': 0, 'text_ctr': 0, 'chain_starts': 0, 'chain_ends': 0, 'llm_starts': 2, 'llm_ends': 1, 'llm_streams': 0, 'tool_starts': 0, 'tool_ends': 0, 'agent_ends': 0, 'prompts': 'Tell me a joke'}\n",

|

||||

"{'action': 'on_llm_start', 'name': 'OpenAI', 'step': 3, 'starts': 2, 'ends': 1, 'errors': 0, 'text_ctr': 0, 'chain_starts': 0, 'chain_ends': 0, 'llm_starts': 2, 'llm_ends': 1, 'llm_streams': 0, 'tool_starts': 0, 'tool_ends': 0, 'agent_ends': 0, 'prompts': 'Tell me a poem'}\n",

|

||||

"{'action': 'on_llm_end', 'token_usage_prompt_tokens': 24, 'token_usage_completion_tokens': 138, 'token_usage_total_tokens': 162, 'model_name': 'text-davinci-003', 'step': 4, 'starts': 2, 'ends': 2, 'errors': 0, 'text_ctr': 0, 'chain_starts': 0, 'chain_ends': 0, 'llm_starts': 2, 'llm_ends': 2, 'llm_streams': 0, 'tool_starts': 0, 'tool_ends': 0, 'agent_ends': 0, 'text': '\\n\\nQ: What did the fish say when it hit the wall?\\nA: Dam!', 'generation_info_finish_reason': 'stop', 'generation_info_logprobs': None, 'flesch_reading_ease': 109.04, 'flesch_kincaid_grade': 1.3, 'smog_index': 0.0, 'coleman_liau_index': -1.24, 'automated_readability_index': 0.3, 'dale_chall_readability_score': 5.5, 'difficult_words': 0, 'linsear_write_formula': 5.5, 'gunning_fog': 5.2, 'text_standard': '5th and 6th grade', 'fernandez_huerta': 133.58, 'szigriszt_pazos': 131.54, 'gutierrez_polini': 62.3, 'crawford': -0.2, 'gulpease_index': 79.8, 'osman': 116.91}\n",

|

||||

"{'action': 'on_llm_end', 'token_usage_prompt_tokens': 24, 'token_usage_completion_tokens': 138, 'token_usage_total_tokens': 162, 'model_name': 'text-davinci-003', 'step': 4, 'starts': 2, 'ends': 2, 'errors': 0, 'text_ctr': 0, 'chain_starts': 0, 'chain_ends': 0, 'llm_starts': 2, 'llm_ends': 2, 'llm_streams': 0, 'tool_starts': 0, 'tool_ends': 0, 'agent_ends': 0, 'text': '\\n\\nRoses are red,\\nViolets are blue,\\nSugar is sweet,\\nAnd so are you.', 'generation_info_finish_reason': 'stop', 'generation_info_logprobs': None, 'flesch_reading_ease': 83.66, 'flesch_kincaid_grade': 4.8, 'smog_index': 0.0, 'coleman_liau_index': 3.23, 'automated_readability_index': 3.9, 'dale_chall_readability_score': 6.71, 'difficult_words': 2, 'linsear_write_formula': 6.5, 'gunning_fog': 8.28, 'text_standard': '6th and 7th grade', 'fernandez_huerta': 115.58, 'szigriszt_pazos': 112.37, 'gutierrez_polini': 54.83, 'crawford': 1.4, 'gulpease_index': 72.1, 'osman': 100.17}\n",

|

||||

"{'action': 'on_llm_end', 'token_usage_prompt_tokens': 24, 'token_usage_completion_tokens': 138, 'token_usage_total_tokens': 162, 'model_name': 'text-davinci-003', 'step': 4, 'starts': 2, 'ends': 2, 'errors': 0, 'text_ctr': 0, 'chain_starts': 0, 'chain_ends': 0, 'llm_starts': 2, 'llm_ends': 2, 'llm_streams': 0, 'tool_starts': 0, 'tool_ends': 0, 'agent_ends': 0, 'text': '\\n\\nQ: What did the fish say when it hit the wall?\\nA: Dam!', 'generation_info_finish_reason': 'stop', 'generation_info_logprobs': None, 'flesch_reading_ease': 109.04, 'flesch_kincaid_grade': 1.3, 'smog_index': 0.0, 'coleman_liau_index': -1.24, 'automated_readability_index': 0.3, 'dale_chall_readability_score': 5.5, 'difficult_words': 0, 'linsear_write_formula': 5.5, 'gunning_fog': 5.2, 'text_standard': '5th and 6th grade', 'fernandez_huerta': 133.58, 'szigriszt_pazos': 131.54, 'gutierrez_polini': 62.3, 'crawford': -0.2, 'gulpease_index': 79.8, 'osman': 116.91}\n",

|

||||

"{'action': 'on_llm_end', 'token_usage_prompt_tokens': 24, 'token_usage_completion_tokens': 138, 'token_usage_total_tokens': 162, 'model_name': 'text-davinci-003', 'step': 4, 'starts': 2, 'ends': 2, 'errors': 0, 'text_ctr': 0, 'chain_starts': 0, 'chain_ends': 0, 'llm_starts': 2, 'llm_ends': 2, 'llm_streams': 0, 'tool_starts': 0, 'tool_ends': 0, 'agent_ends': 0, 'text': '\\n\\nRoses are red,\\nViolets are blue,\\nSugar is sweet,\\nAnd so are you.', 'generation_info_finish_reason': 'stop', 'generation_info_logprobs': None, 'flesch_reading_ease': 83.66, 'flesch_kincaid_grade': 4.8, 'smog_index': 0.0, 'coleman_liau_index': 3.23, 'automated_readability_index': 3.9, 'dale_chall_readability_score': 6.71, 'difficult_words': 2, 'linsear_write_formula': 6.5, 'gunning_fog': 8.28, 'text_standard': '6th and 7th grade', 'fernandez_huerta': 115.58, 'szigriszt_pazos': 112.37, 'gutierrez_polini': 54.83, 'crawford': 1.4, 'gulpease_index': 72.1, 'osman': 100.17}\n",

|

||||

"{'action': 'on_llm_end', 'token_usage_prompt_tokens': 24, 'token_usage_completion_tokens': 138, 'token_usage_total_tokens': 162, 'model_name': 'text-davinci-003', 'step': 4, 'starts': 2, 'ends': 2, 'errors': 0, 'text_ctr': 0, 'chain_starts': 0, 'chain_ends': 0, 'llm_starts': 2, 'llm_ends': 2, 'llm_streams': 0, 'tool_starts': 0, 'tool_ends': 0, 'agent_ends': 0, 'text': '\\n\\nQ: What did the fish say when it hit the wall?\\nA: Dam!', 'generation_info_finish_reason': 'stop', 'generation_info_logprobs': None, 'flesch_reading_ease': 109.04, 'flesch_kincaid_grade': 1.3, 'smog_index': 0.0, 'coleman_liau_index': -1.24, 'automated_readability_index': 0.3, 'dale_chall_readability_score': 5.5, 'difficult_words': 0, 'linsear_write_formula': 5.5, 'gunning_fog': 5.2, 'text_standard': '5th and 6th grade', 'fernandez_huerta': 133.58, 'szigriszt_pazos': 131.54, 'gutierrez_polini': 62.3, 'crawford': -0.2, 'gulpease_index': 79.8, 'osman': 116.91}\n",

|

||||

"{'action': 'on_llm_end', 'token_usage_prompt_tokens': 24, 'token_usage_completion_tokens': 138, 'token_usage_total_tokens': 162, 'model_name': 'text-davinci-003', 'step': 4, 'starts': 2, 'ends': 2, 'errors': 0, 'text_ctr': 0, 'chain_starts': 0, 'chain_ends': 0, 'llm_starts': 2, 'llm_ends': 2, 'llm_streams': 0, 'tool_starts': 0, 'tool_ends': 0, 'agent_ends': 0, 'text': '\\n\\nRoses are red,\\nViolets are blue,\\nSugar is sweet,\\nAnd so are you.', 'generation_info_finish_reason': 'stop', 'generation_info_logprobs': None, 'flesch_reading_ease': 83.66, 'flesch_kincaid_grade': 4.8, 'smog_index': 0.0, 'coleman_liau_index': 3.23, 'automated_readability_index': 3.9, 'dale_chall_readability_score': 6.71, 'difficult_words': 2, 'linsear_write_formula': 6.5, 'gunning_fog': 8.28, 'text_standard': '6th and 7th grade', 'fernandez_huerta': 115.58, 'szigriszt_pazos': 112.37, 'gutierrez_polini': 54.83, 'crawford': 1.4, 'gulpease_index': 72.1, 'osman': 100.17}\n",

|

||||

"{'action_records': action name step starts ends errors text_ctr chain_starts \\\n",

|

||||

"0 on_llm_start OpenAI 1 1 0 0 0 0 \n",

|

||||

"1 on_llm_start OpenAI 1 1 0 0 0 0 \n",

|

||||

"2 on_llm_start OpenAI 1 1 0 0 0 0 \n",

|

||||

"3 on_llm_start OpenAI 1 1 0 0 0 0 \n",

|

||||

"4 on_llm_start OpenAI 1 1 0 0 0 0 \n",

|

||||

"5 on_llm_start OpenAI 1 1 0 0 0 0 \n",

|

||||

"6 on_llm_end NaN 2 1 1 0 0 0 \n",

|

||||

"7 on_llm_end NaN 2 1 1 0 0 0 \n",

|

||||

"8 on_llm_end NaN 2 1 1 0 0 0 \n",

|

||||

"9 on_llm_end NaN 2 1 1 0 0 0 \n",

|

||||

"10 on_llm_end NaN 2 1 1 0 0 0 \n",

|

||||

"11 on_llm_end NaN 2 1 1 0 0 0 \n",

|

||||

"12 on_llm_start OpenAI 3 2 1 0 0 0 \n",

|

||||

"13 on_llm_start OpenAI 3 2 1 0 0 0 \n",

|

||||

"14 on_llm_start OpenAI 3 2 1 0 0 0 \n",

|

||||

"15 on_llm_start OpenAI 3 2 1 0 0 0 \n",

|

||||

"16 on_llm_start OpenAI 3 2 1 0 0 0 \n",

|

||||

"17 on_llm_start OpenAI 3 2 1 0 0 0 \n",

|

||||

"18 on_llm_end NaN 4 2 2 0 0 0 \n",

|

||||

"19 on_llm_end NaN 4 2 2 0 0 0 \n",

|

||||

"20 on_llm_end NaN 4 2 2 0 0 0 \n",

|

||||

"21 on_llm_end NaN 4 2 2 0 0 0 \n",

|

||||

"22 on_llm_end NaN 4 2 2 0 0 0 \n",

|

||||

"23 on_llm_end NaN 4 2 2 0 0 0 \n",

|

||||

"\n",

|

||||

" chain_ends llm_starts ... difficult_words linsear_write_formula \\\n",

|

||||

"0 0 1 ... NaN NaN \n",

|

||||

"1 0 1 ... NaN NaN \n",

|

||||

"2 0 1 ... NaN NaN \n",

|

||||

"3 0 1 ... NaN NaN \n",

|

||||

"4 0 1 ... NaN NaN \n",

|

||||

"5 0 1 ... NaN NaN \n",

|

||||

"6 0 1 ... 0.0 5.5 \n",

|

||||

"7 0 1 ... 2.0 6.5 \n",

|

||||

"8 0 1 ... 0.0 5.5 \n",

|

||||

"9 0 1 ... 2.0 6.5 \n",

|

||||

"10 0 1 ... 0.0 5.5 \n",

|

||||

"11 0 1 ... 2.0 6.5 \n",

|

||||

"12 0 2 ... NaN NaN \n",

|

||||

"13 0 2 ... NaN NaN \n",

|

||||

"14 0 2 ... NaN NaN \n",

|

||||

"15 0 2 ... NaN NaN \n",

|

||||

"16 0 2 ... NaN NaN \n",

|

||||

"17 0 2 ... NaN NaN \n",

|

||||

"18 0 2 ... 0.0 5.5 \n",

|

||||

"19 0 2 ... 2.0 6.5 \n",

|

||||

"20 0 2 ... 0.0 5.5 \n",

|

||||

"21 0 2 ... 2.0 6.5 \n",

|

||||

"22 0 2 ... 0.0 5.5 \n",

|

||||

"23 0 2 ... 2.0 6.5 \n",

|

||||

"\n",

|

||||

" gunning_fog text_standard fernandez_huerta szigriszt_pazos \\\n",

|

||||

"0 NaN NaN NaN NaN \n",

|

||||

"1 NaN NaN NaN NaN \n",

|

||||

"2 NaN NaN NaN NaN \n",

|

||||

"3 NaN NaN NaN NaN \n",

|

||||

"4 NaN NaN NaN NaN \n",

|

||||

"5 NaN NaN NaN NaN \n",

|

||||

"6 5.20 5th and 6th grade 133.58 131.54 \n",

|

||||

"7 8.28 6th and 7th grade 115.58 112.37 \n",

|

||||

"8 5.20 5th and 6th grade 133.58 131.54 \n",

|

||||

"9 8.28 6th and 7th grade 115.58 112.37 \n",

|

||||

"10 5.20 5th and 6th grade 133.58 131.54 \n",

|

||||

"11 8.28 6th and 7th grade 115.58 112.37 \n",

|

||||

"12 NaN NaN NaN NaN \n",

|

||||

"13 NaN NaN NaN NaN \n",

|

||||

"14 NaN NaN NaN NaN \n",

|

||||

"15 NaN NaN NaN NaN \n",

|

||||

"16 NaN NaN NaN NaN \n",

|

||||

"17 NaN NaN NaN NaN \n",

|

||||

"18 5.20 5th and 6th grade 133.58 131.54 \n",

|

||||

"19 8.28 6th and 7th grade 115.58 112.37 \n",

|

||||

"20 5.20 5th and 6th grade 133.58 131.54 \n",

|

||||

"21 8.28 6th and 7th grade 115.58 112.37 \n",

|

||||

"22 5.20 5th and 6th grade 133.58 131.54 \n",

|

||||

"23 8.28 6th and 7th grade 115.58 112.37 \n",

|

||||

"\n",

|

||||

" gutierrez_polini crawford gulpease_index osman \n",

|

||||

"0 NaN NaN NaN NaN \n",

|

||||

"1 NaN NaN NaN NaN \n",

|

||||

"2 NaN NaN NaN NaN \n",

|

||||

"3 NaN NaN NaN NaN \n",

|

||||

"4 NaN NaN NaN NaN \n",

|

||||

"5 NaN NaN NaN NaN \n",

|

||||

"6 62.30 -0.2 79.8 116.91 \n",

|

||||

"7 54.83 1.4 72.1 100.17 \n",

|

||||

"8 62.30 -0.2 79.8 116.91 \n",

|

||||

"9 54.83 1.4 72.1 100.17 \n",

|

||||

"10 62.30 -0.2 79.8 116.91 \n",

|

||||

"11 54.83 1.4 72.1 100.17 \n",

|

||||

"12 NaN NaN NaN NaN \n",

|

||||

"13 NaN NaN NaN NaN \n",

|

||||

"14 NaN NaN NaN NaN \n",

|

||||

"15 NaN NaN NaN NaN \n",

|

||||

"16 NaN NaN NaN NaN \n",

|

||||

"17 NaN NaN NaN NaN \n",

|

||||

"18 62.30 -0.2 79.8 116.91 \n",

|

||||

"19 54.83 1.4 72.1 100.17 \n",

|

||||

"20 62.30 -0.2 79.8 116.91 \n",

|

||||

"21 54.83 1.4 72.1 100.17 \n",

|

||||

"22 62.30 -0.2 79.8 116.91 \n",

|

||||

"23 54.83 1.4 72.1 100.17 \n",

|

||||

"\n",

|

||||

"[24 rows x 39 columns], 'session_analysis': prompt_step prompts name output_step \\\n",

|

||||

"0 1 Tell me a joke OpenAI 2 \n",

|

||||

"1 1 Tell me a poem OpenAI 2 \n",

|

||||

"2 1 Tell me a joke OpenAI 2 \n",

|

||||

"3 1 Tell me a poem OpenAI 2 \n",

|

||||

"4 1 Tell me a joke OpenAI 2 \n",

|

||||

"5 1 Tell me a poem OpenAI 2 \n",

|

||||

"6 3 Tell me a joke OpenAI 4 \n",

|

||||

"7 3 Tell me a poem OpenAI 4 \n",

|

||||

"8 3 Tell me a joke OpenAI 4 \n",

|

||||

"9 3 Tell me a poem OpenAI 4 \n",

|

||||

"10 3 Tell me a joke OpenAI 4 \n",

|

||||

"11 3 Tell me a poem OpenAI 4 \n",

|

||||

"\n",

|

||||

" output \\\n",

|

||||

"0 \\n\\nQ: What did the fish say when it hit the w... \n",

|

||||

"1 \\n\\nRoses are red,\\nViolets are blue,\\nSugar i... \n",

|

||||

"2 \\n\\nQ: What did the fish say when it hit the w... \n",

|

||||

"3 \\n\\nRoses are red,\\nViolets are blue,\\nSugar i... \n",

|

||||

"4 \\n\\nQ: What did the fish say when it hit the w... \n",

|

||||

"5 \\n\\nRoses are red,\\nViolets are blue,\\nSugar i... \n",

|

||||

"6 \\n\\nQ: What did the fish say when it hit the w... \n",

|

||||

"7 \\n\\nRoses are red,\\nViolets are blue,\\nSugar i... \n",

|

||||

"8 \\n\\nQ: What did the fish say when it hit the w... \n",

|

||||

"9 \\n\\nRoses are red,\\nViolets are blue,\\nSugar i... \n",

|

||||

"10 \\n\\nQ: What did the fish say when it hit the w... \n",

|

||||

"11 \\n\\nRoses are red,\\nViolets are blue,\\nSugar i... \n",

|

||||

"\n",

|

||||

" token_usage_total_tokens token_usage_prompt_tokens \\\n",

|

||||

"0 162 24 \n",

|

||||

"1 162 24 \n",

|

||||

"2 162 24 \n",

|

||||

"3 162 24 \n",

|

||||

"4 162 24 \n",

|

||||

"5 162 24 \n",

|

||||

"6 162 24 \n",

|

||||

"7 162 24 \n",

|

||||

"8 162 24 \n",

|

||||

"9 162 24 \n",

|

||||

"10 162 24 \n",

|

||||

"11 162 24 \n",

|

||||

"\n",

|

||||

" token_usage_completion_tokens flesch_reading_ease flesch_kincaid_grade \\\n",

|

||||

"0 138 109.04 1.3 \n",

|

||||

"1 138 83.66 4.8 \n",

|

||||

"2 138 109.04 1.3 \n",

|

||||

"3 138 83.66 4.8 \n",

|

||||

"4 138 109.04 1.3 \n",

|

||||

"5 138 83.66 4.8 \n",

|

||||

"6 138 109.04 1.3 \n",

|

||||

"7 138 83.66 4.8 \n",

|

||||

"8 138 109.04 1.3 \n",

|

||||

"9 138 83.66 4.8 \n",

|

||||

"10 138 109.04 1.3 \n",

|

||||

"11 138 83.66 4.8 \n",

|

||||

"\n",

|

||||

" ... difficult_words linsear_write_formula gunning_fog \\\n",

|

||||

"0 ... 0 5.5 5.20 \n",

|

||||

"1 ... 2 6.5 8.28 \n",

|

||||

"2 ... 0 5.5 5.20 \n",

|

||||

"3 ... 2 6.5 8.28 \n",

|

||||

"4 ... 0 5.5 5.20 \n",

|

||||

"5 ... 2 6.5 8.28 \n",

|

||||

"6 ... 0 5.5 5.20 \n",

|

||||

"7 ... 2 6.5 8.28 \n",

|

||||

"8 ... 0 5.5 5.20 \n",

|

||||

"9 ... 2 6.5 8.28 \n",

|

||||

"10 ... 0 5.5 5.20 \n",

|

||||

"11 ... 2 6.5 8.28 \n",

|

||||

"\n",

|

||||

" text_standard fernandez_huerta szigriszt_pazos gutierrez_polini \\\n",

|

||||

"0 5th and 6th grade 133.58 131.54 62.30 \n",

|

||||

"1 6th and 7th grade 115.58 112.37 54.83 \n",

|

||||

"2 5th and 6th grade 133.58 131.54 62.30 \n",

|

||||

"3 6th and 7th grade 115.58 112.37 54.83 \n",

|

||||

"4 5th and 6th grade 133.58 131.54 62.30 \n",

|

||||

"5 6th and 7th grade 115.58 112.37 54.83 \n",

|

||||

"6 5th and 6th grade 133.58 131.54 62.30 \n",

|

||||

"7 6th and 7th grade 115.58 112.37 54.83 \n",

|

||||

"8 5th and 6th grade 133.58 131.54 62.30 \n",

|

||||

"9 6th and 7th grade 115.58 112.37 54.83 \n",

|

||||

"10 5th and 6th grade 133.58 131.54 62.30 \n",

|

||||

"11 6th and 7th grade 115.58 112.37 54.83 \n",

|

||||

"\n",

|

||||

" crawford gulpease_index osman \n",

|

||||

"0 -0.2 79.8 116.91 \n",

|

||||

"1 1.4 72.1 100.17 \n",

|

||||

"2 -0.2 79.8 116.91 \n",

|

||||

"3 1.4 72.1 100.17 \n",

|

||||

"4 -0.2 79.8 116.91 \n",

|

||||

"5 1.4 72.1 100.17 \n",

|

||||

"6 -0.2 79.8 116.91 \n",

|

||||

"7 1.4 72.1 100.17 \n",

|

||||

"8 -0.2 79.8 116.91 \n",

|

||||

"9 1.4 72.1 100.17 \n",

|

||||

"10 -0.2 79.8 116.91 \n",

|

||||

"11 1.4 72.1 100.17 \n",

|

||||

"\n",

|

||||

"[12 rows x 24 columns]}\n",

|

||||

"2023-03-29 14:00:25,948 - clearml.Task - INFO - Completed model upload to https://files.clear.ml/langchain_callback_demo/llm.988bd727b0e94a29a3ac0ee526813545/models/simple_sequential\n"

|

||||

]

|

||||

}

|

||||

],

|

||||

"source": [

|

||||

"# SCENARIO 1 - LLM\n",

|

||||

"llm_result = llm.generate([\"Tell me a joke\", \"Tell me a poem\"] * 3)\n",

|

||||

"# After every generation run, use flush to make sure all the metrics\n",

|

||||

"# prompts and other output are properly saved separately\n",

|

||||

"clearml_callback.flush_tracker(langchain_asset=llm, name=\"simple_sequential\")"

|

||||

]

|

||||

},

|

||||

{

|

||||

"attachments": {},

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"source": [

|

||||

"At this point you can already go to https://app.clear.ml and take a look at the resulting ClearML Task that was created.\n",

|

||||

"\n",

|

||||

"Among others, you should see that this notebook is saved along with any git information. The model JSON that contains the used parameters is saved as an artifact, there are also console logs and under the plots section, you'll find tables that represent the flow of the chain.\n",

|

||||

"\n",

|

||||

"Finally, if you enabled visualizations, these are stored as HTML files under debug samples."

|

||||

]

|

||||

},

|

||||

{

|

||||

"attachments": {},

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"source": [

|

||||

"# Scenario 2: Creating a agent with tools\n",

|

||||

"\n",

|

||||

"To show a more advanced workflow, let's create an agent with access to tools. The way ClearML tracks the results is not different though, only the table will look slightly different as there are other types of actions taken when compared to the earlier, simpler example.\n",

|

||||

"\n",

|

||||

"You can now also see the use of the `finish=True` keyword, which will fully close the ClearML Task, instead of just resetting the parameters and prompts for a new conversation."

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": 8,

|

||||

"metadata": {},

|

||||

"outputs": [

|

||||

{

|

||||

"name": "stdout",

|

||||

"output_type": "stream",

|

||||

"text": [

|

||||

"\n",

|

||||

"\n",

|

||||

"\u001b[1m> Entering new AgentExecutor chain...\u001b[0m\n",

|

||||

"{'action': 'on_chain_start', 'name': 'AgentExecutor', 'step': 1, 'starts': 1, 'ends': 0, 'errors': 0, 'text_ctr': 0, 'chain_starts': 1, 'chain_ends': 0, 'llm_starts': 0, 'llm_ends': 0, 'llm_streams': 0, 'tool_starts': 0, 'tool_ends': 0, 'agent_ends': 0, 'input': 'Who is the wife of the person who sang summer of 69?'}\n",

|

||||

"{'action': 'on_llm_start', 'name': 'OpenAI', 'step': 2, 'starts': 2, 'ends': 0, 'errors': 0, 'text_ctr': 0, 'chain_starts': 1, 'chain_ends': 0, 'llm_starts': 1, 'llm_ends': 0, 'llm_streams': 0, 'tool_starts': 0, 'tool_ends': 0, 'agent_ends': 0, 'prompts': 'Answer the following questions as best you can. You have access to the following tools:\\n\\nSearch: A search engine. Useful for when you need to answer questions about current events. Input should be a search query.\\nCalculator: Useful for when you need to answer questions about math.\\n\\nUse the following format:\\n\\nQuestion: the input question you must answer\\nThought: you should always think about what to do\\nAction: the action to take, should be one of [Search, Calculator]\\nAction Input: the input to the action\\nObservation: the result of the action\\n... (this Thought/Action/Action Input/Observation can repeat N times)\\nThought: I now know the final answer\\nFinal Answer: the final answer to the original input question\\n\\nBegin!\\n\\nQuestion: Who is the wife of the person who sang summer of 69?\\nThought:'}\n",

|

||||

"{'action': 'on_llm_end', 'token_usage_prompt_tokens': 189, 'token_usage_completion_tokens': 34, 'token_usage_total_tokens': 223, 'model_name': 'text-davinci-003', 'step': 3, 'starts': 2, 'ends': 1, 'errors': 0, 'text_ctr': 0, 'chain_starts': 1, 'chain_ends': 0, 'llm_starts': 1, 'llm_ends': 1, 'llm_streams': 0, 'tool_starts': 0, 'tool_ends': 0, 'agent_ends': 0, 'text': ' I need to find out who sang summer of 69 and then find out who their wife is.\\nAction: Search\\nAction Input: \"Who sang summer of 69\"', 'generation_info_finish_reason': 'stop', 'generation_info_logprobs': None, 'flesch_reading_ease': 91.61, 'flesch_kincaid_grade': 3.8, 'smog_index': 0.0, 'coleman_liau_index': 3.41, 'automated_readability_index': 3.5, 'dale_chall_readability_score': 6.06, 'difficult_words': 2, 'linsear_write_formula': 5.75, 'gunning_fog': 5.4, 'text_standard': '3rd and 4th grade', 'fernandez_huerta': 121.07, 'szigriszt_pazos': 119.5, 'gutierrez_polini': 54.91, 'crawford': 0.9, 'gulpease_index': 72.7, 'osman': 92.16}\n",

|

||||

"\u001b[32;1m\u001b[1;3m I need to find out who sang summer of 69 and then find out who their wife is.\n",

|

||||

"Action: Search\n",

|

||||

"Action Input: \"Who sang summer of 69\"\u001b[0m{'action': 'on_agent_action', 'tool': 'Search', 'tool_input': 'Who sang summer of 69', 'log': ' I need to find out who sang summer of 69 and then find out who their wife is.\\nAction: Search\\nAction Input: \"Who sang summer of 69\"', 'step': 4, 'starts': 3, 'ends': 1, 'errors': 0, 'text_ctr': 0, 'chain_starts': 1, 'chain_ends': 0, 'llm_starts': 1, 'llm_ends': 1, 'llm_streams': 0, 'tool_starts': 1, 'tool_ends': 0, 'agent_ends': 0}\n",

|

||||

"{'action': 'on_tool_start', 'input_str': 'Who sang summer of 69', 'name': 'Search', 'description': 'A search engine. Useful for when you need to answer questions about current events. Input should be a search query.', 'step': 5, 'starts': 4, 'ends': 1, 'errors': 0, 'text_ctr': 0, 'chain_starts': 1, 'chain_ends': 0, 'llm_starts': 1, 'llm_ends': 1, 'llm_streams': 0, 'tool_starts': 2, 'tool_ends': 0, 'agent_ends': 0}\n",

|

||||

"\n",

|

||||

"Observation: \u001b[36;1m\u001b[1;3mBryan Adams - Summer Of 69 (Official Music Video).\u001b[0m\n",

|

||||

"Thought:{'action': 'on_tool_end', 'output': 'Bryan Adams - Summer Of 69 (Official Music Video).', 'step': 6, 'starts': 4, 'ends': 2, 'errors': 0, 'text_ctr': 0, 'chain_starts': 1, 'chain_ends': 0, 'llm_starts': 1, 'llm_ends': 1, 'llm_streams': 0, 'tool_starts': 2, 'tool_ends': 1, 'agent_ends': 0}\n",

|

||||

"{'action': 'on_llm_start', 'name': 'OpenAI', 'step': 7, 'starts': 5, 'ends': 2, 'errors': 0, 'text_ctr': 0, 'chain_starts': 1, 'chain_ends': 0, 'llm_starts': 2, 'llm_ends': 1, 'llm_streams': 0, 'tool_starts': 2, 'tool_ends': 1, 'agent_ends': 0, 'prompts': 'Answer the following questions as best you can. You have access to the following tools:\\n\\nSearch: A search engine. Useful for when you need to answer questions about current events. Input should be a search query.\\nCalculator: Useful for when you need to answer questions about math.\\n\\nUse the following format:\\n\\nQuestion: the input question you must answer\\nThought: you should always think about what to do\\nAction: the action to take, should be one of [Search, Calculator]\\nAction Input: the input to the action\\nObservation: the result of the action\\n... (this Thought/Action/Action Input/Observation can repeat N times)\\nThought: I now know the final answer\\nFinal Answer: the final answer to the original input question\\n\\nBegin!\\n\\nQuestion: Who is the wife of the person who sang summer of 69?\\nThought: I need to find out who sang summer of 69 and then find out who their wife is.\\nAction: Search\\nAction Input: \"Who sang summer of 69\"\\nObservation: Bryan Adams - Summer Of 69 (Official Music Video).\\nThought:'}\n",

|

||||

"{'action': 'on_llm_end', 'token_usage_prompt_tokens': 242, 'token_usage_completion_tokens': 28, 'token_usage_total_tokens': 270, 'model_name': 'text-davinci-003', 'step': 8, 'starts': 5, 'ends': 3, 'errors': 0, 'text_ctr': 0, 'chain_starts': 1, 'chain_ends': 0, 'llm_starts': 2, 'llm_ends': 2, 'llm_streams': 0, 'tool_starts': 2, 'tool_ends': 1, 'agent_ends': 0, 'text': ' I need to find out who Bryan Adams is married to.\\nAction: Search\\nAction Input: \"Who is Bryan Adams married to\"', 'generation_info_finish_reason': 'stop', 'generation_info_logprobs': None, 'flesch_reading_ease': 94.66, 'flesch_kincaid_grade': 2.7, 'smog_index': 0.0, 'coleman_liau_index': 4.73, 'automated_readability_index': 4.0, 'dale_chall_readability_score': 7.16, 'difficult_words': 2, 'linsear_write_formula': 4.25, 'gunning_fog': 4.2, 'text_standard': '4th and 5th grade', 'fernandez_huerta': 124.13, 'szigriszt_pazos': 119.2, 'gutierrez_polini': 52.26, 'crawford': 0.7, 'gulpease_index': 74.7, 'osman': 84.2}\n",

|

||||

"\u001b[32;1m\u001b[1;3m I need to find out who Bryan Adams is married to.\n",

|

||||

"Action: Search\n",

|

||||

"Action Input: \"Who is Bryan Adams married to\"\u001b[0m{'action': 'on_agent_action', 'tool': 'Search', 'tool_input': 'Who is Bryan Adams married to', 'log': ' I need to find out who Bryan Adams is married to.\\nAction: Search\\nAction Input: \"Who is Bryan Adams married to\"', 'step': 9, 'starts': 6, 'ends': 3, 'errors': 0, 'text_ctr': 0, 'chain_starts': 1, 'chain_ends': 0, 'llm_starts': 2, 'llm_ends': 2, 'llm_streams': 0, 'tool_starts': 3, 'tool_ends': 1, 'agent_ends': 0}\n",

|

||||

"{'action': 'on_tool_start', 'input_str': 'Who is Bryan Adams married to', 'name': 'Search', 'description': 'A search engine. Useful for when you need to answer questions about current events. Input should be a search query.', 'step': 10, 'starts': 7, 'ends': 3, 'errors': 0, 'text_ctr': 0, 'chain_starts': 1, 'chain_ends': 0, 'llm_starts': 2, 'llm_ends': 2, 'llm_streams': 0, 'tool_starts': 4, 'tool_ends': 1, 'agent_ends': 0}\n",

|

||||

"\n",

|

||||

"Observation: \u001b[36;1m\u001b[1;3mBryan Adams has never married. In the 1990s, he was in a relationship with Danish model Cecilie Thomsen. In 2011, Bryan and Alicia Grimaldi, his ...\u001b[0m\n",

|

||||

"Thought:{'action': 'on_tool_end', 'output': 'Bryan Adams has never married. In the 1990s, he was in a relationship with Danish model Cecilie Thomsen. In 2011, Bryan and Alicia Grimaldi, his ...', 'step': 11, 'starts': 7, 'ends': 4, 'errors': 0, 'text_ctr': 0, 'chain_starts': 1, 'chain_ends': 0, 'llm_starts': 2, 'llm_ends': 2, 'llm_streams': 0, 'tool_starts': 4, 'tool_ends': 2, 'agent_ends': 0}\n",

|

||||

"{'action': 'on_llm_start', 'name': 'OpenAI', 'step': 12, 'starts': 8, 'ends': 4, 'errors': 0, 'text_ctr': 0, 'chain_starts': 1, 'chain_ends': 0, 'llm_starts': 3, 'llm_ends': 2, 'llm_streams': 0, 'tool_starts': 4, 'tool_ends': 2, 'agent_ends': 0, 'prompts': 'Answer the following questions as best you can. You have access to the following tools:\\n\\nSearch: A search engine. Useful for when you need to answer questions about current events. Input should be a search query.\\nCalculator: Useful for when you need to answer questions about math.\\n\\nUse the following format:\\n\\nQuestion: the input question you must answer\\nThought: you should always think about what to do\\nAction: the action to take, should be one of [Search, Calculator]\\nAction Input: the input to the action\\nObservation: the result of the action\\n... (this Thought/Action/Action Input/Observation can repeat N times)\\nThought: I now know the final answer\\nFinal Answer: the final answer to the original input question\\n\\nBegin!\\n\\nQuestion: Who is the wife of the person who sang summer of 69?\\nThought: I need to find out who sang summer of 69 and then find out who their wife is.\\nAction: Search\\nAction Input: \"Who sang summer of 69\"\\nObservation: Bryan Adams - Summer Of 69 (Official Music Video).\\nThought: I need to find out who Bryan Adams is married to.\\nAction: Search\\nAction Input: \"Who is Bryan Adams married to\"\\nObservation: Bryan Adams has never married. In the 1990s, he was in a relationship with Danish model Cecilie Thomsen. In 2011, Bryan and Alicia Grimaldi, his ...\\nThought:'}\n",

|

||||

"{'action': 'on_llm_end', 'token_usage_prompt_tokens': 314, 'token_usage_completion_tokens': 18, 'token_usage_total_tokens': 332, 'model_name': 'text-davinci-003', 'step': 13, 'starts': 8, 'ends': 5, 'errors': 0, 'text_ctr': 0, 'chain_starts': 1, 'chain_ends': 0, 'llm_starts': 3, 'llm_ends': 3, 'llm_streams': 0, 'tool_starts': 4, 'tool_ends': 2, 'agent_ends': 0, 'text': ' I now know the final answer.\\nFinal Answer: Bryan Adams has never been married.', 'generation_info_finish_reason': 'stop', 'generation_info_logprobs': None, 'flesch_reading_ease': 81.29, 'flesch_kincaid_grade': 3.7, 'smog_index': 0.0, 'coleman_liau_index': 5.75, 'automated_readability_index': 3.9, 'dale_chall_readability_score': 7.37, 'difficult_words': 1, 'linsear_write_formula': 2.5, 'gunning_fog': 2.8, 'text_standard': '3rd and 4th grade', 'fernandez_huerta': 115.7, 'szigriszt_pazos': 110.84, 'gutierrez_polini': 49.79, 'crawford': 0.7, 'gulpease_index': 85.4, 'osman': 83.14}\n",

|

||||

"\u001b[32;1m\u001b[1;3m I now know the final answer.\n",

|

||||

"Final Answer: Bryan Adams has never been married.\u001b[0m\n",

|

||||

"{'action': 'on_agent_finish', 'output': 'Bryan Adams has never been married.', 'log': ' I now know the final answer.\\nFinal Answer: Bryan Adams has never been married.', 'step': 14, 'starts': 8, 'ends': 6, 'errors': 0, 'text_ctr': 0, 'chain_starts': 1, 'chain_ends': 0, 'llm_starts': 3, 'llm_ends': 3, 'llm_streams': 0, 'tool_starts': 4, 'tool_ends': 2, 'agent_ends': 1}\n",

|

||||

"\n",

|

||||

"\u001b[1m> Finished chain.\u001b[0m\n",

|

||||

"{'action': 'on_chain_end', 'outputs': 'Bryan Adams has never been married.', 'step': 15, 'starts': 8, 'ends': 7, 'errors': 0, 'text_ctr': 0, 'chain_starts': 1, 'chain_ends': 1, 'llm_starts': 3, 'llm_ends': 3, 'llm_streams': 0, 'tool_starts': 4, 'tool_ends': 2, 'agent_ends': 1}\n",

|

||||

"{'action_records': action name step starts ends errors text_ctr \\\n",

|

||||

"0 on_llm_start OpenAI 1 1 0 0 0 \n",

|

||||

"1 on_llm_start OpenAI 1 1 0 0 0 \n",

|

||||

"2 on_llm_start OpenAI 1 1 0 0 0 \n",

|

||||

"3 on_llm_start OpenAI 1 1 0 0 0 \n",

|

||||

"4 on_llm_start OpenAI 1 1 0 0 0 \n",

|

||||

".. ... ... ... ... ... ... ... \n",

|

||||

"66 on_tool_end NaN 11 7 4 0 0 \n",

|

||||

"67 on_llm_start OpenAI 12 8 4 0 0 \n",

|

||||

"68 on_llm_end NaN 13 8 5 0 0 \n",

|

||||

"69 on_agent_finish NaN 14 8 6 0 0 \n",

|

||||

"70 on_chain_end NaN 15 8 7 0 0 \n",

|

||||

"\n",

|

||||

" chain_starts chain_ends llm_starts ... gulpease_index osman input \\\n",

|

||||

"0 0 0 1 ... NaN NaN NaN \n",

|

||||

"1 0 0 1 ... NaN NaN NaN \n",

|

||||

"2 0 0 1 ... NaN NaN NaN \n",

|

||||

"3 0 0 1 ... NaN NaN NaN \n",

|

||||

"4 0 0 1 ... NaN NaN NaN \n",

|

||||

".. ... ... ... ... ... ... ... \n",

|

||||

"66 1 0 2 ... NaN NaN NaN \n",

|

||||

"67 1 0 3 ... NaN NaN NaN \n",

|

||||

"68 1 0 3 ... 85.4 83.14 NaN \n",

|

||||

"69 1 0 3 ... NaN NaN NaN \n",

|

||||

"70 1 1 3 ... NaN NaN NaN \n",

|

||||

"\n",

|

||||

" tool tool_input log \\\n",

|

||||

"0 NaN NaN NaN \n",

|

||||

"1 NaN NaN NaN \n",

|

||||

"2 NaN NaN NaN \n",

|

||||

"3 NaN NaN NaN \n",

|

||||

"4 NaN NaN NaN \n",

|

||||

".. ... ... ... \n",

|

||||

"66 NaN NaN NaN \n",

|

||||

"67 NaN NaN NaN \n",

|

||||

"68 NaN NaN NaN \n",

|

||||

"69 NaN NaN I now know the final answer.\\nFinal Answer: B... \n",

|

||||

"70 NaN NaN NaN \n",

|

||||

"\n",

|

||||

" input_str description output \\\n",

|

||||

"0 NaN NaN NaN \n",

|

||||

"1 NaN NaN NaN \n",

|

||||

"2 NaN NaN NaN \n",

|

||||

"3 NaN NaN NaN \n",

|

||||

"4 NaN NaN NaN \n",

|

||||

".. ... ... ... \n",

|

||||

"66 NaN NaN Bryan Adams has never married. In the 1990s, h... \n",

|

||||

"67 NaN NaN NaN \n",

|

||||

"68 NaN NaN NaN \n",

|

||||

"69 NaN NaN Bryan Adams has never been married. \n",

|

||||

"70 NaN NaN NaN \n",

|

||||

"\n",

|

||||

" outputs \n",

|

||||

"0 NaN \n",

|

||||

"1 NaN \n",

|

||||

"2 NaN \n",

|

||||

"3 NaN \n",

|

||||

"4 NaN \n",

|

||||

".. ... \n",

|

||||

"66 NaN \n",

|

||||

"67 NaN \n",

|

||||

"68 NaN \n",

|

||||

"69 NaN \n",

|

||||

"70 Bryan Adams has never been married. \n",

|

||||

"\n",

|

||||

"[71 rows x 47 columns], 'session_analysis': prompt_step prompts name \\\n",

|

||||