mirror of

https://github.com/hwchase17/langchain.git

synced 2026-02-07 17:50:35 +00:00

Compare commits

2 Commits

v0.0.129

...

harrison/g

| Author | SHA1 | Date | |

|---|---|---|---|

|

|

e61e37a40a | ||

|

|

f267f59186 |

@@ -1,2 +0,0 @@

|

||||

.venv

|

||||

.github

|

||||

2

.github/CONTRIBUTING.md

vendored

2

.github/CONTRIBUTING.md

vendored

@@ -73,8 +73,6 @@ poetry install -E all

|

||||

|

||||

This will install all requirements for running the package, examples, linting, formatting, tests, and coverage. Note the `-E all` flag will install all optional dependencies necessary for integration testing.

|

||||

|

||||

❗Note: If you're running Poetry 1.4.1 and receive a `WheelFileValidationError` for `debugpy` during installation, you can try either downgrading to Poetry 1.4.0 or disabling "modern installation" (`poetry config installer.modern-installation false`) and re-install requirements. See [this `debugpy` issue](https://github.com/microsoft/debugpy/issues/1246) for more details.

|

||||

|

||||

Now, you should be able to run the common tasks in the following section.

|

||||

|

||||

## ✅Common Tasks

|

||||

|

||||

6

.gitignore

vendored

6

.gitignore

vendored

@@ -135,9 +135,3 @@ dmypy.json

|

||||

|

||||

# macOS display setting files

|

||||

.DS_Store

|

||||

|

||||

# Wandb directory

|

||||

wandb/

|

||||

|

||||

# asdf tool versions

|

||||

.tool-versions

|

||||

|

||||

39

Dockerfile

39

Dockerfile

@@ -1,39 +0,0 @@

|

||||

# Use the Python base image

|

||||

FROM python:3.11.2-bullseye AS builder

|

||||

|

||||

# Print Python version

|

||||

RUN echo "Python version:" && python --version && echo ""

|

||||

|

||||

# Install Poetry

|

||||

RUN echo "Installing Poetry..." && \

|

||||

curl -sSL https://raw.githubusercontent.com/python-poetry/poetry/master/install-poetry.py | python -

|

||||

|

||||

# Add Poetry to PATH

|

||||

ENV PATH="${PATH}:/root/.local/bin"

|

||||

|

||||

# Test if Poetry is added to PATH

|

||||

RUN echo "Poetry version:" && poetry --version && echo ""

|

||||

|

||||

# Set working directory

|

||||

WORKDIR /app

|

||||

|

||||

# Use a multi-stage build to install dependencies

|

||||

FROM builder AS dependencies

|

||||

|

||||

# Copy only the dependency files for installation

|

||||

COPY pyproject.toml poetry.lock poetry.toml ./

|

||||

|

||||

# Install Poetry dependencies (this layer will be cached as long as the dependencies don't change)

|

||||

RUN poetry install --no-interaction --no-ansi

|

||||

|

||||

# Use a multi-stage build to run tests

|

||||

FROM dependencies AS tests

|

||||

|

||||

# Copy the rest of the app source code (this layer will be invalidated and rebuilt whenever the source code changes)

|

||||

COPY . .

|

||||

|

||||

# Set entrypoint to run tests

|

||||

ENTRYPOINT ["poetry", "run", "pytest"]

|

||||

|

||||

# Set default command to run all unit tests

|

||||

CMD ["tests/unit_tests"]

|

||||

9

Makefile

9

Makefile

@@ -1,7 +1,7 @@

|

||||

.PHONY: all clean format lint test tests test_watch integration_tests docker_tests help

|

||||

.PHONY: all clean format lint test tests test_watch integration_tests help

|

||||

|

||||

all: help

|

||||

|

||||

|

||||

coverage:

|

||||

poetry run pytest --cov \

|

||||

--cov-config=.coveragerc \

|

||||

@@ -40,10 +40,6 @@ test_watch:

|

||||

integration_tests:

|

||||

poetry run pytest tests/integration_tests

|

||||

|

||||

docker_tests:

|

||||

docker build -t my-langchain-image:test .

|

||||

docker run --rm my-langchain-image:test

|

||||

|

||||

help:

|

||||

@echo '----'

|

||||

@echo 'coverage - run unit tests and generate coverage report'

|

||||

@@ -55,4 +51,3 @@ help:

|

||||

@echo 'test - run unit tests'

|

||||

@echo 'test_watch - run unit tests in watch mode'

|

||||

@echo 'integration_tests - run integration tests'

|

||||

@echo 'docker_tests - run unit tests in docker'

|

||||

|

||||

@@ -32,7 +32,7 @@ This library is aimed at assisting in the development of those types of applicat

|

||||

|

||||

**🤖 Agents**

|

||||

|

||||

- [Documentation](https://langchain.readthedocs.io/en/latest/modules/agents.html)

|

||||

- [Documentation](https://langchain.readthedocs.io/en/latest/use_cases/agents.html)

|

||||

- End-to-end Example: [GPT+WolframAlpha](https://huggingface.co/spaces/JavaFXpert/Chat-GPT-LangChain)

|

||||

|

||||

## 📖 Documentation

|

||||

|

||||

BIN

docs/_static/ApifyActors.png

vendored

BIN

docs/_static/ApifyActors.png

vendored

Binary file not shown.

|

Before Width: | Height: | Size: 559 KiB |

@@ -23,7 +23,7 @@ with open("../pyproject.toml") as f:

|

||||

# -- Project information -----------------------------------------------------

|

||||

|

||||

project = "🦜🔗 LangChain"

|

||||

copyright = "2023, Harrison Chase"

|

||||

copyright = "2022, Harrison Chase"

|

||||

author = "Harrison Chase"

|

||||

|

||||

version = data["tool"]["poetry"]["version"]

|

||||

|

||||

@@ -37,6 +37,3 @@ A minimal example on how to run LangChain on Vercel using Flask.

|

||||

## [SteamShip](https://github.com/steamship-core/steamship-langchain/)

|

||||

This repository contains LangChain adapters for Steamship, enabling LangChain developers to rapidly deploy their apps on Steamship.

|

||||

This includes: production ready endpoints, horizontal scaling across dependencies, persistant storage of app state, multi-tenancy support, etc.

|

||||

|

||||

## [Langchain-serve](https://github.com/jina-ai/langchain-serve)

|

||||

This repository allows users to serve local chains and agents as RESTful, gRPC, or Websocket APIs thanks to [Jina](https://docs.jina.ai/). Deploy your chains & agents with ease and enjoy independent scaling, serverless and autoscaling APIs, as well as a Streamlit playground on Jina AI Cloud.

|

||||

|

||||

@@ -1,292 +0,0 @@

|

||||

{

|

||||

"cells": [

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"source": [

|

||||

"# Aim\n",

|

||||

"\n",

|

||||

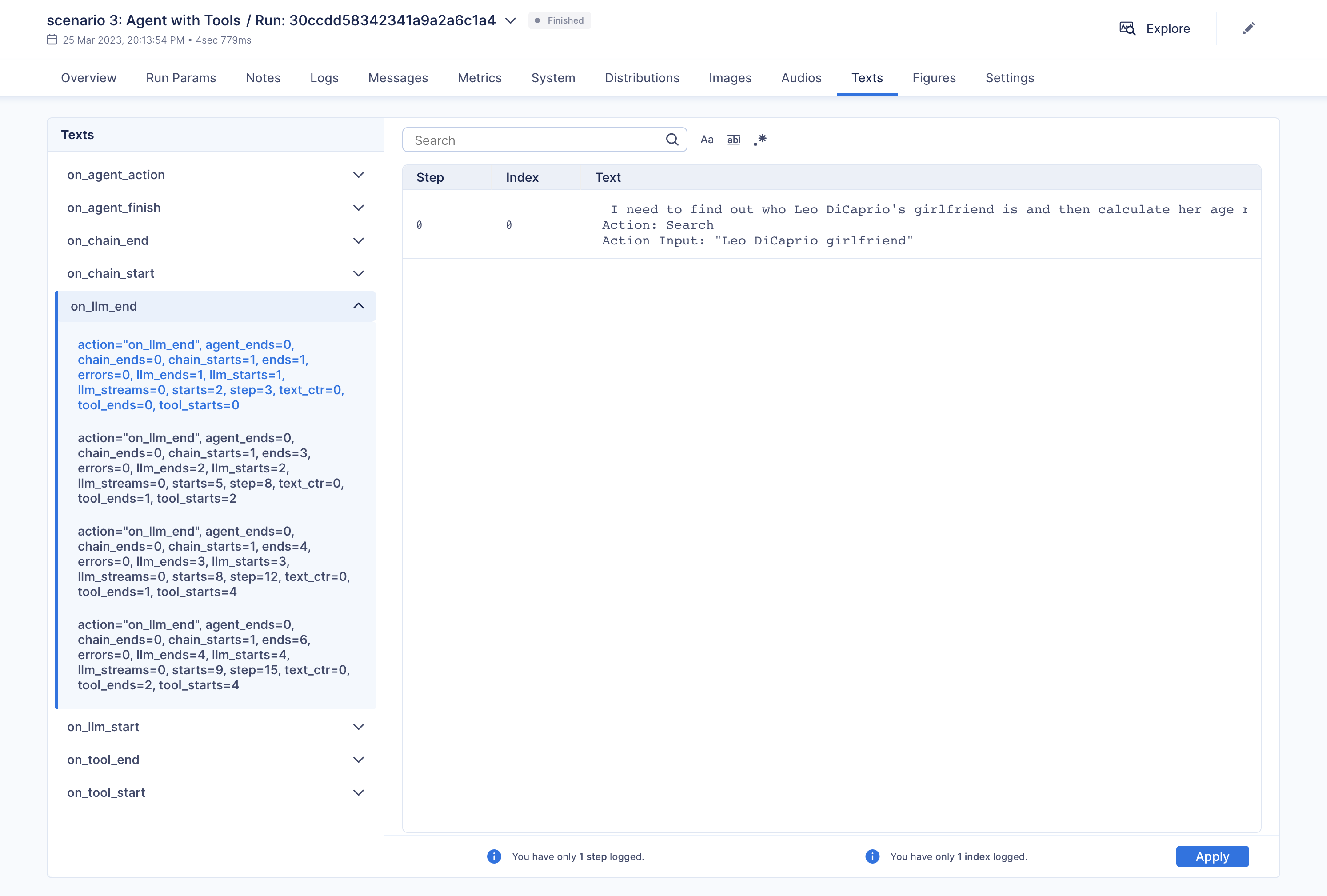

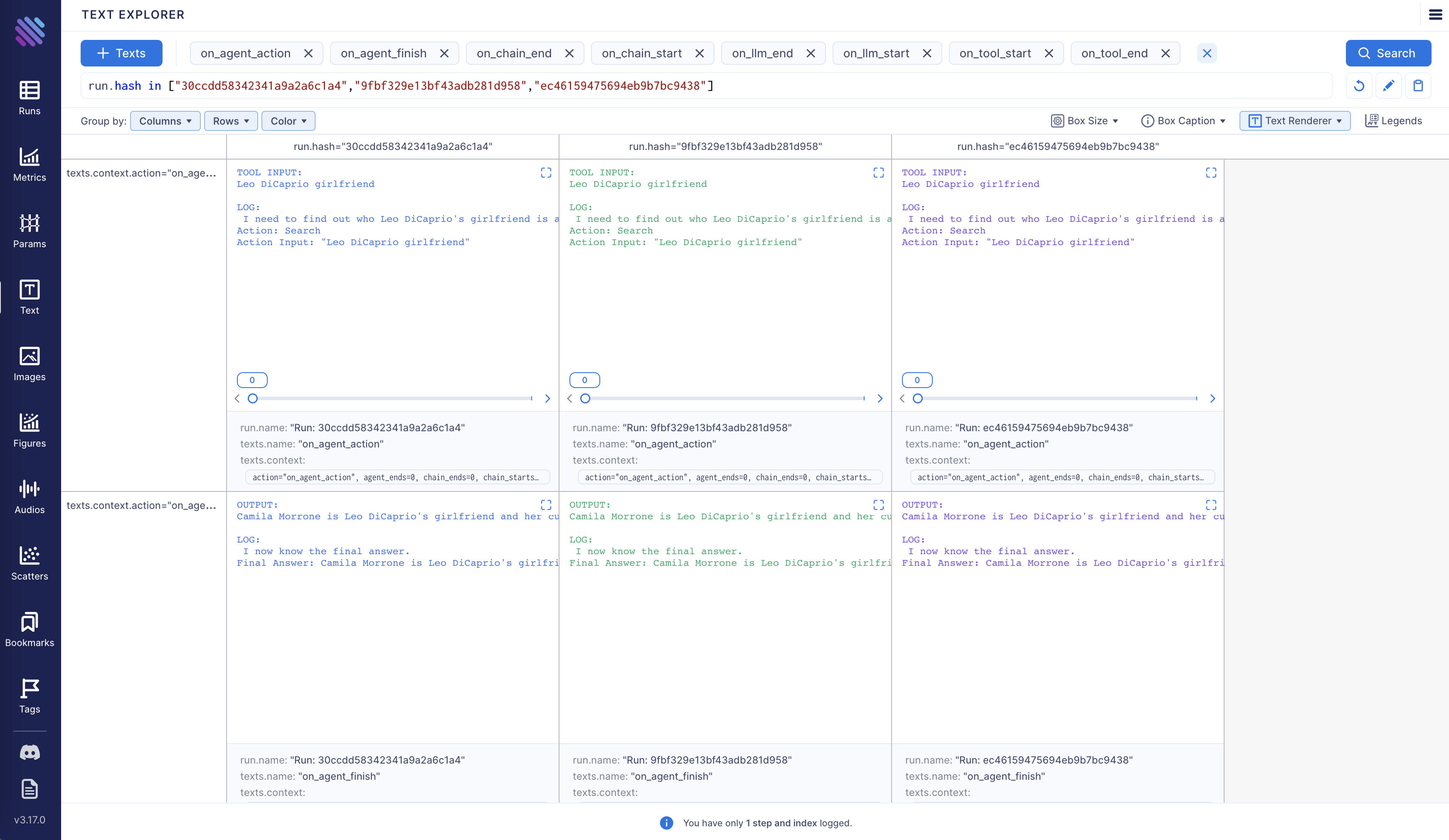

"Aim makes it super easy to visualize and debug LangChain executions. Aim tracks inputs and outputs of LLMs and tools, as well as actions of agents. \n",

|

||||

"\n",

|

||||

"With Aim, you can easily debug and examine an individual execution:\n",

|

||||

"\n",

|

||||

"\n",

|

||||

"\n",

|

||||

"Additionally, you have the option to compare multiple executions side by side:\n",

|

||||

"\n",

|

||||

"\n",

|

||||

"\n",

|

||||

"Aim is fully open source, [learn more](https://github.com/aimhubio/aim) about Aim on GitHub.\n",

|

||||

"\n",

|

||||

"Let's move forward and see how to enable and configure Aim callback."

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"source": [

|

||||

"<h3>Tracking LangChain Executions with Aim</h3>"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"source": [

|

||||

"In this notebook we will explore three usage scenarios. To start off, we will install the necessary packages and import certain modules. Subsequently, we will configure two environment variables that can be established either within the Python script or through the terminal."

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": null,

|

||||

"metadata": {

|

||||

"id": "mf88kuCJhbVu"

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

"!pip install aim\n",

|

||||

"!pip install langchain\n",

|

||||

"!pip install openai\n",

|

||||

"!pip install google-search-results"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": null,

|

||||

"metadata": {

|

||||

"id": "g4eTuajwfl6L"

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

"import os\n",

|

||||

"from datetime import datetime\n",

|

||||

"\n",

|

||||

"from langchain.llms import OpenAI\n",

|

||||

"from langchain.callbacks.base import CallbackManager\n",

|

||||

"from langchain.callbacks import AimCallbackHandler, StdOutCallbackHandler"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"source": [

|

||||

"Our examples use a GPT model as the LLM, and OpenAI offers an API for this purpose. You can obtain the key from the following link: https://platform.openai.com/account/api-keys .\n",

|

||||

"\n",

|

||||

"We will use the SerpApi to retrieve search results from Google. To acquire the SerpApi key, please go to https://serpapi.com/manage-api-key ."

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": null,

|

||||

"metadata": {

|

||||

"id": "T1bSmKd6V2If"

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

"os.environ[\"OPENAI_API_KEY\"] = \"...\"\n",

|

||||

"os.environ[\"SERPAPI_API_KEY\"] = \"...\""

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {

|

||||

"id": "QenUYuBZjIzc"

|

||||

},

|

||||

"source": [

|

||||

"The event methods of `AimCallbackHandler` accept the LangChain module or agent as input and log at least the prompts and generated results, as well as the serialized version of the LangChain module, to the designated Aim run."

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": null,

|

||||

"metadata": {

|

||||

"id": "KAz8weWuUeXF"

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

"session_group = datetime.now().strftime(\"%m.%d.%Y_%H.%M.%S\")\n",

|

||||

"aim_callback = AimCallbackHandler(\n",

|

||||

" repo=\".\",\n",

|

||||

" experiment_name=\"scenario 1: OpenAI LLM\",\n",

|

||||

")\n",

|

||||

"\n",

|

||||

"manager = CallbackManager([StdOutCallbackHandler(), aim_callback])\n",

|

||||

"llm = OpenAI(temperature=0, callback_manager=manager, verbose=True)"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {

|

||||

"id": "b8WfByB4fl6N"

|

||||

},

|

||||

"source": [

|

||||

"The `flush_tracker` function is used to record LangChain assets on Aim. By default, the session is reset rather than being terminated outright."

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"source": [

|

||||

"<h3>Scenario 1</h3> In the first scenario, we will use OpenAI LLM."

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": null,

|

||||

"metadata": {

|

||||

"id": "o_VmneyIUyx8"

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

"# scenario 1 - LLM\n",

|

||||

"llm_result = llm.generate([\"Tell me a joke\", \"Tell me a poem\"] * 3)\n",

|

||||

"aim_callback.flush_tracker(\n",

|

||||

" langchain_asset=llm,\n",

|

||||

" experiment_name=\"scenario 2: Chain with multiple SubChains on multiple generations\",\n",

|

||||

")\n"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"source": [

|

||||

"<h3>Scenario 2</h3> Scenario two involves chaining with multiple SubChains across multiple generations."

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": null,

|

||||

"metadata": {

|

||||

"id": "trxslyb1U28Y"

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

"from langchain.prompts import PromptTemplate\n",

|

||||

"from langchain.chains import LLMChain"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": null,

|

||||

"metadata": {

|

||||

"id": "uauQk10SUzF6"

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

"# scenario 2 - Chain\n",

|

||||

"template = \"\"\"You are a playwright. Given the title of play, it is your job to write a synopsis for that title.\n",

|

||||

"Title: {title}\n",

|

||||

"Playwright: This is a synopsis for the above play:\"\"\"\n",

|

||||

"prompt_template = PromptTemplate(input_variables=[\"title\"], template=template)\n",

|

||||

"synopsis_chain = LLMChain(llm=llm, prompt=prompt_template, callback_manager=manager)\n",

|

||||

"\n",

|

||||

"test_prompts = [\n",

|

||||

" {\"title\": \"documentary about good video games that push the boundary of game design\"},\n",

|

||||

" {\"title\": \"the phenomenon behind the remarkable speed of cheetahs\"},\n",

|

||||

" {\"title\": \"the best in class mlops tooling\"},\n",

|

||||

"]\n",

|

||||

"synopsis_chain.apply(test_prompts)\n",

|

||||

"aim_callback.flush_tracker(\n",

|

||||

" langchain_asset=synopsis_chain, experiment_name=\"scenario 3: Agent with Tools\"\n",

|

||||

")"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"source": [

|

||||

"<h3>Scenario 3</h3> The third scenario involves an agent with tools."

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": null,

|

||||

"metadata": {

|

||||

"id": "_jN73xcPVEpI"

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

"from langchain.agents import initialize_agent, load_tools"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": null,

|

||||

"metadata": {

|

||||

"colab": {

|

||||

"base_uri": "https://localhost:8080/"

|

||||

},

|

||||

"id": "Gpq4rk6VT9cu",

|

||||

"outputId": "68ae261e-d0a2-4229-83c4-762562263b66"

|

||||

},

|

||||

"outputs": [

|

||||

{

|

||||

"name": "stdout",

|

||||

"output_type": "stream",

|

||||

"text": [

|

||||

"\n",

|

||||

"\n",

|

||||

"\u001b[1m> Entering new AgentExecutor chain...\u001b[0m\n",

|

||||

"\u001b[32;1m\u001b[1;3m I need to find out who Leo DiCaprio's girlfriend is and then calculate her age raised to the 0.43 power.\n",

|

||||

"Action: Search\n",

|

||||

"Action Input: \"Leo DiCaprio girlfriend\"\u001b[0m\n",

|

||||

"Observation: \u001b[36;1m\u001b[1;3mLeonardo DiCaprio seemed to prove a long-held theory about his love life right after splitting from girlfriend Camila Morrone just months ...\u001b[0m\n",

|

||||

"Thought:\u001b[32;1m\u001b[1;3m I need to find out Camila Morrone's age\n",

|

||||

"Action: Search\n",

|

||||

"Action Input: \"Camila Morrone age\"\u001b[0m\n",

|

||||

"Observation: \u001b[36;1m\u001b[1;3m25 years\u001b[0m\n",

|

||||

"Thought:\u001b[32;1m\u001b[1;3m I need to calculate 25 raised to the 0.43 power\n",

|

||||

"Action: Calculator\n",

|

||||

"Action Input: 25^0.43\u001b[0m\n",

|

||||

"Observation: \u001b[33;1m\u001b[1;3mAnswer: 3.991298452658078\n",

|

||||

"\u001b[0m\n",

|

||||

"Thought:\u001b[32;1m\u001b[1;3m I now know the final answer\n",

|

||||

"Final Answer: Camila Morrone is Leo DiCaprio's girlfriend and her current age raised to the 0.43 power is 3.991298452658078.\u001b[0m\n",

|

||||

"\n",

|

||||

"\u001b[1m> Finished chain.\u001b[0m\n"

|

||||

]

|

||||

}

|

||||

],

|

||||

"source": [

|

||||

"# scenario 3 - Agent with Tools\n",

|

||||

"tools = load_tools([\"serpapi\", \"llm-math\"], llm=llm, callback_manager=manager)\n",

|

||||

"agent = initialize_agent(\n",

|

||||

" tools,\n",

|

||||

" llm,\n",

|

||||

" agent=\"zero-shot-react-description\",\n",

|

||||

" callback_manager=manager,\n",

|

||||

" verbose=True,\n",

|

||||

")\n",

|

||||

"agent.run(\n",

|

||||

" \"Who is Leo DiCaprio's girlfriend? What is her current age raised to the 0.43 power?\"\n",

|

||||

")\n",

|

||||

"aim_callback.flush_tracker(langchain_asset=agent, reset=False, finish=True)"

|

||||

]

|

||||

}

|

||||

],

|

||||

"metadata": {

|

||||

"accelerator": "GPU",

|

||||

"colab": {

|

||||

"provenance": []

|

||||

},

|

||||

"gpuClass": "standard",

|

||||

"kernelspec": {

|

||||

"display_name": "Python 3 (ipykernel)",

|

||||

"language": "python",

|

||||

"name": "python3"

|

||||

},

|

||||

"language_info": {

|

||||

"codemirror_mode": {

|

||||

"name": "ipython",

|

||||

"version": 3

|

||||

},

|

||||

"file_extension": ".py",

|

||||

"mimetype": "text/x-python",

|

||||

"name": "python",

|

||||

"nbconvert_exporter": "python",

|

||||

"pygments_lexer": "ipython3",

|

||||

"version": "3.9.1"

|

||||

}

|

||||

},

|

||||

"nbformat": 4,

|

||||

"nbformat_minor": 1

|

||||

}

|

||||

@@ -1,46 +0,0 @@

|

||||

# Apify

|

||||

|

||||

This page covers how to use [Apify](https://apify.com) within LangChain.

|

||||

|

||||

## Overview

|

||||

|

||||

Apify is a cloud platform for web scraping and data extraction,

|

||||

which provides an [ecosystem](https://apify.com/store) of more than a thousand

|

||||

ready-made apps called *Actors* for various scraping, crawling, and extraction use cases.

|

||||

|

||||

[](https://apify.com/store)

|

||||

|

||||

This integration enables you run Actors on the Apify platform and load their results into LangChain to feed your vector

|

||||

indexes with documents and data from the web, e.g. to generate answers from websites with documentation,

|

||||

blogs, or knowledge bases.

|

||||

|

||||

|

||||

## Installation and Setup

|

||||

|

||||

- Install the Apify API client for Python with `pip install apify-client`

|

||||

- Get your [Apify API token](https://console.apify.com/account/integrations) and either set it as

|

||||

an environment variable (`APIFY_API_TOKEN`) or pass it to the `ApifyWrapper` as `apify_api_token` in the constructor.

|

||||

|

||||

|

||||

## Wrappers

|

||||

|

||||

### Utility

|

||||

|

||||

You can use the `ApifyWrapper` to run Actors on the Apify platform.

|

||||

|

||||

```python

|

||||

from langchain.utilities import ApifyWrapper

|

||||

```

|

||||

|

||||

For a more detailed walkthrough of this wrapper, see [this notebook](../modules/agents/tools/examples/apify.ipynb).

|

||||

|

||||

|

||||

### Loader

|

||||

|

||||

You can also use our `ApifyDatasetLoader` to get data from Apify dataset.

|

||||

|

||||

```python

|

||||

from langchain.document_loaders import ApifyDatasetLoader

|

||||

```

|

||||

|

||||

For a more detailed walkthrough of this loader, see [this notebook](../modules/indexes/document_loaders/examples/apify_dataset.ipynb).

|

||||

@@ -24,4 +24,4 @@ To import this vectorstore:

|

||||

from langchain.vectorstores import AtlasDB

|

||||

```

|

||||

|

||||

For a more detailed walkthrough of the AtlasDB wrapper, see [this notebook](../modules/indexes/vectorstores/examples/atlas.ipynb)

|

||||

For a more detailed walkthrough of the AtlasDB wrapper, see [this notebook](../modules/indexes/vectorstore_examples/atlas.ipynb)

|

||||

|

||||

@@ -17,4 +17,4 @@ To import this vectorstore:

|

||||

from langchain.vectorstores import Chroma

|

||||

```

|

||||

|

||||

For a more detailed walkthrough of the Chroma wrapper, see [this notebook](../modules/indexes/vectorstores/getting_started.ipynb)

|

||||

For a more detailed walkthrough of the Chroma wrapper, see [this notebook](../modules/indexes/examples/vectorstores.ipynb)

|

||||

|

||||

@@ -1,588 +0,0 @@

|

||||

{

|

||||

"cells": [

|

||||

{

|

||||

"attachments": {},

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"source": [

|

||||

"# ClearML Integration\n",

|

||||

"\n",

|

||||

"In order to properly keep track of your langchain experiments and their results, you can enable the ClearML integration. ClearML is an experiment manager that neatly tracks and organizes all your experiment runs.\n",

|

||||

"\n",

|

||||

"<a target=\"_blank\" href=\"https://colab.research.google.com/github/hwchase17/langchain/blob/master/docs/ecosystem/clearml_tracking.ipynb\">\n",

|

||||

" <img src=\"https://colab.research.google.com/assets/colab-badge.svg\" alt=\"Open In Colab\"/>\n",

|

||||

"</a>"

|

||||

]

|

||||

},

|

||||

{

|

||||

"attachments": {},

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"source": [

|

||||

"# Getting API Credentials\n",

|

||||

"\n",

|

||||

"We'll be using quite some APIs in this notebook, here is a list and where to get them:\n",

|

||||

"\n",

|

||||

"- ClearML: https://app.clear.ml/settings/workspace-configuration\n",

|

||||

"- OpenAI: https://platform.openai.com/account/api-keys\n",

|

||||

"- SerpAPI (google search): https://serpapi.com/dashboard"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": 2,

|

||||

"metadata": {},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

"import os\n",

|

||||

"os.environ[\"CLEARML_API_ACCESS_KEY\"] = \"\"\n",

|

||||

"os.environ[\"CLEARML_API_SECRET_KEY\"] = \"\"\n",

|

||||

"\n",

|

||||

"os.environ[\"OPENAI_API_KEY\"] = \"\"\n",

|

||||

"os.environ[\"SERPAPI_API_KEY\"] = \"\""

|

||||

]

|

||||

},

|

||||

{

|

||||

"attachments": {},

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"source": [

|

||||

"# Setting Up"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": null,

|

||||

"metadata": {},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

"!pip install clearml\n",

|

||||

"!pip install pandas\n",

|

||||

"!pip install textstat\n",

|

||||

"!pip install spacy\n",

|

||||

"!python -m spacy download en_core_web_sm"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": 3,

|

||||

"metadata": {},

|

||||

"outputs": [

|

||||

{

|

||||

"name": "stdout",

|

||||

"output_type": "stream",

|

||||

"text": [

|

||||

"The clearml callback is currently in beta and is subject to change based on updates to `langchain`. Please report any issues to https://github.com/allegroai/clearml/issues with the tag `langchain`.\n"

|

||||

]

|

||||

}

|

||||

],

|

||||

"source": [

|

||||

"from datetime import datetime\n",

|

||||

"from langchain.callbacks import ClearMLCallbackHandler, StdOutCallbackHandler\n",

|

||||

"from langchain.callbacks.base import CallbackManager\n",

|

||||

"from langchain.llms import OpenAI\n",

|

||||

"\n",

|

||||

"# Setup and use the ClearML Callback\n",

|

||||

"clearml_callback = ClearMLCallbackHandler(\n",

|

||||

" task_type=\"inference\",\n",

|

||||

" project_name=\"langchain_callback_demo\",\n",

|

||||

" task_name=\"llm\",\n",

|

||||

" tags=[\"test\"],\n",

|

||||

" # Change the following parameters based on the amount of detail you want tracked\n",

|

||||

" visualize=True,\n",

|

||||

" complexity_metrics=True,\n",

|

||||

" stream_logs=True\n",

|

||||

")\n",

|

||||

"manager = CallbackManager([StdOutCallbackHandler(), clearml_callback])\n",

|

||||

"# Get the OpenAI model ready to go\n",

|

||||

"llm = OpenAI(temperature=0, callback_manager=manager, verbose=True)"

|

||||

]

|

||||

},

|

||||

{

|

||||

"attachments": {},

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"source": [

|

||||

"# Scenario 1: Just an LLM\n",

|

||||

"\n",

|

||||

"First, let's just run a single LLM a few times and capture the resulting prompt-answer conversation in ClearML"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": 5,

|

||||

"metadata": {},

|

||||

"outputs": [

|

||||

{

|

||||

"name": "stdout",

|

||||

"output_type": "stream",

|

||||

"text": [

|

||||

"{'action': 'on_llm_start', 'name': 'OpenAI', 'step': 3, 'starts': 2, 'ends': 1, 'errors': 0, 'text_ctr': 0, 'chain_starts': 0, 'chain_ends': 0, 'llm_starts': 2, 'llm_ends': 1, 'llm_streams': 0, 'tool_starts': 0, 'tool_ends': 0, 'agent_ends': 0, 'prompts': 'Tell me a joke'}\n",

|

||||

"{'action': 'on_llm_start', 'name': 'OpenAI', 'step': 3, 'starts': 2, 'ends': 1, 'errors': 0, 'text_ctr': 0, 'chain_starts': 0, 'chain_ends': 0, 'llm_starts': 2, 'llm_ends': 1, 'llm_streams': 0, 'tool_starts': 0, 'tool_ends': 0, 'agent_ends': 0, 'prompts': 'Tell me a poem'}\n",

|

||||

"{'action': 'on_llm_start', 'name': 'OpenAI', 'step': 3, 'starts': 2, 'ends': 1, 'errors': 0, 'text_ctr': 0, 'chain_starts': 0, 'chain_ends': 0, 'llm_starts': 2, 'llm_ends': 1, 'llm_streams': 0, 'tool_starts': 0, 'tool_ends': 0, 'agent_ends': 0, 'prompts': 'Tell me a joke'}\n",

|

||||

"{'action': 'on_llm_start', 'name': 'OpenAI', 'step': 3, 'starts': 2, 'ends': 1, 'errors': 0, 'text_ctr': 0, 'chain_starts': 0, 'chain_ends': 0, 'llm_starts': 2, 'llm_ends': 1, 'llm_streams': 0, 'tool_starts': 0, 'tool_ends': 0, 'agent_ends': 0, 'prompts': 'Tell me a poem'}\n",

|

||||

"{'action': 'on_llm_start', 'name': 'OpenAI', 'step': 3, 'starts': 2, 'ends': 1, 'errors': 0, 'text_ctr': 0, 'chain_starts': 0, 'chain_ends': 0, 'llm_starts': 2, 'llm_ends': 1, 'llm_streams': 0, 'tool_starts': 0, 'tool_ends': 0, 'agent_ends': 0, 'prompts': 'Tell me a joke'}\n",

|

||||

"{'action': 'on_llm_start', 'name': 'OpenAI', 'step': 3, 'starts': 2, 'ends': 1, 'errors': 0, 'text_ctr': 0, 'chain_starts': 0, 'chain_ends': 0, 'llm_starts': 2, 'llm_ends': 1, 'llm_streams': 0, 'tool_starts': 0, 'tool_ends': 0, 'agent_ends': 0, 'prompts': 'Tell me a poem'}\n",

|

||||

"{'action': 'on_llm_end', 'token_usage_prompt_tokens': 24, 'token_usage_completion_tokens': 138, 'token_usage_total_tokens': 162, 'model_name': 'text-davinci-003', 'step': 4, 'starts': 2, 'ends': 2, 'errors': 0, 'text_ctr': 0, 'chain_starts': 0, 'chain_ends': 0, 'llm_starts': 2, 'llm_ends': 2, 'llm_streams': 0, 'tool_starts': 0, 'tool_ends': 0, 'agent_ends': 0, 'text': '\\n\\nQ: What did the fish say when it hit the wall?\\nA: Dam!', 'generation_info_finish_reason': 'stop', 'generation_info_logprobs': None, 'flesch_reading_ease': 109.04, 'flesch_kincaid_grade': 1.3, 'smog_index': 0.0, 'coleman_liau_index': -1.24, 'automated_readability_index': 0.3, 'dale_chall_readability_score': 5.5, 'difficult_words': 0, 'linsear_write_formula': 5.5, 'gunning_fog': 5.2, 'text_standard': '5th and 6th grade', 'fernandez_huerta': 133.58, 'szigriszt_pazos': 131.54, 'gutierrez_polini': 62.3, 'crawford': -0.2, 'gulpease_index': 79.8, 'osman': 116.91}\n",

|

||||

"{'action': 'on_llm_end', 'token_usage_prompt_tokens': 24, 'token_usage_completion_tokens': 138, 'token_usage_total_tokens': 162, 'model_name': 'text-davinci-003', 'step': 4, 'starts': 2, 'ends': 2, 'errors': 0, 'text_ctr': 0, 'chain_starts': 0, 'chain_ends': 0, 'llm_starts': 2, 'llm_ends': 2, 'llm_streams': 0, 'tool_starts': 0, 'tool_ends': 0, 'agent_ends': 0, 'text': '\\n\\nRoses are red,\\nViolets are blue,\\nSugar is sweet,\\nAnd so are you.', 'generation_info_finish_reason': 'stop', 'generation_info_logprobs': None, 'flesch_reading_ease': 83.66, 'flesch_kincaid_grade': 4.8, 'smog_index': 0.0, 'coleman_liau_index': 3.23, 'automated_readability_index': 3.9, 'dale_chall_readability_score': 6.71, 'difficult_words': 2, 'linsear_write_formula': 6.5, 'gunning_fog': 8.28, 'text_standard': '6th and 7th grade', 'fernandez_huerta': 115.58, 'szigriszt_pazos': 112.37, 'gutierrez_polini': 54.83, 'crawford': 1.4, 'gulpease_index': 72.1, 'osman': 100.17}\n",

|

||||

"{'action': 'on_llm_end', 'token_usage_prompt_tokens': 24, 'token_usage_completion_tokens': 138, 'token_usage_total_tokens': 162, 'model_name': 'text-davinci-003', 'step': 4, 'starts': 2, 'ends': 2, 'errors': 0, 'text_ctr': 0, 'chain_starts': 0, 'chain_ends': 0, 'llm_starts': 2, 'llm_ends': 2, 'llm_streams': 0, 'tool_starts': 0, 'tool_ends': 0, 'agent_ends': 0, 'text': '\\n\\nQ: What did the fish say when it hit the wall?\\nA: Dam!', 'generation_info_finish_reason': 'stop', 'generation_info_logprobs': None, 'flesch_reading_ease': 109.04, 'flesch_kincaid_grade': 1.3, 'smog_index': 0.0, 'coleman_liau_index': -1.24, 'automated_readability_index': 0.3, 'dale_chall_readability_score': 5.5, 'difficult_words': 0, 'linsear_write_formula': 5.5, 'gunning_fog': 5.2, 'text_standard': '5th and 6th grade', 'fernandez_huerta': 133.58, 'szigriszt_pazos': 131.54, 'gutierrez_polini': 62.3, 'crawford': -0.2, 'gulpease_index': 79.8, 'osman': 116.91}\n",

|

||||

"{'action': 'on_llm_end', 'token_usage_prompt_tokens': 24, 'token_usage_completion_tokens': 138, 'token_usage_total_tokens': 162, 'model_name': 'text-davinci-003', 'step': 4, 'starts': 2, 'ends': 2, 'errors': 0, 'text_ctr': 0, 'chain_starts': 0, 'chain_ends': 0, 'llm_starts': 2, 'llm_ends': 2, 'llm_streams': 0, 'tool_starts': 0, 'tool_ends': 0, 'agent_ends': 0, 'text': '\\n\\nRoses are red,\\nViolets are blue,\\nSugar is sweet,\\nAnd so are you.', 'generation_info_finish_reason': 'stop', 'generation_info_logprobs': None, 'flesch_reading_ease': 83.66, 'flesch_kincaid_grade': 4.8, 'smog_index': 0.0, 'coleman_liau_index': 3.23, 'automated_readability_index': 3.9, 'dale_chall_readability_score': 6.71, 'difficult_words': 2, 'linsear_write_formula': 6.5, 'gunning_fog': 8.28, 'text_standard': '6th and 7th grade', 'fernandez_huerta': 115.58, 'szigriszt_pazos': 112.37, 'gutierrez_polini': 54.83, 'crawford': 1.4, 'gulpease_index': 72.1, 'osman': 100.17}\n",

|

||||

"{'action': 'on_llm_end', 'token_usage_prompt_tokens': 24, 'token_usage_completion_tokens': 138, 'token_usage_total_tokens': 162, 'model_name': 'text-davinci-003', 'step': 4, 'starts': 2, 'ends': 2, 'errors': 0, 'text_ctr': 0, 'chain_starts': 0, 'chain_ends': 0, 'llm_starts': 2, 'llm_ends': 2, 'llm_streams': 0, 'tool_starts': 0, 'tool_ends': 0, 'agent_ends': 0, 'text': '\\n\\nQ: What did the fish say when it hit the wall?\\nA: Dam!', 'generation_info_finish_reason': 'stop', 'generation_info_logprobs': None, 'flesch_reading_ease': 109.04, 'flesch_kincaid_grade': 1.3, 'smog_index': 0.0, 'coleman_liau_index': -1.24, 'automated_readability_index': 0.3, 'dale_chall_readability_score': 5.5, 'difficult_words': 0, 'linsear_write_formula': 5.5, 'gunning_fog': 5.2, 'text_standard': '5th and 6th grade', 'fernandez_huerta': 133.58, 'szigriszt_pazos': 131.54, 'gutierrez_polini': 62.3, 'crawford': -0.2, 'gulpease_index': 79.8, 'osman': 116.91}\n",

|

||||

"{'action': 'on_llm_end', 'token_usage_prompt_tokens': 24, 'token_usage_completion_tokens': 138, 'token_usage_total_tokens': 162, 'model_name': 'text-davinci-003', 'step': 4, 'starts': 2, 'ends': 2, 'errors': 0, 'text_ctr': 0, 'chain_starts': 0, 'chain_ends': 0, 'llm_starts': 2, 'llm_ends': 2, 'llm_streams': 0, 'tool_starts': 0, 'tool_ends': 0, 'agent_ends': 0, 'text': '\\n\\nRoses are red,\\nViolets are blue,\\nSugar is sweet,\\nAnd so are you.', 'generation_info_finish_reason': 'stop', 'generation_info_logprobs': None, 'flesch_reading_ease': 83.66, 'flesch_kincaid_grade': 4.8, 'smog_index': 0.0, 'coleman_liau_index': 3.23, 'automated_readability_index': 3.9, 'dale_chall_readability_score': 6.71, 'difficult_words': 2, 'linsear_write_formula': 6.5, 'gunning_fog': 8.28, 'text_standard': '6th and 7th grade', 'fernandez_huerta': 115.58, 'szigriszt_pazos': 112.37, 'gutierrez_polini': 54.83, 'crawford': 1.4, 'gulpease_index': 72.1, 'osman': 100.17}\n",

|

||||

"{'action_records': action name step starts ends errors text_ctr chain_starts \\\n",

|

||||

"0 on_llm_start OpenAI 1 1 0 0 0 0 \n",

|

||||

"1 on_llm_start OpenAI 1 1 0 0 0 0 \n",

|

||||

"2 on_llm_start OpenAI 1 1 0 0 0 0 \n",

|

||||

"3 on_llm_start OpenAI 1 1 0 0 0 0 \n",

|

||||

"4 on_llm_start OpenAI 1 1 0 0 0 0 \n",

|

||||

"5 on_llm_start OpenAI 1 1 0 0 0 0 \n",

|

||||

"6 on_llm_end NaN 2 1 1 0 0 0 \n",

|

||||

"7 on_llm_end NaN 2 1 1 0 0 0 \n",

|

||||

"8 on_llm_end NaN 2 1 1 0 0 0 \n",

|

||||

"9 on_llm_end NaN 2 1 1 0 0 0 \n",

|

||||

"10 on_llm_end NaN 2 1 1 0 0 0 \n",

|

||||

"11 on_llm_end NaN 2 1 1 0 0 0 \n",

|

||||

"12 on_llm_start OpenAI 3 2 1 0 0 0 \n",

|

||||

"13 on_llm_start OpenAI 3 2 1 0 0 0 \n",

|

||||

"14 on_llm_start OpenAI 3 2 1 0 0 0 \n",

|

||||

"15 on_llm_start OpenAI 3 2 1 0 0 0 \n",

|

||||

"16 on_llm_start OpenAI 3 2 1 0 0 0 \n",

|

||||

"17 on_llm_start OpenAI 3 2 1 0 0 0 \n",

|

||||

"18 on_llm_end NaN 4 2 2 0 0 0 \n",

|

||||

"19 on_llm_end NaN 4 2 2 0 0 0 \n",

|

||||

"20 on_llm_end NaN 4 2 2 0 0 0 \n",

|

||||

"21 on_llm_end NaN 4 2 2 0 0 0 \n",

|

||||

"22 on_llm_end NaN 4 2 2 0 0 0 \n",

|

||||

"23 on_llm_end NaN 4 2 2 0 0 0 \n",

|

||||

"\n",

|

||||

" chain_ends llm_starts ... difficult_words linsear_write_formula \\\n",

|

||||

"0 0 1 ... NaN NaN \n",

|

||||

"1 0 1 ... NaN NaN \n",

|

||||

"2 0 1 ... NaN NaN \n",

|

||||

"3 0 1 ... NaN NaN \n",

|

||||

"4 0 1 ... NaN NaN \n",

|

||||

"5 0 1 ... NaN NaN \n",

|

||||

"6 0 1 ... 0.0 5.5 \n",

|

||||

"7 0 1 ... 2.0 6.5 \n",

|

||||

"8 0 1 ... 0.0 5.5 \n",

|

||||

"9 0 1 ... 2.0 6.5 \n",

|

||||

"10 0 1 ... 0.0 5.5 \n",

|

||||

"11 0 1 ... 2.0 6.5 \n",

|

||||

"12 0 2 ... NaN NaN \n",

|

||||

"13 0 2 ... NaN NaN \n",

|

||||

"14 0 2 ... NaN NaN \n",

|

||||

"15 0 2 ... NaN NaN \n",

|

||||

"16 0 2 ... NaN NaN \n",

|

||||

"17 0 2 ... NaN NaN \n",

|

||||

"18 0 2 ... 0.0 5.5 \n",

|

||||

"19 0 2 ... 2.0 6.5 \n",

|

||||

"20 0 2 ... 0.0 5.5 \n",

|

||||

"21 0 2 ... 2.0 6.5 \n",

|

||||

"22 0 2 ... 0.0 5.5 \n",

|

||||

"23 0 2 ... 2.0 6.5 \n",

|

||||

"\n",

|

||||

" gunning_fog text_standard fernandez_huerta szigriszt_pazos \\\n",

|

||||

"0 NaN NaN NaN NaN \n",

|

||||

"1 NaN NaN NaN NaN \n",

|

||||

"2 NaN NaN NaN NaN \n",

|

||||

"3 NaN NaN NaN NaN \n",

|

||||

"4 NaN NaN NaN NaN \n",

|

||||

"5 NaN NaN NaN NaN \n",

|

||||

"6 5.20 5th and 6th grade 133.58 131.54 \n",

|

||||

"7 8.28 6th and 7th grade 115.58 112.37 \n",

|

||||

"8 5.20 5th and 6th grade 133.58 131.54 \n",

|

||||

"9 8.28 6th and 7th grade 115.58 112.37 \n",

|

||||

"10 5.20 5th and 6th grade 133.58 131.54 \n",

|

||||

"11 8.28 6th and 7th grade 115.58 112.37 \n",

|

||||

"12 NaN NaN NaN NaN \n",

|

||||

"13 NaN NaN NaN NaN \n",

|

||||

"14 NaN NaN NaN NaN \n",

|

||||

"15 NaN NaN NaN NaN \n",

|

||||

"16 NaN NaN NaN NaN \n",

|

||||

"17 NaN NaN NaN NaN \n",

|

||||

"18 5.20 5th and 6th grade 133.58 131.54 \n",

|

||||

"19 8.28 6th and 7th grade 115.58 112.37 \n",

|

||||

"20 5.20 5th and 6th grade 133.58 131.54 \n",

|

||||

"21 8.28 6th and 7th grade 115.58 112.37 \n",

|

||||

"22 5.20 5th and 6th grade 133.58 131.54 \n",

|

||||

"23 8.28 6th and 7th grade 115.58 112.37 \n",

|

||||

"\n",

|

||||

" gutierrez_polini crawford gulpease_index osman \n",

|

||||

"0 NaN NaN NaN NaN \n",

|

||||

"1 NaN NaN NaN NaN \n",

|

||||

"2 NaN NaN NaN NaN \n",

|

||||

"3 NaN NaN NaN NaN \n",

|

||||

"4 NaN NaN NaN NaN \n",

|

||||

"5 NaN NaN NaN NaN \n",

|

||||

"6 62.30 -0.2 79.8 116.91 \n",

|

||||

"7 54.83 1.4 72.1 100.17 \n",

|

||||

"8 62.30 -0.2 79.8 116.91 \n",

|

||||

"9 54.83 1.4 72.1 100.17 \n",

|

||||

"10 62.30 -0.2 79.8 116.91 \n",

|

||||

"11 54.83 1.4 72.1 100.17 \n",

|

||||

"12 NaN NaN NaN NaN \n",

|

||||

"13 NaN NaN NaN NaN \n",

|

||||

"14 NaN NaN NaN NaN \n",

|

||||

"15 NaN NaN NaN NaN \n",

|

||||

"16 NaN NaN NaN NaN \n",

|

||||

"17 NaN NaN NaN NaN \n",

|

||||

"18 62.30 -0.2 79.8 116.91 \n",

|

||||

"19 54.83 1.4 72.1 100.17 \n",

|

||||

"20 62.30 -0.2 79.8 116.91 \n",

|

||||

"21 54.83 1.4 72.1 100.17 \n",

|

||||

"22 62.30 -0.2 79.8 116.91 \n",

|

||||

"23 54.83 1.4 72.1 100.17 \n",

|

||||

"\n",

|

||||

"[24 rows x 39 columns], 'session_analysis': prompt_step prompts name output_step \\\n",

|

||||

"0 1 Tell me a joke OpenAI 2 \n",

|

||||

"1 1 Tell me a poem OpenAI 2 \n",

|

||||

"2 1 Tell me a joke OpenAI 2 \n",

|

||||

"3 1 Tell me a poem OpenAI 2 \n",

|

||||

"4 1 Tell me a joke OpenAI 2 \n",

|

||||

"5 1 Tell me a poem OpenAI 2 \n",

|

||||

"6 3 Tell me a joke OpenAI 4 \n",

|

||||

"7 3 Tell me a poem OpenAI 4 \n",

|

||||

"8 3 Tell me a joke OpenAI 4 \n",

|

||||

"9 3 Tell me a poem OpenAI 4 \n",

|

||||

"10 3 Tell me a joke OpenAI 4 \n",

|

||||

"11 3 Tell me a poem OpenAI 4 \n",

|

||||

"\n",

|

||||

" output \\\n",

|

||||

"0 \\n\\nQ: What did the fish say when it hit the w... \n",

|

||||

"1 \\n\\nRoses are red,\\nViolets are blue,\\nSugar i... \n",

|

||||

"2 \\n\\nQ: What did the fish say when it hit the w... \n",

|

||||

"3 \\n\\nRoses are red,\\nViolets are blue,\\nSugar i... \n",

|

||||

"4 \\n\\nQ: What did the fish say when it hit the w... \n",

|

||||

"5 \\n\\nRoses are red,\\nViolets are blue,\\nSugar i... \n",

|

||||

"6 \\n\\nQ: What did the fish say when it hit the w... \n",

|

||||

"7 \\n\\nRoses are red,\\nViolets are blue,\\nSugar i... \n",

|

||||

"8 \\n\\nQ: What did the fish say when it hit the w... \n",

|

||||

"9 \\n\\nRoses are red,\\nViolets are blue,\\nSugar i... \n",

|

||||

"10 \\n\\nQ: What did the fish say when it hit the w... \n",

|

||||

"11 \\n\\nRoses are red,\\nViolets are blue,\\nSugar i... \n",

|

||||

"\n",

|

||||

" token_usage_total_tokens token_usage_prompt_tokens \\\n",

|

||||

"0 162 24 \n",

|

||||

"1 162 24 \n",

|

||||

"2 162 24 \n",

|

||||

"3 162 24 \n",

|

||||

"4 162 24 \n",

|

||||

"5 162 24 \n",

|

||||

"6 162 24 \n",

|

||||

"7 162 24 \n",

|

||||

"8 162 24 \n",

|

||||

"9 162 24 \n",

|

||||

"10 162 24 \n",

|

||||

"11 162 24 \n",

|

||||

"\n",

|

||||

" token_usage_completion_tokens flesch_reading_ease flesch_kincaid_grade \\\n",

|

||||

"0 138 109.04 1.3 \n",

|

||||

"1 138 83.66 4.8 \n",

|

||||

"2 138 109.04 1.3 \n",

|

||||

"3 138 83.66 4.8 \n",

|

||||

"4 138 109.04 1.3 \n",

|

||||

"5 138 83.66 4.8 \n",

|

||||

"6 138 109.04 1.3 \n",

|

||||

"7 138 83.66 4.8 \n",

|

||||

"8 138 109.04 1.3 \n",

|

||||

"9 138 83.66 4.8 \n",

|

||||

"10 138 109.04 1.3 \n",

|

||||

"11 138 83.66 4.8 \n",

|

||||

"\n",

|

||||

" ... difficult_words linsear_write_formula gunning_fog \\\n",

|

||||

"0 ... 0 5.5 5.20 \n",

|

||||

"1 ... 2 6.5 8.28 \n",

|

||||

"2 ... 0 5.5 5.20 \n",

|

||||

"3 ... 2 6.5 8.28 \n",

|

||||

"4 ... 0 5.5 5.20 \n",

|

||||

"5 ... 2 6.5 8.28 \n",

|

||||

"6 ... 0 5.5 5.20 \n",

|

||||

"7 ... 2 6.5 8.28 \n",

|

||||

"8 ... 0 5.5 5.20 \n",

|

||||

"9 ... 2 6.5 8.28 \n",

|

||||

"10 ... 0 5.5 5.20 \n",

|

||||

"11 ... 2 6.5 8.28 \n",

|

||||

"\n",

|

||||

" text_standard fernandez_huerta szigriszt_pazos gutierrez_polini \\\n",

|

||||

"0 5th and 6th grade 133.58 131.54 62.30 \n",

|

||||

"1 6th and 7th grade 115.58 112.37 54.83 \n",

|

||||

"2 5th and 6th grade 133.58 131.54 62.30 \n",

|

||||

"3 6th and 7th grade 115.58 112.37 54.83 \n",

|

||||

"4 5th and 6th grade 133.58 131.54 62.30 \n",

|

||||

"5 6th and 7th grade 115.58 112.37 54.83 \n",

|

||||

"6 5th and 6th grade 133.58 131.54 62.30 \n",

|

||||

"7 6th and 7th grade 115.58 112.37 54.83 \n",

|

||||

"8 5th and 6th grade 133.58 131.54 62.30 \n",

|

||||

"9 6th and 7th grade 115.58 112.37 54.83 \n",

|

||||

"10 5th and 6th grade 133.58 131.54 62.30 \n",

|

||||

"11 6th and 7th grade 115.58 112.37 54.83 \n",

|

||||

"\n",

|

||||

" crawford gulpease_index osman \n",

|

||||

"0 -0.2 79.8 116.91 \n",

|

||||

"1 1.4 72.1 100.17 \n",

|

||||

"2 -0.2 79.8 116.91 \n",

|

||||

"3 1.4 72.1 100.17 \n",

|

||||

"4 -0.2 79.8 116.91 \n",

|

||||

"5 1.4 72.1 100.17 \n",

|

||||

"6 -0.2 79.8 116.91 \n",

|

||||

"7 1.4 72.1 100.17 \n",

|

||||

"8 -0.2 79.8 116.91 \n",

|

||||

"9 1.4 72.1 100.17 \n",

|

||||

"10 -0.2 79.8 116.91 \n",

|

||||

"11 1.4 72.1 100.17 \n",

|

||||

"\n",

|

||||

"[12 rows x 24 columns]}\n",

|

||||

"2023-03-29 14:00:25,948 - clearml.Task - INFO - Completed model upload to https://files.clear.ml/langchain_callback_demo/llm.988bd727b0e94a29a3ac0ee526813545/models/simple_sequential\n"

|

||||

]

|

||||

}

|

||||

],

|

||||

"source": [

|

||||

"# SCENARIO 1 - LLM\n",

|

||||

"llm_result = llm.generate([\"Tell me a joke\", \"Tell me a poem\"] * 3)\n",

|

||||

"# After every generation run, use flush to make sure all the metrics\n",

|

||||

"# prompts and other output are properly saved separately\n",

|

||||

"clearml_callback.flush_tracker(langchain_asset=llm, name=\"simple_sequential\")"

|

||||

]

|

||||

},

|

||||

{

|

||||

"attachments": {},

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"source": [

|

||||

"At this point you can already go to https://app.clear.ml and take a look at the resulting ClearML Task that was created.\n",

|

||||

"\n",

|

||||

"Among others, you should see that this notebook is saved along with any git information. The model JSON that contains the used parameters is saved as an artifact, there are also console logs and under the plots section, you'll find tables that represent the flow of the chain.\n",

|

||||

"\n",

|

||||

"Finally, if you enabled visualizations, these are stored as HTML files under debug samples."

|

||||

]

|

||||

},

|

||||

{

|

||||

"attachments": {},

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"source": [

|

||||

"# Scenario 2: Creating a agent with tools\n",

|

||||

"\n",

|

||||

"To show a more advanced workflow, let's create an agent with access to tools. The way ClearML tracks the results is not different though, only the table will look slightly different as there are other types of actions taken when compared to the earlier, simpler example.\n",

|

||||

"\n",

|

||||

"You can now also see the use of the `finish=True` keyword, which will fully close the ClearML Task, instead of just resetting the parameters and prompts for a new conversation."

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": 8,

|

||||

"metadata": {},

|

||||

"outputs": [

|

||||

{

|

||||

"name": "stdout",

|

||||

"output_type": "stream",

|

||||

"text": [

|

||||

"\n",

|

||||

"\n",

|

||||

"\u001b[1m> Entering new AgentExecutor chain...\u001b[0m\n",

|

||||

"{'action': 'on_chain_start', 'name': 'AgentExecutor', 'step': 1, 'starts': 1, 'ends': 0, 'errors': 0, 'text_ctr': 0, 'chain_starts': 1, 'chain_ends': 0, 'llm_starts': 0, 'llm_ends': 0, 'llm_streams': 0, 'tool_starts': 0, 'tool_ends': 0, 'agent_ends': 0, 'input': 'Who is the wife of the person who sang summer of 69?'}\n",

|

||||

"{'action': 'on_llm_start', 'name': 'OpenAI', 'step': 2, 'starts': 2, 'ends': 0, 'errors': 0, 'text_ctr': 0, 'chain_starts': 1, 'chain_ends': 0, 'llm_starts': 1, 'llm_ends': 0, 'llm_streams': 0, 'tool_starts': 0, 'tool_ends': 0, 'agent_ends': 0, 'prompts': 'Answer the following questions as best you can. You have access to the following tools:\\n\\nSearch: A search engine. Useful for when you need to answer questions about current events. Input should be a search query.\\nCalculator: Useful for when you need to answer questions about math.\\n\\nUse the following format:\\n\\nQuestion: the input question you must answer\\nThought: you should always think about what to do\\nAction: the action to take, should be one of [Search, Calculator]\\nAction Input: the input to the action\\nObservation: the result of the action\\n... (this Thought/Action/Action Input/Observation can repeat N times)\\nThought: I now know the final answer\\nFinal Answer: the final answer to the original input question\\n\\nBegin!\\n\\nQuestion: Who is the wife of the person who sang summer of 69?\\nThought:'}\n",

|

||||

"{'action': 'on_llm_end', 'token_usage_prompt_tokens': 189, 'token_usage_completion_tokens': 34, 'token_usage_total_tokens': 223, 'model_name': 'text-davinci-003', 'step': 3, 'starts': 2, 'ends': 1, 'errors': 0, 'text_ctr': 0, 'chain_starts': 1, 'chain_ends': 0, 'llm_starts': 1, 'llm_ends': 1, 'llm_streams': 0, 'tool_starts': 0, 'tool_ends': 0, 'agent_ends': 0, 'text': ' I need to find out who sang summer of 69 and then find out who their wife is.\\nAction: Search\\nAction Input: \"Who sang summer of 69\"', 'generation_info_finish_reason': 'stop', 'generation_info_logprobs': None, 'flesch_reading_ease': 91.61, 'flesch_kincaid_grade': 3.8, 'smog_index': 0.0, 'coleman_liau_index': 3.41, 'automated_readability_index': 3.5, 'dale_chall_readability_score': 6.06, 'difficult_words': 2, 'linsear_write_formula': 5.75, 'gunning_fog': 5.4, 'text_standard': '3rd and 4th grade', 'fernandez_huerta': 121.07, 'szigriszt_pazos': 119.5, 'gutierrez_polini': 54.91, 'crawford': 0.9, 'gulpease_index': 72.7, 'osman': 92.16}\n",

|

||||

"\u001b[32;1m\u001b[1;3m I need to find out who sang summer of 69 and then find out who their wife is.\n",

|

||||

"Action: Search\n",

|

||||

"Action Input: \"Who sang summer of 69\"\u001b[0m{'action': 'on_agent_action', 'tool': 'Search', 'tool_input': 'Who sang summer of 69', 'log': ' I need to find out who sang summer of 69 and then find out who their wife is.\\nAction: Search\\nAction Input: \"Who sang summer of 69\"', 'step': 4, 'starts': 3, 'ends': 1, 'errors': 0, 'text_ctr': 0, 'chain_starts': 1, 'chain_ends': 0, 'llm_starts': 1, 'llm_ends': 1, 'llm_streams': 0, 'tool_starts': 1, 'tool_ends': 0, 'agent_ends': 0}\n",

|

||||

"{'action': 'on_tool_start', 'input_str': 'Who sang summer of 69', 'name': 'Search', 'description': 'A search engine. Useful for when you need to answer questions about current events. Input should be a search query.', 'step': 5, 'starts': 4, 'ends': 1, 'errors': 0, 'text_ctr': 0, 'chain_starts': 1, 'chain_ends': 0, 'llm_starts': 1, 'llm_ends': 1, 'llm_streams': 0, 'tool_starts': 2, 'tool_ends': 0, 'agent_ends': 0}\n",

|

||||

"\n",

|

||||

"Observation: \u001b[36;1m\u001b[1;3mBryan Adams - Summer Of 69 (Official Music Video).\u001b[0m\n",

|

||||

"Thought:{'action': 'on_tool_end', 'output': 'Bryan Adams - Summer Of 69 (Official Music Video).', 'step': 6, 'starts': 4, 'ends': 2, 'errors': 0, 'text_ctr': 0, 'chain_starts': 1, 'chain_ends': 0, 'llm_starts': 1, 'llm_ends': 1, 'llm_streams': 0, 'tool_starts': 2, 'tool_ends': 1, 'agent_ends': 0}\n",

|

||||

"{'action': 'on_llm_start', 'name': 'OpenAI', 'step': 7, 'starts': 5, 'ends': 2, 'errors': 0, 'text_ctr': 0, 'chain_starts': 1, 'chain_ends': 0, 'llm_starts': 2, 'llm_ends': 1, 'llm_streams': 0, 'tool_starts': 2, 'tool_ends': 1, 'agent_ends': 0, 'prompts': 'Answer the following questions as best you can. You have access to the following tools:\\n\\nSearch: A search engine. Useful for when you need to answer questions about current events. Input should be a search query.\\nCalculator: Useful for when you need to answer questions about math.\\n\\nUse the following format:\\n\\nQuestion: the input question you must answer\\nThought: you should always think about what to do\\nAction: the action to take, should be one of [Search, Calculator]\\nAction Input: the input to the action\\nObservation: the result of the action\\n... (this Thought/Action/Action Input/Observation can repeat N times)\\nThought: I now know the final answer\\nFinal Answer: the final answer to the original input question\\n\\nBegin!\\n\\nQuestion: Who is the wife of the person who sang summer of 69?\\nThought: I need to find out who sang summer of 69 and then find out who their wife is.\\nAction: Search\\nAction Input: \"Who sang summer of 69\"\\nObservation: Bryan Adams - Summer Of 69 (Official Music Video).\\nThought:'}\n",

|

||||

"{'action': 'on_llm_end', 'token_usage_prompt_tokens': 242, 'token_usage_completion_tokens': 28, 'token_usage_total_tokens': 270, 'model_name': 'text-davinci-003', 'step': 8, 'starts': 5, 'ends': 3, 'errors': 0, 'text_ctr': 0, 'chain_starts': 1, 'chain_ends': 0, 'llm_starts': 2, 'llm_ends': 2, 'llm_streams': 0, 'tool_starts': 2, 'tool_ends': 1, 'agent_ends': 0, 'text': ' I need to find out who Bryan Adams is married to.\\nAction: Search\\nAction Input: \"Who is Bryan Adams married to\"', 'generation_info_finish_reason': 'stop', 'generation_info_logprobs': None, 'flesch_reading_ease': 94.66, 'flesch_kincaid_grade': 2.7, 'smog_index': 0.0, 'coleman_liau_index': 4.73, 'automated_readability_index': 4.0, 'dale_chall_readability_score': 7.16, 'difficult_words': 2, 'linsear_write_formula': 4.25, 'gunning_fog': 4.2, 'text_standard': '4th and 5th grade', 'fernandez_huerta': 124.13, 'szigriszt_pazos': 119.2, 'gutierrez_polini': 52.26, 'crawford': 0.7, 'gulpease_index': 74.7, 'osman': 84.2}\n",

|

||||

"\u001b[32;1m\u001b[1;3m I need to find out who Bryan Adams is married to.\n",

|

||||

"Action: Search\n",

|

||||

"Action Input: \"Who is Bryan Adams married to\"\u001b[0m{'action': 'on_agent_action', 'tool': 'Search', 'tool_input': 'Who is Bryan Adams married to', 'log': ' I need to find out who Bryan Adams is married to.\\nAction: Search\\nAction Input: \"Who is Bryan Adams married to\"', 'step': 9, 'starts': 6, 'ends': 3, 'errors': 0, 'text_ctr': 0, 'chain_starts': 1, 'chain_ends': 0, 'llm_starts': 2, 'llm_ends': 2, 'llm_streams': 0, 'tool_starts': 3, 'tool_ends': 1, 'agent_ends': 0}\n",

|

||||

"{'action': 'on_tool_start', 'input_str': 'Who is Bryan Adams married to', 'name': 'Search', 'description': 'A search engine. Useful for when you need to answer questions about current events. Input should be a search query.', 'step': 10, 'starts': 7, 'ends': 3, 'errors': 0, 'text_ctr': 0, 'chain_starts': 1, 'chain_ends': 0, 'llm_starts': 2, 'llm_ends': 2, 'llm_streams': 0, 'tool_starts': 4, 'tool_ends': 1, 'agent_ends': 0}\n",

|

||||

"\n",

|

||||

"Observation: \u001b[36;1m\u001b[1;3mBryan Adams has never married. In the 1990s, he was in a relationship with Danish model Cecilie Thomsen. In 2011, Bryan and Alicia Grimaldi, his ...\u001b[0m\n",

|

||||

"Thought:{'action': 'on_tool_end', 'output': 'Bryan Adams has never married. In the 1990s, he was in a relationship with Danish model Cecilie Thomsen. In 2011, Bryan and Alicia Grimaldi, his ...', 'step': 11, 'starts': 7, 'ends': 4, 'errors': 0, 'text_ctr': 0, 'chain_starts': 1, 'chain_ends': 0, 'llm_starts': 2, 'llm_ends': 2, 'llm_streams': 0, 'tool_starts': 4, 'tool_ends': 2, 'agent_ends': 0}\n",

|

||||

"{'action': 'on_llm_start', 'name': 'OpenAI', 'step': 12, 'starts': 8, 'ends': 4, 'errors': 0, 'text_ctr': 0, 'chain_starts': 1, 'chain_ends': 0, 'llm_starts': 3, 'llm_ends': 2, 'llm_streams': 0, 'tool_starts': 4, 'tool_ends': 2, 'agent_ends': 0, 'prompts': 'Answer the following questions as best you can. You have access to the following tools:\\n\\nSearch: A search engine. Useful for when you need to answer questions about current events. Input should be a search query.\\nCalculator: Useful for when you need to answer questions about math.\\n\\nUse the following format:\\n\\nQuestion: the input question you must answer\\nThought: you should always think about what to do\\nAction: the action to take, should be one of [Search, Calculator]\\nAction Input: the input to the action\\nObservation: the result of the action\\n... (this Thought/Action/Action Input/Observation can repeat N times)\\nThought: I now know the final answer\\nFinal Answer: the final answer to the original input question\\n\\nBegin!\\n\\nQuestion: Who is the wife of the person who sang summer of 69?\\nThought: I need to find out who sang summer of 69 and then find out who their wife is.\\nAction: Search\\nAction Input: \"Who sang summer of 69\"\\nObservation: Bryan Adams - Summer Of 69 (Official Music Video).\\nThought: I need to find out who Bryan Adams is married to.\\nAction: Search\\nAction Input: \"Who is Bryan Adams married to\"\\nObservation: Bryan Adams has never married. In the 1990s, he was in a relationship with Danish model Cecilie Thomsen. In 2011, Bryan and Alicia Grimaldi, his ...\\nThought:'}\n",

|

||||

"{'action': 'on_llm_end', 'token_usage_prompt_tokens': 314, 'token_usage_completion_tokens': 18, 'token_usage_total_tokens': 332, 'model_name': 'text-davinci-003', 'step': 13, 'starts': 8, 'ends': 5, 'errors': 0, 'text_ctr': 0, 'chain_starts': 1, 'chain_ends': 0, 'llm_starts': 3, 'llm_ends': 3, 'llm_streams': 0, 'tool_starts': 4, 'tool_ends': 2, 'agent_ends': 0, 'text': ' I now know the final answer.\\nFinal Answer: Bryan Adams has never been married.', 'generation_info_finish_reason': 'stop', 'generation_info_logprobs': None, 'flesch_reading_ease': 81.29, 'flesch_kincaid_grade': 3.7, 'smog_index': 0.0, 'coleman_liau_index': 5.75, 'automated_readability_index': 3.9, 'dale_chall_readability_score': 7.37, 'difficult_words': 1, 'linsear_write_formula': 2.5, 'gunning_fog': 2.8, 'text_standard': '3rd and 4th grade', 'fernandez_huerta': 115.7, 'szigriszt_pazos': 110.84, 'gutierrez_polini': 49.79, 'crawford': 0.7, 'gulpease_index': 85.4, 'osman': 83.14}\n",

|

||||

"\u001b[32;1m\u001b[1;3m I now know the final answer.\n",

|

||||

"Final Answer: Bryan Adams has never been married.\u001b[0m\n",

|

||||

"{'action': 'on_agent_finish', 'output': 'Bryan Adams has never been married.', 'log': ' I now know the final answer.\\nFinal Answer: Bryan Adams has never been married.', 'step': 14, 'starts': 8, 'ends': 6, 'errors': 0, 'text_ctr': 0, 'chain_starts': 1, 'chain_ends': 0, 'llm_starts': 3, 'llm_ends': 3, 'llm_streams': 0, 'tool_starts': 4, 'tool_ends': 2, 'agent_ends': 1}\n",

|

||||

"\n",

|

||||

"\u001b[1m> Finished chain.\u001b[0m\n",

|

||||

"{'action': 'on_chain_end', 'outputs': 'Bryan Adams has never been married.', 'step': 15, 'starts': 8, 'ends': 7, 'errors': 0, 'text_ctr': 0, 'chain_starts': 1, 'chain_ends': 1, 'llm_starts': 3, 'llm_ends': 3, 'llm_streams': 0, 'tool_starts': 4, 'tool_ends': 2, 'agent_ends': 1}\n",

|

||||

"{'action_records': action name step starts ends errors text_ctr \\\n",

|

||||

"0 on_llm_start OpenAI 1 1 0 0 0 \n",

|

||||

"1 on_llm_start OpenAI 1 1 0 0 0 \n",

|

||||

"2 on_llm_start OpenAI 1 1 0 0 0 \n",

|

||||

"3 on_llm_start OpenAI 1 1 0 0 0 \n",

|

||||

"4 on_llm_start OpenAI 1 1 0 0 0 \n",

|

||||

".. ... ... ... ... ... ... ... \n",

|

||||

"66 on_tool_end NaN 11 7 4 0 0 \n",

|

||||

"67 on_llm_start OpenAI 12 8 4 0 0 \n",

|

||||

"68 on_llm_end NaN 13 8 5 0 0 \n",

|

||||

"69 on_agent_finish NaN 14 8 6 0 0 \n",

|

||||

"70 on_chain_end NaN 15 8 7 0 0 \n",

|

||||

"\n",

|

||||

" chain_starts chain_ends llm_starts ... gulpease_index osman input \\\n",

|

||||

"0 0 0 1 ... NaN NaN NaN \n",

|

||||

"1 0 0 1 ... NaN NaN NaN \n",

|

||||

"2 0 0 1 ... NaN NaN NaN \n",

|

||||

"3 0 0 1 ... NaN NaN NaN \n",

|

||||

"4 0 0 1 ... NaN NaN NaN \n",

|

||||

".. ... ... ... ... ... ... ... \n",

|

||||

"66 1 0 2 ... NaN NaN NaN \n",

|

||||

"67 1 0 3 ... NaN NaN NaN \n",

|

||||

"68 1 0 3 ... 85.4 83.14 NaN \n",

|

||||

"69 1 0 3 ... NaN NaN NaN \n",

|

||||

"70 1 1 3 ... NaN NaN NaN \n",

|

||||

"\n",

|

||||

" tool tool_input log \\\n",

|

||||

"0 NaN NaN NaN \n",

|

||||

"1 NaN NaN NaN \n",

|

||||

"2 NaN NaN NaN \n",

|

||||

"3 NaN NaN NaN \n",

|

||||

"4 NaN NaN NaN \n",

|

||||

".. ... ... ... \n",

|

||||

"66 NaN NaN NaN \n",

|

||||

"67 NaN NaN NaN \n",

|

||||

"68 NaN NaN NaN \n",

|

||||

"69 NaN NaN I now know the final answer.\\nFinal Answer: B... \n",

|

||||

"70 NaN NaN NaN \n",

|

||||

"\n",

|

||||

" input_str description output \\\n",

|

||||

"0 NaN NaN NaN \n",

|

||||

"1 NaN NaN NaN \n",

|

||||

"2 NaN NaN NaN \n",

|

||||

"3 NaN NaN NaN \n",

|

||||

"4 NaN NaN NaN \n",

|

||||

".. ... ... ... \n",

|

||||

"66 NaN NaN Bryan Adams has never married. In the 1990s, h... \n",

|

||||

"67 NaN NaN NaN \n",

|

||||

"68 NaN NaN NaN \n",

|

||||

"69 NaN NaN Bryan Adams has never been married. \n",

|

||||

"70 NaN NaN NaN \n",

|

||||

"\n",

|

||||

" outputs \n",

|

||||

"0 NaN \n",

|

||||

"1 NaN \n",

|

||||

"2 NaN \n",

|

||||

"3 NaN \n",

|

||||

"4 NaN \n",

|

||||

".. ... \n",

|

||||

"66 NaN \n",

|

||||

"67 NaN \n",

|

||||

"68 NaN \n",

|

||||

"69 NaN \n",

|

||||

"70 Bryan Adams has never been married. \n",

|

||||

"\n",

|

||||

"[71 rows x 47 columns], 'session_analysis': prompt_step prompts name \\\n",

|

||||

"0 2 Answer the following questions as best you can... OpenAI \n",

|

||||

"1 7 Answer the following questions as best you can... OpenAI \n",

|

||||

"2 12 Answer the following questions as best you can... OpenAI \n",

|

||||

"\n",

|

||||

" output_step output \\\n",

|

||||

"0 3 I need to find out who sang summer of 69 and ... \n",

|

||||

"1 8 I need to find out who Bryan Adams is married... \n",

|

||||

"2 13 I now know the final answer.\\nFinal Answer: B... \n",

|

||||

"\n",

|

||||

" token_usage_total_tokens token_usage_prompt_tokens \\\n",

|

||||

"0 223 189 \n",

|

||||

"1 270 242 \n",

|

||||

"2 332 314 \n",

|

||||

"\n",

|

||||

" token_usage_completion_tokens flesch_reading_ease flesch_kincaid_grade \\\n",

|

||||

"0 34 91.61 3.8 \n",

|

||||

"1 28 94.66 2.7 \n",

|

||||

"2 18 81.29 3.7 \n",

|

||||

"\n",

|

||||

" ... difficult_words linsear_write_formula gunning_fog \\\n",

|

||||

"0 ... 2 5.75 5.4 \n",

|

||||

"1 ... 2 4.25 4.2 \n",

|

||||

"2 ... 1 2.50 2.8 \n",

|

||||

"\n",

|

||||

" text_standard fernandez_huerta szigriszt_pazos gutierrez_polini \\\n",

|

||||

"0 3rd and 4th grade 121.07 119.50 54.91 \n",

|

||||

"1 4th and 5th grade 124.13 119.20 52.26 \n",

|

||||

"2 3rd and 4th grade 115.70 110.84 49.79 \n",

|

||||

"\n",

|

||||

" crawford gulpease_index osman \n",

|

||||

"0 0.9 72.7 92.16 \n",

|

||||

"1 0.7 74.7 84.20 \n",

|

||||

"2 0.7 85.4 83.14 \n",

|

||||

"\n",

|

||||

"[3 rows x 24 columns]}\n"

|

||||

]

|

||||

},

|

||||

{

|

||||

"name": "stderr",

|

||||

"output_type": "stream",

|

||||

"text": [

|

||||

"Could not update last created model in Task 988bd727b0e94a29a3ac0ee526813545, Task status 'completed' cannot be updated\n"

|

||||

]

|

||||

}

|

||||

],

|

||||

"source": [

|

||||

"from langchain.agents import initialize_agent, load_tools\n",

|

||||

"\n",

|

||||

"# SCENARIO 2 - Agent with Tools\n",

|

||||

"tools = load_tools([\"serpapi\", \"llm-math\"], llm=llm, callback_manager=manager)\n",

|

||||

"agent = initialize_agent(\n",

|

||||

" tools,\n",

|

||||

" llm,\n",

|

||||

" agent=\"zero-shot-react-description\",\n",

|

||||

" callback_manager=manager,\n",

|

||||

" verbose=True,\n",

|

||||

")\n",

|

||||

"agent.run(\n",

|

||||

" \"Who is the wife of the person who sang summer of 69?\"\n",

|

||||

")\n",

|

||||

"clearml_callback.flush_tracker(langchain_asset=agent, name=\"Agent with Tools\", finish=True)"

|

||||

]

|

||||

},

|

||||

{

|

||||

"attachments": {},

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"source": [

|

||||

"# Tips and Next Steps\n",

|

||||

"\n",

|

||||

"- Make sure you always use a unique `name` argument for the `clearml_callback.flush_tracker` function. If not, the model parameters used for a run will override the previous run!\n",

|

||||

"\n",

|

||||

"- If you close the ClearML Callback using `clearml_callback.flush_tracker(..., finish=True)` the Callback cannot be used anymore. Make a new one if you want to keep logging.\n",

|

||||

"\n",

|

||||

"- Check out the rest of the open source ClearML ecosystem, there is a data version manager, a remote execution agent, automated pipelines and much more!\n"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": null,

|

||||

"metadata": {},

|

||||

"outputs": [],

|

||||

"source": []

|

||||

}

|

||||

],

|

||||

"metadata": {

|

||||

"kernelspec": {

|

||||

"display_name": ".venv",

|

||||

"language": "python",

|

||||

"name": "python3"

|

||||

},

|

||||

"language_info": {

|

||||

"codemirror_mode": {

|

||||

"name": "ipython",

|

||||

"version": 3

|

||||

},

|

||||

"file_extension": ".py",

|

||||

"mimetype": "text/x-python",

|

||||

"name": "python",

|

||||

"nbconvert_exporter": "python",

|

||||

"pygments_lexer": "ipython3",

|

||||

"version": "3.10.9"

|

||||

},

|

||||

"orig_nbformat": 4,

|

||||

"vscode": {

|

||||

"interpreter": {

|

||||

"hash": "a53ebf4a859167383b364e7e7521d0add3c2dbbdecce4edf676e8c4634ff3fbb"

|

||||

}

|

||||

}

|

||||

},

|

||||

"nbformat": 4,

|

||||

"nbformat_minor": 2

|

||||

}

|

||||

@@ -22,4 +22,4 @@ There exists an Cohere Embeddings wrapper, which you can access with

|

||||

```python

|

||||

from langchain.embeddings import CohereEmbeddings

|

||||

```

|

||||

For a more detailed walkthrough of this, see [this notebook](../modules/models/text_embedding/examples/cohere.ipynb)

|

||||

For a more detailed walkthrough of this, see [this notebook](../modules/indexes/examples/embeddings.ipynb)

|

||||

|

||||

@@ -22,4 +22,4 @@ from langchain.vectorstores import DeepLake

|

||||

```

|

||||

|

||||

|

||||

For a more detailed walkthrough of the Deep Lake wrapper, see [this notebook](../modules/indexes/vectorstores/examples/deeplake.ipynb)

|

||||

For a more detailed walkthrough of the Deep Lake wrapper, see [this notebook](../modules/indexes/vectorstore_examples/deeplake.ipynb)

|

||||

|

||||

@@ -18,7 +18,7 @@ There exists a GoogleSearchAPIWrapper utility which wraps this API. To import th

|

||||

from langchain.utilities import GoogleSearchAPIWrapper

|

||||

```

|

||||

|

||||

For a more detailed walkthrough of this wrapper, see [this notebook](../modules/agents/tools/examples/google_search.ipynb).