mirror of

https://github.com/hwchase17/langchain.git

synced 2026-01-23 21:31:02 +00:00

Compare commits

782 Commits

v0.0.72

...

harrison/a

| Author | SHA1 | Date | |

|---|---|---|---|

|

|

80bb3206da | ||

|

|

a0cd0175a8 | ||

|

|

fde13c9e95 | ||

|

|

d3f779d61d | ||

|

|

4364d3316e | ||

|

|

023de9a70b | ||

|

|

1c979e320d | ||

|

|

9d20fd5135 | ||

|

|

28bef6f87d | ||

|

|

ad3c5dd186 | ||

|

|

b286d0e63f | ||

|

|

90d5328eda | ||

|

|

bd9f095ed2 | ||

|

|

e23a596a18 | ||

|

|

8d3b059332 | ||

|

|

1931d4495e | ||

|

|

e63f9a846b | ||

|

|

b82cbd1be0 | ||

|

|

50c511d75f | ||

|

|

61f7bd7a3a | ||

|

|

10ff1fda8e | ||

|

|

c51753250d | ||

|

|

e56673c7f9 | ||

|

|

7c1dd3057f | ||

|

|

412397ad55 | ||

|

|

7aba18ea77 | ||

|

|

e57f0e38c1 | ||

|

|

63175eb696 | ||

|

|

54b1645d13 | ||

|

|

aaac7071a3 | ||

|

|

5c0c5fafb2 | ||

|

|

d2f8ddab10 | ||

|

|

9a49f5763d | ||

|

|

166624d005 | ||

|

|

9aed565f13 | ||

|

|

0f5d3b3390 | ||

|

|

5376799a23 | ||

|

|

6f39e88a2c | ||

|

|

6e4e7d2637 | ||

|

|

5e57496225 | ||

|

|

b9e5b27a99 | ||

|

|

79a44c8225 | ||

|

|

2f49c96532 | ||

|

|

40469eef7f | ||

|

|

125afb51d7 | ||

|

|

7bf5b0ccd3 | ||

|

|

7a4e1b72a8 | ||

|

|

f5afb60116 | ||

|

|

f7f118e021 | ||

|

|

544cc7f395 | ||

|

|

cd9336469e | ||

|

|

d8967e28d0 | ||

|

|

b4d6a425a2 | ||

|

|

fc1d48814c | ||

|

|

9b78bb7393 | ||

|

|

a32c85951e | ||

|

|

95e780d6f9 | ||

|

|

247a88f2f9 | ||

|

|

6dc86ad48f | ||

|

|

c9f93f5f74 | ||

|

|

8cded3fdad | ||

|

|

dca21078ad | ||

|

|

6dbd29e440 | ||

|

|

481de8df7f | ||

|

|

a31c9511e8 | ||

|

|

ec489599fd | ||

|

|

3d0449bb45 | ||

|

|

632c65d64b | ||

|

|

15cdfa9e7f | ||

|

|

704b0feb38 | ||

|

|

aecd1c8ee3 | ||

|

|

58a93f88da | ||

|

|

aa439ac2ff | ||

|

|

e131156805 | ||

|

|

0316900d2f | ||

|

|

5c64b86ba3 | ||

|

|

c2f21a519f | ||

|

|

629fda3957 | ||

|

|

f8e4048cd8 | ||

|

|

bd780a8223 | ||

|

|

7149d33c71 | ||

|

|

f240651bd8 | ||

|

|

13d1df2140 | ||

|

|

5b34931948 | ||

|

|

f0926bad9f | ||

|

|

b4914888a7 | ||

|

|

2ffb90b161 | ||

|

|

ad87584c35 | ||

|

|

fd69cc7e42 | ||

|

|

b6a101d121 | ||

|

|

6f47133d8a | ||

|

|

1dfb6a2a44 | ||

|

|

270384fb44 | ||

|

|

c913acdb4c | ||

|

|

1e19e004af | ||

|

|

60c837c58a | ||

|

|

3acf423de0 | ||

|

|

26314d7004 | ||

|

|

a9e637b8f5 | ||

|

|

1140bd79a0 | ||

|

|

007babb363 | ||

|

|

c9ae0c5808 | ||

|

|

3d871853df | ||

|

|

00bc8df640 | ||

|

|

a63cfad558 | ||

|

|

f0d4f36219 | ||

|

|

b410dc76aa | ||

|

|

4d730a9bbc | ||

|

|

af7f20fa42 | ||

|

|

659c67e896 | ||

|

|

e519a81a05 | ||

|

|

b026a62bc4 | ||

|

|

d6d6f322a9 | ||

|

|

41832042cc | ||

|

|

2b975de94d | ||

|

|

1f88b11c99 | ||

|

|

f5da9a5161 | ||

|

|

8a4709582f | ||

|

|

de7afc52a9 | ||

|

|

c7b083ab56 | ||

|

|

dc3ac8082b | ||

|

|

0a9f04bad9 | ||

|

|

d17dea30ce | ||

|

|

e90d007db3 | ||

|

|

585f60a5aa | ||

|

|

90973c10b1 | ||

|

|

fe1eb8ca5f | ||

|

|

10dab053b4 | ||

|

|

c969a779c9 | ||

|

|

7ed8d00bba | ||

|

|

9cceb4a02a | ||

|

|

c841b2cc51 | ||

|

|

28cedab1a4 | ||

|

|

cb5c5d1a4d | ||

|

|

fd0d631f39 | ||

|

|

3fb4997ad8 | ||

|

|

cc50a4579e | ||

|

|

00c39ea409 | ||

|

|

870cd33701 | ||

|

|

393cd3c796 | ||

|

|

347ea24524 | ||

|

|

6c13003dd3 | ||

|

|

b21c485ad5 | ||

|

|

d85f57ef9c | ||

|

|

595ebe1796 | ||

|

|

3b75b004fc | ||

|

|

3a2782053b | ||

|

|

e4cfaa5680 | ||

|

|

00d3ec5ed8 | ||

|

|

fe572a5a0d | ||

|

|

94b2f536f3 | ||

|

|

715bd06f04 | ||

|

|

337d1e78ff | ||

|

|

b4b7e8a54d | ||

|

|

8f608f4e75 | ||

|

|

134fc87e48 | ||

|

|

035aed8dc9 | ||

|

|

9a5268dc5f | ||

|

|

acfda4d1d8 | ||

|

|

a9dddd8a32 | ||

|

|

579ad85785 | ||

|

|

609b14a570 | ||

|

|

1ddd6dbf0b | ||

|

|

2d0ff1a06d | ||

|

|

09f9464254 | ||

|

|

582950291c | ||

|

|

5a0844bae1 | ||

|

|

e49284acde | ||

|

|

67dde7d893 | ||

|

|

90e388b9f8 | ||

|

|

64f44c6483 | ||

|

|

4b59bb55c7 | ||

|

|

7a8f1d2854 | ||

|

|

632c2b49da | ||

|

|

e57b045402 | ||

|

|

0ce4767076 | ||

|

|

6c66f51fb8 | ||

|

|

2eeaccf01c | ||

|

|

e6a9ee64b3 | ||

|

|

4e9ee566ef | ||

|

|

fc009f61c8 | ||

|

|

3dfe1cf60e | ||

|

|

a4a1ee6b5d | ||

|

|

2d3918c152 | ||

|

|

1c03205cc2 | ||

|

|

feec4c61f4 | ||

|

|

097684e5f2 | ||

|

|

fd1fcb5a7d | ||

|

|

3207a74829 | ||

|

|

597378d1f6 | ||

|

|

64b9843b5b | ||

|

|

5d86a6acf1 | ||

|

|

35a3218e84 | ||

|

|

65c0c73597 | ||

|

|

33a001933a | ||

|

|

fe804d2a01 | ||

|

|

68f039704c | ||

|

|

bcfd071784 | ||

|

|

7d90691adb | ||

|

|

f83c36d8fd | ||

|

|

6be67279fb | ||

|

|

3dc49a04a3 | ||

|

|

5c907d9998 | ||

|

|

1b7cfd7222 | ||

|

|

7859245fc5 | ||

|

|

529a1f39b9 | ||

|

|

f5a4bf0ce4 | ||

|

|

a0453ebcf5 | ||

|

|

ffb7de34ca | ||

|

|

09085c32e3 | ||

|

|

8b91a21e37 | ||

|

|

55b52bad21 | ||

|

|

b35260ed47 | ||

|

|

7bea3b302c | ||

|

|

b5449a866d | ||

|

|

8441cbfc03 | ||

|

|

4ab66c4f52 | ||

|

|

27f80784d0 | ||

|

|

031e32f331 | ||

|

|

ccee1aedd2 | ||

|

|

e2c26909f2 | ||

|

|

3e879b47c1 | ||

|

|

859502b16c | ||

|

|

c33e055f17 | ||

|

|

a5bf8c9b9d | ||

|

|

0874872dee | ||

|

|

ef25904ecb | ||

|

|

9d6f649ba5 | ||

|

|

c58932e8fd | ||

|

|

6e85cbcce3 | ||

|

|

b25dbcb5b3 | ||

|

|

a554e94a1a | ||

|

|

5f34dffedc | ||

|

|

aff33d52c5 | ||

|

|

f16c1fb6df | ||

|

|

a9e1043673 | ||

|

|

f281033362 | ||

|

|

410bf37fb8 | ||

|

|

eff5eed719 | ||

|

|

d0a56f47ee | ||

|

|

9e74df2404 | ||

|

|

0bee219cb3 | ||

|

|

923a7dde5a | ||

|

|

4cd5cf2e95 | ||

|

|

33ebb05251 | ||

|

|

e0331b55bb | ||

|

|

d5825bd3e8 | ||

|

|

e8d9cbca3f | ||

|

|

b5020c7d9c | ||

|

|

5bea731fb4 | ||

|

|

0e3b0c827e | ||

|

|

365669a7fd | ||

|

|

b7f392fdd6 | ||

|

|

4be2f9d75a | ||

|

|

f74a1bebf5 | ||

|

|

76ecca4d53 | ||

|

|

b7ebb8fe30 | ||

|

|

41c8a42e22 | ||

|

|

1cc9e90041 | ||

|

|

30e3b31b04 | ||

|

|

a0cd6672aa | ||

|

|

8b5a43d720 | ||

|

|

725b668aef | ||

|

|

024efb09f8 | ||

|

|

953e58d004 | ||

|

|

f257b08406 | ||

|

|

5e91928607 | ||

|

|

880a6a3db5 | ||

|

|

71e8eaff2b | ||

|

|

6598beacdb | ||

|

|

e4f15e4eac | ||

|

|

e50c1ea7fb | ||

|

|

62e08f80de | ||

|

|

c50fafb35d | ||

|

|

3d3e523520 | ||

|

|

c1a9d83b34 | ||

|

|

42d725223e | ||

|

|

0bbcc7815b | ||

|

|

b26fa1935d | ||

|

|

bc2ed93b77 | ||

|

|

c71f2a7b26 | ||

|

|

51681f653f | ||

|

|

705431aecc | ||

|

|

b83e826510 | ||

|

|

e7d6de6b1c | ||

|

|

6e0d3880df | ||

|

|

6ec5780547 | ||

|

|

47d37db2d2 | ||

|

|

4f364db9a9 | ||

|

|

030ce9f506 | ||

|

|

8990122d5d | ||

|

|

52d6bf04d0 | ||

|

|

910da8518f | ||

|

|

2f27ef92fe | ||

|

|

75149d6d38 | ||

|

|

fab7994b74 | ||

|

|

eb80d6e0e4 | ||

|

|

b5667bed9e | ||

|

|

b3be83c750 | ||

|

|

50626a10ee | ||

|

|

6e1b5b8f7e | ||

|

|

eec9b1b306 | ||

|

|

ea142f6a32 | ||

|

|

12f868b292 | ||

|

|

31f9ecfc19 | ||

|

|

273e9bf296 | ||

|

|

f155d9d3ec | ||

|

|

d3d4503ce2 | ||

|

|

1f93c5cf69 | ||

|

|

15b5a08f4b | ||

|

|

ff4a25b841 | ||

|

|

2212520a6c | ||

|

|

d08f940336 | ||

|

|

2280a2cb2f | ||

|

|

ce5d97bcb3 | ||

|

|

8fa1764c60 | ||

|

|

f299bd1416 | ||

|

|

064be93edf | ||

|

|

86822d1cc2 | ||

|

|

a581bce379 | ||

|

|

2ffc643086 | ||

|

|

2136dc94bb | ||

|

|

a92344f476 | ||

|

|

b706966ebc | ||

|

|

1c22657256 | ||

|

|

6f02286805 | ||

|

|

3674074eb0 | ||

|

|

a7e09d46c5 | ||

|

|

fa2e546b76 | ||

|

|

c592b12043 | ||

|

|

9555bbd5bb | ||

|

|

0ca1641b14 | ||

|

|

d5b4393bb2 | ||

|

|

7b6ff7fe00 | ||

|

|

76c7b1f677 | ||

|

|

5aa8ece211 | ||

|

|

f6d24d5740 | ||

|

|

b1c4480d7c | ||

|

|

b6ba989f2f | ||

|

|

04acda55ec | ||

|

|

8e5c4ac867 | ||

|

|

df8702fead | ||

|

|

d5d50c39e6 | ||

|

|

1f18698b2a | ||

|

|

ef4945af6b | ||

|

|

7de2ada3ea | ||

|

|

262d4cb9a8 | ||

|

|

951c158106 | ||

|

|

85e4dd7fc3 | ||

|

|

b1b4a4065a | ||

|

|

08f23c95d9 | ||

|

|

3cf493b089 | ||

|

|

e635c86145 | ||

|

|

779790167e | ||

|

|

3161ced4bc | ||

|

|

3d6fcb85dc | ||

|

|

3701b2901e | ||

|

|

280cb4160d | ||

|

|

80d8db5f60 | ||

|

|

1a8790d808 | ||

|

|

34840f3aee | ||

|

|

8685d53adc | ||

|

|

2f6833d433 | ||

|

|

dd90fd02d5 | ||

|

|

07766a69f3 | ||

|

|

aa854988bf | ||

|

|

96ebe98dc2 | ||

|

|

45f05fc939 | ||

|

|

cf9c3f54f7 | ||

|

|

fbc0c85b90 | ||

|

|

276940fd9b | ||

|

|

cdff6c8181 | ||

|

|

cd45adbea2 | ||

|

|

aff44d0a98 | ||

|

|

8a95fdaee1 | ||

|

|

5d8dc83ede | ||

|

|

b157e0c1c3 | ||

|

|

40e9488055 | ||

|

|

55efbb8a7e | ||

|

|

d6bbf395af | ||

|

|

606605925d | ||

|

|

f93c011456 | ||

|

|

3c24684522 | ||

|

|

b84d190fd0 | ||

|

|

aad4bff098 | ||

|

|

3ea6d9c4d2 | ||

|

|

ced412e1c1 | ||

|

|

1279c8de39 | ||

|

|

c7779c800a | ||

|

|

6f4f771897 | ||

|

|

4a327dd1d6 | ||

|

|

d4edd3c312 | ||

|

|

e72074f78a | ||

|

|

0b29e68c17 | ||

|

|

4d7fdb8957 | ||

|

|

656efe6ef3 | ||

|

|

362586fe8b | ||

|

|

63aa28e2a6 | ||

|

|

c3dfbdf0da | ||

|

|

a2280f321f | ||

|

|

4e13cef05a | ||

|

|

e5c1659864 | ||

|

|

2d098e8869 | ||

|

|

8965a2f0af | ||

|

|

e222ea4ee8 | ||

|

|

e326939759 | ||

|

|

7cf46b3fee | ||

|

|

84cd825a0e | ||

|

|

0a1b1806e9 | ||

|

|

9ee2713272 | ||

|

|

b3234bf3b0 | ||

|

|

562d9891ea | ||

|

|

56aff797c0 | ||

|

|

d53ff270e0 | ||

|

|

df6c33d4b3 | ||

|

|

039d05c808 | ||

|

|

aed9f9febe | ||

|

|

72b461e257 | ||

|

|

cb646082ba | ||

|

|

bd4a2a670b | ||

|

|

6e98ab01e1 | ||

|

|

c0ad5d13b8 | ||

|

|

acd86d33bc | ||

|

|

9707eda83c | ||

|

|

7e550df6d4 | ||

|

|

c9b5a30b37 | ||

|

|

cb04ba0136 | ||

|

|

5903a93f3d | ||

|

|

15de3e8137 | ||

|

|

f95d551f7a | ||

|

|

c6bfa00178 | ||

|

|

01a57198b8 | ||

|

|

8dba30f31e | ||

|

|

9f78717b3c | ||

|

|

90846dcc28 | ||

|

|

6ed16e13b1 | ||

|

|

c1dc784a3d | ||

|

|

5b0e747f9a | ||

|

|

624c72c266 | ||

|

|

a950287206 | ||

|

|

30383abb12 | ||

|

|

cdb97f3dfb | ||

|

|

b44c8bd969 | ||

|

|

c9189d354a | ||

|

|

622578a022 | ||

|

|

7018806a92 | ||

|

|

bd335ffd64 | ||

|

|

a094c49153 | ||

|

|

99fe023496 | ||

|

|

3ee32a01ea | ||

|

|

c844d1fd46 | ||

|

|

9405af6919 | ||

|

|

357d808484 | ||

|

|

cc423f40f1 | ||

|

|

b053f831cd | ||

|

|

523ad8d2e2 | ||

|

|

31303d0b11 | ||

|

|

494c9d341a | ||

|

|

519f0187b6 | ||

|

|

64c6435545 | ||

|

|

7eba828e1b | ||

|

|

2a7215bc3b | ||

|

|

784d24a1d5 | ||

|

|

aba58e9e2e | ||

|

|

c4a557bdd4 | ||

|

|

97e3666e0d | ||

|

|

7ade419a0e | ||

|

|

a4a2d79087 | ||

|

|

8f21605d71 | ||

|

|

064741db58 | ||

|

|

e3354404ad | ||

|

|

3610ef2830 | ||

|

|

27104d4921 | ||

|

|

4f41e20f09 | ||

|

|

d0062c7a9a | ||

|

|

8e6f599822 | ||

|

|

f276bfad8e | ||

|

|

7bec461782 | ||

|

|

df6865cd52 | ||

|

|

312c319d8b | ||

|

|

0e21463f07 | ||

|

|

dec3750875 | ||

|

|

763f879536 | ||

|

|

56b850648f | ||

|

|

63a5614d23 | ||

|

|

a1b9dfc099 | ||

|

|

68ce68f290 | ||

|

|

b8a7828d1f | ||

|

|

6a4ee07e4f | ||

|

|

23231d65a9 | ||

|

|

3d54b05863 | ||

|

|

bca0935d90 | ||

|

|

882f7964fb | ||

|

|

443992c4d5 | ||

|

|

a83a371069 | ||

|

|

499e76b199 | ||

|

|

8947797250 | ||

|

|

1989e7d4c2 | ||

|

|

dda5259f68 | ||

|

|

f032609f8d | ||

|

|

9ac442624c | ||

|

|

34abcd31b9 | ||

|

|

fe30be6fba | ||

|

|

cfed0497ac | ||

|

|

59157b6891 | ||

|

|

e178008b75 | ||

|

|

1cd8996074 | ||

|

|

cfae03042d | ||

|

|

4b5e850361 | ||

|

|

4d4b43cf5a | ||

|

|

c01f9100e4 | ||

|

|

edb3915ee7 | ||

|

|

fe7dbecfe6 | ||

|

|

02ec72df87 | ||

|

|

92ab27e4b8 | ||

|

|

82baecc892 | ||

|

|

35f1e8f569 | ||

|

|

6c629b54e6 | ||

|

|

3574418a40 | ||

|

|

5bf8772f26 | ||

|

|

924bba5ce9 | ||

|

|

786852e9e6 | ||

|

|

72ef69d1ba | ||

|

|

1aa41b5741 | ||

|

|

c14cff60d0 | ||

|

|

f61858163d | ||

|

|

0824d65a5c | ||

|

|

a0bf856c70 | ||

|

|

166cda2cc6 | ||

|

|

aaad6cc954 | ||

|

|

3989c793fd | ||

|

|

42b892c21b | ||

|

|

81abcae91a | ||

|

|

648b3b3909 | ||

|

|

fd9975dad7 | ||

|

|

d29f74114e | ||

|

|

ce441edd9c | ||

|

|

6f30d68581 | ||

|

|

002da6edc0 | ||

|

|

0963096491 | ||

|

|

c5dd491a21 | ||

|

|

2f15c11b87 | ||

|

|

96db6ed073 | ||

|

|

7e8f832cd6 | ||

|

|

a8e88e1874 | ||

|

|

42167a1e24 | ||

|

|

bb53d9722d | ||

|

|

8a0751dadd | ||

|

|

4b5d427421 | ||

|

|

9becdeaadf | ||

|

|

5457d48416 | ||

|

|

9381005098 | ||

|

|

10e73a3723 | ||

|

|

5bc6dc076e | ||

|

|

6d37d089e9 | ||

|

|

8e3cd3e0dd | ||

|

|

b7765a95a0 | ||

|

|

d480330fae | ||

|

|

6085fe18d4 | ||

|

|

8a35811556 | ||

|

|

71709ad5d5 | ||

|

|

53c67e04d4 | ||

|

|

c6ab1bb3cb | ||

|

|

334b553260 | ||

|

|

ac1320aae8 | ||

|

|

4e28982d2b | ||

|

|

cc7d2e5621 | ||

|

|

424e71705d | ||

|

|

4e43b0efe9 | ||

|

|

3d5f56a8a1 | ||

|

|

047231840d | ||

|

|

5bdb8dd6fe | ||

|

|

d90a287d8f | ||

|

|

b7708bbec6 | ||

|

|

fb83cd4ff4 | ||

|

|

44c8d8a9ac | ||

|

|

af94f1dd97 | ||

|

|

0c84ce1082 | ||

|

|

0b6a650cb4 | ||

|

|

d2ef5d6167 | ||

|

|

23243ae69c | ||

|

|

13ba0177d0 | ||

|

|

0118706fd6 | ||

|

|

c5015d77e2 | ||

|

|

159c560c95 | ||

|

|

926c121b98 | ||

|

|

91446a5e9b | ||

|

|

a5a14405ad | ||

|

|

5a954efdd7 | ||

|

|

4766b20223 | ||

|

|

9962bda70b | ||

|

|

4f3fbd7267 | ||

|

|

28781a6213 | ||

|

|

37dd34bea5 | ||

|

|

e8f224fd3a | ||

|

|

afe884fb96 | ||

|

|

ed37fbaeff | ||

|

|

955c89fccb | ||

|

|

65cc81c479 | ||

|

|

05a05bcb04 | ||

|

|

9d6d8f85da | ||

|

|

af8f5c1a49 | ||

|

|

a83ba44efa | ||

|

|

7b5e160d28 | ||

|

|

45b5640fe5 | ||

|

|

85c1449a96 | ||

|

|

9111f4ca8a | ||

|

|

fb3c73d194 | ||

|

|

3f29742adc | ||

|

|

483821ea3b | ||

|

|

ee3590cb61 | ||

|

|

8c5fbab72d | ||

|

|

d5f3dfa1e1 | ||

|

|

47c3221fda | ||

|

|

511d41114f | ||

|

|

c39ef70aa4 | ||

|

|

1ed708391e | ||

|

|

2bee8d4941 | ||

|

|

b956070f08 | ||

|

|

383c67c1b2 | ||

|

|

3f50feb280 | ||

|

|

6fafcd0a70 | ||

|

|

ab1a3cccac | ||

|

|

6322b6f657 | ||

|

|

3462130e2d | ||

|

|

5d11e5da40 | ||

|

|

7745505482 | ||

|

|

badeeb37b0 | ||

|

|

971458c5de | ||

|

|

5e10e19bfe | ||

|

|

c60954d0f8 | ||

|

|

a1c296bc3c | ||

|

|

c96ac3e591 | ||

|

|

19c2797bed | ||

|

|

3ecdea8be4 | ||

|

|

e08961ab25 | ||

|

|

f0a258555b | ||

|

|

05ad399abe | ||

|

|

98186ef180 | ||

|

|

e46cd3b7db | ||

|

|

52753066ef | ||

|

|

d8ed286200 | ||

|

|

34cba2da32 | ||

|

|

05df480376 | ||

|

|

3ea1e5af1e | ||

|

|

bac676c8e7 | ||

|

|

d8ac274fc2 | ||

|

|

caa8e4742e | ||

|

|

f05f025e41 | ||

|

|

c67c5383fd | ||

|

|

88bebb4caa | ||

|

|

ec727bf166 | ||

|

|

8c45f06d58 | ||

|

|

f30dcc6359 | ||

|

|

d43d430d86 | ||

|

|

012a6dfb16 | ||

|

|

6a31a59400 | ||

|

|

20889205e8 | ||

|

|

fc2502cd81 | ||

|

|

0f0e69adce | ||

|

|

7fb33fca47 | ||

|

|

0c553d2064 | ||

|

|

78abd277ff | ||

|

|

05d8969c79 | ||

|

|

03e5794978 | ||

|

|

6d44a2285c | ||

|

|

0998577dfe | ||

|

|

bbb06ca4cf | ||

|

|

0b6aa6a024 | ||

|

|

10e7297306 | ||

|

|

e51fad1488 | ||

|

|

b7747017d7 | ||

|

|

2e96704d59 | ||

|

|

e9799d6821 | ||

|

|

c2d1d903fa | ||

|

|

055a53c27f | ||

|

|

231da14771 | ||

|

|

6ab432d62e | ||

|

|

07a407d89a | ||

|

|

c64f98e2bb | ||

|

|

5469d898a9 | ||

|

|

3d639d1539 | ||

|

|

91c6cea227 | ||

|

|

ba54d36787 | ||

|

|

5f8082bdd7 | ||

|

|

512c523368 | ||

|

|

e323d0cfb1 | ||

|

|

01fa2d8117 | ||

|

|

8e126bc9bd | ||

|

|

c71027e725 | ||

|

|

e85c53ce68 | ||

|

|

3e1901e1aa | ||

|

|

6a4f602156 | ||

|

|

6023d5be09 | ||

|

|

a306baacd1 | ||

|

|

44ecec3896 | ||

|

|

bc7e56e8df | ||

|

|

afc7f1b892 | ||

|

|

d43250bfa5 | ||

|

|

bc53c928fc | ||

|

|

637c0d6508 | ||

|

|

1e56879d38 | ||

|

|

6bd1529cb7 | ||

|

|

2584663e44 | ||

|

|

cc20b9425e | ||

|

|

cea380174f | ||

|

|

87fad8fc00 | ||

|

|

e2b834e427 | ||

|

|

f95cedc443 | ||

|

|

ba5a2f06b9 | ||

|

|

2ec25ddd4c | ||

|

|

31b054f69d | ||

|

|

93a091cfb8 | ||

|

|

3aa53b44dd | ||

|

|

82c080c6e6 | ||

|

|

71e662e88d | ||

|

|

53d56d7650 | ||

|

|

2a68be3e8d | ||

|

|

8217a2f26c | ||

|

|

7658263bfb | ||

|

|

32b11101d3 | ||

|

|

1614c5f5fd | ||

|

|

a2b699dcd2 | ||

|

|

7cc44b3bdb | ||

|

|

0b9f086d36 | ||

|

|

bcfbc7a818 | ||

|

|

1dd0733515 | ||

|

|

4c79100b15 | ||

|

|

777aaff841 | ||

|

|

e9ef08862d | ||

|

|

364b771743 | ||

|

|

483441d305 | ||

|

|

8df6b68093 | ||

|

|

3f48eed5bd | ||

|

|

933441cc52 | ||

|

|

4a8f5cdf4b | ||

|

|

523ad2e6bd | ||

|

|

fc0cfd7d1f | ||

|

|

4d32441b86 | ||

|

|

23d5f64bda | ||

|

|

0de55048b7 | ||

|

|

d564308e0f | ||

|

|

576609e665 | ||

|

|

3f952eb597 | ||

|

|

ba26a879e0 | ||

|

|

bfabd1d5c0 | ||

|

|

f3508228df | ||

|

|

b4eb043b81 | ||

|

|

06438794e1 | ||

|

|

9f8e05ffd4 | ||

|

|

b0d560be56 | ||

|

|

ebea40ce86 | ||

|

|

b9045f7e0d | ||

|

|

7b4882a2f4 | ||

|

|

5d4b6e4d4e | ||

|

|

94ae126747 | ||

|

|

ae5695ad32 | ||

|

|

cacf4091c0 | ||

|

|

54f9e4287f | ||

|

|

c331009440 | ||

|

|

6086292252 | ||

|

|

b3916f74a7 | ||

|

|

f46f1d28af | ||

|

|

7728a848d0 | ||

|

|

f3da4dc6ba | ||

|

|

ae1b589f60 | ||

|

|

6a20f07f0d | ||

|

|

fb2d7afe71 | ||

|

|

1ad7973cc6 | ||

|

|

5f73d06502 | ||

|

|

248c297f1b | ||

|

|

213c2e33e5 | ||

|

|

2e0219cac0 | ||

|

|

966611bbfa | ||

|

|

7198a1cb22 | ||

|

|

5bb2952860 | ||

|

|

c658f0aed3 | ||

|

|

309d86e339 | ||

|

|

6ad360bdef | ||

|

|

5198d6f541 | ||

|

|

a5d003f0c9 | ||

|

|

924b7ecf89 |

6

.dockerignore

Normal file


@@ -0,0 +1,6 @@

.venv
.github
.git
.mypy_cache
.pytest_cache
Dockerfile

16

CONTRIBUTING.md → .github/CONTRIBUTING.md

vendored


@@ -46,8 +46,8 @@ good code into the codebase.

|

||||

|

||||

### 🏭Release process

|

||||

|

||||

As of now, LangChain has an ad hoc release process: releases are cut with high frequency via by

|

||||

a developer and published to [PyPI](https://pypi.org/project/ruff/).

|

||||

As of now, LangChain has an ad hoc release process: releases are cut with high frequency by

|

||||

a developer and published to [PyPI](https://pypi.org/project/langchain/).

|

||||

|

||||

LangChain follows the [semver](https://semver.org/) versioning standard. However, as pre-1.0 software,

|

||||

even patch releases may contain [non-backwards-compatible changes](https://semver.org/#spec-item-4).

|

||||

@@ -73,10 +73,14 @@ poetry install -E all

|

||||

|

||||

This will install all requirements for running the package, examples, linting, formatting, tests, and coverage. Note the `-E all` flag will install all optional dependencies necessary for integration testing.

|

||||

|

||||

❗Note: If you're running Poetry 1.4.1 and receive a `WheelFileValidationError` for `debugpy` during installation, you can try either downgrading to Poetry 1.4.0 or disabling "modern installation" (`poetry config installer.modern-installation false`) and re-install requirements. See [this `debugpy` issue](https://github.com/microsoft/debugpy/issues/1246) for more details.

|

||||

|

||||

Now, you should be able to run the common tasks in the following section.

|

||||

|

||||

## ✅Common Tasks

|

||||

|

||||

Type `make` for a list of common tasks.

|

||||

|

||||

### Code Formatting

|

||||

|

||||

Formatting for this project is done via a combination of [Black](https://black.readthedocs.io/en/stable/) and [isort](https://pycqa.github.io/isort/).

|

||||

@@ -116,7 +120,13 @@ Unit tests cover modular logic that does not require calls to outside APIs.

|

||||

To run unit tests:

|

||||

|

||||

```bash

|

||||

make tests

|

||||

make test

|

||||

```

|

||||

|

||||

To run unit tests in Docker:

|

||||

|

||||

```bash

|

||||

make docker_tests

|

||||

```

|

||||

|

||||

If you add new logic, please add a unit test.

|

||||

2

.github/workflows/test.yml

vendored


@@ -31,4 +31,4 @@ jobs:

|

||||

run: poetry install

|

||||

- name: Run unit tests

|

||||

run: |

|

||||

make tests

|

||||

make test

|

||||

|

||||

8

.gitignore

vendored


@@ -106,6 +106,7 @@ celerybeat.pid


# Environments
.env
.envrc
.venv
.venvs
env/

@@ -134,3 +135,10 @@ dmypy.json


# macOS display setting files
.DS_Store

# Wandb directory
wandb/

# asdf tool versions
.tool-versions
/.ruff_cache/

44

Dockerfile

Normal file


@@ -0,0 +1,44 @@

# This is a Dockerfile for running unit tests

# Use the Python base image
FROM python:3.11.2-bullseye AS builder

# Define the version of Poetry to install (default is 1.4.2)
ARG POETRY_VERSION=1.4.2

# Define the directory to install Poetry to (default is /opt/poetry)
ARG POETRY_HOME=/opt/poetry

# Create a Python virtual environment for Poetry and install it
RUN python3 -m venv ${POETRY_HOME} && \
    $POETRY_HOME/bin/pip install --upgrade pip && \
    $POETRY_HOME/bin/pip install poetry==${POETRY_VERSION}

# Test if Poetry is installed in the expected path
RUN echo "Poetry version:" && $POETRY_HOME/bin/poetry --version

# Set the working directory for the app
WORKDIR /app

# Use a multi-stage build to install dependencies
FROM builder AS dependencies

# Copy only the dependency files for installation
COPY pyproject.toml poetry.lock poetry.toml ./

# Install the Poetry dependencies (this layer will be cached as long as the dependencies don't change)
RUN $POETRY_HOME/bin/poetry install --no-interaction --no-ansi --with test

# Use a multi-stage build to run tests
FROM dependencies AS tests

# Copy the rest of the app source code (this layer will be invalidated and rebuilt whenever the source code changes)
COPY . .

RUN /opt/poetry/bin/poetry install --no-interaction --no-ansi --with test

# Set the entrypoint to run tests using Poetry
ENTRYPOINT ["/opt/poetry/bin/poetry", "run", "pytest"]

# Set the default command to run all unit tests
CMD ["tests/unit_tests"]
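The last two Dockerfile instructions split the test invocation in two: `ENTRYPOINT` fixes the executable and `CMD` supplies default arguments, which any arguments given to `docker run` after the image name replace. The following is a minimal Python sketch of that resolution rule, assuming exec-form instructions. The function name `effective_command` and the narrowed test path are hypothetical illustrations, not part of this changeset.

```python
from typing import List, Optional


def effective_command(
    entrypoint: List[str],
    cmd: List[str],
    run_args: Optional[List[str]] = None,
) -> List[str]:
    """Model how Docker combines exec-form ENTRYPOINT and CMD.

    Arguments passed after the image name on `docker run` replace CMD.
    ENTRYPOINT is kept (overriding it with --entrypoint is not modelled).
    """
    args = run_args if run_args else cmd
    return entrypoint + args


# Values copied from the Dockerfile in this diff.
ENTRYPOINT = ["/opt/poetry/bin/poetry", "run", "pytest"]
CMD = ["tests/unit_tests"]

# No extra arguments: the default CMD is appended to the entrypoint.
default = effective_command(ENTRYPOINT, CMD)

# Hypothetical narrower run: the given path replaces CMD entirely.
narrowed = effective_command(
    ENTRYPOINT, CMD, ["tests/unit_tests/test_example.py"]
)
```

Under this model, `docker run --rm my-langchain-image:test` would run the whole unit test directory, while appending a path after the image name would restrict pytest to that path.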

43

Makefile


@@ -1,4 +1,6 @@

|

||||

.PHONY: format lint tests tests_watch integration_tests

|

||||

.PHONY: all clean format lint test tests test_watch integration_tests docker_tests help

|

||||

|

||||

all: help

|

||||

|

||||

coverage:

|

||||

poetry run pytest --cov \

|

||||

@@ -6,6 +8,8 @@ coverage:

|

||||

--cov-report xml \

|

||||

--cov-report term-missing:skip-covered

|

||||

|

||||

clean: docs_clean

|

||||

|

||||

docs_build:

|

||||

cd docs && poetry run make html

|

||||

|

||||

@@ -17,19 +21,42 @@ docs_linkcheck:

|

||||

|

||||

format:

|

||||

poetry run black .

|

||||

poetry run isort .

|

||||

poetry run ruff --select I --fix .

|

||||

|

||||

lint:

|

||||

poetry run mypy .

|

||||

poetry run black . --check

|

||||

poetry run isort . --check

|

||||

poetry run flake8 .

|

||||

PYTHON_FILES=.

|

||||

lint: PYTHON_FILES=.

|

||||

lint_diff: PYTHON_FILES=$(shell git diff --name-only --diff-filter=d master | grep -E '\.py$$')

|

||||

|

||||

lint lint_diff:

|

||||

poetry run mypy $(PYTHON_FILES)

|

||||

poetry run black $(PYTHON_FILES) --check

|

||||

poetry run ruff .

|

||||

|

||||

test:

|

||||

poetry run pytest tests/unit_tests

|

||||

|

||||

tests:

|

||||

poetry run pytest tests/unit_tests

|

||||

|

||||

tests_watch:

|

||||

test_watch:

|

||||

poetry run ptw --now . -- tests/unit_tests

|

||||

|

||||

integration_tests:

|

||||

poetry run pytest tests/integration_tests

|

||||

|

||||

docker_tests:

|

||||

docker build -t my-langchain-image:test .

|

||||

docker run --rm my-langchain-image:test

|

||||

|

||||

help:

|

||||

@echo '----'

|

||||

@echo 'coverage - run unit tests and generate coverage report'

|

||||

@echo 'docs_build - build the documentation'

|

||||

@echo 'docs_clean - clean the documentation build artifacts'

|

||||

@echo 'docs_linkcheck - run linkchecker on the documentation'

|

||||

@echo 'format - run code formatters'

|

||||

@echo 'lint - run linters'

|

||||

@echo 'test - run unit tests'

|

||||

@echo 'test_watch - run unit tests in watch mode'

|

||||

@echo 'integration_tests - run integration tests'

|

||||

@echo 'docker_tests - run unit tests in docker'

|

||||

|

||||
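The `lint_diff` rule in the Makefile hunk above builds `PYTHON_FILES` from `git diff --name-only --diff-filter=d master` piped through `grep -E '\.py$$'`: files changed relative to `master`, excluding deleted ones, keeping only paths ending in `.py`. The sketch below restates that selection in Python under stated assumptions. The function name `python_files_to_lint` and the sample paths are hypothetical, and the input is modelled as `(status, path)` pairs in the style of `git diff --name-status` rather than read from a real repository.

```python
import re
from typing import Iterable, List, Tuple

# Same pattern the Makefile passes to grep ($$ is Make's escape for $).
PY_SUFFIX = re.compile(r"\.py$")


def python_files_to_lint(changes: Iterable[Tuple[str, str]]) -> List[str]:
    """Return changed paths that still exist and end in .py.

    `changes` holds (status, path) pairs; status "D" marks a deleted
    file, which --diff-filter=d excludes.
    """
    return [
        path
        for status, path in changes
        if status != "D" and PY_SUFFIX.search(path)
    ]


# Hypothetical change list for illustration only.
sample = [
    ("M", "langchain/example_module.py"),
    ("D", "langchain/removed_module.py"),
    ("A", "docs/example.md"),
    ("A", "tests/unit_tests/test_example.py"),
]
selected = python_files_to_lint(sample)
```

In the hunk as shown, only the `mypy` and `black` lines receive `$(PYTHON_FILES)`; the `ruff` line still targets `.`, so `lint_diff` appears to narrow two of the three checks.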

13

README.md


@@ -4,9 +4,14 @@

|

||||

|

||||

[](https://github.com/hwchase17/langchain/actions/workflows/lint.yml) [](https://github.com/hwchase17/langchain/actions/workflows/test.yml) [](https://github.com/hwchase17/langchain/actions/workflows/linkcheck.yml) [](https://opensource.org/licenses/MIT) [](https://twitter.com/langchainai) [](https://discord.gg/6adMQxSpJS)

|

||||

|

||||

**Production Support:** As you move your LangChains into production, we'd love to offer more comprehensive support.

|

||||

Please fill out [this form](https://forms.gle/57d8AmXBYp8PP8tZA) and we'll set up a dedicated support Slack channel.

|

||||

|

||||

## Quick Install

|

||||

|

||||

`pip install langchain`

|

||||

or

|

||||

`conda install langchain -c conda-forge`

|

||||

|

||||

## 🤔 What is this?

|

||||

|

||||

@@ -29,7 +34,7 @@ This library is aimed at assisting in the development of those types of applicat

|

||||

|

||||

**🤖 Agents**

|

||||

|

||||

- [Documentation](https://langchain.readthedocs.io/en/latest/use_cases/agents.html)

|

||||

- [Documentation](https://langchain.readthedocs.io/en/latest/modules/agents.html)

|

||||

- End-to-end Example: [GPT+WolframAlpha](https://huggingface.co/spaces/JavaFXpert/Chat-GPT-LangChain)

|

||||

|

||||

## 📖 Documentation

|

||||

@@ -39,7 +44,7 @@ Please see [here](https://langchain.readthedocs.io/en/latest/?) for full documen

|

||||

- Getting started (installation, setting up the environment, simple examples)

|

||||

- How-To examples (demos, integrations, helper functions)

|

||||

- Reference (full API docs)

|

||||

Resources (high-level explanation of core concepts)

|

||||

- Resources (high-level explanation of core concepts)

|

||||

|

||||

## 🚀 What can this help with?

|

||||

|

||||

@@ -70,10 +75,10 @@ Memory is the concept of persisting state between calls of a chain/agent. LangCh

|

||||

|

||||

[BETA] Generative models are notoriously hard to evaluate with traditional metrics. One new way of evaluating them is using language models themselves to do the evaluation. LangChain provides some prompts/chains for assisting in this.

|

||||

|

||||

For more information on these concepts, please see our [full documentation](https://langchain.readthedocs.io/en/latest/?).

|

||||

For more information on these concepts, please see our [full documentation](https://langchain.readthedocs.io/en/latest/).

|

||||

|

||||

## 💁 Contributing

|

||||

|

||||

As an open source project in a rapidly developing field, we are extremely open to contributions, whether it be in the form of a new feature, improved infra, or better documentation.

|

||||

|

||||

For detailed information on how to contribute, see [here](CONTRIBUTING.md).

|

||||

For detailed information on how to contribute, see [here](.github/CONTRIBUTING.md).

|

||||

|

||||

BIN

docs/_static/ApifyActors.png

vendored

Normal file


Binary file not shown.

|

After Width: | Height: | Size: 559 KiB |

BIN

docs/_static/DataberryDashboard.png

vendored

Normal file


Binary file not shown.

|

After Width: | Height: | Size: 157 KiB |

BIN

docs/_static/HeliconeDashboard.png

vendored

Normal file


Binary file not shown.

|

After Width: | Height: | Size: 235 KiB |

BIN

docs/_static/HeliconeKeys.png

vendored

Normal file


Binary file not shown.

|

After Width: | Height: | Size: 148 KiB |

14

docs/_static/css/custom.css

vendored


@@ -1,3 +1,13 @@

|

||||

pre {

|

||||

white-space: break-spaces;

|

||||

}

|

||||

white-space: break-spaces;

|

||||

}

|

||||

|

||||

@media (min-width: 1200px) {

|

||||

.container,

|

||||

.container-lg,

|

||||

.container-md,

|

||||

.container-sm,

|

||||

.container-xl {

|

||||

max-width: 2560px !important;

|

||||

}

|

||||

}

|

||||

|

||||

@@ -23,13 +23,14 @@ with open("../pyproject.toml") as f:

|

||||

# -- Project information -----------------------------------------------------

|

||||

|

||||

project = "🦜🔗 LangChain"

|

||||

copyright = "2022, Harrison Chase"

|

||||

copyright = "2023, Harrison Chase"

|

||||

author = "Harrison Chase"

|

||||

|

||||

version = data["tool"]["poetry"]["version"]

|

||||

release = version

|

||||

|

||||

html_title = project + " " + version

|

||||

html_last_updated_fmt = "%b %d, %Y"

|

||||

|

||||

|

||||

# -- General configuration ---------------------------------------------------

|

||||

@@ -45,6 +46,7 @@ extensions = [

|

||||

"sphinx.ext.viewcode",

|

||||

"sphinxcontrib.autodoc_pydantic",

|

||||

"myst_nb",

|

||||

"sphinx_copybutton",

|

||||

"sphinx_panels",

|

||||

"IPython.sphinxext.ipython_console_highlighting",

|

||||

]

|

||||

|

||||
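The Sphinx configuration hunks above derive `version` from the parsed `pyproject.toml` data, build `html_title` by concatenating the project name and version, and set `html_last_updated_fmt` to a `strftime` pattern. A small Python sketch of those two computations follows, assuming the parsed data is a nested dictionary. The helper name `build_html_title`, the shortened project name, and the `0.0.0` version are placeholders for illustration, not values from the repository.

```python
from datetime import date
from typing import Any, Dict


def build_html_title(project: str, data: Dict[str, Any]) -> str:
    """Join the project name and the Poetry version, as the config does."""
    version = data["tool"]["poetry"]["version"]
    return project + " " + version


# Stand-in for the parsed pyproject.toml (placeholder version).
pyproject = {"tool": {"poetry": {"version": "0.0.0"}}}
title = build_html_title("LangChain", pyproject)

# The same pattern the config assigns to html_last_updated_fmt.
html_last_updated_fmt = "%b %d, %Y"
stamp = date(2023, 4, 1).strftime(html_last_updated_fmt)
```

With the default locale, the pattern yields an abbreviated month, a zero-padded day, and a four-digit year, for example `Apr 01, 2023`.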

@@ -22,3 +22,25 @@ This repo serves as a template for how deploy a LangChain with Gradio.

|

||||

It implements a chatbot interface, with a "Bring-Your-Own-Token" approach (nice for not racking up big bills).

|

||||

It also contains instructions for how to deploy this app on the Hugging Face platform.

|

||||

This is heavily influenced by James Weaver's [excellent examples](https://huggingface.co/JavaFXpert).

|

||||

|

||||

## [Beam](https://github.com/slai-labs/get-beam/tree/main/examples/langchain-question-answering)

|

||||

|

||||

This repo serves as a template for how to deploy a LangChain with [Beam](https://beam.cloud).

|

||||

|

||||

It implements a Question Answering app and contains instructions for deploying the app as a serverless REST API.

|

||||

|

||||

## [Vercel](https://github.com/homanp/vercel-langchain)

|

||||

|

||||

A minimal example on how to run LangChain on Vercel using Flask.

|

||||

|

||||

|

||||

## [SteamShip](https://github.com/steamship-core/steamship-langchain/)

|

||||

This repository contains LangChain adapters for Steamship, enabling LangChain developers to rapidly deploy their apps on Steamship.

|

||||

This includes: production-ready endpoints, horizontal scaling across dependencies, persistent storage of app state, multi-tenancy support, etc.

|

||||

|

||||

## [Langchain-serve](https://github.com/jina-ai/langchain-serve)

|

||||

This repository allows users to serve local chains and agents as RESTful, gRPC, or Websocket APIs thanks to [Jina](https://docs.jina.ai/). Deploy your chains & agents with ease and enjoy independent scaling, serverless and autoscaling APIs, as well as a Streamlit playground on Jina AI Cloud.

|

||||

|

||||

## [BentoML](https://github.com/ssheng/BentoChain)

|

||||

|

||||

This repository provides an example of how to deploy a LangChain application with [BentoML](https://github.com/bentoml/BentoML). BentoML is a framework that enables the containerization of machine learning applications as standard OCI images. BentoML also allows for the automatic generation of OpenAPI and gRPC endpoints. With BentoML, you can integrate models from all popular ML frameworks and deploy them as microservices running on the most optimal hardware and scaling independently.

|

||||

|

||||

293

docs/ecosystem/aim_tracking.ipynb

Normal file

@@ -0,0 +1,293 @@

|

||||

{

|

||||

"cells": [

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"source": [

|

||||

"# Aim\n",

|

||||

"\n",

|

||||

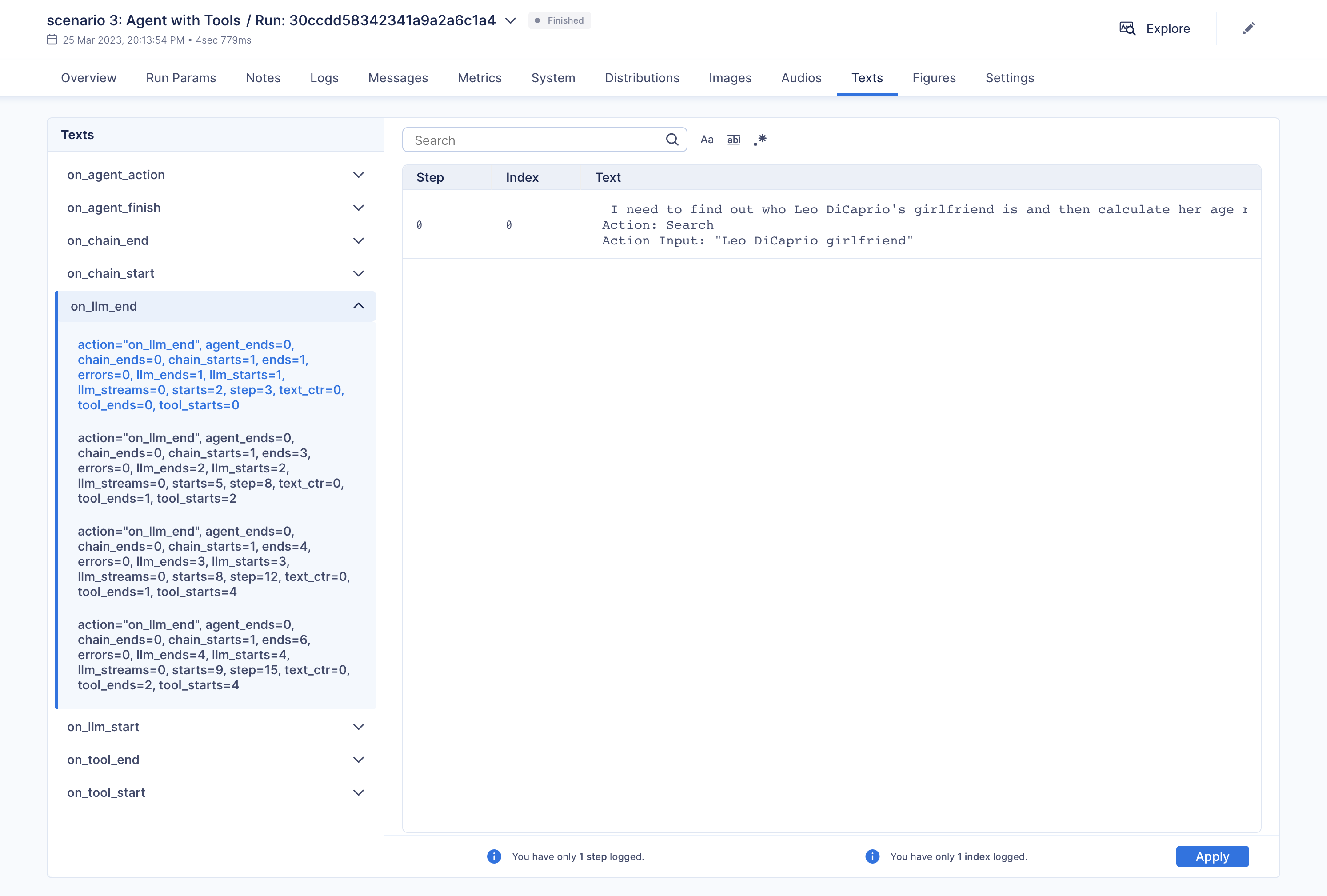

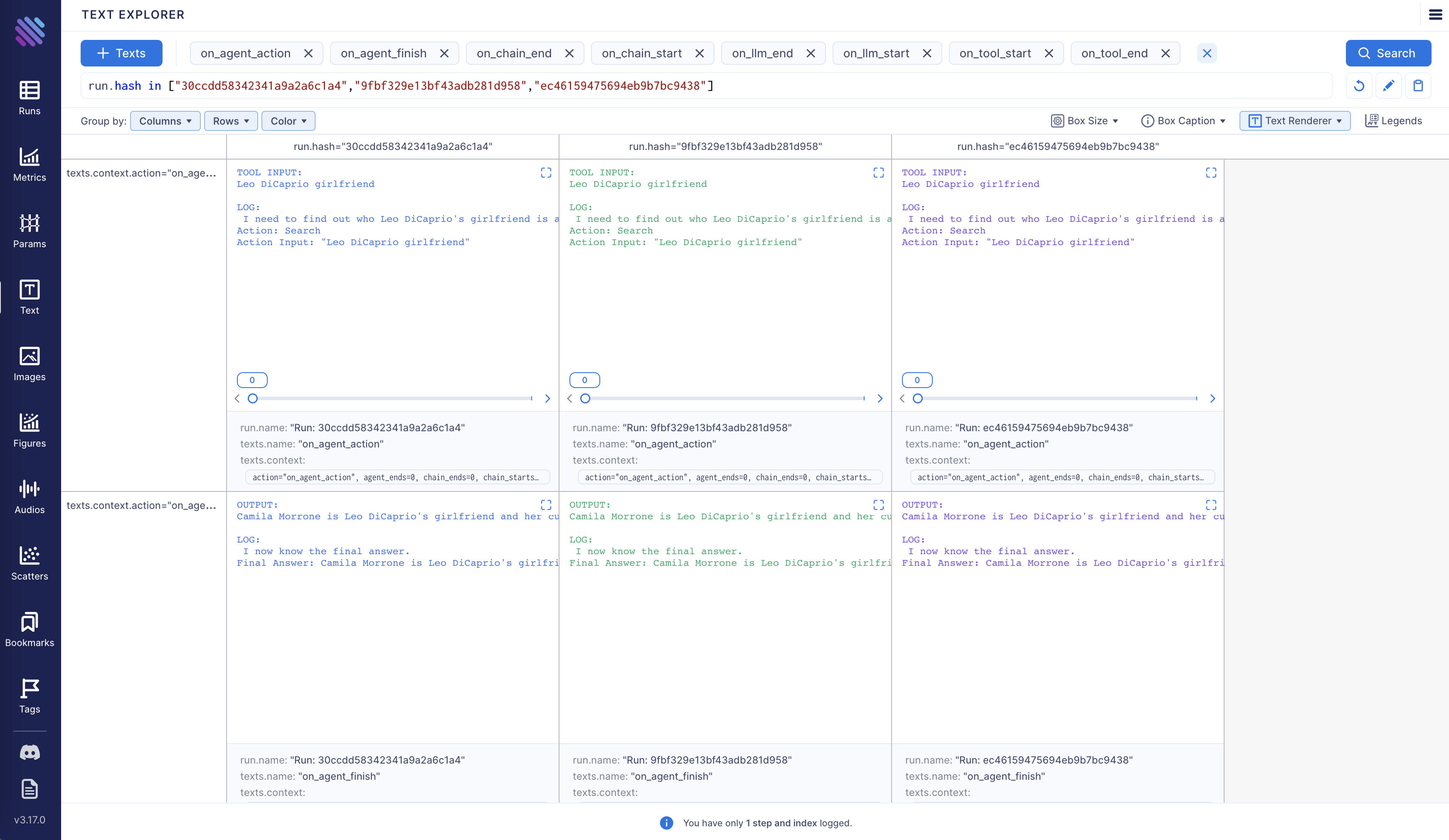

"Aim makes it super easy to visualize and debug LangChain executions. Aim tracks inputs and outputs of LLMs and tools, as well as actions of agents. \n",

|

||||

"\n",

|

||||

"With Aim, you can easily debug and examine an individual execution:\n",

|

||||

"\n",

|

||||

"\n",

|

||||

"\n",

|

||||

"Additionally, you have the option to compare multiple executions side by side:\n",

|

||||

"\n",

|

||||

"\n",

|

||||

"\n",

|

||||

"Aim is fully open source, [learn more](https://github.com/aimhubio/aim) about Aim on GitHub.\n",

|

||||

"\n",

|

||||

"Let's move forward and see how to enable and configure Aim callback."

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"source": [

|

||||

"<h3>Tracking LangChain Executions with Aim</h3>"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"source": [

|

||||

"In this notebook we will explore three usage scenarios. To start off, we will install the necessary packages and import certain modules. Subsequently, we will configure two environment variables that can be established either within the Python script or through the terminal."

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": null,

|

||||

"metadata": {

|

||||

"id": "mf88kuCJhbVu"

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

"!pip install aim\n",

|

||||

"!pip install langchain\n",

|

||||

"!pip install openai\n",

|

||||

"!pip install google-search-results"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": null,

|

||||

"metadata": {

|

||||

"id": "g4eTuajwfl6L"

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

"import os\n",

|

||||

"from datetime import datetime\n",

|

||||

"\n",

|

||||

"from langchain.llms import OpenAI\n",

|

||||

"from langchain.callbacks.base import CallbackManager\n",

|

||||

"from langchain.callbacks import AimCallbackHandler, StdOutCallbackHandler"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"source": [

|

||||

"Our examples use a GPT model as the LLM, and OpenAI offers an API for this purpose. You can obtain the key from the following link: https://platform.openai.com/account/api-keys .\n",

|

||||

"\n",

|

||||

"We will use the SerpApi to retrieve search results from Google. To acquire the SerpApi key, please go to https://serpapi.com/manage-api-key ."

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": null,

|

||||

"metadata": {

|

||||

"id": "T1bSmKd6V2If"

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

"os.environ[\"OPENAI_API_KEY\"] = \"...\"\n",

|

||||

"os.environ[\"SERPAPI_API_KEY\"] = \"...\""

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {

|

||||

"id": "QenUYuBZjIzc"

|

||||

},

|

||||

"source": [

|

||||

"The event methods of `AimCallbackHandler` accept the LangChain module or agent as input and log at least the prompts and generated results, as well as the serialized version of the LangChain module, to the designated Aim run."

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": null,

|

||||

"metadata": {

|

||||

"id": "KAz8weWuUeXF"

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

"session_group = datetime.now().strftime(\"%m.%d.%Y_%H.%M.%S\")\n",

|

||||

"aim_callback = AimCallbackHandler(\n",

|

||||

" repo=\".\",\n",

|

||||

" experiment_name=\"scenario 1: OpenAI LLM\",\n",

|

||||

")\n",

|

||||

"\n",

|

||||

"manager = CallbackManager([StdOutCallbackHandler(), aim_callback])\n",

|

||||

"llm = OpenAI(temperature=0, callback_manager=manager, verbose=True)"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {

|

||||

"id": "b8WfByB4fl6N"

|

||||

},

|

||||

"source": [

|

||||

"The `flush_tracker` function is used to record LangChain assets on Aim. By default, the session is reset rather than being terminated outright."

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"source": [

|

||||

"<h3>Scenario 1</h3> In the first scenario, we will use OpenAI LLM."

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": null,

|

||||

"metadata": {

|

||||

"id": "o_VmneyIUyx8"

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

"# scenario 1 - LLM\n",

|

||||

"llm_result = llm.generate([\"Tell me a joke\", \"Tell me a poem\"] * 3)\n",

|

||||

"aim_callback.flush_tracker(\n",

|

||||

" langchain_asset=llm,\n",

|

||||

" experiment_name=\"scenario 2: Chain with multiple SubChains on multiple generations\",\n",

|

||||

")\n"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"source": [

|

||||

"<h3>Scenario 2</h3> Scenario two involves chaining with multiple SubChains across multiple generations."

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": null,

|

||||

"metadata": {

|

||||

"id": "trxslyb1U28Y"

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

"from langchain.prompts import PromptTemplate\n",

|

||||

"from langchain.chains import LLMChain"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": null,

|

||||

"metadata": {

|

||||

"id": "uauQk10SUzF6"

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

"# scenario 2 - Chain\n",

|

||||

"template = \"\"\"You are a playwright. Given the title of play, it is your job to write a synopsis for that title.\n",

|

||||

"Title: {title}\n",

|

||||

"Playwright: This is a synopsis for the above play:\"\"\"\n",

|

||||

"prompt_template = PromptTemplate(input_variables=[\"title\"], template=template)\n",

|

||||

"synopsis_chain = LLMChain(llm=llm, prompt=prompt_template, callback_manager=manager)\n",

|

||||

"\n",

|

||||

"test_prompts = [\n",

|

||||

" {\"title\": \"documentary about good video games that push the boundary of game design\"},\n",

|

||||

" {\"title\": \"the phenomenon behind the remarkable speed of cheetahs\"},\n",

|

||||

" {\"title\": \"the best in class mlops tooling\"},\n",

|

||||

"]\n",

|

||||

"synopsis_chain.apply(test_prompts)\n",

|

||||

"aim_callback.flush_tracker(\n",

|

||||

" langchain_asset=synopsis_chain, experiment_name=\"scenario 3: Agent with Tools\"\n",

|

||||

")"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"source": [

|

||||

"<h3>Scenario 3</h3> The third scenario involves an agent with tools."

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": null,

|

||||

"metadata": {

|

||||

"id": "_jN73xcPVEpI"

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

"from langchain.agents import initialize_agent, load_tools\n",

|

||||

"from langchain.agents import AgentType"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": null,

|

||||

"metadata": {

|

||||

"colab": {

|

||||

"base_uri": "https://localhost:8080/"

|

||||

},

|

||||

"id": "Gpq4rk6VT9cu",

|

||||

"outputId": "68ae261e-d0a2-4229-83c4-762562263b66"

|

||||

},

|

||||

"outputs": [

|

||||

{

|

||||

"name": "stdout",

|

||||

"output_type": "stream",

|

||||

"text": [

|

||||

"\n",

|

||||

"\n",

|

||||

"\u001b[1m> Entering new AgentExecutor chain...\u001b[0m\n",

|

||||

"\u001b[32;1m\u001b[1;3m I need to find out who Leo DiCaprio's girlfriend is and then calculate her age raised to the 0.43 power.\n",

|

||||

"Action: Search\n",

|

||||

"Action Input: \"Leo DiCaprio girlfriend\"\u001b[0m\n",

|

||||

"Observation: \u001b[36;1m\u001b[1;3mLeonardo DiCaprio seemed to prove a long-held theory about his love life right after splitting from girlfriend Camila Morrone just months ...\u001b[0m\n",

|

||||

"Thought:\u001b[32;1m\u001b[1;3m I need to find out Camila Morrone's age\n",

|

||||

"Action: Search\n",

|

||||

"Action Input: \"Camila Morrone age\"\u001b[0m\n",

|

||||

"Observation: \u001b[36;1m\u001b[1;3m25 years\u001b[0m\n",

|

||||

"Thought:\u001b[32;1m\u001b[1;3m I need to calculate 25 raised to the 0.43 power\n",

|

||||

"Action: Calculator\n",

|

||||

"Action Input: 25^0.43\u001b[0m\n",

|

||||

"Observation: \u001b[33;1m\u001b[1;3mAnswer: 3.991298452658078\n",

|

||||

"\u001b[0m\n",

|

||||

"Thought:\u001b[32;1m\u001b[1;3m I now know the final answer\n",

|

||||

"Final Answer: Camila Morrone is Leo DiCaprio's girlfriend and her current age raised to the 0.43 power is 3.991298452658078.\u001b[0m\n",

|

||||

"\n",

|

||||

"\u001b[1m> Finished chain.\u001b[0m\n"

|

||||

]

|

||||

}

|

||||

],

|

||||

"source": [

|

||||

"# scenario 3 - Agent with Tools\n",

|

||||

"tools = load_tools([\"serpapi\", \"llm-math\"], llm=llm, callback_manager=manager)\n",

|

||||

"agent = initialize_agent(\n",

|

||||

" tools,\n",

|

||||

" llm,\n",

|

||||

" agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION,\n",

|

||||

" callback_manager=manager,\n",

|

||||

" verbose=True,\n",

|

||||

")\n",

|

||||

"agent.run(\n",

|

||||

" \"Who is Leo DiCaprio's girlfriend? What is her current age raised to the 0.43 power?\"\n",

|

||||

")\n",

|

||||

"aim_callback.flush_tracker(langchain_asset=agent, reset=False, finish=True)"

|

||||

]

|

||||

}

|

||||

],

|

||||

"metadata": {

|

||||

"accelerator": "GPU",

|

||||

"colab": {

|

||||

"provenance": []

|

||||

},

|

||||

"gpuClass": "standard",

|

||||

"kernelspec": {

|

||||

"display_name": "Python 3 (ipykernel)",

|

||||

"language": "python",

|

||||

"name": "python3"

|

||||

},

|

||||

"language_info": {

|

||||

"codemirror_mode": {

|

||||

"name": "ipython",

|

||||

"version": 3

|

||||

},

|

||||

"file_extension": ".py",

|

||||

"mimetype": "text/x-python",

|

||||

"name": "python",

|

||||

"nbconvert_exporter": "python",

|

||||

"pygments_lexer": "ipython3",

|

||||

"version": "3.9.1"

|

||||

}

|

||||

},

|

||||

"nbformat": 4,

|

||||

"nbformat_minor": 1

|

||||

}

|

||||

46

docs/ecosystem/apify.md

Normal file

@@ -0,0 +1,46 @@

|

||||

# Apify

|

||||

|

||||

This page covers how to use [Apify](https://apify.com) within LangChain.

|

||||

|

||||

## Overview

|

||||

|

||||

Apify is a cloud platform for web scraping and data extraction,

|

||||

which provides an [ecosystem](https://apify.com/store) of more than a thousand

|

||||

ready-made apps called *Actors* for various scraping, crawling, and extraction use cases.

|

||||

|

||||


|

||||

|

||||

This integration enables you to run Actors on the Apify platform and load their results into LangChain to feed your vector

|

||||

indexes with documents and data from the web, e.g. to generate answers from websites with documentation,

|

||||

blogs, or knowledge bases.

|

||||

|

||||

|

||||

## Installation and Setup

|

||||

|

||||

- Install the Apify API client for Python with `pip install apify-client`

|

||||

- Get your [Apify API token](https://console.apify.com/account/integrations) and either set it as

|

||||

an environment variable (`APIFY_API_TOKEN`) or pass it to the `ApifyWrapper` as `apify_api_token` in the constructor.

|

||||

|

||||

|

||||

## Wrappers

|

||||

|

||||

### Utility

|

||||

|

||||

You can use the `ApifyWrapper` to run Actors on the Apify platform.

|

||||

|

||||

```python

|

||||

from langchain.utilities import ApifyWrapper

|

||||

```

|

||||

|

||||

For a more detailed walkthrough of this wrapper, see [this notebook](../modules/agents/tools/examples/apify.ipynb).

|

||||

|

||||

|

||||

### Loader

|

||||

|

||||

You can also use our `ApifyDatasetLoader` to get data from an Apify dataset.

|

||||

|

||||

```python

|

||||

from langchain.document_loaders import ApifyDatasetLoader

|

||||

```

|

||||

|

||||

For a more detailed walkthrough of this loader, see [this notebook](../modules/indexes/document_loaders/examples/apify_dataset.ipynb).
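Loading a dataset usually comes down to turning each dataset item (a plain dictionary) into a document with text and metadata. The sketch below illustrates that mapping step using only the standard library. The `Doc` dataclass is a stand-in for LangChain's document type, `map_dataset_item` is a hypothetical helper, and the `text` and `url` keys are assumptions about the item shape; the linked notebook remains the reference for the loader's actual arguments.

```python
from dataclasses import dataclass, field
from typing import Any, Dict


@dataclass
class Doc:
    """Stand-in for LangChain's document type (hypothetical, stdlib-only)."""

    page_content: str
    metadata: Dict[str, Any] = field(default_factory=dict)


def map_dataset_item(item: Dict[str, Any]) -> Doc:
    """Convert one Apify dataset item into a document.

    The "text" and "url" keys are assumptions about the item shape;
    real Actors may emit different fields.
    """
    return Doc(
        page_content=item.get("text") or "",  # tolerate missing or null text
        metadata={"source": item.get("url", "")},
    )


# Toy items standing in for rows of an Apify dataset.
items = [
    {"url": "https://example.com/docs", "text": "Getting started guide."},
    {"url": "https://example.com/blog", "text": None},
]
docs = [map_dataset_item(item) for item in items]
print(docs[0].page_content)
```

A function shaped like `map_dataset_item` is the kind of callable you would hand to a loader that accepts a per-item mapping function.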

|

||||

27

docs/ecosystem/atlas.md

Normal file

@@ -0,0 +1,27 @@

|

||||

# AtlasDB

|

||||

|

||||

This page covers how to use Nomic's Atlas ecosystem within LangChain.

|

||||

It is broken into two parts: installation and setup, and then references to specific Atlas wrappers.

|

||||

|

||||

## Installation and Setup

|

||||

- Install the Python package with `pip install nomic`

|

||||

- Nomic is also included in LangChain's Poetry extras: `poetry install -E all`

|

||||

|

||||

## Wrappers

|

||||

|

||||

### VectorStore

|

||||

|

||||

There exists a wrapper around the Atlas neural database, allowing you to use it as a vectorstore.

|

||||

This vectorstore also gives you full access to the underlying AtlasProject object, which will allow you to use the full range of Atlas map interactions, such as bulk tagging and automatic topic modeling.

|

||||

Please see [the Atlas docs](https://docs.nomic.ai/atlas_api.html) for more detailed information.

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

To import this vectorstore:

|

||||

```python

|

||||

from langchain.vectorstores import AtlasDB

|

||||

```

|

||||

|

||||

For a more detailed walkthrough of the AtlasDB wrapper, see [this notebook](../modules/indexes/vectorstores/examples/atlas.ipynb)

|

||||

79

docs/ecosystem/bananadev.md

Normal file

@@ -0,0 +1,79 @@

|

||||

# Banana

|

||||

|

||||

This page covers how to use the Banana ecosystem within LangChain.

|

||||

It is broken into two parts: installation and setup, and then references to specific Banana wrappers.

|

||||

|

||||

## Installation and Setup

|

||||

|

||||

- Install with `pip install banana-dev`

|

||||

- Get a Banana API key and set it as an environment variable (`BANANA_API_KEY`)
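
Since the key is read from the environment, it can help to fail early with a clear message when it is missing. The following is a minimal sketch; `require_env` is a hypothetical helper, not part of LangChain or the Banana SDK, and the optional `env` argument exists only so the example runs without touching your real environment.

```python
import os
from typing import Mapping, Optional


def require_env(name: str, env: Optional[Mapping[str, str]] = None) -> str:
    """Return the value of an environment variable or raise a clear error.

    `env` defaults to os.environ; passing a dict makes the helper easy to test.
    """
    source = os.environ if env is None else env
    value = source.get(name, "").strip()
    if not value:
        raise RuntimeError(f"{name} is not set; export it before using the wrapper")
    return value


# Demonstration with a fake environment (placeholder value, not a real key).
fake_env = {"BANANA_API_KEY": "placeholder-key"}
print(require_env("BANANA_API_KEY", fake_env))
```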

|

||||

|

||||

## Define your Banana Template

|

||||

|

||||

If you want to use an available language model template you can find one [here](https://app.banana.dev/templates/conceptofmind/serverless-template-palmyra-base).

|

||||

This template uses the Palmyra-Base model by [Writer](https://writer.com/product/api/).

|

||||

You can check out an example Banana repository [here](https://github.com/conceptofmind/serverless-template-palmyra-base).

|

||||

|

||||

## Build the Banana app

|

||||

|

||||

Banana apps must include the `output` key in the returned JSON.

|

||||

There is a rigid response structure.

|

||||

|

||||

```python

|

||||

# Return the results as a dictionary

|

||||

result = {'output': result}

|

||||

```

|

||||

|

||||

An example inference function would be:

|

||||

|

||||

```python

|

||||

def inference(model_inputs: dict) -> dict:
    global model
    global tokenizer

    # Parse out your arguments
    prompt = model_inputs.get('prompt', None)
    if prompt is None:
        return {'message': "No prompt provided"}

    # Run the model
    input_ids = tokenizer.encode(prompt, return_tensors='pt').cuda()
    output = model.generate(
        input_ids,
        max_length=100,
        do_sample=True,
        top_k=50,
        top_p=0.95,
        num_return_sequences=1,
        temperature=0.9,
        early_stopping=True,
        no_repeat_ngram_size=3,
        num_beams=5,
        length_penalty=1.5,
        repetition_penalty=1.5,
        bad_words_ids=[[tokenizer.encode(' ', add_prefix_space=True)[0]]]
    )

    result = tokenizer.decode(output[0], skip_special_tokens=True)
    # Return the results as a dictionary
    result = {'output': result}
    return result

|

||||

```

|

||||

|

||||

You can find a full example of a Banana app [here](https://github.com/conceptofmind/serverless-template-palmyra-base/blob/main/app.py).
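
Because the response structure is rigid, a small check on the returned dictionary can catch a missing `output` key before it causes trouble downstream. This is a minimal sketch under that assumption; `validate_banana_response` is a hypothetical helper and is not part of Banana or LangChain.

```python
from typing import Any, Dict


def validate_banana_response(response: Dict[str, Any]) -> str:
    """Return the model text, or raise if the 'output' key is missing."""
    if not isinstance(response, dict) or "output" not in response:
        raise ValueError(f"expected a dict with an 'output' key, got: {response!r}")
    return str(response["output"])


# A well-formed response passes through.
print(validate_banana_response({"output": "Hello from the model"}))

# An error-style payload without 'output' is rejected.
try:
    validate_banana_response({"message": "No prompt provided"})
except ValueError as err:
    print("rejected:", err)
```

Note that an early return such as `{'message': "No prompt provided"}` does not carry an `output` key, so a check like this would flag it.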

|

||||

|

||||

## Wrappers

|

||||

|

||||

### LLM

|

||||

|

||||

There exists a Banana LLM wrapper, which you can access with

|

||||

|

||||

```python

|

||||

from langchain.llms import Banana

|

||||

```

|

||||

|

||||

You need to provide a model key located in the dashboard:

|

||||

|

||||

```python

|

||||

llm = Banana(model_key="YOUR_MODEL_KEY")

|

||||

```

|

||||

17

docs/ecosystem/cerebriumai.md

Normal file

@@ -0,0 +1,17 @@

|

||||

# CerebriumAI

|

||||

|

||||

This page covers how to use the CerebriumAI ecosystem within LangChain.

|

||||

It is broken into two parts: installation and setup, and then references to specific CerebriumAI wrappers.

|

||||

|

||||

## Installation and Setup

|

||||

- Install with `pip install cerebrium`

|

||||

- Get a CerebriumAI API key and set it as an environment variable (`CEREBRIUMAI_API_KEY`)

|

||||

|

||||

## Wrappers

|

||||

|

||||

### LLM

|

||||

|

||||

There exists a CerebriumAI LLM wrapper, which you can access with

|

||||

```python

|

||||

from langchain.llms import CerebriumAI

|

||||

```

|

||||

20

docs/ecosystem/chroma.md

Normal file

@@ -0,0 +1,20 @@

|

||||

# Chroma

|

||||

|

||||

This page covers how to use the Chroma ecosystem within LangChain.

|

||||

It is broken into two parts: installation and setup, and then references to specific Chroma wrappers.

|

||||

|

||||

## Installation and Setup

|

||||

- Install the Python package with `pip install chromadb`

|

||||

## Wrappers

|

||||

|

||||

### VectorStore

|

||||

|

||||

There exists a wrapper around Chroma vector databases, allowing you to use it as a vectorstore,

|

||||

whether for semantic search or example selection.

|

||||

|

||||

To import this vectorstore:

|

||||

```python

|

||||

from langchain.vectorstores import Chroma

|

||||

```

|

||||

|

||||

For a more detailed walkthrough of the Chroma wrapper, see [this notebook](../modules/indexes/vectorstores/getting_started.ipynb)
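
To give a feel for what semantic search over a vectorstore does conceptually, here is a toy, standard-library sketch that ranks stored vectors by cosine similarity to a query vector. It is not Chroma's implementation or API; `cosine_similarity`, `top_k`, and the hand-written three-dimensional "embeddings" are all illustrative assumptions.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(
    query: Sequence[float], store: Dict[str, Sequence[float]], k: int = 2
) -> List[Tuple[str, float]]:
    """Return the k stored texts whose vectors are most similar to the query."""
    scored = [(text, cosine_similarity(query, vec)) for text, vec in store.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


# Toy "embeddings"; a real vectorstore would compute these with an embedding model.
store = {
    "cats purr": [1.0, 0.0, 0.0],
    "dogs bark": [0.0, 1.0, 0.0],
    "kittens meow": [0.9, 0.1, 0.0],
}
results = top_k([1.0, 0.0, 0.0], store, k=2)
print([text for text, _ in results])
```

A real vectorstore adds persistence, indexing, and an embedding model on top of this basic ranking idea.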

|

||||

589

docs/ecosystem/clearml_tracking.ipynb

Normal file

@@ -0,0 +1,589 @@

|

||||

{

|

||||

"cells": [

|

||||

{

|

||||

"attachments": {},

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"source": [

|

||||

"# ClearML Integration\n",

|

||||

"\n",

|

||||

"In order to properly keep track of your langchain experiments and their results, you can enable the ClearML integration. ClearML is an experiment manager that neatly tracks and organizes all your experiment runs.\n",

|

||||

"\n",

|

||||