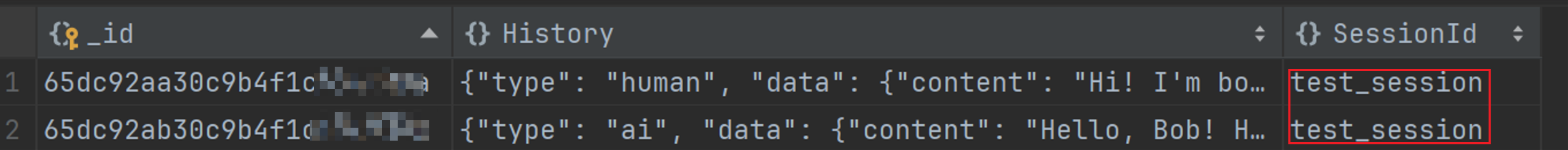

I tried to configure MongoDBChatMessageHistory using the code from the

original documentation to store messages based on the passed session_id

in MongoDB. However, this configuration did not take effect, and the

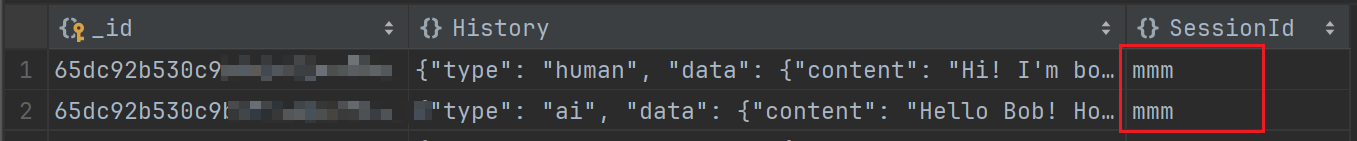

session id in the database remained as 'test_session'. To resolve this

issue, I found that when configuring MongoDBChatMessageHistory, it is

necessary to set session_id=session_id instead of

session_id=test_session.

Issue: DOC: Ineffective Configuration of MongoDBChatMessageHistory for Custom session_id Storage

Previous code:

```python
chain_with_history = RunnableWithMessageHistory(
    chain,
    lambda session_id: MongoDBChatMessageHistory(
        session_id="test_session",
        connection_string="mongodb://root:Y181491117cLj@123.56.224.232:27017",
        database_name="my_db",
        collection_name="chat_histories",
    ),
    input_messages_key="question",
    history_messages_key="history",
)

config = {"configurable": {"session_id": "mmm"}}
chain_with_history.invoke({"question": "Hi! I'm bob"}, config)
```

Modified code:

```python
chain_with_history = RunnableWithMessageHistory(
    chain,
    lambda session_id: MongoDBChatMessageHistory(
        session_id=session_id,  # changed: forward the session_id passed to the factory
        connection_string="mongodb://root:Y181491117cLj@123.56.224.232:27017",
        database_name="my_db",
        collection_name="chat_histories",
    ),
    input_messages_key="question",
    history_messages_key="history",
)

config = {"configurable": {"session_id": "mmm"}}
chain_with_history.invoke({"question": "Hi! I'm bob"}, config)
```
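The difference between the two snippets can be reproduced without MongoDB. The following is a minimal sketch, not LangChain code: `FakeHistory`, `STORE`, and `run_with_factory` are hypothetical stand-ins, and the sketch assumes the wrapper calls the factory with the `session_id` taken from `config["configurable"]`.

```python
from typing import Callable, Dict, List

# In-memory stand-in for the MongoDB collection: session_id -> messages.
STORE: Dict[str, List[str]] = {}


class FakeHistory:
    """Hypothetical stand-in for MongoDBChatMessageHistory."""

    def __init__(self, session_id: str) -> None:
        self.session_id = session_id

    def add_message(self, text: str) -> None:
        STORE.setdefault(self.session_id, []).append(text)


def run_with_factory(
    factory: Callable[[str], FakeHistory], config: dict, text: str
) -> None:
    # Assumed behavior: the wrapper hands the configured session_id to the factory.
    session_id = config["configurable"]["session_id"]
    factory(session_id).add_message(text)


config = {"configurable": {"session_id": "mmm"}}

# This factory ignores its argument, so the message lands under "test_session".
run_with_factory(
    lambda session_id: FakeHistory(session_id="test_session"), config, "Hi! I'm bob"
)

# This factory forwards its argument, so the message lands under "mmm".
run_with_factory(
    lambda session_id: FakeHistory(session_id=session_id), config, "Hi! I'm bob"
)

print(sorted(STORE))
```

The hard-coded value wins because the factory is the only place where the session id reaches the history object; whatever the config contains is discarded unless the lambda uses its parameter.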

Effect after modification (it works):

**Description:** Update the azure search notebook to have more

descriptive comments, and an option to choose between OpenAI and

AzureOpenAI Embeddings

---------

Co-authored-by: Matt Gotteiner <[email protected]>

Co-authored-by: Bagatur <baskaryan@gmail.com>

**Description:** Callback handler to integrate fiddler with langchain.

This PR adds the following -

1. `FiddlerCallbackHandler` implementation into langchain/community

2. Example notebook `fiddler.ipynb` for usage documentation

[Internal Tracker : FDL-14305]

**Issue:**

NA

**Dependencies:**

- Installation of langchain-community is unaffected.

- Usage of FiddlerCallbackHandler requires installation of latest

fiddler-client (2.5+)

**Twitter handle:** @fiddlerlabs @behalder

Co-authored-by: Barun Halder <barun@fiddler.ai>

**Description:** Initial pull request for Kinetica LLM wrapper

**Issue:** N/A

**Dependencies:** No new dependencies for unit tests. Integration tests

require gpudb, typeguard, and faker

**Twitter handle:** @chad_juliano

Note: There is another pull request for Kinetica vectorstore. Ultimately

we would like to make a partner package but we are starting with a

community contribution.

- **Description:** Update the Azure Search vector store notebook for the

latest version of the SDK

---------

Co-authored-by: Matt Gotteiner <[email protected]>

**Description:** Clean up Google product names and fix document loader

section

**Issue:** NA

**Dependencies:** None

---------

Co-authored-by: Bagatur <baskaryan@gmail.com>

- **Description:** Update IBM watsonx.ai docs and add IBM to the provider docs

- **Dependencies:**

[ibm-watsonx-ai](https://pypi.org/project/ibm-watsonx-ai/),

- **Tag maintainer:** :

Please make sure your PR is passing linting and testing before

submitting. Run `make format`, `make lint` and `make test` to check this

locally. ✅

**Description:** This PR changes the module import path for SQLDatabase

in the documentation

**Issue:** Updates the documentation to reflect the move of integrations

to langchain-community

- **Description:** The URL in the tigris tutorial was htttps instead of

https, leading to a bad link.

- **Issue:** N/A

- **Dependencies:** N/A

- **Twitter handle:** Speucey

In this pull request, we introduce the add_images method to the

SingleStoreDB vector store class, expanding its capabilities to handle

multi-modal embeddings seamlessly. This method facilitates the

incorporation of image data into the vector store by associating each

image's URI with corresponding document content, metadata, and either

pre-generated embeddings or embeddings computed using the embed_image

method of the provided embedding object.

The change includes integration tests validating the behavior of `add_images`. Additionally, we provide a notebook showcasing the usage of this new method.

---------

Co-authored-by: Volodymyr Tkachuk <vtkachuk-ua@singlestore.com>

Description:

In this PR, I am adding a PolygonTickerNews Tool, which can be used to

get the latest news for a given ticker / stock.

Twitter handle: [@virattt](https://twitter.com/virattt)

**Description**: CogniSwitch focusses on making GenAI usage more

reliable. It abstracts out the complexity & decision making required for

tuning processing, storage & retrieval. Using simple APIs documents /

URLs can be processed into a Knowledge Graph that can then be used to

answer questions.

**Dependencies**: No dependencies. Just network calls & API key required

**Tag maintainer**: @hwchase17

**Twitter handle**: https://github.com/CogniSwitch

**Documentation**: Please check

`docs/docs/integrations/toolkits/cogniswitch.ipynb`

**Tests**: The usual tool & toolkits tests using `test_imports.py`

PR has passed linting and testing before this submission.

---------

Co-authored-by: Saicharan Sridhara <145636106+saiCogniswitch@users.noreply.github.com>

Hi, I'm from the LanceDB team.

Improves LanceDB integration by making it easier to use - now you aren't

required to create tables manually and pass them in the constructor,

although that is still backward compatible.

Bug fix - pandas was being used even though it's not a dependency of LanceDB or langchain.

PS - this issue was raised a few months ago but lost traction. It is a feature improvement for our users. Kindly review this. Thanks!

This PR replaces the imports of the Astra DB vector store with the

newly-released partner package, in compliance with the deprecation

notice now attached to the community "legacy" store.

**Description:** This PR introduces a new "Astra DB" Partner Package.

So far only the vector store class is _duplicated_ there, all others

following once this is validated and established.

Along with the move to separate package, incidentally, the class name

will change `AstraDB` => `AstraDBVectorStore`.

The strategy has been to duplicate the module (with prospected removal

from community at LangChain 0.2). Until then, the code will be kept in

sync with minimal, known differences (there is a makefile target to

automate drift control. Out of convenience with this check, the

community package has a class `AstraDBVectorStore` aliased to `AstraDB`

at the end of the module).

With this PR, several bug fixes and improvements come to the vector store, as well as a reshuffling of the doc pages/notebooks (Astra and Cassandra) to align with the move to a separate package.

**Dependencies:** A brand new pyproject.toml in the new package, no

changes otherwise.

**Twitter handle:** `@rsprrs`

---------

Co-authored-by: Christophe Bornet <cbornet@hotmail.com>

Co-authored-by: Erick Friis <erick@langchain.dev>

This PR adds support for NVIDIA NeMo embeddings (issue #16095).

---------

Co-authored-by: Praveen Nakshatrala <pnakshatrala@gmail.com>

Co-authored-by: Harrison Chase <hw.chase.17@gmail.com>

Co-authored-by: Bagatur <baskaryan@gmail.com>

1. integrate with

[`Yuan2.0`](https://github.com/IEIT-Yuan/Yuan-2.0/blob/main/README-EN.md)

2. update `langchain.llms`

3. add a new doc for [Yuan2.0

integration](docs/docs/integrations/llms/yuan2.ipynb)

---------

Co-authored-by: Harrison Chase <hw.chase.17@gmail.com>

Co-authored-by: Bagatur <baskaryan@gmail.com>

This pull request introduces support for various Approximate Nearest

Neighbor (ANN) vector index algorithms in the VectorStore class,

starting from version 8.5 of SingleStore DB. Leveraging this enhancement

enables users to harness the power of vector indexing, significantly

boosting search speed, particularly when handling large sets of vectors.

---------

Co-authored-by: Volodymyr Tkachuk <vtkachuk-ua@singlestore.com>

Co-authored-by: Bagatur <baskaryan@gmail.com>

Thank you for contributing to LangChain!

Checklist:

- **PR title**: docs: add & update docs for Oracle Cloud Infrastructure

(OCI) integrations

- **Description**: adding and updating documentation for two

integrations - OCI Generative AI & OCI Data Science

(1) adding integration page for OCI Generative AI embeddings (@baskaryan

request,

docs/docs/integrations/text_embedding/oci_generative_ai.ipynb)

(2) updating integration page for OCI Generative AI llms

(docs/docs/integrations/llms/oci_generative_ai.ipynb)

(3) adding platform documentation for OCI (@baskaryan request,

docs/docs/integrations/platforms/oci.mdx). this combines the

integrations of OCI Generative AI & OCI Data Science

(4) if possible, requesting to be added to 'Featured Community

Providers' so supplying a modified

docs/docs/integrations/platforms/index.mdx to reflect the addition

- **Issue:** none

- **Dependencies:** no new dependencies

- **Twitter handle:**

---------

Co-authored-by: MING KANG <ming.kang@oracle.com>

1. integrate chat models with

[`Yuan2.0`](https://github.com/IEIT-Yuan/Yuan-2.0/blob/main/README-EN.md)

2. add a new doc for [Yuan2.0

integration](docs/docs/integrations/llms/yuan2.ipynb)

Yuan2.0 is a new generation Fundamental Large Language Model developed

by IEIT System. We have published all three models, Yuan 2.0-102B, Yuan

2.0-51B, and Yuan 2.0-2B.

---------

Co-authored-by: Bagatur <baskaryan@gmail.com>

## Description

I am submitting this for a school project as part of a team of 5. Other

team members are @LeilaChr, @maazh10, @Megabear137, @jelalalamy. This PR

also has contributions from community members @Harrolee and @Mario928.

Initial context is in the issue we opened (#11229).

This pull request adds:

- Generic framework for expanding the languages that `LanguageParser`

can handle, using the

[tree-sitter](https://github.com/tree-sitter/py-tree-sitter#py-tree-sitter)

parsing library and existing language-specific parsers written for it

- Support for the following additional languages in `LanguageParser`:

- C

- C++

- C#

- Go

- Java (contributed by @Mario928

https://github.com/ThatsJustCheesy/langchain/pull/2)

- Kotlin

- Lua

- Perl

- Ruby

- Rust

- Scala

- TypeScript (contributed by @Harrolee

https://github.com/ThatsJustCheesy/langchain/pull/1)

Here is the [design

document](https://docs.google.com/document/d/17dB14cKCWAaiTeSeBtxHpoVPGKrsPye8W0o_WClz2kk)

if curious, but no need to read it.

## Issues

- Closes #11229

- Closes #10996

- Closes #8405

## Dependencies

`tree_sitter` and `tree_sitter_languages` on PyPI. We have tried to add

these as optional dependencies.

## Documentation

We have updated the list of supported languages, and also added a

section to `source_code.ipynb` detailing how to add support for

additional languages using our framework.

## Maintainer

- @hwchase17 (previously reviewed

https://github.com/langchain-ai/langchain/pull/6486)

Thanks!!

## Git commits

We will gladly squash any/all of our commits (esp merge commits) if

necessary. Let us know if this is desirable, or if you will be

squash-merging anyway.

<!-- Thank you for contributing to LangChain!

Replace this entire comment with:

- **Description:** a description of the change,

- **Issue:** the issue # it fixes (if applicable),

- **Dependencies:** any dependencies required for this change,

- **Tag maintainer:** for a quicker response, tag the relevant

maintainer (see below),

- **Twitter handle:** we announce bigger features on Twitter. If your PR

gets announced, and you'd like a mention, we'll gladly shout you out!

Please make sure your PR is passing linting and testing before

submitting. Run `make format`, `make lint` and `make test` to check this

locally.

See contribution guidelines for more information on how to write/run

tests, lint, etc:

https://github.com/langchain-ai/langchain/blob/master/.github/CONTRIBUTING.md

If you're adding a new integration, please include:

1. a test for the integration, preferably unit tests that do not rely on

network access,

2. an example notebook showing its use. It lives in `docs/extras`

directory.

If no one reviews your PR within a few days, please @-mention one of

@baskaryan, @eyurtsev, @hwchase17.

-->

---------

Co-authored-by: Maaz Hashmi <mhashmi373@gmail.com>

Co-authored-by: LeilaChr <87657694+LeilaChr@users.noreply.github.com>

Co-authored-by: Jeremy La <jeremylai511@gmail.com>

Co-authored-by: Megabear137 <zubair.alnoor27@gmail.com>

Co-authored-by: Lee Harrold <lhharrold@sep.com>

Co-authored-by: Mario928 <88029051+Mario928@users.noreply.github.com>

Co-authored-by: Bagatur <baskaryan@gmail.com>

Co-authored-by: Harrison Chase <hw.chase.17@gmail.com>

- **Description:** Pebblo opensource project enables developers to

safely load data to their Gen AI apps. It identifies semantic topics and

entities found in the loaded data and summarizes them in a

developer-friendly report.

- **Dependencies:** none

- **Twitter handle:** srics

@hwchase17

**Description:** This PR adds support for

[flashrank](https://github.com/PrithivirajDamodaran/FlashRank) for

reranking as alternative to Cohere.

I'm not sure `libs/langchain` is the right place for this change. At

first, I wanted to put it under `libs/community`. All the compressors

were under `libs/langchain/retrievers/document_compressors` though. Hope

this makes sense!

- Reordered sections

- Applied consistent formatting

- Fixed headers (there were 2 H1 headers; this breaks ToC)

- Added `Settings` header and moved all related sections under it

Description: Updated doc for integrations/chat/anthropic_functions with

new functions: invoke. Changed structure of the document to match the

required one.

Issue: https://github.com/langchain-ai/langchain/issues/15664

Dependencies: None

Twitter handle: None

---------

Co-authored-by: NaveenMaltesh <naveen@onmeta.in>

- **Description:** Adds the document loader for [AWS

Athena](https://aws.amazon.com/athena/), a serverless and interactive

analytics service.

- **Dependencies:** Added boto3 as a dependency

This PR updates the `TF-IDF.ipynb` documentation to reflect the new

import path for TFIDFRetriever in the langchain-community package. The

previous path, `from langchain.retrievers import TFIDFRetriever`, has

been updated to `from langchain_community.retrievers import

TFIDFRetriever` to align with the latest changes in the langchain

library.

according to https://youtu.be/rZus0JtRqXE?si=aFo1JTDnu5kSEiEN&t=678 by

@efriis

- **Description:** Seems the requirements for tool names have changed

and spaces are no longer allowed. Changed the tool name from Google

Search to google_search in the notebook

- **Issue:** n/a

- **Dependencies:** none

- **Twitter handle:** @mesirii
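For notebooks that still use display-style labels, a small helper can derive a space-free name. This is only a sketch: `to_tool_name` is a hypothetical helper, and the exact naming rule enforced for tools is an assumption here (the description above only states that spaces are no longer allowed).

```python
import re


def to_tool_name(label: str) -> str:
    """Turn a human-readable label into a lowercase, underscore-separated name."""
    # Collapse every run of non-alphanumeric characters into a single underscore.
    name = re.sub(r"[^0-9A-Za-z]+", "_", label.strip())
    return name.strip("_").lower()


print(to_tool_name("Google Search"))
```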

**Description**

Make some functions work with Milvus:

1. get_ids: Get primary keys by field in the metadata

2. delete: Delete one or more entities by ids

3. upsert: Update/Insert one or more entities

**Issue**

None

**Dependencies**

None

**Tag maintainer:**

@hwchase17

**Twitter handle:**

None

---------

Co-authored-by: HoaNQ9 <hoanq.1811@gmail.com>

Co-authored-by: Erick Friis <erick@langchain.dev>

- **Issue:** Issue with model argument support (been there for a while

actually):

- Non-specially-handled arguments like temperature don't work when

passed through constructor.

- Such arguments DO work quite well with `bind`, but also do not abide

by field requirements.

- Since initial push, server-side error messages have gotten better and

v0.0.2 raises better exceptions. So maybe it's better to let server-side

handle such issues?

- **Description:**

- Removed ChatNVIDIA's argument fields in favor of

`model_kwargs`/`model_kws` arguments which aggregates constructor kwargs

(from constructor pathway) and merges them with call kwargs (bind

pathway).

- Shuffled a few functions from `_NVIDIAClient` to `ChatNVIDIA` to

streamline construction for future integrations.

- Minor/Optional: Old services didn't have stop support, so client-side

stopping was implemented. Now do both.

- **Any Breaking Changes:** Minor breaking changes if you strongly rely

on chat_model.temperature, etc. This is captured by

chat_model.model_kwargs.

PR passes tests and example notebooks and example testing. Still gonna

chat with some people, so leaving as draft for now.

---------

Co-authored-by: Erick Friis <erick@langchain.dev>
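The merge of constructor kwargs with call kwargs described in this PR can be pictured with a small sketch. `ChatModelSketch` and `build_payload` are hypothetical names, not the ChatNVIDIA implementation, and the precedence shown (call-time values override constructor values) is an assumption.

```python
from typing import Any, Dict


class ChatModelSketch:
    """Hypothetical stand-in; not the ChatNVIDIA implementation."""

    def __init__(self, **model_kwargs: Any) -> None:
        # Constructor pathway: keep every extra keyword argument as-is.
        self.model_kwargs: Dict[str, Any] = dict(model_kwargs)

    def build_payload(self, prompt: str, **call_kwargs: Any) -> Dict[str, Any]:
        # Bind/call pathway: later entries win, so call kwargs override
        # the values captured by the constructor.
        return {"prompt": prompt, **self.model_kwargs, **call_kwargs}


model = ChatModelSketch(temperature=0.2, top_p=0.9)
payload = model.build_payload("Hello", temperature=0.7)
print(payload)
```

Keeping everything in one dictionary means no per-field validation happens on the client, which matches the idea above of letting the server report invalid arguments.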


- **Description: changes to you.com files**

- general cleanup

- adds community/utilities/you.py, moving bulk of code from retriever ->

utility

- removes `snippet` as endpoint

- adds `news` as endpoint

- adds more tests

<s>**Description: update community MAKE file**

- adds `integration_tests`

- adds `coverage`</s>

- **Issue:**

- [For New Contributors: Update Integration

Documentation](https://github.com/langchain-ai/langchain/issues/15664#issuecomment-1920099868)

- **Dependencies:** n/a

- **Twitter handle:** @scottnath

- **Mastodon handle:** scottnath@mastodon.social

---------

Co-authored-by: Bagatur <baskaryan@gmail.com>

Ran

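```python
import glob
import re


def update_prompt(x):
    # Rewrite PromptTemplate(template=..., input_variables=...) calls
    # into the shorter PromptTemplate.from_template(...) form.
    return re.sub(
        r"(?P<start>\b)PromptTemplate\(template=(?P<template>.*), input_variables=(?:.*)\)",
        r"\g<start>PromptTemplate.from_template(\g<template>)",
        x,
    )


for fn in glob.glob("docs/**/*", recursive=True):
    try:
        with open(fn) as f:
            content = f.readlines()
    except Exception:
        # Skip directories and files that cannot be read as text.
        continue
    content = [update_prompt(l) for l in content]
    with open(fn, "w") as f:
        f.write("".join(content))
```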

Several notebooks have Title != file name. That results in corrupted

sorting in Navbar (ToC).

- Fixed titles and file names.

- Changed text formats to the consistent form

- Redirected renamed files in the `Vercel.json`

### Description

Adds support for loading the content of any GitHub file, selected by file extension.

Why not use the [git loader](https://python.langchain.com/docs/integrations/document_loaders/git#load-existing-repository-from-disk)?

The git loader clones the whole repository even when only some of the files are of interest, which is too heavy. `GithubFileLoader` downloads only the files you are interested in.
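Selecting files by extension before downloading can be sketched as a simple path filter. The names `select_paths` and `file_filter` are hypothetical and used only for illustration; the loader's actual parameters are not shown in this description.

```python
from typing import Callable, Iterable, List


def select_paths(paths: Iterable[str], file_filter: Callable[[str], bool]) -> List[str]:
    """Keep only the repository paths the caller is interested in."""
    return [p for p in paths if file_filter(p)]


# Example listing of repository paths (made up for this sketch).
repo_paths = ["README.md", "docs/intro.md", "src/main.py", "assets/logo.png"]

# Only Markdown files would be downloaded; everything else is skipped.
markdown_only = select_paths(repo_paths, lambda p: p.endswith(".md"))
print(markdown_only)
```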

### Twitter handle

my twitter: @shufanhaotop

---------

Co-authored-by: Hao Fan <h_fan@apple.com>

Co-authored-by: Bagatur <baskaryan@gmail.com>

**Description:** Link to the Brave Website added to the

`brave-search.ipynb` notebook.

This notebook is shown in the docs as an example for the brave tool.

**Issue:** There was no reference on where / how to get an API key

**Dependencies:** none

**Twitter handle:** not for this one :)

- **Description:** docs: update StreamlitCallbackHandler example.

- **Issue:** None

- **Dependencies:** None

I have updated the example for StreamlitCallbackHandler in the documentation below.

https://python.langchain.com/docs/integrations/callbacks/streamlit

Previously, the example used `initialize_agent`, which has been deprecated, so I've updated it to use `create_react_agent` instead. Many langchain users are likely searching for examples of combining `create_react_agent` or `openai_tools_agent_chain` with StreamlitCallbackHandler. I'm sure this update will be really helpful for them!

Unfortunately, writing unit tests for this example is difficult, so I

have not written any tests. I have run this code in a standalone Python

script file and ensured it runs correctly.

- **Description:** Adds an additional class variable to `BedrockBase`

called `provider` that allows sending a model provider such as amazon,

cohere, ai21, etc.

Up until now, the model provider is extracted from the `model_id` using

the first part before the `.`, such as `amazon` for

`amazon.titan-text-express-v1` (see [supported list of Bedrock model IDs

here](https://docs.aws.amazon.com/bedrock/latest/userguide/model-ids-arns.html)).

But for custom Bedrock models where the ARN of the provisioned throughput must be supplied, the `model_id` looks like `arn:aws:bedrock:...`, so the provider cannot be extracted from it. A model `provider` is required by the LangChain Bedrock class to perform model-based processing. Passing this `provider` argument allows the same processing to be performed for custom models of a specific base model type.

The alternative considered here was the use of

`provider.arn:aws:bedrock:...` which then requires ARN to be extracted

and passed separately when invoking the model. The proposed solution

here is simpler and also does not cause issues for current models

already using the Bedrock class.

- **Issue:** N/A

- **Dependencies:** N/A

---------

Co-authored-by: Piyush Jain <piyushjain@duck.com>
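The provider lookup described in the Bedrock PR above can be sketched as follows. `resolve_provider` is a hypothetical helper, not the `BedrockBase` code, and raising an error for an ARN without an explicit provider is an assumption made for this illustration.

```python
from typing import Optional


def resolve_provider(model_id: str, provider: Optional[str] = None) -> str:
    """Hypothetical helper: pick the model provider for a Bedrock model."""
    if provider:
        # An explicit provider always wins (needed for ARN-style model IDs).
        return provider
    if model_id.startswith("arn:"):
        # Assumption: an ARN carries no usable provider prefix.
        raise ValueError("provider must be given when model_id is an ARN")
    # Default: the part of the model ID before the first ".".
    return model_id.split(".")[0]


print(resolve_provider("amazon.titan-text-express-v1"))
print(resolve_provider("arn:aws:bedrock:...", provider="amazon"))
```

Existing callers that pass a regular model ID keep the same behavior, since the explicit argument is only consulted when it is supplied.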

- **Description:** Several meta/usability updates, including User-Agent.

- **Issue:**

- User-Agent metadata for tracking connector engagement. @milesial

please check and advise.

- Better error messages. Tries harder to find a request ID. @milesial

requested.

- Client-side image resizing for multimodal models. Hope to upgrade to

Assets API solution in around a month.

- `client.payload_fn` allows you to modify payload before network

request. Use-case shown in doc notebook for kosmos_2.

- `client.last_inputs` put back in to allow for advanced

support/debugging.

- **Dependencies:**

- Attempts to pull in PIL for image resizing. If not installed, prints

out "please install" message, warns it might fail, and then tries

without resizing. We are waiting on a more permanent solution.
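The optional-dependency behavior described above (try to import, print a hint, continue without resizing) follows a common pattern. A minimal sketch, assuming a hypothetical helper `load_optional`; it is not the connector's actual code:

```python
import importlib
import warnings
from types import ModuleType
from typing import Optional


def load_optional(module_name: str, install_hint: str) -> Optional[ModuleType]:
    """Import a module if it is installed; otherwise warn and return None."""
    try:
        return importlib.import_module(module_name)
    except ImportError:
        warnings.warn(f"{module_name} is not installed; {install_hint}")
        return None


# Pillow is optional: fall back to sending the image unchanged when it is missing.
pil_image = load_optional("PIL.Image", "please install Pillow to enable image resizing")
if pil_image is None:
    print("Sending the image without resizing; the request might fail.")
```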

For LC viz: @hinthornw

For NV viz: @fciannella @milesial @vinaybagade

---------

Co-authored-by: Erick Friis <erick@langchain.dev>


- **Description:** Updating one line code sample for Ollama with new

**langchain_community** package

- **Issue:**

- **Dependencies:** none

- **Twitter handle:** @picsoung

Description: Updated doc for llm/aleph_alpha with new functions: invoke.

Changed structure of the document to match the required one.

Issue: https://github.com/langchain-ai/langchain/issues/15664

Dependencies: None

Twitter handle: None

---------

Co-authored-by: Radhakrishnan Iyer <radhakrishnan.iyer@ibm.com>

Added notification about limited preview status of Guardrails for Amazon

Bedrock feature to code example.

---------

Co-authored-by: Piyush Jain <piyushjain@duck.com>

Description: Added a parameter that makes the language model used by SpacyEmbeddings configurable. The default value is still "en_core_web_sm", so code that did not specify this parameter is unaffected, but the model is no longer hard-coded and is easy to change if you want to use other languages or models.

Issue: At Barcelona Supercomputing Center in Aina project

(https://github.com/projecte-aina), a project for Catalan Language

Models and Resources, we would like to use Langchain for one of our

current projects and we would like to comment that Langchain, while

being a very powerful and useful open-source tool, is pretty much

focused on English language. We would like to contribute to make it a

bit more adaptable for using with other languages.

Dependencies: This change requires the Spacy library and a language

model, specified in the model parameter.

Tag maintainer: @dev2049

Twitter handle: @projecte_aina

---------

Co-authored-by: Marina Pliusnina <marina.pliusnina@bsc.es>

Co-authored-by: Harrison Chase <hw.chase.17@gmail.com>


- **Description:** Add Baichuan LLM to integration/llm, also updated

related docs.

Co-authored-by: BaiChuanHelper <wintergyc@WinterGYCs-MacBook-Pro.local>

- **Description:**

Filtering in a FAISS vectorstore is very inflexible and rules out many use cases. I think supporting a callable like this enables a lot: regular expressions, conditions on multiple keys, etc. **Note:** I had to manually alter a test. I don't understand if it was faulty to begin with or if there is something funky going on.

- **Issue:** None

- **Dependencies:** None

- **Twitter handle:** None
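To illustrate what a callable filter enables, here is a minimal sketch that accepts either a metadata dict or a predicate. `as_predicate` and the sample `docs` are hypothetical and do not reproduce the FAISS vectorstore code.

```python
import re
from typing import Any, Callable, Dict, Union

Metadata = Dict[str, Any]
Filter = Union[Metadata, Callable[[Metadata], bool]]


def as_predicate(flt: Filter) -> Callable[[Metadata], bool]:
    """Normalize a dict or callable filter into a predicate on metadata."""
    if callable(flt):
        return flt
    # Dict filter: every key must be present with an equal value.
    return lambda md: all(md.get(k) == v for k, v in flt.items())


docs = [
    {"source": "notes/a.md", "page": 1},
    {"source": "notes/b.txt", "page": 2},
    {"source": "blog/c.md", "page": 3},
]

# Callable filter: a regular expression combined with a condition on a second key.
md_after_first = as_predicate(
    lambda md: re.search(r"\.md$", md["source"]) is not None and md["page"] > 1
)
by_callable = [d["source"] for d in docs if md_after_first(d)]

# Dict filter: plain equality, as before.
by_dict = [d["source"] for d in docs if as_predicate({"page": 2})(d)]

print(by_callable, by_dict)
```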

Signed-off-by: thiswillbeyourgithub <26625900+thiswillbeyourgithub@users.noreply.github.com>

This PR includes updates for OctoAI integrations:

- The LLM class was updated to fix a bug that occurs with multiple

sequential calls

- The Embedding class was updated to support the new GTE-Large endpoint

released on OctoAI lately

- The documentation jupyter notebook was updated to reflect using the

new LLM sdk

Thank you!

## Summary

This PR implements the "Connery Action Tool" and "Connery Toolkit".

Using them, you can integrate Connery actions into your LangChain agents

and chains.

Connery is an open-source plugin infrastructure for AI.

With Connery, you can easily create a custom plugin with a set of

actions and seamlessly integrate them into your LangChain agents and

chains. Connery will handle the rest: runtime, authorization, secret

management, access management, audit logs, and other vital features.

Additionally, Connery and our community offer a wide range of

ready-to-use open-source plugins for your convenience.

Learn more about Connery:

- GitHub: https://github.com/connery-io/connery-platform

- Documentation: https://docs.connery.io

- Twitter: https://twitter.com/connery_io

## TODOs

- [x] API wrapper

- [x] Integration tests

- [x] Connery Action Tool

- [x] Docs

- [x] Example

- [x] Integration tests

- [x] Connery Toolkit

- [x] Docs

- [x] Example

- [x] Formatting (`make format`)

- [x] Linting (`make lint`)

- [x] Testing (`make test`)

**Description** : This PR updates the documentation for installing

llama-cpp-python on Windows.

- Updates install command to support pyproject.toml

- Makes CPU/GPU install instructions clearer

- Adds reinstall with GPU support command

**Issue**: Existing

[documentation](https://python.langchain.com/docs/integrations/llms/llamacpp#compiling-and-installing)

lists the following commands for installing llama-cpp-python

```
python setup.py clean
python setup.py install
```

The current version of the repo does not include a `setup.py` and uses a

`pyproject.toml` instead.

This can be replaced with

```

python -m pip install -e .

```

As explained in

https://github.com/abetlen/llama-cpp-python/issues/965#issuecomment-1837268339

**Dependencies**: None

**Twitter handle**: None

---------

Co-authored-by: blacksmithop <angstycoder101@gmaii.com>

- **Description:** The current PubMed tool documentation references the path in langchain core rather than the path to the tool in community. The old path still redirects, but this change updates the documentation to reference the new, more direct path.

- **Issue:** doesn't fix an issue

- **Dependencies:** no dependencies

- **Twitter handle:** rooftopzen

- **Description:** Adds Wikidata support to langchain. Can read out

documents from Wikidata.

- **Issue:** N/A

- **Dependencies:** Adds implicit dependencies for

`wikibase-rest-api-client` (for turning items into docs) and

`mediawikiapi` (for hitting the search endpoint)

- **Twitter handle:** @derenrich

You can see an example of this tool used in a chain

[here](https://nbviewer.org/urls/d.erenrich.net/upload/Wikidata_Langchain.ipynb)

or

[here](https://nbviewer.org/urls/d.erenrich.net/upload/Wikidata_Lars_Kai_Hansen.ipynb)


- **Description:** Adding Baichuan Text Embedding Model and Baichuan Inc

introduction.

Baichuan Text Embedding ranks #1 in C-MTEB leaderboard:

https://huggingface.co/spaces/mteb/leaderboard

Co-authored-by: BaiChuanHelper <wintergyc@WinterGYCs-MacBook-Pro.local>

- **Description:** This PR adds [EdenAI](https://edenai.co/) for the

chat model (already available in LLM & Embeddings). It supports all

[ChatModel] functionality: generate, async generate, stream, astream and

batch. A detailed notebook was added.

- **Dependencies**: No dependencies are added as we call a REST API.

---------

Co-authored-by: Eugene Yurtsev <eyurtsev@gmail.com>

community:

- **Description:**

- Add new ChatLiteLLMRouter class that allows a client to use a LiteLLM

Router as a LangChain chat model.

- Note: The existing ChatLiteLLM integration did not cover the LiteLLM

Router class.

- Add tests and Jupyter notebook.

- **Issue:** None

- **Dependencies:** Relies on existing ChatLiteLLM integration

- **Twitter handle:** @bburgin_0

---------

Co-authored-by: Bagatur <baskaryan@gmail.com>

- **Description:** Adding Oracle Cloud Infrastructure Generative AI integration. Oracle Cloud Infrastructure (OCI) Generative AI is a fully managed service that provides a set of state-of-the-art, customizable large language models (LLMs) that cover a wide range of use cases, and which is available through a single API. Using the OCI Generative AI service you can access ready-to-use pretrained models, or create and host your own fine-tuned custom models based on your own data on dedicated AI clusters. https://docs.oracle.com/en-us/iaas/Content/generative-ai/home.htm

- **Issue:** None

- **Dependencies:** OCI Python SDK

Linting and testing: passed.

We provide unit tests. However, we cannot provide integration tests due to Oracle policies that prohibit public sharing of API keys.

---------

Co-authored-by: Arthur Cheng <arthur.cheng@oracle.com>

Co-authored-by: Bagatur <baskaryan@gmail.com>

Added support for optionally supplying 'Guardrails for Amazon Bedrock'

on both types of model invocations (batch/regular and streaming) and for

all models supported by the Amazon Bedrock service.

@baskaryan @hwchase17

```python
from typing import Any

from langchain_community.llms import Bedrock
from langchain_core.callbacks import AsyncCallbackHandler


class BedrockAsyncCallbackHandler(AsyncCallbackHandler):
    """Async callback handler that can be used to handle callbacks from langchain."""

    async def on_llm_error(
        self,
        error: BaseException,
        **kwargs: Any,
    ) -> Any:
        reason = kwargs.get("reason")
        if reason == "GUARDRAIL_INTERVENED":
            # kwargs contains additional trace information sent by 'Guardrails for Bedrock' service.
            print(f"Guardrails: {kwargs}")


# `bedrock` is assumed to be an already-created Bedrock runtime client.
llm = Bedrock(
    model_id="<model_id>",
    client=bedrock,
    model_kwargs={},
    guardrails={"id": "<guardrail_id>", "version": "<guardrail_version>", "trace": True},
    callbacks=[BedrockAsyncCallbackHandler()],
)

# streaming
llm = Bedrock(
    model_id="<model_id>",
    client=bedrock,
    model_kwargs={},
    streaming=True,
    guardrails={"id": "<guardrail_id>", "version": "<guardrail_version>"},
)
```

---------

Co-authored-by: Bagatur <baskaryan@gmail.com>

- **Description:**

This PR adds a VectorStore integration for SAP HANA Cloud Vector Engine,

which is an upcoming feature in the SAP HANA Cloud database

(https://blogs.sap.com/2023/11/02/sap-hana-clouds-vector-engine-announcement/).

- **Issue:** N/A

- **Dependencies:** [SAP HANA Python

Client](https://pypi.org/project/hdbcli/)

- **Twitter handle:** @sapopensource

Implementation of the integration:

`libs/community/langchain_community/vectorstores/hanavector.py`

Unit tests:

`libs/community/tests/unit_tests/vectorstores/test_hanavector.py`

Integration tests:

`libs/community/tests/integration_tests/vectorstores/test_hanavector.py`

Example notebook:

`docs/docs/integrations/vectorstores/hanavector.ipynb`

Access credentials for execution of the integration tests can be

provided to the maintainers.

---------

Co-authored-by: sascha <sascha.stoll@sap.com>

Co-authored-by: Bagatur <baskaryan@gmail.com>

Description:

- checked that the doc chat/google_vertex_ai_palm is using new

functions: invoke, stream etc.

- added Gemini example

- fixed wrong output in Sanskrit example

Issue: https://github.com/langchain-ai/langchain/issues/15664

Dependencies: None

Twitter handle: None

- **Description:** Updated `_get_elements()` function of

`UnstructuredFileLoader` class to check if the argument self.file_path

is a file or list of files. If it is a list of files then it iterates

over the list of file paths, calls the partition function for each one,

and appends the results to the elements list. If self.file_path is not a

list, it calls the partition function as before.

- **Issue:** Fixed #15607,

- **Dependencies:** NA

- **Twitter handle:** NA

Co-authored-by: H161961 <Raunak.Raunak@Honeywell.com>
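
The file-or-list dispatch described in this PR can be sketched as follows. This is a minimal illustration, not the loader's actual code: `get_elements` and `partition_stub` are hypothetical names, and the stub simply splits a text file into lines where the real loader would call a partition function.

```python
from pathlib import Path
from typing import Callable, List, Sequence, Union

PathLike = Union[str, Path]


def partition_stub(filename: str) -> List[str]:
    """Hypothetical stand-in for a real partition function: one element per line."""
    return Path(filename).read_text(encoding="utf-8").splitlines()


def get_elements(
    file_path: Union[PathLike, Sequence[PathLike]],
    partition: Callable[[str], List[str]] = partition_stub,
) -> List[str]:
    """Partition one file, or every file in a list, into a flat list of elements."""
    if isinstance(file_path, (list, tuple)):
        elements: List[str] = []
        for path in file_path:
            # Each file is partitioned separately and its elements are appended.
            elements.extend(partition(str(path)))
        return elements
    # Single path: behave as before.
    return partition(str(file_path))
```

Passing the partition function as a parameter is only a convenience of this sketch; it lets the dispatch be exercised without touching the file system.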

- **Description:** This PR enables LangChain to access iFlyTek's

Spark LLM via the chat_models wrapper.

- **Dependencies:** websocket-client ^1.6.1

- **Tag maintainer:** @baskaryan

### SparkLLM chat model usage

Get SparkLLM's app_id, api_key and api_secret from [iFlyTek SparkLLM API

Console](https://console.xfyun.cn/services/bm3) (for more info, see

[iFlyTek SparkLLM Intro](https://xinghuo.xfyun.cn/sparkapi) ), then set

environment variables `IFLYTEK_SPARK_APP_ID`, `IFLYTEK_SPARK_API_KEY`

and `IFLYTEK_SPARK_API_SECRET` or pass parameters when using it like the

demo below:

```python3
from langchain.chat_models.sparkllm import ChatSparkLLM

client = ChatSparkLLM(
    spark_app_id="<app_id>",
    spark_api_key="<api_key>",
    spark_api_secret="<api_secret>",
)
```

Description:

- Added output and environment variables

- Updated the documentation for chat/anthropic, changing references from

`langchain.schema` to `langchain_core.prompts`.

Issue: https://github.com/langchain-ai/langchain/issues/15664

Dependencies: None

Twitter handle: None

Since this is my first open-source PR, please feel free to point out any

mistakes, and I'll be eager to make corrections.

This PR introduces updates to the Konko integration with LangChain.

1. **New Endpoint Addition**: Integration of a new endpoint to utilize

completion models hosted on Konko.

2. **Chat Model Updates for Backward Compatibility**: We have updated

the chat models to ensure backward compatibility with previous OpenAI

versions.

3. **Updated Documentation**: Comprehensive documentation has been

updated to reflect these new changes, providing clear guidance on

utilizing the new features and ensuring seamless integration.

Thank you to the LangChain team for their exceptional work and for

considering this PR. Please let me know if any additional information is

needed.

---------

Co-authored-by: Shivani Modi <shivanimodi@Shivanis-MacBook-Pro.local>

Co-authored-by: Shivani Modi <shivanimodi@Shivanis-MBP.lan>

- **Description:** Baichuan Chat (with both Baichuan-Turbo and

Baichuan-Turbo-192K models) has updated their APIs. There are breaking

changes. For example, BAICHUAN_SECRET_KEY is removed in the latest API

but is still required in LangChain. Baichuan's LangChain integration

needs to be updated to the latest version.

- **Issue:** #15206

- **Dependencies:** None,

- **Twitter handle:** None

@hwchase17.

Co-authored-by: BaiChuanHelper <wintergyc@WinterGYCs-MacBook-Pro.local>

**Description:**

- Implement `SQLStrStore` and `SQLDocStore` classes that inherit from

`BaseStore` so that data can be persisted remotely on a SQL server.

- SQL is widely used and sometimes we do not want to install a caching

solution like Redis.

- Multiple issues/comments complain that there is no easy remote,

persistent solution that is not in memory (users want to replace

InMemoryStore), e.g.,

https://github.com/langchain-ai/langchain/issues/14267,

https://github.com/langchain-ai/langchain/issues/15633,

https://github.com/langchain-ai/langchain/issues/14643,

https://stackoverflow.com/questions/77385587/persist-parentdocumentretriever-of-langchain

- This is particularly painful when using

`ParentDocumentRetriever`.

- This implementation is particularly useful when:

* it's expensive to construct an InMemoryDocstore/dict

* you want to retrieve documents from remote sources

* you just want to reuse existing objects

- This implementation integrates well with PGVector: when using

PGVector, you already have a SQL instance running. `SQLDocStore` is a

convenient way of using this instance to store documents associated with

vectors. An integration example with ParentDocumentRetriever and

PGVector is provided in docs/docs/integrations/stores/sql.ipynb or

[here](https://github.com/gcheron/langchain/blob/sql-store/docs/docs/integrations/stores/sql.ipynb).

- It persists `str` and `Document` objects but can be easily extended.

**Issue:**

Provide an easy SQL alternative to `InMemoryStore`.
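
To illustrate the idea of a SQL-backed key-value store, here is a minimal sketch using Python's built-in `sqlite3`. It is not the PR's `SQLStrStore`: the class name `SQLiteStrStore`, the `kv` table layout, and the `namespace` parameter are assumptions made for this example. Only the method names (`mget`, `mset`, `mdelete`, `yield_keys`) mirror those of `BaseStore`.

```python
import sqlite3
from typing import Iterator, List, Optional, Sequence, Tuple


class SQLiteStrStore:
    """Hypothetical SQL-backed string store (illustration only)."""

    def __init__(self, path: str = ":memory:", namespace: str = "default") -> None:
        self.conn = sqlite3.connect(path)
        self.namespace = namespace
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS kv ("
            "namespace TEXT NOT NULL, key TEXT NOT NULL, value TEXT NOT NULL, "
            "PRIMARY KEY (namespace, key))"
        )

    def mset(self, key_value_pairs: Sequence[Tuple[str, str]]) -> None:
        # `with self.conn` wraps the statements in a transaction and commits.
        with self.conn:
            self.conn.executemany(
                "INSERT OR REPLACE INTO kv (namespace, key, value) VALUES (?, ?, ?)",
                [(self.namespace, k, v) for k, v in key_value_pairs],
            )

    def mget(self, keys: Sequence[str]) -> List[Optional[str]]:
        # Missing keys are reported as None, in the same order as `keys`.
        result: List[Optional[str]] = []
        for key in keys:
            row = self.conn.execute(
                "SELECT value FROM kv WHERE namespace = ? AND key = ?",
                (self.namespace, key),
            ).fetchone()
            result.append(row[0] if row else None)
        return result

    def mdelete(self, keys: Sequence[str]) -> None:
        with self.conn:
            self.conn.executemany(
                "DELETE FROM kv WHERE namespace = ? AND key = ?",
                [(self.namespace, k) for k in keys],
            )

    def yield_keys(self, prefix: Optional[str] = None) -> Iterator[str]:
        rows = self.conn.execute(
            "SELECT key FROM kv WHERE namespace = ? ORDER BY key", (self.namespace,)
        ).fetchall()
        for (key,) in rows:
            if prefix is None or key.startswith(prefix):
                yield key
```

Pointing `path` at a file instead of `":memory:"` would make the data survive process restarts, which is the property an in-memory store lacks.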

---------

Co-authored-by: Harrison Chase <hw.chase.17@gmail.com>

**Description** : New document loader for Visio files (with extension

.vsdx)

A [Visio file](https://fr.wikipedia.org/wiki/Microsoft_Visio) (with

extension .vsdx) is associated with Microsoft Visio, a diagram creation

software. It stores information about the structure, layout, and

graphical elements of a diagram. This format facilitates the creation

and sharing of visualizations in areas such as business, engineering,

and computer science.

A Visio file can contain multiple pages. Some of them may serve as the

background for others, and this can occur across multiple layers. This

loader extracts the textual content from each page and its associated

pages, enabling the extraction of all visible text from each page,

similar to what an OCR algorithm would do.

**Dependencies** : xmltodict package

- **Description:** Updated the Chat/Ollama docs notebook with LCEL chain

examples

- **Issue:** #15664 I'm a new contributor 😊

- **Dependencies:** No dependencies

- **Twitter handle:**

Comments:

- How do I truncate the stream output in the notebook when it goes on

and on, even for the most basic prompts?

Edit:

Looking forward to feedback @baskaryan

---------

Co-authored-by: Bagatur <baskaryan@gmail.com>

## Problem

Spent several hours trying to figure out how to pass

`RedisChatMessageHistory` as a `GetSessionHistoryCallable` with a

different Redis hostname. The existing example kept connecting to

`redis://localhost:6379`, but I wanted to connect to a server not hosted

locally.

## Cause

The documentation assumes the user knows how to implement `BaseChatMessageHistory` and

`GetSessionHistoryCallable`

## Solution

Update documentation to show how to explicitly set the Redis hostname

using a lambda function much like the MongoDB and SQLite examples.
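
The pattern can be sketched without a Redis server. In this minimal example, `FakeChatMessageHistory` is a hypothetical stand-in that only records its settings; its `url` argument and default value are assumptions chosen to resemble a Redis-style history class. The point is that the session-history callable receives only a `session_id`, so the hostname has to be bound inside the lambda.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class FakeChatMessageHistory:
    """Hypothetical stand-in for a Redis-backed history; records its settings only."""

    session_id: str
    url: str = "redis://localhost:6379/0"  # assumed default, for illustration
    messages: List[str] = field(default_factory=list)


def make_history_factory(url: str) -> Callable[[str], FakeChatMessageHistory]:
    """Return a callable mapping a session_id to a history bound to `url`."""
    return lambda session_id: FakeChatMessageHistory(session_id, url=url)


# The hostname below is a placeholder, not a real server.
get_session_history = make_history_factory("redis://redis.example.internal:6379/0")
history = get_session_history("user-42")
```

A callable built this way can be handed to whatever expects a session-history factory; without the explicit `url`, every session would fall back to the default local address.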

After merging [PR

#16304](https://github.com/langchain-ai/langchain/pull/16304), I

realized that our notebook example for integrating TiDB with LangChain

was too basic. To make it more useful and user-friendly, I plan to

create a detailed example. This will show how to use TiDB for saving

history messages in LangChain, offering a clearer, more practical guide

for our users.

I also added LANGCHAIN_COMET_TRACING to enable the CometLLM tracing

integration, similar to other tracing integrations. This is easier for

end users than importing the callback and passing it

manually.

(This is the same content as

https://github.com/langchain-ai/langchain/pull/14650 but rebased and

squashed, as something seems to confuse GitHub Actions).

- **Description:** add a Milvus multitenancy doc; it is an example for

this [PR](https://github.com/langchain-ai/langchain/pull/15740).

- **Issue:** No,

- **Dependencies:** No,

- **Twitter handle:** No

Signed-off-by: ChengZi <chen.zhang@zilliz.com>

**Description:** Add support for querying TigerGraph databases through

the InquiryAI service.

**Issue**: N/A

**Dependencies:** N/A

**Twitter handle:** @TigerGraphDB

This pull request integrates the TiDB database into LangChain for

storing message history, marking one of several steps towards a

comprehensive integration of TiDB with LangChain.

A simple usage example:

```python
from datetime import datetime

from langchain_community.chat_message_histories import TiDBChatMessageHistory

history = TiDBChatMessageHistory(
    connection_string="mysql+pymysql://<user>:<PASSWORD>@<host>:4000/<db>?ssl_ca=/etc/ssl/cert.pem&ssl_verify_cert=true&ssl_verify_identity=true",
    session_id="code_gen",
    earliest_time=datetime.utcnow(),  # Optional: only load messages after this point in time.
)

history.add_user_message("hi! How's feature going?")
history.add_ai_message("It's almost done")
```

- **Description:** Some code sources have been moved from `langchain` to

`langchain_community`, so the documentation is not yet up to date.

This is specifically true for `StreamlitCallbackHandler`, which shows a

warning message if not loaded from `langchain_community`.

- **Issue:** I don't see a # issue that could address this problem but

perhaps #10744,

- **Dependencies:** Since it's a documentation change no dependencies

are required

- **Description:** update documentation on the Jaguar vector store:

instructions for setting up the Jaguar server and usage of text_tag.

- **Issue:**

- **Dependencies:**

- **Twitter handle:**

---------

Co-authored-by: JY <jyjy@jaguardb>

- **Description:** Updating the documentation of the IBM

[watsonx.ai](https://www.ibm.com/products/watsonx-ai) LLM to use

`invoke` instead of `__call__`

- **Dependencies:**

[ibm-watsonx-ai](https://pypi.org/project/ibm-watsonx-ai/),

- **Tag maintainer:** :

Please make sure your PR is passing linting and testing before

submitting. Run `make format`, `make lint` and `make test` to check this

locally. ✅

The following warning is shown when I use the `run` and `__call__`

methods:

```

LangChainDeprecationWarning: The function `__call__` was deprecated in LangChain 0.1.7 and will be removed in 0.2.0. Use invoke instead.

warn_deprecated(

```

The documentation needs to be updated to use the `invoke` method.

The following warning is displayed when I use

`llm(PROMPT)`:

```python

/Users/169/llama.cpp/venv/lib/python3.11/site-packages/langchain_core/_api/deprecation.py:117: LangChainDeprecationWarning: The function `__call__` was deprecated in LangChain 0.1.7 and will be removed in 0.2.0. Use invoke instead.

warn_deprecated(

```

So I changed to standard usage.
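
The migration these warnings ask for can be shown with a toy class. This is a minimal sketch, not LangChain code: `EchoLLM` is a hypothetical model, and it uses the standard library's `DeprecationWarning` where LangChain emits its own `LangChainDeprecationWarning`.

```python
import warnings


class EchoLLM:
    """Hypothetical toy model used only to illustrate the migration."""

    def invoke(self, prompt: str) -> str:
        # Preferred entry point.
        return f"echo: {prompt}"

    def __call__(self, prompt: str) -> str:
        # Legacy entry point: warn, then delegate to `invoke`.
        warnings.warn(
            "`__call__` is deprecated; use `invoke` instead.",
            DeprecationWarning,
            stacklevel=2,
        )
        return self.invoke(prompt)


llm = EchoLLM()
result = llm.invoke("Hello")  # no warning is raised on this path
```

Both call styles return the same value; only `llm("Hello")` triggers the warning, which is why switching the documentation to `invoke` removes the noise without changing results.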

**Description:**

In this PR, I am adding a `PolygonLastQuote` Tool, which can be used to

get the latest price quote for a given ticker / stock.

Additionally, I've added a Polygon Toolkit, which we can use to

encapsulate future tools that we build for Polygon.

**Twitter handle:** [@virattt](https://twitter.com/virattt)

---------

Co-authored-by: Harrison Chase <hw.chase.17@gmail.com>

- **Description:** In Google Vertex AI, Gemini chat models currently

do not support SystemMessage. This PR adds support for it

only if a user provides the additional convert_system_message_to_human flag

during model initialization (in this case, the SystemMessage is

prepended to the first HumanMessage). **NOTE:** The implementation is

similar to #14824

- **Twitter handle:** rajesh_thallam

---------

Co-authored-by: Erick Friis <erick@langchain.dev>
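
The conversion described above, folding a system message into the first human message, can be sketched on plain tuples. This is an illustration under assumptions: the `(role, content)` representation, the function name, and the blank-line separator are hypothetical and not taken from the PR.

```python
from typing import List, Tuple

Message = Tuple[str, str]  # (role, content); simplified stand-in for message objects


def merge_system_into_first_human(messages: List[Message]) -> List[Message]:
    """Drop a leading system message and prepend its text to the first human message."""
    if not messages or messages[0][0] != "system":
        # Nothing to convert.
        return list(messages)
    system_text = messages[0][1]
    rest = list(messages[1:])
    for i, (role, content) in enumerate(rest):
        if role == "human":
            # The separator is an assumption made for this sketch.
            rest[i] = (role, f"{system_text}\n\n{content}")
            return rest
    raise ValueError("a system message needs a human message to merge into")
```

For example, `[("system", "Be brief."), ("human", "Hi")]` becomes a single human message whose content starts with the system text.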

- **Description**: Updated doc for llm/google_vertex_ai_palm with new

functions: `invoke`, `stream`... Changed structure of the document to

match the required one.

- **Issue**: #15664

- **Dependencies**: None

- **Twitter handle**: None

---------

Co-authored-by: Jorge Zaldívar <jzaldivar@google.com>

**Description:** The Gemini model has quite annoying default safety_settings.

In addition, the current VertexAI class doesn't provide a property

to override these settings.

So, this PR aims to

- add safety_settings property to VertexAI

- fix issue with incorrect LLM output parsing when LLM responds with

appropriate 'blocked' response

- fix issue with incorrect parsing of LLM output when Gemini API blocks

prompt itself as inappropriate

- add safety_settings related tests

I'm not familiar enough with the LangChain code base and guidelines, so any

comments and/or suggestions are very welcome.

**Issue:** it will likely fix #14841

---------

Co-authored-by: Erick Friis <erick@langchain.dev>

**Description**: This PR fixes an error in the documentation for Azure

Cosmos DB Integration.

**Issue**: The correct way to import `AzureCosmosDBVectorSearch` is

```python
from langchain_community.vectorstores.azure_cosmos_db import (
    AzureCosmosDBVectorSearch,
)
```

While the

[documentation](https://python.langchain.com/docs/integrations/vectorstores/azure_cosmos_db)

states it to be

```python
from langchain_community.vectorstores.azure_cosmos_db_vector_search import (
    AzureCosmosDBVectorSearch,
    CosmosDBSimilarityType,
)
```

As you can see in

[azure_cosmos_db.py](c323742f4f/libs/langchain/langchain/vectorstores/azure_cosmos_db.py (L1C45-L2))

**Dependencies:** None

**Twitter handle**: None

- **Description:** Adds MistralAIEmbeddings class for embeddings, using

the new official API.

- **Dependencies:** mistralai

- **Tag maintainer**: @efriis, @hwchase17

- **Twitter handle:** @LMS_David_RS

Create `integrations/text_embedding/mistralai.ipynb`: an example

notebook for MistralAIEmbeddings class

Modify `embeddings/__init__.py`: Import the class

Create `embeddings/mistralai.py`: The embedding class

Create `integration_tests/embeddings/test_mistralai.py`: The test file.

---------

Co-authored-by: Erick Friis <erick@langchain.dev>

**Description:** This new feature enhances the flexibility of pipeline

integration, particularly when working with RESTful APIs.

``JsonRequestsWrapper`` allows the output to be decoded as JSON, rather

than text output being the only option.

---------

Co-authored-by: Zhichao HAN <hanzhichao2000@hotmail.com>
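
The difference between text output and decoded JSON output can be sketched as follows. The classes `TextRequests` and `JsonRequests` are hypothetical stand-ins rather than the PR's wrappers, and the injected `fetch` callable replaces a real HTTP call so the example needs no network access.

```python
import json
from typing import Any, Callable


class TextRequests:
    """Hypothetical wrapper that returns the raw response body as text."""

    def __init__(self, fetch: Callable[[str], str]) -> None:
        self._fetch = fetch  # injected so the sketch does not touch the network

    def get(self, url: str) -> Any:
        return self._fetch(url)


class JsonRequests(TextRequests):
    """Same call, but the body is decoded from JSON before it is returned."""

    def get(self, url: str) -> Any:
        return json.loads(super().get(url))


def fake_fetch(url: str) -> str:
    """Pretend endpoint; the URL and payload are placeholders."""
    return '{"status": "ok", "items": [1, 2]}'


as_text = TextRequests(fake_fetch).get("https://api.example.com/items")
as_json = JsonRequests(fake_fetch).get("https://api.example.com/items")
```

With the JSON variant, downstream steps can index into the result (`as_json["items"]`) without parsing the string again.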

- **Description:** Adds documentation for the

`FirestoreChatMessageHistory` integration and lists integration in

Google's documentation

- **Issue:** NA

- **Dependencies:** No

---------

Co-authored-by: Harrison Chase <hw.chase.17@gmail.com>

- **Description:** add a deprecation warning for ErnieBotChat and

ErnieEmbeddings.

- These two classes **lack maintenance** and do not use the SDK provided

by Qianfan, which makes it hard to implement key features such as

streaming.

- The alternatives `langchain_community.chat_models.QianfanChatEndpoint`

and `langchain_community.embeddings.QianfanEmbeddingsEndpoint` can

completely replace these two classes; only the configuration items need

to change.

- **Issue:** None,

- **Dependencies:** None,

- **Twitter handle:** None

---------

Co-authored-by: Bagatur <baskaryan@gmail.com>

- **Description:** docs update following the changes introduced in

#15879

BigQuery vector search lets you use GoogleSQL to do semantic search,

using vector indexes for fast but approximate results, or using brute

force for exact results.

This PR:

1. Add `metadata[_job_ib]` in Document returned by any similarity search

2. Add `explore_job_stats` to enable users to explore job statistics and

improve debuggability

3. Set the minimum row limit for running create vector index.

- vertex chat

- google

- some pip openai

- percent and openai

- all percent

- more

- pip

- fmt

- docs: google vertex partner docs

- fmt

- docs: more pip installs

- **Description:** Added a `PolygonAPIWrapper` and an initial

`get_last_quote` endpoint, which allows us to get the last price quote

for a given `ticker`. Once merged, I can add a Polygon tool in `tools/`

for agents to use.

- **Twitter handle:** [@virattt](https://twitter.com/virattt)

The Polygon.io Stocks API provides REST endpoints that let you query the

latest market data from all US stock exchanges.

Support [Lantern](https://github.com/lanterndata/lantern) as a new

VectorStore type.

- Added Lantern as VectorStore.

It will support 3 distance functions `l2 squared`, `cosine` and

`hamming` and will use `HNSW` index.

- Added tests

- Added example notebook

**Description:**

Remove the section on how to install Action Server and direct users to

the instructions in the Robocorp repository.

**Reason:**

Robocorp Action Server has moved from a pip installation to a standalone

CLI application and is due for changes. Because of that, only the part

relevant to the LangChain integration is left in the documentation.

**Description:**

Added the aembed_documents() and aembed_query() async functions to the

HuggingFaceHubEmbeddings class in the

`langchain_community/embeddings/huggingface_hub.py` file. They make

async calls to HuggingFaceHub's

embedding endpoint and generate embeddings asynchronously.

Test Cases: Added test_huggingfacehub_embedding_async_documents() and

test_huggingfacehub_embedding_async_query()

functions in test_huggingface_hub.py file to test the two async

functions created in HuggingFaceHubEmbeddings class.

Documentation: Updated huggingfacehub.ipynb with steps to install

huggingface_hub package and use

HuggingFaceHubEmbeddings.

**Dependencies:** None,

**Twitter handle:** I do not have a Twitter account

---------

Co-authored-by: H161961 <Raunak.Raunak@Honeywell.com>
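
The call shape of the two async methods can be sketched with `asyncio` alone. `ToyEmbeddings` is a hypothetical class, its vectors are fake, and issuing one request per text with `asyncio.gather` is a choice made for this illustration; the actual class may well send the whole batch in a single request.

```python
import asyncio
from typing import List


class ToyEmbeddings:
    """Hypothetical embedder; the vectors are fake and only show the call shape."""

    async def _aembed_one(self, text: str) -> List[float]:
        await asyncio.sleep(0)  # stands in for awaiting a remote endpoint
        return [float(len(text)), float(text.count(" ") + 1)]

    async def aembed_documents(self, texts: List[str]) -> List[List[float]]:
        # Run the per-text calls concurrently; gather preserves input order.
        return list(await asyncio.gather(*(self._aembed_one(t) for t in texts)))

    async def aembed_query(self, text: str) -> List[float]:
        # A query is embedded like a single-document batch.
        return (await self.aembed_documents([text]))[0]


embedder = ToyEmbeddings()
doc_vectors = asyncio.run(embedder.aembed_documents(["hello world", "hi"]))
query_vector = asyncio.run(embedder.aembed_query("hi"))
```

Inside an application that already runs an event loop, the methods would be awaited directly instead of being wrapped in `asyncio.run`.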

Major changes:

- Rename `wasm_chat.py` to `llama_edge.py`

- Rename the `WasmChatService` class to `ChatService`

- Implement the `stream` interface for `ChatService`

- Add `test_chat_wasm_service_streaming` in the integration test

- Update `llama_edge.ipynb`

---------

Signed-off-by: Xin Liu <sam@secondstate.io>

Community : Modified doc strings and example notebook for Clarifai

Description:

1. Modified doc strings inside clarifai vectorstore class and

embeddings.

2. Modified notebook examples.

---------

Co-authored-by: Eugene Yurtsev <eyurtsev@gmail.com>

- **Description:**

`QianfanChatEndpoint` extends `BaseChatModel`, whose default stream

implementation may concatenate MessageChunk objects with `__add__`.

Calling stream() then raises a ValueError for a duplicated key.

- **Issues:**

* #13546

* #13548

* merge two separate test files related to qianfan.

- **Dependencies:** no

- **Tag maintainer:**

---------

Co-authored-by: root <liujun45@baidu.com>

Co-authored-by: Harrison Chase <hw.chase.17@gmail.com>

See preview:

https://langchain-git-fork-cbornet-astra-loader-doc-langchain.vercel.app/docs/integrations/document_loaders/astradb

- **Description:** This update rectifies an error in the notebook by

changing the input variable from `zhipu_api_key` to `api_key`. It also

includes revisions to comments to improve program readability.

- **Issue:** The input variable in the notebook example should be

`api_key` instead of `zhipu_api_key`.

- **Dependencies:** No additional dependencies are required for this

change.

To ensure quality and standards, we have performed extensive linting and

testing. Commands such as make format, make lint, and make test have

been run from the root of the modified package to ensure compliance with

LangChain's coding standards.

fix of #14905

---------

Co-authored-by: Harrison Chase <hw.chase.17@gmail.com>

Updates docs and cookbooks to import ChatOpenAI, OpenAI, and OpenAI

Embeddings from `langchain_openai`

There are likely more

---------

Co-authored-by: Harrison Chase <hw.chase.17@gmail.com>

Removed the deprecated model from the text embedding page of the OpenAI notebook

and added the model suggested on the OpenAI page.

<!-- Thank you for contributing to LangChain!

Replace this entire comment with:

- **Description:** a description of the change,

- **Issue:** the issue # it fixes (if applicable),

- **Dependencies:** any dependencies required for this change,

- **Tag maintainer:** for a quicker response, tag the relevant

maintainer (see below),

- **Twitter handle:** we announce bigger features on Twitter. If your PR

gets announced, and you'd like a mention, we'll gladly shout you out!

Please make sure your PR is passing linting and testing before

submitting. Run `make format`, `make lint` and `make test` to check this

locally.

See contribution guidelines for more information on how to write/run

tests, lint, etc:

https://github.com/langchain-ai/langchain/blob/master/.github/CONTRIBUTING.md

If you're adding a new integration, please include:

1. a test for the integration, preferably unit tests that do not rely on

network access,

2. an example notebook showing its use. It lives in `docs/extras`

directory.

If no one reviews your PR within a few days, please @-mention one of

@baskaryan, @eyurtsev, @hwchase17.

-->

Adds `WasmChat` integration. `WasmChat` runs GGUF models locally or via

chat service in lightweight and secure WebAssembly containers. In this

PR, `WasmChatService` is introduced as the first step of the

integration. `WasmChatService` is driven by

[llama-api-server](https://github.com/second-state/llama-utils) and

[WasmEdge Runtime](https://wasmedge.org/).

---------

Signed-off-by: Xin Liu <sam@secondstate.io>

BigQuery vector search lets you use GoogleSQL to do semantic search,

using vector indexes for fast but approximate results, or using brute

force for exact results.

This PR integrates LangChain vectorstore with BigQuery Vector Search.
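For intuition about the "brute force for exact results" mode, here is a minimal sketch in plain Python. It is not BigQuery's implementation and does not use the vectorstore added in this PR; the function names and the sample rows are hypothetical, and it assumes non-zero vectors compared by cosine distance.

```python
import math
from typing import List, Sequence, Tuple


def cosine_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine distance between two non-zero vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def brute_force_search(
    query: Sequence[float],
    rows: List[Tuple[str, Sequence[float]]],
    k: int,
) -> List[Tuple[str, float]]:
    """Score every row against the query and return the k closest.

    Exact but O(n) per query; a vector index trades some accuracy
    for speed by avoiding this full scan.
    """
    scored = [(cosine_distance(query, vec), doc_id) for doc_id, vec in rows]
    scored.sort()
    return [(doc_id, dist) for dist, doc_id in scored[:k]]


# Hypothetical sample data for illustration only.
rows = [("a", [1.0, 0.0]), ("b", [0.0, 1.0]), ("c", [1.0, 1.0])]
top = brute_force_search([1.0, 0.1], rows, k=2)
print([doc_id for doc_id, _ in top])
```
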

---------

Co-authored-by: Vlad Kolesnikov <vladkol@google.com>

- **Description:** Tool now supports querying over 200 million

scientific articles, vastly expanding its reach beyond the 2 million

articles accessible through arXiv. This update significantly broadens

access to the entire scope of scientific literature.

- **Dependencies:** semanticscholar

https://github.com/danielnsilva/semanticscholar

- **Twitter handle:** @shauryr

---------

Co-authored-by: Harrison Chase <hw.chase.17@gmail.com>

…tch]: import models from community

ran

```bash

git grep -l 'from langchain\.chat_models' | xargs -L 1 sed -i '' "s/from\ langchain\.chat_models/from\ langchain_community.chat_models/g"

git grep -l 'from langchain\.llms' | xargs -L 1 sed -i '' "s/from\ langchain\.llms/from\ langchain_community.llms/g"

git grep -l 'from langchain\.embeddings' | xargs -L 1 sed -i '' "s/from\ langchain\.embeddings/from\ langchain_community.embeddings/g"

git checkout master libs/langchain/tests/unit_tests/llms

git checkout master libs/langchain/tests/unit_tests/chat_models

git checkout master libs/langchain/tests/unit_tests/embeddings/test_imports.py

make format

cd libs/langchain; make format

cd ../experimental; make format

cd ../core; make format

```
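The substitutions performed by the `sed` commands above can be sketched in Python as follows. This is an illustrative equivalent, not the script that was actually run; `rewrite_imports` and the sample source are hypothetical names introduced here.

```python
import re

# The same three prefix rewrites as the sed commands:
# legacy langchain module -> langchain_community module.
_REWRITES = [
    (re.compile(r"from langchain\.chat_models"), "from langchain_community.chat_models"),
    (re.compile(r"from langchain\.llms"), "from langchain_community.llms"),
    (re.compile(r"from langchain\.embeddings"), "from langchain_community.embeddings"),
]


def rewrite_imports(source: str) -> str:
    """Return source with the legacy import prefixes replaced."""
    for pattern, replacement in _REWRITES:
        source = pattern.sub(replacement, source)
    return source


# Hypothetical file contents for illustration only.
sample = (
    "from langchain.chat_models import ChatOpenAI\n"
    "from langchain.schema import Document\n"
)
print(rewrite_imports(sample))
```

Only the three listed module prefixes are touched; other `langchain` imports pass through unchanged.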

- **Description:** updates/enhancements to IBM

[watsonx.ai](https://www.ibm.com/products/watsonx-ai) LLM provider

(prompt tuned models and prompt templates deployments support)

- **Dependencies:**

[ibm-watsonx-ai](https://pypi.org/project/ibm-watsonx-ai/),

- **Tag maintainer:** @hwchase17, @eyurtsev, @baskaryan

- **Twitter handle:** details in comment below.

Please make sure your PR is passing linting and testing before

submitting. Run `make format`, `make lint` and `make test` to check this

locally. ✅

---------

Co-authored-by: Harrison Chase <hw.chase.17@gmail.com>

Added headers to the Steam tool notebook for consistency with the

other toolkit notebooks.

- Dependencies: no new dependencies

- Tag maintainer: @hwchase17, @baskaryan

---------

Co-authored-by: Harrison Chase <hw.chase.17@gmail.com>

The `Excel` and `OneNote` pages under `integrations/document_loaders/`

were in the wrong sort order in the navbar because their file names did

not match their page titles.

- renamed `excel` and `onenote` file names
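A small sketch of why mismatched names produce a confusing order, assuming the navbar orders pages by file name while displaying page titles. The file names and titles below are hypothetical stand-ins, not the actual docs contents.

```python
# Hypothetical mapping of file name -> displayed page title.
pages = {
    "excel": "Microsoft Excel",
    "markdown": "Markdown",
    "notion": "Notion DB",
    "onenote": "Microsoft OneNote",
}

# Order a reader sees when pages are sorted by file name.
by_file = [pages[name] for name in sorted(pages)]

# Order a reader expects: alphabetical by displayed title.
by_title = sorted(pages.values())

print(by_file)
print(by_title)
```

Renaming the files so that they sort the same way as their titles makes the two orders agree.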

- **Description:**

- This PR introduces a significant enhancement to the LangChain project

by integrating a new chat model powered by the third-generation base

large model, ChatGLM3, via the zhipuai API.

- This advanced model supports functionalities like function calls, code

interpretation, and intelligent Agent capabilities.

- The additions include the chat model itself, comprehensive

documentation in the form of Python notebook docs, and thorough testing

with both unit and integrated tests.

- **Dependencies:** This update relies on the ZhipuAI package as a key

dependency.

- **Twitter handle:** If this PR receives spotlight attention, we would

be honored to receive a mention for our integration of the advanced

ChatGLM3 model via the ZhipuAI API. Kindly tag us at @kaiwu.

To ensure quality and standards, we have performed extensive linting and

testing. Commands such as make format, make lint, and make test have

been run from the root of the modified package to ensure compliance with

LangChain's coding standards.

TO DO: Continue refining and enhancing both the unit tests and

integrated tests.

---------

Co-authored-by: jing <jingguo92@gmail.com>

Co-authored-by: hyy1987 <779003812@qq.com>

Co-authored-by: jianchuanqi <qijianchuan@hotmail.com>

Co-authored-by: lirq <whuclarence@gmail.com>

Co-authored-by: whucalrence <81530213+whucalrence@users.noreply.github.com>

Co-authored-by: Jing Guo <48378126+JaneCrystall@users.noreply.github.com>

Description: Volcano Ark is an enterprise-grade large-model service

platform for developers, providing a full range of functions and

services such as model training, inference, evaluation, fine-tuning. You

can visit its homepage at https://www.volcengine.com/docs/82379/1099455