- **Description:** add async tests, add tokenize support

- **Dependencies:** [ibm-watsonx-ai](https://pypi.org/project/ibm-watsonx-ai/)

- **Tag maintainer:**

Please make sure your PR is passing linting and testing before submitting. Run `make format`, `make lint` and `make test` to check this locally -> ✅

Please make sure integration tests are passing locally -> ✅

---------

Co-authored-by: Erick Friis <erick@langchain.dev>

This PR makes the following updates to the pgvector vectorstore:

1. Use a JSONB field for metadata instead of JSON.

2. Update the operator syntax to include the required `$` prefix before operators (otherwise operator names collide with field names).

3. The change is non-breaking: the old functionality is still the default, but it emits a deprecation warning.

4. The previous functionality has comparison bugs caused by casting to text (so lexical ordering is incorrectly used for numeric fields).

5. Add a GIN index on the JSONB field for more efficient querying.
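Item 4 is easiest to see with plain strings. The sketch below is pure Python and uses no database. It contrasts text comparison with numeric comparison, then evaluates a `$`-prefixed filter. The `matches` helper and the operator subset (`$eq`, `$gt`, `$lt`, `$in`) are illustrative assumptions. They are not the PGVector implementation, which builds SQL filters over the JSONB column.

```python
from typing import Any, Callable, Dict

# Lexical vs numeric ordering: the root cause of the old comparison bug.
as_text = "10" > "9"  # False: "1" sorts before "9" character by character
as_number = 10 > 9    # True

# Hypothetical evaluator for `$`-prefixed operators; the `$` keeps operator
# names from colliding with metadata field names such as "in" or "gt".
OPERATORS: Dict[str, Callable[[Any, Any], bool]] = {
    "$eq": lambda field, value: field == value,
    "$gt": lambda field, value: field > value,
    "$lt": lambda field, value: field < value,
    "$in": lambda field, value: field in value,
}


def matches(metadata: Dict[str, Any], filter_spec: Dict[str, Dict[str, Any]]) -> bool:
    """Return True if every field condition in filter_spec holds for metadata."""
    for field, condition in filter_spec.items():
        if field not in metadata:
            return False
        for op, value in condition.items():
            if not OPERATORS[op](metadata[field], value):
                return False
    return True


doc = {"price": 10, "category": "fruit"}
numeric_ok = matches(doc, {"price": {"$gt": 9}})
in_ok = matches(doc, {"category": {"$in": ["fruit", "veg"]}})
```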

- **Description:** docs: Add in-code documentation to the core `Runnable` `assign` method

- **Issue:** #18804

Fixed a typo on line 661: from 'mimimize' to 'minimize'.

- **PR message**:

- **Description:** Fixed a typo in the streaming document: changed 'mimimize' to 'minimize'.

If no one reviews your PR within a few days, please @-mention one of

baskaryan, efriis, eyurtsev, hwchase17.

- **Description:** When `_stream_log_implementation` is called from the `astream_log` method of the `Runnable` class, the `kwargs` argument is not passed through. As a result, customizing `APIHandler` to implement additional features that rely on extra arguments is not possible. By contrast, the `astream_events` method does pass the `kwargs` argument through.

- **Issue:** https://github.com/langchain-ai/langchain/issues/19054

- **Dependencies:**

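Here is a stripped-down model of the bug. It uses hypothetical stand-ins, not the real `Runnable` internals, whose signatures differ. The buggy wrapper accepts `**kwargs` but never hands them to the helper. The fixed wrapper forwards them, as `astream_events` already does.

```python
import asyncio
from typing import Any, AsyncIterator, Dict, List


async def _stream_log_implementation(value: Any, **kwargs: Any) -> AsyncIterator[Dict[str, Any]]:
    # Hypothetical helper: echoes back whichever keyword arguments reached it.
    yield {"input": value, "kwargs": kwargs}


async def astream_log_buggy(value: Any, **kwargs: Any) -> AsyncIterator[Dict[str, Any]]:
    # Before the fix: **kwargs is accepted but silently dropped.
    async for item in _stream_log_implementation(value):
        yield item


async def astream_log_fixed(value: Any, **kwargs: Any) -> AsyncIterator[Dict[str, Any]]:
    # After the fix: **kwargs is forwarded to the implementation.
    async for item in _stream_log_implementation(value, **kwargs):
        yield item


async def collect(stream: AsyncIterator[Dict[str, Any]]) -> List[Dict[str, Any]]:
    return [item async for item in stream]


buggy = asyncio.run(collect(astream_log_buggy("hi", user_id="u1")))
fixed = asyncio.run(collect(astream_log_fixed("hi", user_id="u1")))
```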

Co-authored-by: hyungwookyang <hyungwookyang@worksmobile.com>

Thank you for contributing to LangChain!

- [ ] **PR title**: "package: description"

- Where "package" is whichever of langchain, community, core,

experimental, etc. is being modified. Use "docs: ..." for purely docs

changes, "templates: ..." for template changes, "infra: ..." for CI

changes.

- Example: "community: add foobar LLM"

- [ ] **PR message**: ***Delete this entire checklist*** and replace

with

- **Description:** a description of the change

- **Issue:** the issue # it fixes, if applicable

- **Dependencies:** any dependencies required for this change

- **Twitter handle:** if your PR gets announced, and you'd like a

mention, we'll gladly shout you out!

- [ ] **Add tests and docs**: If you're adding a new integration, please

include

1. a test for the integration, preferably unit tests that do not rely on

network access,

2. an example notebook showing its use. It lives in

`docs/docs/integrations` directory.

- [ ] **Lint and test**: Run `make format`, `make lint` and `make test`

from the root of the package(s) you've modified. See contribution

guidelines for more: https://python.langchain.com/docs/contributing/

Additional guidelines:

- Make sure optional dependencies are imported within a function.

- Please do not add dependencies to pyproject.toml files (even optional

ones) unless they are required for unit tests.

- Most PRs should not touch more than one package.

- Changes should be backwards compatible.

- If you are adding something to community, do not re-import it in

langchain.

If no one reviews your PR within a few days, please @-mention one of

baskaryan, efriis, eyurtsev, hwchase17.

- **Description:** This change fixes a bug where attempts to load data from Notion using the NotionDBLoader resulted in a 400 Bad Request error. The issue was traced to the unconditional addition of an empty 'filter' object to the request payload, which Notion's API does not accept. The modification ensures that the 'filter' object is only included in the payload when it is explicitly provided and not empty, which prevents the 400 error.

- **Issue:** Fixes [#18009](https://github.com/langchain-ai/langchain/issues/18009)

- **Dependencies:** None

- **Twitter handle:** @gunnzolder
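Here is a minimal sketch of the fix, using a hypothetical `build_query_payload` helper. `filter`, `page_size` and `start_cursor` are body fields of Notion's database query endpoint. The helper and its defaults are illustrative and are not the loader's code. The key point is the truthiness check, which skips both `None` and an empty dict.

```python
from typing import Any, Dict, Optional


def build_query_payload(
    filter_object: Optional[Dict[str, Any]] = None,
    page_size: int = 100,
    start_cursor: Optional[str] = None,
) -> Dict[str, Any]:
    """Build a Notion database query body, omitting empty optional fields."""
    payload: Dict[str, Any] = {"page_size": page_size}
    if filter_object:  # skips both None and {}, which Notion rejects
        payload["filter"] = filter_object
    if start_cursor:
        payload["start_cursor"] = start_cursor
    return payload


no_filter = build_query_payload()
empty_filter = build_query_payload({})
with_filter = build_query_payload({"property": "Status", "select": {"equals": "Done"}})
```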

Co-authored-by: Anton Parkhomenko <anton@merge.rocks>

**Description:**

Updates to LangChain-MongoDB documentation: updates to the Atlas vector

search index definition

**Issue:**

NA

**Dependencies:**

NA

**Twitter handle:**

iprakul
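For reference, an Atlas Vector Search (`vectorSearch` type) index definition is a `fields` array. It holds one `vector` entry and optional `filter` entries. This sketch builds one as a Python dict. The `embedding` path, the 1536 dimensions and the `page` filter field are assumptions; adapt them to your collection and embedding model.

```python
import json

# Assumed collection layout: vectors stored in "embedding", plus a "page" field to pre-filter on.
index_definition = {
    "fields": [
        {
            "type": "vector",
            "path": "embedding",
            "numDimensions": 1536,  # must equal the embedding model's output size
            "similarity": "cosine",  # "euclidean" and "dotProduct" are the other options
        },
        {"type": "filter", "path": "page"},
    ]
}

rendered = json.dumps(index_definition, indent=2)
print(rendered)
```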

This PR adds a `batch_as_completed` method to the standard Runnable interface. It takes a list of inputs and yields the corresponding outputs as each input completes.
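The idea can be sketched outside LangChain with `concurrent.futures`. This stand-alone function is an illustration, not the Runnable method itself. It pairs each output with the index of its input, so callers can match results back to inputs even though they arrive in completion order.

```python
import time
from concurrent.futures import ThreadPoolExecutor, as_completed
from typing import Callable, Dict, Iterator, List, Tuple, TypeVar

In = TypeVar("In")
Out = TypeVar("Out")


def batch_as_completed(
    func: Callable[[In], Out], inputs: List[In], max_workers: int = 4
) -> Iterator[Tuple[int, Out]]:
    """Yield (input_index, output) pairs in completion order, not input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        future_to_index = {pool.submit(func, item): i for i, item in enumerate(inputs)}
        for future in as_completed(future_to_index):
            yield future_to_index[future], future.result()


def slow_square(n: int) -> int:
    time.sleep(0.01 * n)  # larger inputs finish later
    return n * n


results: Dict[int, int] = dict(batch_as_completed(slow_square, [3, 1, 2]))
```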

Add documentation notebook for `ElasticsearchRetriever`.

## Dependencies

- [ ] Release new `langchain-elasticsearch` version 0.2.0 that includes

`ElasticsearchRetriever`

**Description:** Circular dependencies when parsing references led to `RecursionError: maximum recursion depth exceeded`. This PR addresses the issue by tracking previously seen refs, as in any typical DFS, to avoid infinite recursion depth.

**Issue:** https://github.com/langchain-ai/langchain/issues/12163

**Twitter handle:** https://twitter.com/theBhulawat
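Here is a minimal sketch of the approach, assuming local JSON-pointer refs of the form `#/components/schemas/...`. The `dereference` and `_lookup` helpers are hypothetical and are not the code changed in this PR. The sketch tracks the refs on the current DFS path and leaves a ref unexpanded when it repeats. A self-referencing schema therefore terminates instead of raising `RecursionError`.

```python
from typing import Any, Dict, FrozenSet


def _lookup(ref: str, root: Dict[str, Any]) -> Any:
    """Resolve a local JSON pointer such as "#/components/schemas/Node"."""
    node: Any = root
    for part in ref.split("/")[1:]:  # skip the leading "#"
        node = node[part]
    return node


def dereference(obj: Any, root: Dict[str, Any], seen: FrozenSet[str] = frozenset()) -> Any:
    """Inline $ref targets depth-first, stopping when a ref repeats on the current path."""
    if isinstance(obj, dict):
        ref = obj.get("$ref")
        if isinstance(ref, str):
            if ref in seen:
                # Cycle detected: keep the reference instead of recursing forever.
                return {"$ref": ref}
            return dereference(_lookup(ref, root), root, seen | {ref})
        return {key: dereference(value, root, seen) for key, value in obj.items()}
    if isinstance(obj, list):
        return [dereference(item, root, seen) for item in obj]
    return obj


spec = {
    "components": {
        "schemas": {
            "Node": {
                "type": "object",
                "properties": {"child": {"$ref": "#/components/schemas/Node"}},
            }
        }
    }
}
resolved = dereference({"$ref": "#/components/schemas/Node"}, spec)
```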

- [x] **Add tests and docs**: If you're adding a new integration, please

include

1. a test for the integration, preferably unit tests that do not rely on

network access,

2. an example notebook showing its use. It lives in

`docs/docs/integrations` directory.

- [x] **Lint and test**: Run `make format`, `make lint` and `make test`

from the root of the package(s) you've modified. See contribution

guidelines for more: https://python.langchain.com/docs/contributing/

---------

Co-authored-by: Bagatur <baskaryan@gmail.com>

Co-authored-by: Bagatur <22008038+baskaryan@users.noreply.github.com>

**Description:** Refactor the code of the FAISS vectorstore and update the related documentation.

Details:

- replace `.format()` with f-strings for string formatting;

- refactor the definition of a filtering function to make the code more readable and more flexible;

- slightly improve the efficiency of the `max_marginal_relevance_search_with_score_by_vector` method by removing unnecessary looping over the same elements;

- slightly improve the efficiency of the `delete` method by using a set to check whether an element was already deleted;

**Issue:** fix a small inconsistency in the documentation (the old example was incorrect and not applicable to the FAISS vectorstore)

**Dependencies:** basic langchain-community dependencies and `faiss` (for CPU or for GPU)

**Twitter handle:** antonenkodev
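The filtering refactor can be sketched as a factory that turns either a metadata dict or a predicate into a single predicate. The name `create_filter_func` is an assumption for illustration. So is the rule that a list value means "any of these". Neither is necessarily what the FAISS vectorstore does.

```python
from typing import Any, Callable, Dict, Union

Metadata = Dict[str, Any]
FilterType = Union[Callable[[Metadata], bool], Dict[str, Any]]


def create_filter_func(filter_spec: FilterType) -> Callable[[Metadata], bool]:
    """Turn a metadata dict or a predicate into a single predicate."""
    if callable(filter_spec):
        return filter_spec  # already a predicate; use it as-is

    def filter_func(metadata: Metadata) -> bool:
        # A list value means "any of these"; anything else must match exactly.
        return all(
            metadata.get(key) in value if isinstance(value, list) else metadata.get(key) == value
            for key, value in filter_spec.items()
        )

    return filter_func


by_dict = create_filter_func({"source": "a.txt", "page": [1, 2]})
by_callable = create_filter_func(lambda m: m.get("page", 0) > 3)
```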

Issue: the `_call` method of `LLMRouterChain` uses `predict_and_parse`, which is slated for deprecation.

Description: Instead of using `predict_and_parse`, this change calls the individual `predict` and `parse` functions.

Added deps:

- `@supabase/supabase-js` - for sending inserts

- `supabase` - dev dep, for generating types via cli

- `dotenv` for loading env vars

Added script:

- `yarn gen` - auto-generates the database schema types using the supabase CLI. Not necessary for development, but useful. Requires authenticating with the supabase CLI (it will error out with instructions if you're not authenticated).

Added functionality:

- pulls the user's IP address (using the free endpoint `https://api.ipify.org`) so we can filter out abuse down the line

TODO:

- [x] add env vars to vercel

**Description:** Update the docstring of OpenAI, OpenAIEmbeddings and

ChatOpenAI classes

**Issue:** Update import module paths to the current LangChain API

**Dependencies:** None

**Lint and test**: `make format` and `make lint` were run

This incorporates the review comments from langchain-ai/langchain#18637, which I closed because of a problem I had updating that PR's branch.

---------

Co-authored-by: Erick Friis <erick@langchain.dev>

community: fix - change sparkllm spark_app_url to spark_api_url

- **Description:**

- Rename the `spark_app_url` variable in `sparkllm` to `spark_api_url` in the community package.

---------

Co-authored-by: Bagatur <baskaryan@gmail.com>

**Description:**

The variable name was `openai_poem`, but it didn't pass in the `"prompt": "poem"` config, so the examples showed a joke being returned from a variable called `*_poem`.

We could have gone one of two ways:

1. Updating the config line and the output line, or

2. Updating the variable name

The latter seemed simpler, so that's what I went with. But I'd be glad to redo this PR if you prefer the former.

Thanks for everything, y'all. You rock 🤘

**Issue:** N/A

**Dependencies:** N/A

**Twitter handle:** `conroywhitney`

**Description:** Update AnthropicLLM deprecation message import path for

ChatAnthropic

**Issue:** Incorrect import path in deprecation message

**Dependencies:** None

**Lint and test**: `make format`, `make lint` and `make test` were run

This PR updates the `on_tool_end` handlers to receive the raw output from the tool instead of casting it to a string.

This is technically a breaking change, though its impact is expected to be fairly minimal. It also fixes the behavior of `astream_events`.

Fixes the following issue #18760 raised by @eyurtsev
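Here is a small model of the behaviour change. It uses a hypothetical handler and tool runner, not LangChain's callback classes. Before the change, `on_tool_end` received `str(output)`. After it, `on_tool_end` receives the object the tool returned. Handlers that assumed a string are the ones affected by the breaking change.

```python
from typing import Any, Callable, List


class RecordingHandler:
    """Hypothetical callback handler that records what on_tool_end receives."""

    def __init__(self) -> None:
        self.outputs: List[Any] = []

    def on_tool_end(self, output: Any, **kwargs: Any) -> None:
        self.outputs.append(output)


def run_tool(tool: Callable[[str], Any], tool_input: str, handler: RecordingHandler, cast_to_str: bool) -> Any:
    output = tool(tool_input)
    # Old behaviour: str(output); new behaviour: pass the object through unchanged.
    handler.on_tool_end(str(output) if cast_to_str else output)
    return output


def lookup_user(user_id: str) -> dict:
    return {"id": user_id, "active": True}


old_handler, new_handler = RecordingHandler(), RecordingHandler()
run_tool(lookup_user, "42", old_handler, cast_to_str=True)
run_tool(lookup_user, "42", new_handler, cast_to_str=False)
```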

---------

Co-authored-by: Eugene Yurtsev <eyurtsev@gmail.com>

**Description:** Update callbacks documentation

**Issue:** Change some module imports and a method invocation to reflect the current LangChain API

**Dependencies:** None

`BasePDFLoader` doesn't parse the file suffix correctly when given S3 presigned URLs. This fix enables proper detection and parsing of S3 presigned URLs to prevent errors such as `OSError: [Errno 36] File name too long`.

No additional dependencies required.
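Here is a sketch of suffix detection that ignores the query string. It uses a hypothetical `url_file_suffix` helper (not the loader's code) and a made-up bucket. Presigned URLs carry long signature parameters after `?`. Taking everything after the last `/` therefore keeps that whole query string in the "file name", which can push it past filesystem name limits.

```python
from pathlib import PurePosixPath
from urllib.parse import unquote, urlparse


def url_file_suffix(url: str) -> str:
    """Return the file suffix from a URL's path, ignoring any query string."""
    path = unquote(urlparse(url).path)
    return PurePosixPath(path).suffix


# Hypothetical presigned URL; real ones carry much longer signature parameters.
presigned = (
    "https://my-bucket.s3.amazonaws.com/reports/q1.pdf"
    "?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Expires=3600&X-Amz-Signature=abc123"
)
naive_name = presigned.rsplit("/", 1)[-1]  # keeps the whole query string
suffix = url_file_suffix(presigned)
```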

Created the `facebook` page from the `facebook_faiss` and `facebook_chat` pages, and added other Facebook integrations to this page.

Updated `discord` page.

Deduplicate documents using the MD5 hash of `page_content`. This also allows custom deduplication in the graph ingestion method by providing an `id` attribute in the metadata.
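Here is a plain-Python sketch of the deduplication rule. Documents are modelled as dicts, and the helper names are hypothetical. A caller-supplied `id` in the metadata takes precedence. Otherwise the MD5 hex digest of `page_content` is the key. The first document seen for each key wins.

```python
import hashlib
from typing import Any, Dict, List

Doc = Dict[str, Any]  # {"page_content": str, "metadata": dict}


def document_id(doc: Doc) -> str:
    """Use a caller-supplied metadata "id" if present, else the MD5 of the content."""
    custom_id = doc.get("metadata", {}).get("id")
    if custom_id is not None:
        return str(custom_id)
    return hashlib.md5(doc["page_content"].encode("utf-8")).hexdigest()


def deduplicate(docs: List[Doc]) -> List[Doc]:
    """Keep the first document seen for each id."""
    seen = set()
    unique: List[Doc] = []
    for doc in docs:
        key = document_id(doc)
        if key not in seen:
            seen.add(key)
            unique.append(doc)
    return unique


docs = [
    {"page_content": "Alice knows Bob", "metadata": {}},
    {"page_content": "Alice knows Bob", "metadata": {}},  # exact duplicate
    {"page_content": "Alice knows Bob", "metadata": {"id": "chunk-7"}},  # custom id
    {"page_content": "Bob knows Carol", "metadata": {}},
]
unique_docs = deduplicate(docs)
```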

---------

Co-authored-by: Harrison Chase <hw.chase.17@gmail.com>

- **Description:** Adds an optional parameter, `linearization_config`, to the `AmazonTextractPDFLoader` so the caller can define how the output is linearized, instead of being forced into a predefined set of linearization configs. Because the parameter is optional, a default configuration is still used when it is omitted.

- **Issue:** #17457

- **Dependencies:** The same ones that already exist for

`AmazonTextractPDFLoader`

- **Twitter handle:** [@lvieirajr19](https://twitter.com/lvieirajr19)

---------

Co-authored-by: Bagatur <baskaryan@gmail.com>

**Description**: My previous [PR](https://github.com/langchain-ai/langchain/pull/18521) was mistakenly closed, so I am reopening this one. Context: AWS released two Mistral models on Bedrock last Friday (March 1, 2024). This PR includes some code adjustments to ensure their compatibility with the `Bedrock` class.

---------

Co-authored-by: Anis ZAKARI <anis.zakari@hymaia.com>

Co-authored-by: Erick Friis <erick@langchain.dev>

- **Description:** Update the azuresearch vectorstore `from_texts()` method to include a `fields` argument, which is necessary for creating an Azure AI Search index with custom fields.

- **Issue:** Currently, index fields are fixed to the default fields if the Azure Search index is created using the `from_texts()` method

- **Dependencies:** None

- **Twitter handle:** None

---------

Co-authored-by: Bagatur <baskaryan@gmail.com>

Small improvement to the openapi prompt.

The agent was not finding the server base URL (it looped through all nodes). This small change narrows the search so the URL is found faster.

No dependency

Twitter : @al1pra

# Proper example for AzureOpenAI usage in error message

Part of the usage example given in the original error message was wrong. This corrects it.

Co-authored-by: Dzmitry Kankalovich <dzmitry_kankalovich@epam.com>

Co-authored-by: Bagatur <baskaryan@gmail.com>

This PR is a successor to https://github.com/langchain-ai/langchain/pull/17436.

This PR updates the cookbook README with the notebook so that it is discoverable in the LangChain docs.

cc: @baskaryan, @3coins

---------

Co-authored-by: Bagatur <baskaryan@gmail.com>

**Description:** Fix list display issues in **Docs > Use Cases > Q&A with RAG > Quickstart**.

In essence, this PR changes:

```markdown
Some paragraph.
- Item a.
- Item b.
```

to:

```markdown
Some paragraph.

- Item a.
- Item b.
```

An extra empty line is needed for the list to render properly.

For reference, the old version is displayed incorrectly as:

<img width="856" alt="image" src="https://github.com/langchain-ai/langchain/assets/22856433/65202577-8ea2-47c6-b310-39bf42796fac">

- [x] **Lint and test**: Run `make format`, `make lint` and `make test`

from the root of the package(s) you've modified. See contribution

guidelines for more: https://python.langchain.com/docs/contributing/

Co-authored-by: Bagatur <baskaryan@gmail.com>

- **Description:** `S3DirectoryLoader` fails if the prefix is a folder (e.g. `my_folder/`) because `S3FileLoader` tries to load that folder and fails. This PR skips nested directories, so the prefix can be set to a folder instead of `my_folder/files_prefix`.

- **Issue:**

- #11917

- #6535

- #4326

- **Dependencies:** none

- **Twitter handle:** @Falydoor

- [x] **Add tests and docs**: If you're adding a new integration, please

include

1. a test for the integration, preferably unit tests that do not rely on

network access,

2. an example notebook showing its use. It lives in

`docs/docs/integrations` directory.

- [x] **Lint and test**: Run `make format`, `make lint` and `make test`

from the root of the package(s) you've modified. See contribution

guidelines for more: https://python.langchain.com/docs/contributing/

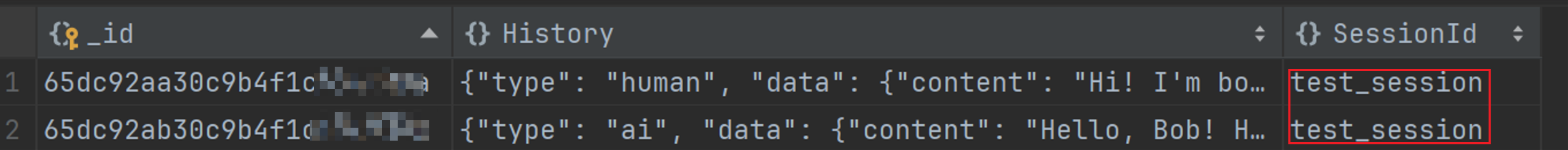

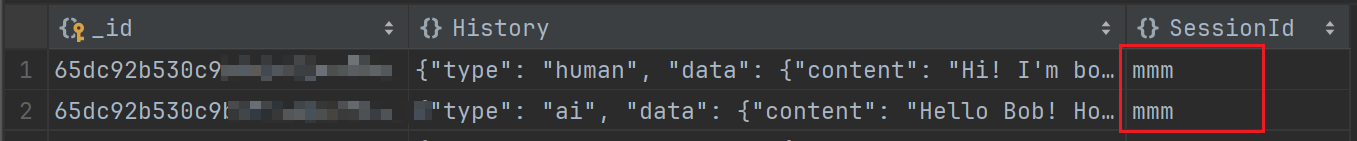

- [ ] Title: Mongodb: MongoDB connection performance improvement.

- [ ] Message:

- **Description:** I made collection index creation optional, since index creation is a one-time process.

- **Issue:** MongoDBChatMessageHistory class object is attempting to

create an index during connection, causing each request to take longer

than usual. This should be optional with a parameter.

- **Dependencies:** N/A

- **Branch to be checked:** origin/mongo_index_creation

---------

Co-authored-by: Bagatur <baskaryan@gmail.com>

- **Description:** Add an embedding instruction to `HuggingFaceBgeEmbeddings`, so that it is compatible with nomic and other models that need an embedding instruction.
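Here is a sketch of the string handling only. The helper names are hypothetical, and the class's actual field name for the new option may differ. Nomic's embedding models are documented as expecting task prefixes: `search_document: ` for documents and `search_query: ` for queries. That is why a document-side instruction is needed alongside the existing query-side one.

```python
from typing import List

# Task prefixes documented for nomic-embed-text models.
DOC_INSTRUCTION = "search_document: "
QUERY_INSTRUCTION = "search_query: "


def prepare_documents(texts: List[str], embed_instruction: str = "") -> List[str]:
    """Prefix each document and flatten newlines before embedding."""
    return [embed_instruction + text.replace("\n", " ") for text in texts]


def prepare_query(text: str, query_instruction: str = "") -> str:
    """Prefix the query and flatten newlines before embedding."""
    return query_instruction + text.replace("\n", " ")


doc_inputs = prepare_documents(["LangChain\nintegrations"], DOC_INSTRUCTION)
query_input = prepare_query("what integrations exist?", QUERY_INSTRUCTION)
```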

---------

Co-authored-by: Tao Wu <tao.wu@rwth-aachen.de>

Co-authored-by: Bagatur <baskaryan@gmail.com>

`_generate()` and `_agenerate()` both accept `**kwargs` and pass them on to `_format_output`, but `_format_output` doesn't accept `**kwargs`. Passing, e.g., `timeout=50` to `_generate` (or `invoke()`) results in a `TypeError`.
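Here is a reproduction with hypothetical functions, since the message does not name the affected class. `generate` forwards its keyword arguments the way `_generate` does. A formatter without `**kwargs` therefore raises `TypeError` on `timeout=50`. Accepting `**kwargs` in the formatter is one straightforward fix, and it is the one assumed here.

```python
from typing import Any, Callable, Dict


def format_output_before(data: Dict[str, Any]) -> Dict[str, Any]:
    # Pre-fix shape: no **kwargs, so any extra keyword raises TypeError.
    return {"text": data["content"]}


def format_output_after(data: Dict[str, Any], **kwargs: Any) -> Dict[str, Any]:
    # Post-fix shape: extra keywords such as timeout are accepted (and ignored here).
    return {"text": data["content"]}


def generate(format_output: Callable[..., Dict[str, Any]], **kwargs: Any) -> Dict[str, Any]:
    data = {"content": "hello"}  # stand-in for the provider response
    return format_output(data, **kwargs)  # forwards kwargs, like _generate does


try:
    generate(format_output_before, timeout=50)
    raised_type_error = False
except TypeError:
    raised_type_error = True

fixed_result = generate(format_output_after, timeout=50)
```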


---------

Co-authored-by: Erick Friis <erick@langchain.dev>

## Add Passio Nutrition AI Food Search Tool to Community Package

### Description

We propose adding a new tool to the `community` package, enabling

integration with Passio Nutrition AI for food search functionality. This

tool will provide a simple interface for retrieving nutrition facts

through the Passio Nutrition AI API, simplifying user access to

nutrition data based on food search queries.

### Implementation Details

- **Class Structure:** Implement `NutritionAI`, extending `BaseTool`. It

includes an `_run` method that accepts a query string and, optionally, a

`CallbackManagerForToolRun`.

- **API Integration:** Use `NutritionAIAPI` for the API wrapper,

encapsulating all interactions with the Passio Nutrition AI and

providing a clean API interface.

- **Error Handling:** Implement comprehensive error handling for API

request failures.

### Expected Outcome

- **User Benefits:** Enable easy querying of nutrition facts from Passio

Nutrition AI, enhancing the utility of the `langchain_community` package

for nutrition-related projects.

- **Functionality:** Provide a straightforward method for integrating

nutrition information retrieval into users' applications.

### Dependencies

- `langchain_core` for base tooling support

- `pydantic` for data validation and settings management

- Consider `requests` or another HTTP client library if not covered by

`NutritionAIAPI`.

### Tests and Documentation

- **Unit Tests:** Include tests that mock network interactions to ensure

tool reliability without external API dependency.

- **Documentation:** Create an example notebook in

`docs/docs/integrations/tools/passio_nutrition_ai.ipynb` showing usage,

setup, and example queries.
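Here is a sketch of the mocked-network unit test described in the Unit Tests item. `NutritionAISketch` is a hypothetical stand-in for the proposed tool. It delegates to an API wrapper that exposes `run(query)` (an assumed interface) and turns API failures into a readable message. That design makes it testable with `unittest.mock.MagicMock` and no network access.

```python
from typing import Any, Optional
from unittest.mock import MagicMock


class NutritionAISketch:
    """Hypothetical stand-in for the proposed NutritionAI tool."""

    def __init__(self, api_wrapper: Any) -> None:
        self.api_wrapper = api_wrapper

    def _run(self, query: str, run_manager: Optional[Any] = None) -> str:
        try:
            result = self.api_wrapper.run(query)
        except Exception as exc:  # surface API failures as a readable message
            return f"NutritionAI API error: {exc}"
        return str(result) if result else "No results found."


# Unit-test style usage: the API wrapper is mocked, so no request is sent.
mock_api = MagicMock()
mock_api.run.return_value = {"name": "apple", "calories": 52}
answer = NutritionAISketch(api_wrapper=mock_api)._run("apple")

failing_api = MagicMock()
failing_api.run.side_effect = RuntimeError("timeout")
error_answer = NutritionAISketch(api_wrapper=failing_api)._run("apple")
```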

### Contribution Guidelines Compliance

- Adhere to the project's linting and formatting standards (`make

format`, `make lint`, `make test`).

- Ensure compliance with LangChain's contribution guidelines,

particularly around dependency management and package modifications.

### Additional Notes

- Aim for the tool to be a lightweight, focused addition, not

introducing significant new dependencies or complexity.

- Potential future enhancements could include caching for common queries

to improve performance.

### Twitter Handle

- Here is our Passio AI [twitter handle](https://twitter.com/@passio_ai)

where we announce our products.


"community: added a feature to filter documents in Mongoloader"

- **Description:** added a feature to filter documents in Mongoloader

- **Feature:** #18251

- **Dependencies:** No

- **Twitter handle:** https://twitter.com/im_Kushagra

For databases with many tables, reflecting every table can take a very long time. With this change, when `lazy_table_reflection` is True, tables are reflected lazily when `get_table_info()` is called.
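Here is a hypothetical sketch of the lazy pattern. The real `SQLDatabase` uses SQLAlchemy reflection. A `reflect` callable that records which tables it touched replaces it here. With lazy reflection, constructing the object over 1,000 tables reflects nothing. `get_table_info()` then reflects, and caches, only the tables it is asked about.

```python
from typing import Callable, Dict, Iterable, List


class LazyTableInfo:
    """Hypothetical sketch: reflect table schemas on first use instead of up front."""

    def __init__(
        self,
        table_names: Iterable[str],
        reflect: Callable[[str], str],
        lazy_table_reflection: bool = True,
    ) -> None:
        self._reflect = reflect
        self._schemas: Dict[str, str] = {}
        if not lazy_table_reflection:
            for name in table_names:  # eager: pay the full cost at construction time
                self._ensure(name)

    def _ensure(self, name: str) -> str:
        if name not in self._schemas:  # reflect once, then reuse the cached schema
            self._schemas[name] = self._reflect(name)
        return self._schemas[name]

    def get_table_info(self, table_names: List[str]) -> str:
        return "\n\n".join(self._ensure(name) for name in table_names)


calls: List[str] = []


def fake_reflect(name: str) -> str:
    calls.append(name)
    return f"CREATE TABLE {name} (...)"


db = LazyTableInfo([f"t{i}" for i in range(1000)], fake_reflect)
info = db.get_table_info(["t1", "t2"])
db.get_table_info(["t1"])  # served from the cache
```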

Allows all chat models that implement `_stream` but not `_astream` to still support async streaming.

Among other things, this should resolve issues with streaming community model implementations through LangServe, since LangServe is exclusively async.
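One way to provide async streaming on top of a sync `_stream` is to pull each chunk from the sync iterator in a thread pool, so the event loop is never blocked. This is a sketch of that technique with hypothetical names, not the exact code in the chat model base class. A sentinel default for `next` avoids raising `StopIteration` across the executor boundary.

```python
import asyncio
from typing import AsyncIterator, Iterator, List, TypeVar

T = TypeVar("T")
_DONE = object()  # sentinel returned by next() once the sync iterator is exhausted


async def aiter_from_sync(iterator: Iterator[T]) -> AsyncIterator[T]:
    """Expose a sync iterator as an async one without blocking the event loop."""
    loop = asyncio.get_running_loop()
    while True:
        # next(iterator, _DONE) runs in the default thread pool executor.
        item = await loop.run_in_executor(None, next, iterator, _DONE)
        if item is _DONE:
            break
        yield item


def sync_stream(prompt: str) -> Iterator[str]:
    """Stand-in for a model's sync _stream: yields one token at a time."""
    for token in prompt.split():
        yield token


async def collect() -> List[str]:
    return [chunk async for chunk in aiter_from_sync(sync_stream("hello async world"))]


chunks = asyncio.run(collect())
```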

**Description:** Replacing the deprecated predict() and apredict()

methods in the unit tests

**Issue:** Not applicable

**Dependencies:** None

**Lint and test**: `make format`, `make lint` and `make test` have been run

**Description:** Minor update to Anthropic documentation

**Issue:** Not applicable

**Dependencies:** None

**Lint and test**: `make format` and `make lint` were run

This patch updates the function "run" to "invoke" in llm_bash.ipynb.

Without this patch, you see the following warning:

LangChainDeprecationWarning: The function `run` was deprecated in LangChain 0.1.0 and will be removed in 0.2.0. Use invoke instead.

Signed-off-by: Masanari Iida <standby24x7@gmail.com>

Fixing a minor typo in the package name.


- [ ] **PR title:** docs: Fix link to HF TEI in

text_embeddings_inference.ipynb

- [ ] **PR message:**

- **Description:** Fix the link to [Hugging Face Text Embeddings Inference (TEI)](https://huggingface.co/docs/text-embeddings-inference/index) in text_embeddings_inference.ipynb

- **Issue:** Fixes #18576

Make `ElasticsearchRetriever` available as top-level import.

The `langchain` package depends on `langchain-community` so we do not

need to depend on it explicitly.

## Description

- Add [Friendli](https://friendli.ai/) integration for `Friendli` LLM

and `ChatFriendli` chat model.

- Unit tests and integration tests corresponding to this change are

added.

- Documentation corresponding to this change is added.

## Dependencies

- The optional [`friendli-client`](https://pypi.org/project/friendli-client/) dependency is needed only by those who use the `Friendli` or `ChatFriendli` model.

## Twitter handle

- https://twitter.com/friendliai

This pull request introduces initial support for the TiDB vector store.

The current version is basic, laying the foundation for the vector store

integration. While this implementation provides the essential features,

we plan to expand and improve the TiDB vector store support with

additional enhancements in future updates.

Upcoming Enhancements:

* Support for Vector Index Creation: To enhance the efficiency and

performance of the vector store.

* Support for max marginal relevance search.

* Customized Table Structure Support: Recognizing the need for

flexibility, we plan for more tailored and efficient data store

solutions.

Simple use case example

```python
import os
from typing import List, Tuple

from langchain_community.vectorstores import TiDBVectorStore
from langchain_core.documents import Document
from langchain_openai import OpenAIEmbeddings

# OpenAIEmbeddings reads OPENAI_API_KEY; the TiDB connection string is read
# from an environment variable here (the variable name is only an example).
embeddings = OpenAIEmbeddings()
tidb_connection_url = os.environ["TIDB_CONNECTION_URL"]

db = TiDBVectorStore.from_texts(
    embedding=embeddings,
    texts=[
        "Andrew likes eating oranges",
        "Alexandra is from England",
        "Ketanji Brown Jackson is a judge",
    ],
    table_name="tidb_vector_langchain",
    connection_string=tidb_connection_url,
    distance_strategy="cosine",
)

query = "Can you tell me about Alexandra?"
docs_with_score: List[Tuple[Document, float]] = db.similarity_search_with_score(query)
for doc, score in docs_with_score:
    print("-" * 80)
    print("Score: ", score)
    print(doc.page_content)
    print("-" * 80)
```

## **Description:**

MongoDB integration tests connect to a provided Atlas cluster. We have very stringent permissions set on that cluster. To make it easier to track and isolate the collections each test runs against, we've updated the collection names to match the test file name, e.g. `langchain_{filename}` => `langchain_test_vectorstores`.

Fixes integration test results

## **Dependencies:**

Provided MONGODB_ATLAS_URI

- [x] **Lint and test**: Run `make format`, `make lint` and `make test`

from the root of the package(s) you've modified. See contribution

guidelines for more: https://python.langchain.com/docs/contributing/

cc: @shaneharvey, @blink1073 , @NoahStapp , @caseyclements

- **Description:** Chroma now uses uuid4 instead of uuid1 for random IDs. uuid1 may leak the host's MAC address; switching to uuid4 has no other effects.

- **Issue:** None

- **Dependencies:** None

- **Twitter handle:** None
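Here is a short illustration with Python's standard `uuid` module. When no `node` is given, `uuid1()` fills its node field from `uuid.getnode()`, which is normally a hardware (MAC) address. `uuid4()` uses only random bits. The `texts` list stands in for the documents being added.

```python
import uuid
from typing import List

texts = ["first document", "second document"]

# uuid1: timestamp + 48-bit node id, taken from uuid.getnode() when no node is given.
legacy_id = uuid.uuid1()

# uuid4: 122 random bits, nothing derived from the host.
ids: List[str] = [str(uuid.uuid4()) for _ in texts]
```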

Fixes #18513.

## Description

This PR attempts to fix support for Anthropic Claude v3 models in the BedrockChat LLM. The changes update the payload to use the `messages` format instead of the formatted text prompt for all models. The `messages` API is backwards compatible with all Anthropic models, so this should not break the experience for any model.

## Notes

In its current form, the PR does not support the v3 models for the non-chat Bedrock LLM. This means that, with these changes, users won't be able to use the v3 models with the Bedrock LLM. I can open a separate PR to tackle this use case. The intent here was to get this out quickly, so users can start using and testing the chat LLM. The Bedrock LLM classes have also grown complex, with many conditions to support various providers and models, and they are ripe for a refactor to make future changes more palatable. That refactor is likely to take longer and requires more thorough testing from the community. Credit to PRs [18579](https://github.com/langchain-ai/langchain/pull/18579) and [18548](https://github.com/langchain-ai/langchain/pull/18548) for some of the code here.
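Here is a sketch of the request body for Anthropic's Messages format on Bedrock. It uses a hypothetical `build_messages_body` helper that takes `(role, text)` pairs, and it is not BedrockChat's conversion code. The body carries `anthropic_version` (`bedrock-2023-05-31`), `max_tokens` and a `messages` list. Any system text goes in the top-level `system` field rather than in `messages`.

```python
import json
from typing import Dict, List, Tuple


def build_messages_body(chat: List[Tuple[str, str]], max_tokens: int = 1024) -> str:
    """Build a Messages-API request body for an Anthropic model on Bedrock."""
    system_text = "\n".join(text for role, text in chat if role == "system")
    messages: List[Dict[str, str]] = [
        {"role": role, "content": text}
        for role, text in chat
        if role in ("user", "assistant")
    ]
    body: Dict[str, object] = {
        "anthropic_version": "bedrock-2023-05-31",
        "max_tokens": max_tokens,
        "messages": messages,
    }
    if system_text:
        body["system"] = system_text  # system text is top-level, not a message
    return json.dumps(body)


body = json.loads(build_messages_body([("system", "Be brief."), ("user", "Hi")]))
```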

---------

Co-authored-by: Erick Friis <erick@langchain.dev>

**Description:**

This integrates Infinispan as a vectorstore.

Infinispan is an open-source key-value data grid; it can run as a single node or distributed.

Vector search is supported since release 15.x.

For more: [Infinispan Home](https://infinispan.org)

Integration tests are provided, as well as a demo notebook.


- [x] **PR title**: "templates: rag-multi-modal typo, replace serch with search"

- **Description:** Two small typos in the multi-modal templates (replace the string `serch` with `search`)

Signed-off-by: José Luis Di Biase <josx@interorganic.com.ar>

```
ValidationError: 2 validation errors for DocArrayDoc
text
  Field required [type=missing, input_value={'embedding': [-0.0191128...9, 0.01005221541175212]}, input_type=dict]
    For further information visit https://errors.pydantic.dev/2.5/v/missing
metadata
  Field required [type=missing, input_value={'embedding': [-0.0191128...9, 0.01005221541175212]}, input_type=dict]
    For further information visit https://errors.pydantic.dev/2.5/v/missing
```

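In the `_get_doc_cls` method, the `DocArrayDoc` class is defined as follows:

```python
from typing import Optional

from docarray import BaseDoc
from docarray.typing import NdArray
from pydantic import Field


# Defined inside `_get_doc_cls`; `embeddings_params` comes from the enclosing method.
class DocArrayDoc(BaseDoc):
    # Under pydantic v2, `Optional[...]` without a default is still a required
    # field, which is why `text` and `metadata` are reported as missing.
    text: Optional[str]
    embedding: Optional[NdArray] = Field(**embeddings_params)
    metadata: Optional[dict]
```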

This PR adds a dangerous-load parameter that forces users to opt in before pickle is used.

It is meant to raise user awareness that the pickle module is involved.

This is a patch for `CVE-2024-2057`:

https://www.cve.org/CVERecord?id=CVE-2024-2057

This affects users that:

* Use the `TFIDFRetriever`

* Attempt to deserialize it from an untrusted source that contains a malicious payload
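Here is a minimal sketch of the opt-in pattern, using a hypothetical `load_pickled` function; the parameter name below is illustrative. The loader refuses to call `pickle.load` unless the caller explicitly acknowledges that the file is trusted. Unpickling attacker-controlled data can execute arbitrary code.

```python
import pickle
import tempfile
from pathlib import Path
from typing import Any


def load_pickled(path: Path, allow_dangerous_deserialization: bool = False) -> Any:
    """Hypothetical loader that refuses to unpickle unless the caller opts in."""
    if not allow_dangerous_deserialization:
        raise ValueError(
            "Loading this file uses pickle, which can execute arbitrary code. "
            "Only pass allow_dangerous_deserialization=True for files you trust."
        )
    with path.open("rb") as fh:
        return pickle.load(fh)


with tempfile.TemporaryDirectory() as tmp:
    path = Path(tmp) / "retriever.pkl"
    path.write_bytes(pickle.dumps({"k": 4}))
    try:
        load_pickled(path)  # default: refuse
        refused = False
    except ValueError:
        refused = True
    loaded = load_pickled(path, allow_dangerous_deserialization=True)
```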

**Description:** Update to the streaming tutorial notebook in the LCEL

documentation

**Issue:** Fixed an import and (minor) changes in documentation language

**Dependencies:** None

- **Description:** Databricks SerDe uses cloudpickle instead of pickle when serializing the user-defined function `transform_input_fn`, since pickle does not support functions defined in `__main__` and cloudpickle does.

- **Dependencies:** cloudpickle>=2.0.0

Added a unit test.

- **Description:** Fixed some typos and copy errors in the Beta

Structured Output docs

- **Issue:** N/A

- **Dependencies:** Docs only

- **Twitter handle:** @psvann

Co-authored-by: P.S. Vann <psvann@yahoo.com>

Description:

This pull request addresses two key improvements to the langchain

repository:

**Fix for Crash in Flight Search Interface**:

Previously, the code would crash when encountering a failure scenario in

the flight ticket search interface. This PR resolves this issue by

implementing a fix to handle such scenarios gracefully. Now, the code

handles failures in the flight search interface without crashing,

ensuring smoother operation.

**Documentation Update for Amadeus Toolkit**:

Prior to this update, examples provided in the documentation for the

Amadeus Toolkit were unable to run correctly due to outdated

information. This PR includes an update to the documentation, ensuring

that all examples can now be executed successfully. With this update,

users can effectively utilize the Amadeus Toolkit with accurate and

functioning examples.

These changes aim to enhance the reliability and usability of the

langchain repository by addressing issues related to error handling and

ensuring that documentation remains up-to-date and actionable.

Issue: https://github.com/langchain-ai/langchain/issues/17375

Twitter Handle: SingletonYxx

### Description

Changed the value specified for `content_key` in JSONLoader from a

single key to a value based on jq schema.

I created a [similar PR](https://github.com/langchain-ai/langchain/pull/11255) before, but it has several conflicts because of the architectural changes associated with the stable version release, so I re-created this PR to fit the new architecture.

### Why

For JSON data like the following, to use `.data[].attributes.message` as `page_content` and `.data[].id` or `.data[].attributes.tags`, etc. as metadata, `content_key` must also be able to parse the JSON structure.

<details>

<summary>sample json data</summary>

```json
{
  "data": [
    {
      "attributes": {
        "message": "message1",
        "tags": [
          "tag1"
        ]
      },
      "id": "1"
    },
    {
      "attributes": {
        "message": "message2",
        "tags": [
          "tag2"
        ]
      },
      "id": "2"
    }
  ]
}
```

</details>

<details>

<summary>sample code</summary>

```python
from langchain_community.document_loaders import JSONLoader


def metadata_func(record: dict, metadata: dict) -> dict:
    metadata["source"] = None
    metadata["id"] = record.get("id")
    metadata["tags"] = record["attributes"].get("tags")
    return metadata


sample_file = "sample1.json"
loader = JSONLoader(
    file_path=sample_file,
    jq_schema=".data[]",
    content_key=".attributes.message",  # content_key is parsable as a jq schema
    is_content_key_jq_parsable=True,  # this is the added parameter
    metadata_func=metadata_func,
)
data = loader.load()
data
```

</details>
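To show what `content_key=".attributes.message"` resolves to, here is a plain-Python sketch over the sample data using a hypothetical `get_path` helper. It only follows dotted keys. The real loader hands the expression to `jq`, which also supports array iteration and filters.

```python
from typing import Any, Dict, List

sample = {
    "data": [
        {"attributes": {"message": "message1", "tags": ["tag1"]}, "id": "1"},
        {"attributes": {"message": "message2", "tags": ["tag2"]}, "id": "2"},
    ]
}


def get_path(record: Dict[str, Any], path: str) -> Any:
    """Follow a dotted path like ".attributes.message" (plain keys only, no jq filters)."""
    value: Any = record
    for key in path.strip(".").split("."):
        value = value[key]
    return value


def load_records(doc: Dict[str, Any], content_path: str) -> List[Dict[str, Any]]:
    """Mimic jq_schema=".data[]" plus a nested content key and metadata_func."""
    return [
        {
            "page_content": get_path(record, content_path),
            "metadata": {"id": record.get("id"), "tags": record["attributes"].get("tags")},
        }
        for record in doc["data"]
    ]


docs = load_records(sample, ".attributes.message")
```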

### Dependencies

none

### Twitter handle

[kzk_maeda](https://twitter.com/kzk_maeda)

Neo4j tools use particular node labels and relationship types to store metadata; these are irrelevant for text2cypher or graph generation, so we want to ignore them in the schema representation.
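A minimal sketch of the filtering step, assuming the schema has already been fetched as plain lists of labels and relationship types. The excluded names below (Bloom-style internal labels) are illustrative assumptions, not necessarily the exact set the patch uses.

```python
from typing import Iterable, List, Tuple

# Hypothetical tool-internal labels and relationship types to hide.
EXCLUDED_LABELS = {"_Bloom_Perspective_", "_Bloom_Scene_"}
EXCLUDED_RELS = {"_Bloom_HAS_SCENE_"}


def filter_schema(
    node_labels: Iterable[str], rel_types: Iterable[str]
) -> Tuple[List[str], List[str]]:
    """Drop tool-internal labels and relationship types from a schema."""
    nodes = [label for label in node_labels if label not in EXCLUDED_LABELS]
    rels = [rel for rel in rel_types if rel not in EXCLUDED_RELS]
    return nodes, rels


nodes, rels = filter_schema(
    ["Person", "Movie", "_Bloom_Perspective_"],
    ["ACTED_IN", "_Bloom_HAS_SCENE_"],
)
print(nodes, rels)  # ['Person', 'Movie'] ['ACTED_IN']
```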

This patch updates the function "run" to "invoke" in smart_llm.ipynb.

Without this patch, you see the following warning:

LangChainDeprecationWarning: The function `run` was deprecated in LangChain 0.1.0 and will be removed in 0.2.0. Use invoke instead.

Signed-off-by: Masanari Iida <standby24x7@gmail.com>

Deprecates the old langchain-hub repository. Does *not* deprecate the

new https://smith.langchain.com/hub

@PinkDraconian has correctly pointed out that, if someone passes unsanitized user input to the `try_load_from_hub` function, it can be used to load files from locations on GitHub other than the hwchase17/langchain-hub repository.

This PR adds some more path checking to that function and deprecates the

functionality in favor of the hub built into LangSmith.
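The kind of path checking described above can be sketched as follows. This is a hypothetical illustration, not the patch itself: `is_safe_hub_path` and the `ALLOWED_PREFIXES` policy are assumptions. The idea is to normalize the requested path and reject anything absolute or anything that escapes the expected folders via `..`.

```python
import posixpath

# Hypothetical policy: only files under these top-level folders of the
# hwchase17/langchain-hub repository may be loaded.
ALLOWED_PREFIXES = ("prompts", "chains", "agents")


def is_safe_hub_path(path: str) -> bool:
    """Return True if `path` stays inside an allowed folder of the hub repo."""
    if not path or path.startswith("/") or "\\" in path:
        return False
    normalized = posixpath.normpath(path)
    # normpath collapses "a/../.." segments, so any attempt to climb out of
    # the repository shows up as a leading "..".
    if normalized == ".." or normalized.startswith("../"):
        return False
    return normalized.split("/", 1)[0] in ALLOWED_PREFIXES


print(is_safe_hub_path("prompts/hello-world/prompt.yaml"))  # True
print(is_safe_hub_path("prompts/../../other-org/repo/file"))  # False
```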

**Description:**

Renamed `user_name` to `username` to match the parameter expected by `TelegramChatApiLoader`.

**Issue:**

The current example code fails in langchain-community 0.0.24:

    loader = TelegramChatApiLoader(
        chat_entity="<CHAT_URL>",  # recommended to use Entity here
        api_hash="<API HASH >",
        api_id="<API_ID>",
        user_name="",  # needed only for caching the session.
    )

## Description

Adds a unit-test variant for the `MongoDBChatMessageHistory` package.

Follow-up to #18590

- [x] **Add tests and docs**: Unit test is what's being added

- [x] **Lint and test**: Run `make format`, `make lint` and `make test`

from the root of the package(s) you've modified. See contribution

guidelines for more: https://python.langchain.com/docs/contributing/

## **Description**

Migrate the `MongoDBChatMessageHistory` to the managed

`langchain-mongodb` partner-package

## **Dependencies**

None

## **Twitter handle**

@mongodb

## **tests and docs**

- [x] Migrate existing integration test

- [x] ~Convert existing integration test to a unit test~ Creation is out of scope for this ticket

- [x] ~Considering delaying work until #17470 merges to leverage the `MockCollection` object.~

- [x] **Lint and test**: Run `make format`, `make lint` and `make test`

from the root of the package(s) you've modified. See contribution

guidelines for more: https://python.langchain.com/docs/contributing/

---------

Co-authored-by: Erick Friis <erick@langchain.dev>

# Description

- **Description:** Adding MongoDB LLM Caching Layer abstraction

- **Issue:** N/A

- **Dependencies:** None

- **Twitter handle:** @mongodb
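For readers unfamiliar with LLM caching layers: a LangChain LLM cache is keyed on the prompt plus a string describing the model configuration (`llm_string`), and exposes `lookup`, `update` and `clear`. The sketch below is an in-memory stand-in; the class name is hypothetical, and it stores plain strings where the real interface stores generation objects. A MongoDB-backed version would persist one document per key instead of a dict entry.

```python
from typing import Dict, List, Optional, Tuple


class InMemoryLLMCache:
    """Exact-match cache keyed on (prompt, llm_string). Hypothetical sketch."""

    def __init__(self) -> None:
        self._store: Dict[Tuple[str, str], List[str]] = {}

    def lookup(self, prompt: str, llm_string: str) -> Optional[List[str]]:
        # A hit requires both the same prompt and the same model configuration.
        return self._store.get((prompt, llm_string))

    def update(self, prompt: str, llm_string: str, generations: List[str]) -> None:
        self._store[(prompt, llm_string)] = generations

    def clear(self) -> None:
        self._store.clear()


cache = InMemoryLLMCache()
cache.update("Tell me a joke", "model=a,temperature=0", ["Why did the..."])
print(cache.lookup("Tell me a joke", "model=a,temperature=0"))  # ['Why did the...']
print(cache.lookup("Tell me a joke", "model=b,temperature=0"))  # None
```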

Checklist:

- [x] PR title: Please title your PR "package: description", where

"package" is whichever of langchain, community, core, experimental, etc.

is being modified. Use "docs: ..." for purely docs changes, "templates:

..." for template changes, "infra: ..." for CI changes.

- Example: "community: add foobar LLM"

- [x] PR Message (above)

- [x] Pass lint and test: Run `make format`, `make lint` and `make test`

from the root of the package(s) you've modified to check that you're

passing lint and testing. See contribution guidelines for more

information on how to write/run tests, lint, etc:

https://python.langchain.com/docs/contributing/

- [ ] Add tests and docs: If you're adding a new integration, please

include

1. a test for the integration, preferably unit tests that do not rely on

network access,

2. an example notebook showing its use. It lives in

`docs/docs/integrations` directory.

Additional guidelines:

- Make sure optional dependencies are imported within a function.

- Please do not add dependencies to pyproject.toml files (even optional

ones) unless they are required for unit tests.

- Most PRs should not touch more than one package.

- Changes should be backwards compatible.

- If you are adding something to community, do not re-import it in

langchain.

If no one reviews your PR within a few days, please @-mention one of

@baskaryan, @efriis, @eyurtsev, @hwchase17.

---------

Co-authored-by: Jib <jib@byblack.us>

Thank you for contributing to LangChain!

- [ ] **PR title**: "community: deprecate vectorstores.MatchingEngine"

- [ ] **PR message**:

- **Description:** announces the deprecation of this integration, since it has been moved to `langchain_google_vertexai`

**Description:** Update docstrings of ChatAnthropic class

**Issue:** Change to ChatAnthropic from ChatAnthropicMessages

**Dependencies:** None

**Lint and test**: `make format`, `make lint` and `make test` passed

- **Description:**

This PR fixes some issues in the Jupyter notebook for the VectorStore

"SAP HANA Cloud Vector Engine":

* Slight textual adaptations

* Fix of wrong column name VEC_META (was: VEC_METADATA)

- **Issue:** N/A

- **Dependencies:** no new dependencies added

- **Twitter handle:** @sapopensource

path to notebook:

`docs/docs/integrations/vectorstores/hanavector.ipynb`

Currently, llm_checker.ipynb uses the function "run". Update it to "invoke" to avoid the following warning:

LangChainDeprecationWarning: The function `run` was deprecated in LangChain 0.1.0 and will be removed in 0.2.0. Use invoke instead.

Signed-off-by: Masanari Iida <standby24x7@gmail.com>

This patch updates the function "run" to "invoke".

Without this patch, you see the following warning:

LangChainDeprecationWarning: The function `run` was deprecated in LangChain 0.1.0 and will be removed in 0.2.0. Use invoke instead.

Signed-off-by: Masanari Iida <standby24x7@gmail.com>

## PR title

Docs: Updated callbacks/index.mdx adding example on runnable methods

## PR message

- **Description:** Updated callbacks/index.mdx adding an example on how

to pass callbacks to the runnable methods (invoke, batch, ...)

- **Issue:** #16379

- **Dependencies:** None

- **Description:** finishes adding the you.com functionality including:

- add async functions to utility and retriever

- add the You.com Tool

- add async testing for utility, retriever, and tool

- add a tool integration notebook page

- **Dependencies:** any dependencies required for this change

- **Twitter handle:** @scottnath

- **Description:** adds a `tools_renderer` for various non-OpenAI agents, so that tools can be rendered in different ways for your LLM.

- **Issue:** N/A

- **Dependencies:** N/A
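The idea behind a tools renderer is that it is simply a function from a list of tools to the string placed in the prompt, so different LLMs can receive different formats. The sketch below is hypothetical: `SimpleTool`, `render_text`, `render_json` and `build_prompt` are illustrative names, not the library's classes.

```python
import json
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class SimpleTool:
    name: str
    description: str


# A renderer turns the tool list into prompt text.
ToolsRenderer = Callable[[List[SimpleTool]], str]


def render_text(tools: List[SimpleTool]) -> str:
    """One "name: description" line per tool."""
    return "\n".join(f"{t.name}: {t.description}" for t in tools)


def render_json(tools: List[SimpleTool]) -> str:
    """A JSON list, for models that follow structured descriptions better."""
    return json.dumps([{"name": t.name, "description": t.description} for t in tools])


def build_prompt(tools: List[SimpleTool], tools_renderer: ToolsRenderer = render_text) -> str:
    return "You can use these tools:\n" + tools_renderer(tools)


tools = [SimpleTool("search", "Look things up"), SimpleTool("calculator", "Do arithmetic")]
print(build_prompt(tools))
print(build_prompt(tools, tools_renderer=render_json))
```

Keeping the renderer a plain callable means callers can swap formats without subclassing the agent.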

---------

Co-authored-by: Harrison Chase <hw.chase.17@gmail.com>

Description:

This pull request introduces several enhancements for Azure Cosmos

Vector DB, primarily focused on improving caching and search

capabilities using Azure Cosmos MongoDB vCore Vector DB. Here's a

summary of the changes:

- **AzureCosmosDBSemanticCache**: Added a new cache implementation

called AzureCosmosDBSemanticCache, which utilizes Azure Cosmos MongoDB

vCore Vector DB for efficient caching of semantic data. Added

comprehensive test cases for AzureCosmosDBSemanticCache to ensure its

correctness and robustness. These tests cover various scenarios and edge

cases to validate the cache's behavior.

- **HNSW Vector Search**: Added HNSW vector search functionality in the

CosmosDB Vector Search module. This enhancement enables more efficient

and accurate vector searches by utilizing the HNSW (Hierarchical

Navigable Small World) algorithm. Added corresponding test cases to

validate the HNSW vector search functionality in both

AzureCosmosDBSemanticCache and AzureCosmosDBVectorSearch. These tests

ensure the correctness and performance of the HNSW search algorithm.

- **LLM Caching Notebook** - The notebook now includes a comprehensive

example showcasing the usage of the AzureCosmosDBSemanticCache. This

example highlights how the cache can be employed to efficiently store

and retrieve semantic data. Additionally, the example provides default

values for all parameters used within the AzureCosmosDBSemanticCache,

ensuring clarity and ease of understanding for users who are new to the

cache implementation.
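Unlike an exact-match cache, a semantic cache returns a stored answer when a new prompt's embedding is *close enough* to a cached one. A minimal sketch of that lookup rule follows, assuming precomputed embeddings and a cosine-similarity threshold. The class name and threshold are hypothetical, and it uses a linear scan where the Azure Cosmos DB implementation relies on a vector index (such as the HNSW search mentioned above).

```python
import math
from typing import List, Optional, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class SemanticCache:
    """Return a cached response if a stored embedding is similar enough."""

    def __init__(self, threshold: float = 0.9) -> None:
        self.threshold = threshold
        self._entries: List[Tuple[Sequence[float], str]] = []

    def update(self, embedding: Sequence[float], response: str) -> None:
        self._entries.append((embedding, response))

    def lookup(self, embedding: Sequence[float]) -> Optional[str]:
        best, best_score = None, -1.0
        for stored, response in self._entries:  # linear scan; an index would replace this
            score = cosine_similarity(embedding, stored)
            if score > best_score:
                best, best_score = response, score
        return best if best_score >= self.threshold else None


cache = SemanticCache(threshold=0.9)
cache.update([1.0, 0.0], "cached answer")
print(cache.lookup([0.99, 0.1]))  # 'cached answer' (similarity is about 0.995)
print(cache.lookup([0.0, 1.0]))   # None (orthogonal, similarity 0)
```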

@hwchase17, @baskaryan, @eyurtsev

**Description:** Update to the pathspec for 'git grep' in lint check in

the Makefile

**Issue:** The pathspec {docs/docs,templates,cookbook} is not handled correctly, leading to the following error during 'make lint':

"fatal: ambiguous argument '{docs/docs,templates,cookbook}': unknown revision or path not in the working tree."

See changes made in https://github.com/langchain-ai/langchain/pull/18058

Co-authored-by: Erick Friis <erick@langchain.dev>

### Description

Fixed a small bug in `add_images()` in chroma.py. Previously, when no metadata was passed, the stored documents contained the base64 encodings of the passed URIs, but when metadata was passed, the documents contained the plain URI strings, which should not be the case.

### Issue

In the `add_images()` method, the `upsert()` call must use `b64_texts` instead of the plain-string `uris`.

### Twitter handle

https://twitter.com/whitepegasus01
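The invariant behind this fix is that the stored documents should be the base64 image encodings regardless of whether metadata is supplied. A minimal sketch of that payload-building logic follows. The `build_upsert_payload` helper and its dict layout are hypothetical and only mirror the shape of a Chroma `upsert` call.

```python
import base64
import uuid
from typing import Any, Dict, List, Optional


def encode_image(uri: str) -> str:
    """Read an image file and return its base64 text."""
    with open(uri, "rb") as f:
        return base64.b64encode(f.read()).decode("utf-8")


def build_upsert_payload(
    uris: List[str], metadatas: Optional[List[Dict[str, Any]]] = None
) -> Dict[str, Any]:
    b64_texts = [encode_image(uri) for uri in uris]
    payload: Dict[str, Any] = {
        "ids": [str(uuid.uuid4()) for _ in uris],
        # Same documents in both branches: the base64 texts, never the raw URIs.
        "documents": b64_texts,
    }
    if metadatas is not None:
        payload["metadatas"] = metadatas
    return payload
```

Computing `b64_texts` once, before branching on `metadatas`, makes it hard for the two code paths to diverge again.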

- [X] Gemini agent executor imported: `agent.py` has a Gemini agent executor, which was not used in the current Gemini function agent template 🧑‍💻; the OpenAI function agent was used instead.

@sbusso @jarib, could someone please review it?

---------

Co-authored-by: Erick Friis <erick@langchain.dev>

* **Description:** adds `LlamafileEmbeddings` class implementation for

generating embeddings using

[llamafile](https://github.com/Mozilla-Ocho/llamafile)-based models.

Includes related unit tests and notebook showing example usage.

* **Issue:** N/A

* **Dependencies:** N/A

- [x] **PR message**: ***Delete this entire checklist*** and replace

with

- **Description:** Remove the assert statement on `count_documents` in `setup_class`; it should just delete the documents if any are present.

- **Issue:** Crashes on class setup

- **Dependencies:** None

- **Twitter handle:** @mongodb

- [x] **Add tests and docs**: If you're adding a new integration, please

include

1. N/A

- [ ] **Lint and test**: Run `make format`, `make lint` and `make test`

from the root of the package(s) you've modified. See contribution

guidelines for more: https://python.langchain.com/docs/contributing/
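The behavioural change in `setup_class` amounts to "clean up instead of assert". A minimal sketch of that pattern follows, using a hypothetical in-memory stand-in for a MongoDB collection. With pymongo, the real collection methods are `count_documents(filter)` and `delete_many(filter)`. The exact form of the original assertion is an assumption.

```python
from typing import Any, Dict, List, Optional


class FakeCollection:
    """Minimal in-memory stand-in for a MongoDB collection (hypothetical)."""

    def __init__(self, docs: Optional[List[Dict[str, Any]]] = None) -> None:
        self.docs = list(docs or [])

    def count_documents(self, flt: Dict[str, Any]) -> int:
        return len(self.docs)  # the filter is ignored: it always matches everything

    def delete_many(self, flt: Dict[str, Any]) -> None:
        self.docs.clear()  # the filter is ignored: it always matches everything


# Leftover documents from a previous run.
COLLECTION = FakeCollection([{"_id": 1}, {"_id": 2}])


class TestVectorSearch:
    @classmethod
    def setup_class(cls) -> None:
        # Before: an assertion like `COLLECTION.count_documents({}) == 0` crashed
        # when leftovers existed. Now we simply remove them.
        if COLLECTION.count_documents({}):
            COLLECTION.delete_many({})
```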

Additional guidelines:

- Make sure optional dependencies are imported within a function.

- Please do not add dependencies to pyproject.toml files (even optional

ones) unless they are required for unit tests.

- Most PRs should not touch more than one package.

- Changes should be backwards compatible.

- If you are adding something to community, do not re-import it in

langchain.

If no one reviews your PR within a few days, please @-mention one of

baskaryan, efriis, eyurtsev, hwchase17.

Co-authored-by: Jib <jib@byblack.us>

The current implementation doesn't have an indexed property that would optimize the import. I have added a `baseEntityLabel` parameter that allows you to add a secondary node label, which has an indexed `id` property. By default, the behaviour is identical to the previous version.

Since multi-labeled nodes are terrible for text2cypher, I removed the secondary label from the schema representation object and string, which is used in text2cypher.
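A minimal sketch of the idea, with hypothetical names: when the option is on, nodes are merged on a shared secondary label (whose `id` property can be indexed), and the node's own type is attached as an extra label. That same secondary label is then dropped before the schema is shown to text2cypher. The label name `__Entity__` and both helpers are illustrative assumptions, not the actual implementation.

```python
from typing import List

BASE_ENTITY_LABEL = "__Entity__"  # hypothetical secondary label name


def merge_node_query(label: str, base_entity_label: bool) -> str:
    """Build a Cypher MERGE statement for one node type."""
    if base_entity_label:
        # MERGE on the shared, indexed label, then attach the node's own type.
        return f"MERGE (n:`{BASE_ENTITY_LABEL}` {{id: $id}}) SET n:`{label}`"
    # Default: previous behaviour, MERGE directly on the node's own label.
    return f"MERGE (n:`{label}` {{id: $id}})"


def schema_labels(labels: List[str]) -> List[str]:
    """Hide the secondary label from the schema used for text2cypher."""
    return [label for label in labels if label != BASE_ENTITY_LABEL]


print(merge_node_query("Person", base_entity_label=True))
# MERGE (n:`__Entity__` {id: $id}) SET n:`Person`
```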

**Description:**

(a) Update to the module import path to reflect the splitting up of

langchain into separate packages

(b) Update to the documentation to include the new calling method

(invoke)

This PR makes `cohere_api_key` in `llms/cohere` a SecretStr, so that the

API Key is not leaked when `Cohere.cohere_api_key` is represented as a

string.
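The effect of `SecretStr` is easy to see in isolation. The model below is a hypothetical stand-in for an LLM wrapper's fields; it uses `pydantic` directly, whereas LangChain at the time may have imported it through its own pydantic compatibility layer. The key is masked whenever the model or field is printed or repr'd, and is only exposed through an explicit `get_secret_value()` call.

```python
from pydantic import BaseModel, SecretStr


class CohereSettings(BaseModel):
    """Hypothetical model mirroring how a wrapper might hold its API key."""

    cohere_api_key: SecretStr


settings = CohereSettings(cohere_api_key="my-secret-key")  # str is coerced to SecretStr
print(settings)                  # the key is shown as '**********'
print(settings.cohere_api_key)   # **********
key = settings.cohere_api_key.get_secret_value()  # explicit, intentional access
```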

---------

Signed-off-by: Arun <arun@arun.blog>

Co-authored-by: Eugene Yurtsev <eyurtsev@gmail.com>

**Description:**

The URL of the data to index, passed to `WebBaseLoader`, is incorrect, causing the `langsmith_search` retriever to return a `404: NOT_FOUND`.

Incorrect URL: https://docs.smith.langchain.com/overview

Correct URL: https://docs.smith.langchain.com

**Issue:**

This commit corrects the URL and prevents the LangServe Playground from

returning an error from its inability to use the retriever when

inquiring, "how can langsmith help with testing?".

**Dependencies:**

None.

**Twitter Handle:**

@ryanmeinzer

**Description:** Fix the `metadata_extractor` type for `RecursiveUrlLoader`: the default `_metadata_extractor` returns a `dict`, not a `str`.

**Issue:** N/A

**Dependencies:** N/A

**Twitter handle:** N/A

Signed-off-by: Hemslo Wang <hemslo.wang@gmail.com>

- **Description:** Changes this line

```python

response = index.query(query, response_mode="no_text", **self.query_kwargs)

```

to

```python

response = index.query(query, **self.query_kwargs)

```

This is needed because LlamaIndex's `query` no longer supports `response_mode`:

`TypeError: BaseQueryEngine.query() got an unexpected keyword argument 'response_mode'`

- **Twitter handle:** @maximeperrin_

---------

Co-authored-by: Maxime Perrin <mperrin@doing.fr>

- [ ] **PR title**: "cookbook: using Gemma on LangChain"

- [ ] **PR message**:

- **Description:** adds a tutorial on how to use Gemma with LangChain (from VertexAI, or locally from Kaggle or HF)

- **Dependencies:** langchain-google-vertexai==0.0.7

- **Twitter handle:** lkuligin

In this commit we update the documentation for Google El Carro for Oracle Workloads. We amend the documentation in the Google Providers page to use the correct name which is El Carro for Oracle Workloads. We also add changes to the document_loaders and memory pages to reflect changes we made in our repo.

If the document loader receives a `pathlib.Path` instead of a `str`, it reads the file correctly, but the problem begins when the document is added to DeepLake.

This problem arises because the path is stored in the metadata as a `Path` object instead of being cast to `str`.

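```python
from pathlib import Path

from langchain.text_splitter import CharacterTextSplitter
from langchain_community.document_loaders import TextLoader
from langchain_community.vectorstores import Chroma, DeepLake

# ds_path, embeddings and activeloop_token are assumed to be defined earlier.
deeplake = True
fname = Path("./lorem_ipsum.txt")  # a Path object, not a str
loader = TextLoader(fname, encoding="utf-8")
docs = loader.load_and_split()
text_splitter = CharacterTextSplitter(chunk_size=1000, chunk_overlap=100)
chunks = text_splitter.split_documents(docs)

if deeplake:
    db = DeepLake(dataset_path=ds_path, embedding=embeddings, token=activeloop_token)
    db.add_documents(chunks)
else:
    db = Chroma.from_documents(docs, embeddings)
```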

So using this snippet of code the error message for deeplake looks like

this:

```
[part of error message omitted]
Traceback (most recent call last):
  File "/home/mwm/repositories/sources/fixing_langchain/main.py", line 53, in <module>
    db.add_documents(chunks)
  File "/home/mwm/repositories/sources/langchain/libs/core/langchain_core/vectorstores.py", line 139, in add_documents
    return self.add_texts(texts, metadatas, **kwargs)
           ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/home/mwm/repositories/sources/langchain/libs/community/langchain_community/vectorstores/deeplake.py", line 258, in add_texts
    return self.vectorstore.add(
           ^^^^^^^^^^^^^^^^^^^^^
  File "/home/mwm/anaconda3/envs/langchain/lib/python3.11/site-packages/deeplake/core/vectorstore/deeplake_vectorstore.py", line 226, in add
    return self.dataset_handler.add(
           ^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/home/mwm/anaconda3/envs/langchain/lib/python3.11/site-packages/deeplake/core/vectorstore/dataset_handlers/client_side_dataset_handler.py", line 139, in add
    dataset_utils.extend_or_ingest_dataset(
  File "/home/mwm/anaconda3/envs/langchain/lib/python3.11/site-packages/deeplake/core/vectorstore/vector_search/dataset/dataset.py", line 544, in extend_or_ingest_dataset
    extend(
  File "/home/mwm/anaconda3/envs/langchain/lib/python3.11/site-packages/deeplake/core/vectorstore/vector_search/dataset/dataset.py", line 505, in extend
    dataset.extend(batched_processed_tensors, progressbar=False)
  File "/home/mwm/anaconda3/envs/langchain/lib/python3.11/site-packages/deeplake/core/dataset/dataset.py", line 3247, in extend
    raise SampleExtendError(str(e)) from e.__cause__
deeplake.util.exceptions.SampleExtendError: Failed to append a sample to the tensor 'metadata'. See more details in the traceback. If you wish to skip the samples that cause errors, please specify `ignore_errors=True`.
```

This does not explain the error well enough.

The same error for Chroma looks like this:

```
During handling of the above exception, another exception occurred:

Traceback (most recent call last):
  File "/home/mwm/repositories/sources/fixing_langchain/main.py", line 56, in <module>
    db = Chroma.from_documents(docs, embeddings)
         ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/home/mwm/repositories/sources/langchain/libs/community/langchain_community/vectorstores/chroma.py", line 778, in from_documents
    return cls.from_texts(
           ^^^^^^^^^^^^^^^
  File "/home/mwm/repositories/sources/langchain/libs/community/langchain_community/vectorstores/chroma.py", line 736, in from_texts
    chroma_collection.add_texts(
  File "/home/mwm/repositories/sources/langchain/libs/community/langchain_community/vectorstores/chroma.py", line 309, in add_texts
    raise ValueError(e.args[0] + "\n\n" + msg)
ValueError: Expected metadata value to be a str, int, float or bool, got lorem_ipsum.txt which is a <class 'pathlib.PosixPath'>

Try filtering complex metadata from the document using langchain_community.vectorstores.utils.filter_complex_metadata.
```

This is much more user-friendly, so I added information about a possible type mismatch to the error message, the same way it is covered in Chroma:

https://github.com/langchain-ai/langchain/blob/master/libs/community/langchain_community/vectorstores/chroma.py#L224
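The kind of message Chroma produces can be reproduced with a small up-front check. The sketch below is a hypothetical, Chroma-style validator (`validate_metadatas` is not a library function). It names the offending key, value and type, and suggests the usual fix for `Path` objects.

```python
from pathlib import Path
from typing import Any, Dict, List

ALLOWED_TYPES = (str, int, float, bool)


def validate_metadatas(metadatas: List[Dict[str, Any]]) -> None:
    """Raise a descriptive ValueError for unsupported metadata value types."""
    for i, metadata in enumerate(metadatas):
        for key, value in metadata.items():
            if not isinstance(value, ALLOWED_TYPES):
                raise ValueError(
                    f"Metadata #{i}: expected value of {key!r} to be a str, int, "
                    f"float or bool, got {value!r} which is a {type(value)}. "
                    "Convert it first, e.g. str(path) for pathlib.Path objects."
                )


validate_metadatas([{"source": str(Path("lorem_ipsum.txt"))}])  # passes
try:
    validate_metadatas([{"source": Path("lorem_ipsum.txt")}])
except ValueError as err:
    print(err)
```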

Thank you for contributing to LangChain!

- [x] **PR title**: "package: description"

- Where "package" is whichever of langchain, community, core,

experimental, etc. is being modified. Use "docs: ..." for purely docs

changes, "templates: ..." for template changes, "infra: ..." for CI

changes.

- Example: "community: add foobar LLM"

- [x] **PR message**: ***Delete this entire checklist*** and replace

with

- **Description:** a description of the change

- **Issue:** the issue # it fixes, if applicable

- **Dependencies:** any dependencies required for this change

- **Twitter handle:** if your PR gets announced, and you'd like a

mention, we'll gladly shout you out!

- [x] **Add tests and docs**: If you're adding a new integration, please

include

1. a test for the integration, preferably unit tests that do not rely on

network access,

2. an example notebook showing its use. It lives in

`docs/docs/integrations` directory.

- [x] **Lint and test**: Run `make format`, `make lint` and `make test`

from the root of the package(s) you've modified. See contribution

guidelines for more: https://python.langchain.com/docs/contributing/

Additional guidelines:

- Make sure optional dependencies are imported within a function.

- Please do not add dependencies to pyproject.toml files (even optional

ones) unless they are required for unit tests.

- Most PRs should not touch more than one package.

- Changes should be backwards compatible.

- If you are adding something to community, do not re-import it in

langchain.

If no one reviews your PR within a few days, please @-mention one of

baskaryan, efriis, eyurtsev, hwchase17.

---------

Co-authored-by: Erick Friis <erick@langchain.dev>

- **Description**:

[`bigdl-llm`](https://github.com/intel-analytics/BigDL) is a library for running LLMs on Intel XPU (from laptop to GPU to cloud) using INT4/FP4/INT8/FP8 with very low latency (for any PyTorch model). This PR adds bigdl-llm integrations to LangChain.

- **Issue**: NA

- **Dependencies**: `bigdl-llm` library

- **Contribution maintainer**: @shane-huang

Examples added:

- docs/docs/integrations/llms/bigdl.ipynb

Nvidia provider page is missing a Triton Inference Server package

reference.

Changes:

- added the Triton Inference Server reference

- copied the example notebook from the package into the doc files.

- added the Triton Inference Server description and links, the link to

the above example notebook

- reformatted the page for consistency with other pages

NOTE:

It seems that the [example notebook](https://github.com/langchain-ai/langchain/blob/master/libs/partners/nvidia-trt/docs/llms.ipynb) was originally created in the wrong place. It should be in the LangChain docs [here](https://github.com/langchain-ai/langchain/tree/master/docs/docs/integrations/llms).

So, I've created a copy of this example. The original example is still in the nvidia-trt package.

Description:

- Changed the GitHub endpoint, as the existing one was not working and returned a 404 Not Found error.

- Also, the existing function failed if `file_filter` was not passed: the tree API returns all paths, including directories, and when `get_file_content` iterated over these paths, it failed for directories because the API returned the list of files inside the directory. So I added a condition to skip a path if it is a directory.

- Fixes this issue -

https://github.com/langchain-ai/langchain/issues/17453
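GitHub's Git Trees API (for example `GET /repos/{owner}/{repo}/git/trees/{tree_sha}?recursive=1`) reports each entry with a `type` of `blob` for files and `tree` for directories. A minimal sketch of skipping directories before fetching content follows. `file_paths_from_tree` is a hypothetical helper, and the actual fix may detect directories differently.

```python
from typing import Any, Callable, Dict, List, Optional


def file_paths_from_tree(
    tree: List[Dict[str, Any]],
    file_filter: Optional[Callable[[str], bool]] = None,
) -> List[str]:
    """Return file paths from a Git tree listing, skipping directories."""
    paths = []
    for entry in tree:
        if entry.get("type") != "blob":  # "tree" entries are directories
            continue
        if file_filter is None or file_filter(entry["path"]):
            paths.append(entry["path"])
    return paths


tree = [
    {"path": "src", "type": "tree"},
    {"path": "src/app.py", "type": "blob"},
    {"path": "README.md", "type": "blob"},
]
print(file_paths_from_tree(tree))  # ['src/app.py', 'README.md']
print(file_paths_from_tree(tree, lambda p: p.endswith(".py")))  # ['src/app.py']
```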

Co-authored-by: Radhika Bansal <Radhika.Bansal@veritas.com>

## Description

Updates the `langchain_community.embeddings.fastembed` provider as per

the recent updates to [`FastEmbed`](https://github.com/qdrant/fastembed)

library.

Thank you for contributing to LangChain!

- [ ] **PR title**: "package: description"

- Where "package" is whichever of langchain, community, core,

experimental, etc. is being modified. Use "docs: ..." for purely docs

changes, "templates: ..." for template changes, "infra: ..." for CI

changes.

- Example: "community: add foobar LLM"

- [ ] **PR message**: ***Delete this entire checklist*** and replace

with

- **Description:** a description of the change

- **Issue:** the issue # it fixes, if applicable

- **Dependencies:** any dependencies required for this change

- **Twitter handle:** if your PR gets announced, and you'd like a

mention, we'll gladly shout you out!

- [ ] **Add tests and docs**: If you're adding a new integration, please

include

1. a test for the integration, preferably unit tests that do not rely on

network access,

2. an example notebook showing its use. It lives in

`docs/docs/integrations` directory.

- [ ] **Lint and test**: Run `make format`, `make lint` and `make test`

from the root of the package(s) you've modified. See contribution

guidelines for more: https://python.langchain.com/docs/contributing/

Additional guidelines:

- Make sure optional dependencies are imported within a function.

- Please do not add dependencies to pyproject.toml files (even optional

ones) unless they are required for unit tests.

- Most PRs should not touch more than one package.

- Changes should be backwards compatible.

- If you are adding something to community, do not re-import it in

langchain.

If no one reviews your PR within a few days, please @-mention one of

baskaryan, efriis, eyurtsev, hwchase17.

This PR migrates the existing MongoDBAtlasVectorSearch abstraction from

the `langchain_community` section to the partners package section of the

codebase.

- [x] Run the partner package script as advised in the partner-packages

documentation.

- [x] Add Unit Tests

- [x] Migrate Integration Tests

- [x] Refactor `MongoDBAtlasVectorStore` (autogenerated) to

`MongoDBAtlasVectorSearch`

- [x] ~Remove~ deprecate the old `langchain_community` VectorStore

references.

## Additional Callouts

- Implemented the `delete` method

- Included any missing async function implementations

- `amax_marginal_relevance_search_by_vector`

- `adelete`

- Added new Unit Tests that test for functionality of

`MongoDBVectorSearch` methods

- Removed [`del res[self._embedding_key]`](e0c81e1cb0/libs/community/langchain_community/vectorstores/mongodb_atlas.py (L218)) in the `_similarity_search_with_score` function, as it would otherwise make the `maximal_marginal_relevance` function fail. The `Document` needs to store the embedding key in its metadata for this to work.

Checklist:

- [x] PR title: Please title your PR "package: description", where

"package" is whichever of langchain, community, core, experimental, etc.

is being modified. Use "docs: ..." for purely docs changes, "templates:

..." for template changes, "infra: ..." for CI changes.

- Example: "community: add foobar LLM"

- [x] PR message

- [x] Pass lint and test: Run `make format`, `make lint` and `make test`

from the root of the package(s) you've modified to check that you're

passing lint and testing. See contribution guidelines for more

information on how to write/run tests, lint, etc:

https://python.langchain.com/docs/contributing/

- [x] Add tests and docs: If you're adding a new integration, please

include

1. Existing tests supplied in docs/docs do not change. Updated

docstrings for new functions like `delete`

2. an example notebook showing its use. It lives in

`docs/docs/integrations` directory. (This already exists)

If no one reviews your PR within a few days, please @-mention one of

baskaryan, efriis, eyurtsev, hwchase17.

---------

Co-authored-by: Steven Silvester <steven.silvester@ieee.org>

Co-authored-by: Erick Friis <erick@langchain.dev>

## PR title

partners: changed the README file for the Fireworks integration in the

libs/partners/fireworks folder

## PR message

Description: Changed the README file of partners/fireworks following the

docs on https://python.langchain.com/docs/integrations/llms/Fireworks

The README includes:

- Brief description

- Installation

- Setting-up instructions (API key, model id, ...)

- Basic usage

Issue: https://github.com/langchain-ai/langchain/issues/17545

Dependencies: None

Twitter handle: None

- **Description:** The current embedchain implementation seems to handle document metadata differently from the current LangChain implementation, and a KeyError is thrown. I would love for someone else to test this...

---------

Co-authored-by: KKUGLER <kai.kugler@mercedes-benz.com>

Co-authored-by: Harrison Chase <hw.chase.17@gmail.com>

Co-authored-by: Deshraj Yadav <deshraj@gatech.edu>

Sometimes, you want to use various parameters in the retrieval query of

Neo4j Vector to personalize/customize results. Before, when there were

only predefined chains, it didn't really make sense. Now that it's all

about custom chains and LCEL, it is worth adding since users can inject

any params they wish at query time. This isn't prone to SQL injection-type attacks, since we use query parameters instead of concatenating strings.

**Description:** Adds the option to pass an optional output parser to customize the parsing logic.

---------

Co-authored-by: hasan <hasan@m2sys.com>

Co-authored-by: Erick Friis <erick@langchain.dev>

This PR adds links to some more free resources for people to get acquainted with LangChain without having to configure their system.

<!-- If no one reviews your PR within a few days, please @-mention one

of baskaryan, efriis, eyurtsev, hwchase17. -->

Co-authored-by: Filip Schouwenaars <filipsch@users.noreply.github.com>

**Description:**

In this PR, I am adding a `PolygonFinancials` tool, which can be used to

get financials data for a given ticker. The financials data is the

fundamental data that is found in income statements, balance sheets, and

cash flow statements of public US companies.

**Twitter**:

[@virattt](https://twitter.com/virattt)

Several URLs were broken by yesterday's PR, for example on the [Integrations/platforms/google/Document Loaders](https://python.langchain.com/docs/integrations/platforms/google#document-loaders) page: the example link to "Document Loaders / Cloud SQL for PostgreSQL" and most of the new example links in the Document Loaders, Vectorstores, and Memory sections.

- fixed URLs (manually verified all example links)

- sorted sections in page to follow the "integrations/components" menu

item order.

- fixed several page titles to fix Navbar item order

---------

Co-authored-by: Erick Friis <erick@langchain.dev>

**Description:** Update to the list of partner packages in the list of

providers

**Issue:** Google & Nvidia had two entries each, both pointing to the

same page

**Dependencies:** None

**Description**

This PR sets the "caller identity" of the Astra DB clients used by the

integration plugins (`AstraDBChatMessageHistory`, `AstraDBStore`,

`AstraDBByteStore` and, pending #17767, `AstraDBVectorStore`). This way, requests to the Astra DB Data API coming from within LangChain are identified as such (the purpose is anonymous usage statistics to help improve the Astra DB service).

- **Description:** A generic document loader adapter for SQLAlchemy on

top of LangChain's `SQLDatabaseLoader`.

- **Needed by:** https://github.com/crate-workbench/langchain/pull/1

- **Depends on:** GH-16655

- **Addressed to:** @baskaryan, @cbornet, @eyurtsev

Hi from CrateDB again,

in the same spirit as GH-16243 and GH-16244, this patch breaks out

another commit from https://github.com/crate-workbench/langchain/pull/1,

in order to reduce the size of this patch before submitting it, and to

separate concerns.

To accompany the SQLAlchemy adapter implementation, the patch includes

integration tests for both SQLite and PostgreSQL. Let me know if

corresponding utility resources should be added at different spots.

With kind regards,

Andreas.

### Software Tests

```console

docker compose --file libs/community/tests/integration_tests/document_loaders/docker-compose/postgresql.yml up

```

```console

cd libs/community

pip install psycopg2-binary

pytest -vvv tests/integration_tests -k sqldatabase

```

```

14 passed

```
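Conceptually, an SQL document loader runs a query and turns each result row into a document, with some columns forming the text and others going into metadata. The stdlib-only sketch below uses `sqlite3` in place of SQLAlchemy. `Doc`, `load_rows`, and the "column: value" text format are hypothetical illustrations, not the loader's actual API or output format.

```python
import sqlite3
from dataclasses import dataclass, field
from typing import Any, Dict, Iterator, List, Optional


@dataclass
class Doc:
    page_content: str
    metadata: Dict[str, Any] = field(default_factory=dict)


def load_rows(
    conn: sqlite3.Connection,
    query: str,
    page_content_columns: Optional[List[str]] = None,
    metadata_columns: Optional[List[str]] = None,
) -> Iterator[Doc]:
    """Yield one Doc per result row."""
    cursor = conn.execute(query)
    columns = [desc[0] for desc in cursor.description]
    content_cols = page_content_columns or columns  # default: all columns
    meta_cols = metadata_columns or []
    for row in cursor:
        record = dict(zip(columns, row))
        content = "\n".join(f"{col}: {record[col]}" for col in content_cols)
        yield Doc(content, {col: record[col] for col in meta_cols})


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE mlb (team TEXT, payroll REAL)")
conn.executemany("INSERT INTO mlb VALUES (?, ?)", [("Reds", 82.20), ("Nationals", 81.34)])
docs = list(load_rows(conn, "SELECT * FROM mlb ORDER BY team", ["team"], ["payroll"]))
print(docs[0])  # Doc(page_content='team: Nationals', metadata={'payroll': 81.34})
```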

---------

Co-authored-by: Andreas Motl <andreas.motl@crate.io>

Some emails from Flipkart and Amazon are encoded in other plain-text formats, so to handle the resulting `UnicodeDecodeError`, I added exception handling with a Latin-1 decoder as a fallback.

Thank you for contributing to LangChain!

@hwchase17

## PR title

langchain_nvidia_ai_endpoints[patch]: Invoke callback prior to yielding

## PR message

**Description:** Invoke the callback prior to yielding each token in the `_stream` and `_astream` methods for nvidia_ai_endpoints.

**Issue:** https://github.com/langchain-ai/langchain/issues/16913

**Dependencies:** None
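A minimal sketch of the ordering this PR enforces, assuming a plain callable stands in for the callback handler (in LangChain the real hook is the run manager's `on_llm_new_token`). `stream_tokens` and `astream_tokens` are hypothetical names, not the package's API. Firing the callback before the `yield` means a handler has already seen a token by the time the consumer receives it, even if the consumer stops iterating immediately afterwards; with the callback placed after the `yield`, that last token would never reach the handler.

```python
import asyncio
from typing import AsyncIterator, Callable, Iterable, Iterator, Optional

# Hypothetical stand-in for a callback handler's "new token" hook.
TokenCallback = Optional[Callable[[str], None]]


def stream_tokens(tokens: Iterable[str], on_new_token: TokenCallback = None) -> Iterator[str]:
    """Yield tokens, notifying the callback *before* each token is handed out."""
    for token in tokens:
        if on_new_token is not None:
            on_new_token(token)  # callback fires first...
        yield token  # ...then the consumer receives the token


async def astream_tokens(
    tokens: Iterable[str], on_new_token: TokenCallback = None
) -> AsyncIterator[str]:
    """Async counterpart with the same callback-then-yield ordering."""
    for token in tokens:
        await asyncio.sleep(0)  # simulate waiting on the network
        if on_new_token is not None:
            on_new_token(token)
        yield token
```

For example, recording events from both sides shows the callback always precedes the consumer: iterating `stream_tokens(["Hel", "lo"], on_new_token=...)` produces `callback:Hel`, `consumer:Hel`, `callback:lo`, `consumer:lo` in that order.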

- **Description:** Add the ability to pass a `ModelInference` or `Model` object to the `WatsonxLLM` class.

- **Dependencies:** [ibm-watsonx-ai](https://pypi.org/project/ibm-watsonx-ai/)

- **Tag maintainer:**

Please make sure your PR is passing linting and testing before submitting. Run `make format`, `make lint` and `make test` to check this locally. ✅

### Description

This PR moves the Elasticsearch classes to a partners package.

Note that we will not move `ElasticKnnSearch`, which was previously deprecated and will be removed later.

`ElasticVectorSearch` is going to stay in the community package since it is still used quite a lot.

Also note that I left the `ElasticsearchTranslator` for self-query untouched because it resides in the main `langchain` package.

### Dependencies

There will be another PR that updates the notebooks (potentially pulling them into the partners package) and templates and removes the classes from the community package, see https://github.com/langchain-ai/langchain/pull/17468

#### Open question

How do we make the transition smooth for users? Do we move the import aliases and require people to install `langchain-elasticsearch`? Or do we remove the import aliases from the `langchain` package altogether? What has worked well for other partner packages?

---------

Co-authored-by: Erick Friis <erick@langchain.dev>

**Description**

Adding different threshold types to the semantic chunker. I’ve had much better and more predictable performance when using standard deviations instead of percentiles.

For all the documents I’ve tried, the distribution of distances looks similar: a positively skewed normal distribution. All the skews I’ve seen are less than 1, which explains why standard deviations perform well, but I’ve included IQR if anyone wants something more robust.

Also, by using the percentile method backwards, you can declare the number of clusters and use semantic chunking to get an ‘optimal’ split.
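A minimal, dependency-free sketch of the three threshold types described above, assuming a breakpoint is placed wherever the distance between consecutive sentence embeddings exceeds the threshold. The function names and the `amount` parameter are hypothetical, and computing the IQR threshold as mean plus `amount` times the IQR is one plausible choice rather than a statement of what the chunker itself does.

```python
import statistics
from typing import List


def percentile(values: List[float], q: float) -> float:
    """Linear-interpolation percentile (q in 0..100), NumPy's default convention."""
    ordered = sorted(values)
    pos = (len(ordered) - 1) * q / 100.0
    lo = int(pos)
    hi = min(lo + 1, len(ordered) - 1)
    return ordered[lo] + (ordered[hi] - ordered[lo]) * (pos - lo)


def breakpoint_threshold(distances: List[float], method: str, amount: float) -> float:
    """Compute the distance above which a new chunk starts."""
    if method == "percentile":
        # e.g. amount=95 -> split at the largest 5% of distances
        return percentile(distances, amount)
    if method == "standard_deviation":
        # mean + amount * sigma; suits roughly normal, mildly skewed distances
        return statistics.mean(distances) + amount * statistics.pstdev(distances)
    if method == "interquartile":
        # IQR is less sensitive to a few extreme distances than sigma
        iqr = percentile(distances, 75) - percentile(distances, 25)
        return statistics.mean(distances) + amount * iqr
    raise ValueError(f"unknown threshold type: {method!r}")


def split_indices(distances: List[float], method: str, amount: float) -> List[int]:
    """Indices of gaps between sentences where the text should be split."""
    threshold = breakpoint_threshold(distances, method, amount)
    return [i for i, d in enumerate(distances) if d > threshold]
```

With distances `[0.1, 0.1, 0.1, 0.1, 0.9]`, all three methods place a single breakpoint at index 4; they diverge on noisier data, where the standard-deviation threshold tracks the spread of the distribution instead of a fixed fraction of gaps.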

---------

Co-authored-by: Harrison Chase <hw.chase.17@gmail.com>

- **Description:** By default it expects a list, but that is not the case in corner scenarios where no documents have been ingested (use case: bootstrapping an application). Hence I added a check: if the instance is a pandas DataFrame instead of a list, the function returns immediately.

- **Issue:** NA

- **Dependencies:** NA

- **Twitter handle:** jaskiratsingh1

---------

Co-authored-by: Harrison Chase <hw.chase.17@gmail.com>

## Description & Issue

While following the official docs to use ClickHouse as a vector store, I found that only the default `annoy` index is properly supported. I wanted to try another engine, `usearch`, because `annoy` is not properly supported on ARM platforms.

Here are the settings I prefer:

```python
from langchain_community.vectorstores import ClickhouseSettings

settings = ClickhouseSettings(
    table="wiki_Ethereum",
    index_type="usearch",  # annoy by default
    index_param=[],
)
```

The above settings do not work because the command `set allow_experimental_annoy_index=1` is hard-coded.

This PR makes the experimental setting follow the `index_type`, which is also consistent with ClickHouse's naming conventions.
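A minimal sketch of the idea, assuming ClickHouse's experimental-index flags follow the `allow_experimental_<type>_index` pattern (as with `annoy` and `usearch`). `experimental_index_setting` is a hypothetical helper, not the code in this PR; it derives the statement from `index_type` instead of hard-coding `annoy`, and rejects values that are not plain identifiers since the result is interpolated into SQL.

```python
def experimental_index_setting(index_type: str) -> str:
    """Build the statement that enables the experimental index for index_type."""
    name = index_type.strip().lower()
    # Only allow simple identifiers such as "annoy" or "usearch" in the SQL text.
    if not name.isidentifier():
        raise ValueError(f"unexpected index_type: {index_type!r}")
    return f"set allow_experimental_{name}_index=1"
```

With the settings above, `experimental_index_setting("usearch")` yields `set allow_experimental_usearch_index=1`, while the default `annoy` keeps producing the previously hard-coded statement.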

**Description:** Update the example Fiddler notebook to import from the `langchain_community` path instead of `langchain.callbacks`.

**Dependencies:** None

**Twitter handle:** @bhalder

Co-authored-by: Barun Halder <barun@fiddler.ai>

h/t @hinthornw


Avoids a deprecation warning that was triggered at import time, e.g. with

`python -c 'import langchain.smith'`

/opt/venv/lib/python3.12/site-packages/langchain/callbacks/__init__.py:37: